This no longer works, because zwave-ping.yaml is not a valid name. When you Check Configuration it shows an error about an “Invalid slug” and suggests a rename to zwave_ping.yaml, which does work.
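As a small illustration of the naming rule, here is a sketch. It assumes the file is a package loaded with !include_dir_named, so that the file name becomes the package name; the folder name packages is also an assumption.

```yaml
# configuration.yaml (sketch; the "packages" folder name is an assumption)
homeassistant:
  packages: !include_dir_named packages

# packages/zwave-ping.yaml  -> rejected with "Invalid slug" (hyphen not allowed)
# packages/zwave_ping.yaml  -> accepted (lowercase letters, digits and underscores)
```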

Hello,

I’m having the same issue. Is there a final, reviewed, and tested solution for that bug?

Thanks!

What or which bug are you referring to?

Some of my Z-Wave devices go into the dead status and I have to ping them manually. I mean a script that monitors for dead devices and, when one is detected, pings it to bring it back online.

thanks!

The problem is the chip used in the Aeotec gen-7 stick.

The manufacturer is Silicon Labs, and even the latest release notes for their development kit (from which Aeotec derives the firmware for the gen-7) mention a “non-resolved issue” with the software, i.e. the software jams from time to time, making the device unresponsive.

This bug has been present for some time now, but was never resolved.

Best solution if you want to keep zwave: use a gen5+ stick, no problems whatsoever.

Gotcha - just wanted to make sure you weren’t referring to something with the automation script. For the root cause, the other reply answered that but don’t worry - when it’s fixed, I expect the cheers from the masses will be heard across the globe. But really, just follow this thread and you’ll be kept in the loop.

Hey, I have that one and two of my Qubino devices still randomly switch to the dead state.

Since it still occurs, I should use that automation script if possible.

Any ideas here? Thanks!

Sorry - what are you asking?

Interesting post!

Apart from the discussion about Z-Wave JS and the 700 or 500 series: I have an Aeotec 500+ Z-Wave stick and it has been running flawlessly for over 5 years with 70 devices (I use only Z-Wave Plus devices). However, there seems to be a difference in quality. Some devices drop out about once a year (like my Heatit thermostat) and I have one that drops out every month (an ECO-DIM dimmer). Maybe that’s because of interference, but I think the ECO-DIM is not quite solid. So, although not often, devices may stop responding or be reported as dead. That seems to be a generic problem with technology: it can fail sometimes.

I use this package to watch for that and automatically try to repair it. The package contains the sensor, a timer and the script (an automation). It is all based on the work that nice people have shown here in this topic. However, I have modified it to run only when necessary. The script is first triggered when the state of the sensor changes from 0 to anything else. After running, and thereby pinging, it starts a timer and ends. Since the sensor remains > 0, it is only triggered again when the timer expires. This way, nothing is running in the meantime, waiting on a delay. It also writes a warning to the system log when nodes are pinged.

system_dead_node_handling:
  # Package based on https://community.home-assistant.io/t/automate-zwavejs-ping-dead-nodes/374307
  # 2024-05 PDW

  template:
    - sensor:
        - name: "Dead ZWave Devices"
          unique_id: dead_zwave_devices
          unit_of_measurement: entities
          state: >
            {% if state_attr('sensor.dead_zwave_devices','entity_id') != none %}
              {{ state_attr('sensor.dead_zwave_devices','entity_id') | count }}
            {% else %}
              {{ 0 }}
            {% endif %}
          attributes:
            entity_id: >
              {{
                expand(integration_entities('Z-Wave JS') )
                | selectattr("entity_id", "search", "node_status")
                | selectattr('state', 'in', 'dead, unavailable, unknown')
                | map(attribute="object_id")
                | map('regex_replace', find='(.*)_node_status', replace='button.\\1_ping', ignorecase=False)
                | list
              }}

  timer:
    dead_node_ping:
      icon: mdi:robot-dead-outline

  automation:
    - id: '029098239872316'
      alias: Ping Dead ZWave Devices
      mode: single
      max_exceeded: silent
      trigger:
        # Initial trigger when sensor becomes not 0
        - platform: state
          entity_id: sensor.dead_zwave_devices
          from: '0'
        # Timer triggered as long as the problem exists
        - platform: event
          event_type: timer.finished
          event_data:
            entity_id: timer.dead_node_ping
      action:
        # Stop when the problem has been solved
        - condition: template
          value_template: '{{ states("sensor.dead_zwave_devices") |int(0) > 0 }}'
        - repeat:
            count: '{{ states("sensor.dead_zwave_devices") |int(0) }}'
            sequence:
              - service: button.press
                target:
                  entity_id: '{{ state_attr("sensor.dead_zwave_devices","entity_id") }}'
        # Add notice to system log (optional)
        - service: system_log.write
          data:
            message: "ZWave Dead node detected and pinged: {{ state_attr('sensor.dead_zwave_devices','entity_id') }}"
            level: 'warning'
        # Keep trying, but use a timer and avoid this script eating your cpu
        - service: timer.start
          target:
            entity_id: timer.dead_node_ping
          data:
            # set timer for one minute (change as you like)
            duration: "00:01:00"

Let me know what you think of it…

Hi, thanks.

Could you provide more details on where to incorporate it?

Just save the file as .yaml in the packages folder and make sure your configuration.yaml contains this under the homeassistant: key:

packages: !include_dir_merge_named packages

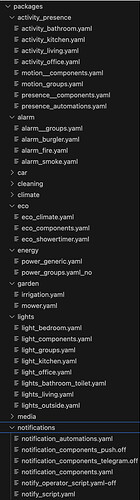

I have all my automations and accompanying helpers, templates, groups etc in packages, because they are related (see image below)
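To make the placement concrete, here is a rough sketch of how the two files could fit together. The file name dead_node_handling.yaml is only an example and not from the post above; with !include_dir_merge_named, the package name comes from the top-level key inside the file, not from the file name.

```yaml
# configuration.yaml
homeassistant:
  packages: !include_dir_merge_named packages

# packages/dead_node_handling.yaml (hypothetical file name)
# The top-level key in that file is the package name, for example:
#
# system_dead_node_handling:
#   template: ...
#   timer: ...
#   automation: ...
```

After saving, running Check Configuration before restarting should show whether the package is picked up.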

This does not seem to work. I put the flow into Node-RED without errors, but today I have a dead node and the flow does not do anything. Any tips?

Update: It seems _node_status is not visible in Node-RED, so the flow will never run. I have my zwave-js-ui running in Docker and connected to HA via MQTT. Node status does not seem to be visible in Node-RED because of this other way of connecting my devices to HA. Does anyone have a solution for that?

Update 2: Solved. I changed from the MQTT to the WS server connection. Now node_status is communicated properly and the scripts are working fine.

What if you have a truly dead node? This script will never end.

You’re right; if a node is truly dead, this will not fix it. But then it is reported like this:

So I know it is truly dead and I can take action…

The YAML for this card is:

- type: custom:fold-entity-row
  head:
    entity: sensor.placeholder
    name: Dode nodes
    icon: mdi:cube-off
  group_config:
    state_color: true
  entities:
    - type: custom:auto-entities
      card:
        type: entities
        state_color: true
      filter:
        include:
          - state: dead
        exclude:
          - device: integration
      sort:
        method: state

note: sensor.placeholder is an empty helper sensor (does nothing) that I use everywhere when I need an entity, but have no relevant entity available.
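On the point that the script never ends for a truly dead node: one possible way to stop it is to cap the number of attempts with a counter helper. This is only a sketch, not part of the package above. The counter name, the automation ids and the limit of 5 are assumptions, and the ping automation here is a trimmed variant that would replace the one in the package.

```yaml
# Sketch only: counter name, ids and the limit of 5 are assumptions.
counter:
  dead_node_ping_attempts:
    initial: 0

automation:
  - id: ping_dead_zwave_devices_capped   # hypothetical id
    alias: Ping Dead ZWave Devices (capped)
    mode: single
    max_exceeded: silent
    trigger:
      - platform: state
        entity_id: sensor.dead_zwave_devices
        from: '0'
      - platform: event
        event_type: timer.finished
        event_data:
          entity_id: timer.dead_node_ping
    action:
      # Stop when the problem has been solved
      - condition: template
        value_template: '{{ states("sensor.dead_zwave_devices") | int(0) > 0 }}'
      # Give up after 5 attempts so a truly dead node does not cause endless pings
      - condition: template
        value_template: '{{ states("counter.dead_node_ping_attempts") | int(0) < 5 }}'
      - service: counter.increment
        target:
          entity_id: counter.dead_node_ping_attempts
      - service: button.press
        target:
          entity_id: '{{ state_attr("sensor.dead_zwave_devices", "entity_id") }}'
      - service: timer.start
        target:
          entity_id: timer.dead_node_ping
        data:
          duration: "00:01:00"

  - id: reset_dead_node_ping_attempts   # hypothetical id
    alias: Reset dead node ping attempts
    trigger:
      # Start counting from zero again once all nodes are alive
      - platform: state
        entity_id: sensor.dead_zwave_devices
        to: '0'
    action:
      - service: counter.reset
        target:
          entity_id: counter.dead_node_ping_attempts
```

The trade-off is that once the limit is reached, nothing is pinged until the sensor returns to 0 or the counter is reset by hand, so a node that dies later while another one is still dead would not be pinged either.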

Why don’t you just get the device and have the automation call the zwave_js.ping action on it instead of a button press?

I had that before, but it seems to have been replaced by the button press method?

Not that I know of.

I just use this automation. I don’t actually care about which nodes are dead, so I don’t bother with a template sensor. And usually one ping does it for me.

I could also simplify the entity condition with a template trigger, but as my list of z-wave nodes is static (and easy to add to via the GUI), I just list them all:

- id: '1728847220844'
  alias: Ping dead node
  description: ''
  trigger:
    - platform: state
      entity_id:
        - sensor.foyer_light_node_status
        - sensor.yard_lights_node_status
        - sensor.river_lights_node_status
        - sensor.kitchen_island_node_status
        - sensor.front_door_lock_node_status
        - sensor.living_room_3_way_node_status
        - sensor.rob_s_garage_door_node_status
        - sensor.living_room_pole_node_status
        - sensor.dining_room_lights_node_status
        - sensor.garage_door_lights_node_status
        - sensor.kitchen_hall_light_node_status
        - sensor.kitchen_spotlights_node_status
        - sensor.library_pole_light_node_status
        - sensor.rob_s_bedroom_light_node_status
        - sensor.upstairs_hall_light_node_status
        - sensor.athena_s_office_light_node_status
        - sensor.front_driveway_lights_node_status
        - sensor.lights_outside_garage_node_status
        - sensor.living_room_spotlights_node_status
        - sensor.upstairs_range_extender_node_status
        - sensor.downstairs_range_extender_node_status
      to:
        - unavailable
        - dead
  condition: []
  action:
    - action: zwave_js.ping
      target:
        device_id: ' {{device_id(trigger.entity_id)}}'
      data: {}
  mode: single
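For the template trigger variant mentioned above, a rough sketch could look like the following. It is untested and assumes that the integration domain zwave_js can be passed to integration_entities and that all node status sensors end in _node_status; the automation id is made up.

```yaml
# Sketch only: id is hypothetical; assumes *_node_status sensors from Z-Wave JS.
- id: ping_dead_nodes_template
  alias: Ping dead nodes (template trigger)
  description: ''
  trigger:
    - platform: template
      # True as soon as at least one node status sensor is dead or unavailable
      value_template: >
        {{ expand(integration_entities('zwave_js'))
           | selectattr('entity_id', 'search', '_node_status$')
           | selectattr('state', 'in', ['dead', 'unavailable'])
           | list | count > 0 }}
  condition: []
  action:
    # Ping every node whose status sensor is currently dead or unavailable
    - action: zwave_js.ping
      target:
        entity_id: >
          {{ expand(integration_entities('zwave_js'))
             | selectattr('entity_id', 'search', '_node_status$')
             | selectattr('state', 'in', ['dead', 'unavailable'])
             | map(attribute='entity_id') | list }}
      data: {}
  mode: single
```

One caveat with this design: a template trigger only fires when the template changes from false to true, so a second node dying while the first is still dead would not fire it again. The explicit entity list does not have that limitation.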