I have ChatGPT set up through the OpenAI integration, and I have Home Assistant Assist set up as well. Both work fine. How can I store the responses? I want to display the text on my dashboard. There don’t appear to be any attributes on the conversation entities that would be helpful. I know about input_text, but I don’t know how to pass the data to it (plus the character limit is only 255, which isn’t ideal).

I’m interested in something very similar.

I want to enable ChatGPT on multiple voice assistants in the house so that the kids can start using it too.

Mainly because of the kids, I want the following:

A log in a dedicated file with:

Time ; Device_name : question_asked : answer_received

Is this doable?

I’m also looking for a way to save the answer or the spoken text, to use it in the “input_text” or as a variable.

With Alexa it is available in plain text in the “last_called_summary” attribute.

Does anyone have an idea?

Here’s what I came up with for saving the response from ChatGPT, displaying the response on a dashboard, and casting that to my Google Nest Hub. With the Nest Hub, unfortunately TTS didn’t seem to work at the same time as displaying the dashboard, but TTS is an option if you have a different device or don’t care about being able to see the text.

In configuration.yaml, add this:

var:
  ai_response:
    initial_value: "Waiting for response..."
    restore: false
  ai_response_2:
    initial_value: "Waiting for response..."
    restore: false
  ai_response_3:
    initial_value: "Waiting for response..."
    restore: false

For my automation:

alias: AI response
description: ""
triggers:
  - trigger: conversation
    command:
      - "{question}"
conditions: []
actions:
  - action: notify.mobile_app_s23
    metadata: {}
    data:
      title: AI response
      message: "{{ chatgpt.response.speech.plain.speech }}"
  - choose:
      - conditions:
          - condition: zone
            entity_id: person.example
            zone: zone.home_expanded
        sequence:
          - action: dash_cast.load_url
            metadata: {}
            data:
              entity_id: media_player.google_nest_hub
              url: http://192.168.x.x/dashboard-experimental/0
              force: true
            enabled: true
          - data:
              agent_id: conversation.chatgpt
              text: >-
                "{{ trigger.slots.question }}" Give responses that can be easily
                read via text and can be read out loud without any weird
                characters. Do not spell out numbers. Use 12 hour time and
                imperial units. Give short answers when possible, but give more
                detail when necessary.
            action: conversation.process
            response_variable: chatgpt
          - variables:
              full_response: "{{ chatgpt.response.speech.plain.speech }}"
          - data:
              entity_id: var.ai_response
              value: "{{ full_response[:255] }}"
            action: var.set
          - data:
              entity_id: var.ai_response_2
              value: "{{ full_response[255:510] }}"
            action: var.set
          - data:
              entity_id: var.ai_response_3
              value: "{{ full_response[510:765] }}"
            action: var.set
          - delay:
              hours: 0
              minutes: 0
              seconds: 45
              milliseconds: 0
          - action: media_player.turn_off
            metadata: {}
            data: {}
            target:
              entity_id: media_player.google_nest_hub
      - conditions:
          - condition: not
            conditions:
              - condition: zone
                entity_id: person.example
                zone: zone.home_expanded
        sequence:
          - data:
              agent_id: conversation.chatgpt
              text: >-
                "{{ trigger.slots.question }}" Give responses that can be easily
                read via text and can be read out loud without any weird
                characters. Do not spell out numbers. Use 12 hour time and
                imperial units. Give short answers when possible, but give more
                detail when necessary.
            action: conversation.process
            response_variable: chatgpt
          - variables:
              full_response: "{{ chatgpt.response.speech.plain.speech }}"
          - data:
              entity_id: var.ai_response
              value: "{{ full_response[:255] }}"
            action: var.set
          - data:
              entity_id: var.ai_response_2
              value: "{{ full_response[255:510] }}"
            action: var.set
          - data:
              entity_id: var.ai_response_3
              value: "{{ full_response[510:765] }}"
            action: var.set
mode: single

var.ai_response kept saying “unknown” if the response was longer than 255 characters, so I split the answer into 3 chunks of up to 255 characters each. I’m not sure whether you can use input_text in a similar way in an automation like this. Obviously you need to set up the OpenAI integration if you want to use ChatGPT. My automation takes the message that I say or type to the Home Assistant Cloud Assist that I set up, and sends it to ChatGPT.
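A possible alternative to the three chunks, as an untested sketch and not the setup described above: the 255-character limit applies to an entity's state, while attributes can hold longer text, so a trigger-based template sensor could keep the whole answer in one attribute. The event name `ai_response_received`, the sensor name, and the attribute name `text` are all made up for illustration.

```yaml
# configuration.yaml (hypothetical names throughout)
template:
  - triggers:
      - trigger: event
        event_type: ai_response_received
    sensor:
      - name: "AI response full"
        # Keep the state short; only the attribute carries the long text.
        state: "{{ now().isoformat() }}"
        attributes:
          text: "{{ trigger.event.data.text }}"
```

The automation would then fire that event after conversation.process instead of calling var.set three times:

```yaml
  - event: ai_response_received
    event_data:
      text: "{{ chatgpt.response.speech.plain.speech }}"
```

Under these assumptions the dashboard card would read the `text` attribute of `sensor.ai_response_full` rather than joining three states.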

I display the answer on a dashboard using this (you’ll need wallpanel, dashcast, and button-card from HACS if you want to do it this way):

wallpanel:
  enabled: true
  fullscreen: true
  hide_sidebar: true
  idle_time: 0
views:
  - title: Home
    cards:
      - type: custom:button-card
        variables:
          background: /media/black_picture.jpg
          var_font_size: 50px
          var_input_text: This is a dynamic input text message.
          var_wrap: normal
        styles:
          grid:
            - grid-template-areas: input
            - grid-template-rows: 1fr
            - grid-template-columns: 1fr
          card:
            - background: black
            - background-size: cover
            - color: white
            - padding: 0px
          custom_fields:
            input:
              - font-size: '[[[ return variables.var_font_size ]]]'
              - white-space: '[[[ return variables.var_wrap ]]]'
              - padding: 1px
              - border-radius: 1px
              - width: 96%
              - text-align: start
              - text-wrap: wrap
              - justify-content: center
              - align-self: center
              - padding: 3%
              - background-color: rgba(0, 0, 0, 0.7)
              - color: white
        custom_fields:
          input: >
            [[[ return states['var.ai_response'].state + ' ' +
            states['var.ai_response_2'].state + ' ' +
            states['var.ai_response_3'].state ]]]
    type: panel

ChatGPT told me that you can save the response to a text file as well, but I didn’t try that. @adynis

@newneo You can download and use a helper in HACS that’s called “attribute as sensor”. Then you should be able to display the newly created sensor on a dashboard or use it in other ways.

It took me way too long to figure this stuff out, and my code might not be fully optimized, but it works for me and is hopefully helpful as a jumping off point for anyone looking to do a similar thing! If anybody wants more info, some of the resources I used were the View Assist documentation (mostly to make the dashboard): Info and Infopic Views | View Assist and this code/youtube video: A.I. in your Smart Home - by FutureSmartHome

@renee1: Thanks for posting. I didn’t manage to get into the details because I got stuck on understanding the basics:

So in order to get the “question” and “answer” you used an automation with trigger: conversation.

But in the documentation I find:

A sentence trigger fires when Assist matches a sentence from a voice assistant using the default conversation agent. Sentence triggers only work with Home Assistant Assist. External conversation agents such as OpenAI or Google Generative AI cannot be used to trigger automations.

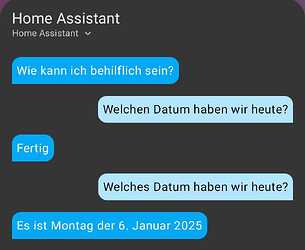

So … I’m confused about how it works. I see command: “{question}”. Does that mean it’s triggered every time I say something to the Assist device, even if I use OpenAI? (But then how does it get the answer, since that comes later?)

Command: “{question}” is triggered every time you use the home assistant Assist. (You can also set it up to be something like “ask ai {question}” which would trigger whenever you say to Assist: “ask AI xyz” if you only want it to trigger for specific things.) The action that includes the following specifies that whatever is in {question} will get sent to chatgpt:

- data:
    agent_id: conversation.chatgpt

The trigger has to use Assist, but for the actions you can change the agent ID to be whichever service you want to process the question. Does that info help? I’m not sure what you have set up now for voice assistants as far as the hardware side goes. To use Assist, some options include: using a USB microphone hooked up to the computer that’s running Home Assistant, using something like the new Home Assistant Voice Preview device, or setting up a tablet or phone to be able to listen for a wake word and respond (View Assist is one of many projects that do it that way). Otherwise you can type or talk to it in the Home Assistant app. There are also people who have made wall dashboards with a tablet where you press a button for Assist instead of using a wake word.
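As a minimal sketch of the narrower variant mentioned above, the trigger below only fires for sentences that start with “ask ai”, and whatever follows is captured in the `question` slot and passed to the agent. It reuses the `conversation.chatgpt` agent ID and the `chatgpt` response variable from the automation earlier in the thread; adjust both to your own setup.

```yaml
triggers:
  - trigger: conversation
    command:
      # Only sentences of the form "ask ai <anything>" match
      - "ask ai {question}"
actions:
  - action: conversation.process
    data:
      agent_id: conversation.chatgpt
      # The wildcard text captured by {question}
      text: "{{ trigger.slots.question }}"
    response_variable: chatgpt
```

With this trigger, other sentences are left to be handled by Assist as usual, which may be preferable if you still want normal voice commands to work.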

Thanks @renee1 for explaining.

I need to dig more.

I quickly tested only:

alias: "tmp: test speech automation"
description: ""
triggers:
  - trigger: conversation
    command: "{question}"
conditions: []
actions:
  - action: notify.mobile_app_mobil_adi
    metadata: {}
    data:
      message: "{{ chatgpt.response.speech.plain.speech }}"
mode: single

And it fails on chatgpt (although I have ChatGPT set up and it’s working). Also, while this automation is enabled, ChatGPT answers only “done” to whatever I ask it.

I’m expecting my Voice PE this week and will re-test with that.

You’ll need to include the conversation.process action. I was able to ask chatgpt if we can change the response from Assist, and it turns out that it’s possible, so I added the set_conversation_response action. Try this:

alias: "tmp: test speech automation"
description: ""
triggers:
  - trigger: conversation
    command: "{question}"
conditions: []
actions:
  - action: conversation.process
    metadata: {}
    data:
      agent_id: conversation.chatgpt
      text: >-
        "{{ trigger.slots.question }}"
    response_variable: chatgpt
  - set_conversation_response: "{{ chatgpt.response.speech.plain.speech }}"
  - action: notify.mobile_app_mobil_adi
    metadata: {}
    data:
      message: "{{ chatgpt.response.speech.plain.speech }}"
mode: single

Very good. Unfortunately it doesn’t work with “conversation.home_assistant”: the answer is always “Done” and not all commands are carried out.

@newneo Hm yeah, I tried testing it with the above code and am having the same issue when trying to process the question with Home Assistant. Did you set up Attribute as Sensor for the Alexa attribute? How do you have Alexa integrated into home assistant? I don’t use Alexa so I’m not as familiar with it, but if you include the code for the automation you’re currently using, I can try to figure it out. But yeah, as far as I know, my method doesn’t work if you’re trying to only use home assistant Assist.

But it works with Chat GPT !!!

thanks a lot !!

It’s not straightforward for me to understand why it works (to me it looks like the automation “steals” the question going to ChatGPT and then “calls” ChatGPT again with the same question, only to be able to get the answer from it). But the important thing is that it works, and I can use the question: {{ trigger.slots.question }} and the answer: {{ chatgpt.response.speech.plain.speech }} to log it in a file.

When I have the Voice PE device I will try to see if I can also get the device or the area that triggered this.

Later Edit: I tried with an m5stack and it’s easy to take also the device id / name, e.g.:

Device '{{ device_attr(trigger.device_id, "name") }}' from '{{area_name(trigger.device_id)}}' received question '{{ trigger.slots.question }}' and answered '{{ chatgpt.response.speech.plain.speech }}'
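As a rough sketch of how a line like this could be written to a log file: the action below assumes the File integration has been set up and has created a notify entity, here called `notify.chat_log` (a hypothetical name). It would go after the conversation.process step so that the `chatgpt` response variable already exists, and it assumes the question came from a device, so that `trigger.device_id` is set.

```yaml
  # Appended to the automation's actions, after conversation.process.
  # notify.chat_log is a hypothetical entity from the File integration.
  - action: notify.send_message
    target:
      entity_id: notify.chat_log
    data:
      # Folded into one line: time ; device : question : answer
      message: >-
        {{ now().strftime('%Y-%m-%d %H:%M:%S') }} ;
        {{ device_attr(trigger.device_id, "name") }} :
        {{ trigger.slots.question }} :
        {{ chatgpt.response.speech.plain.speech }}
```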

You’re welcome. Glad I could help! Yep, that’s basically my understanding for how it works too: home assistant sends the question to chatgpt, then looks for the response.

Something I observed, maybe it’s helpful:

In my automation I had a reference to device_id, to notify me about which device triggered it (see my previous posts). After I introduced this update, when I tried to ask ChatGPT something from the browser (so not through an Assist device), the only answer I got was:

“done”

(from the assist device it was still working fine, only from the computer was “failing”)

As a result I added a condition to check device_id: if it is not valid, then the question comes from the browser and I should not cause errors in the automation by trying to use device_id. If device_id is valid, then I can request the device name and area.

So … conclusion: it looks like if this automation fails, Assist will just answer “done” to whatever you ask. Avoid having errors in the automation.
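One way such a check could look, as a sketch that assumes `trigger.device_id` is empty when the question is typed in the browser. The variable name `source_device` and the fallback label "browser" are made up for illustration.

```yaml
actions:
  - variables:
      # Only look up the device name when a device actually triggered Assist;
      # the inline if skips device_attr() entirely when device_id is empty.
      source_device: >-
        {{ device_attr(trigger.device_id, "name")
           if trigger.device_id else "browser" }}
```

Later steps can then use `{{ source_device }}` without having to touch `trigger.device_id` again.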

Later edit:

I’ve written an answer with the full solution inspired by this discussion: Log to a file everything "discussed" with ChatGPT on all Voice Assist devices - #2 by adynis