Thank you! So in addition to a task that retrieves the latest version data (every 120 minutes) there’s a task that performs a connectivity check (and both tasks use the same base URL).

The only fly in the ointment is that the connectivity check’s polling interval doesn’t appear to match the observed interval:

    # Connectivity
    self.sys_scheduler.register_task(
        self._check_connectivity, RUN_CHECK_CONNECTIVITY
    )

I assumed all polling intervals were expressed in seconds but that would imply a 30-second interval! Even if it were in minutes it still doesn’t jibe with the observed 10-minute interval.

There’s still a missing piece to this puzzle.

It’s throttled in the function at the bottom of that file if internet is available.

Yeah, my mistake, I didn’t examine check_connectivity's code.

It explicitly mentions it performs a full version check “each 10 min”. I assume the 30-second interval represents the ‘clock tick’ used to execute the task but the task itself will poll the URL at a different interval.
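For anyone following along, the "clock tick versus actual poll" idea can be sketched roughly as below. This is not Supervisor's code: the class, the `simulate` helper and the constant names are made up for illustration, and the sketch assumes the connection stays up the whole time. Only the 30-second tick and the 10-minute full check come from this thread.

```python
from datetime import datetime, timedelta

TICK_SECONDS = 30                         # how often the scheduler calls the task
FULL_CHECK_EVERY = timedelta(minutes=10)  # how often the URL is really polled


class ConnectivityTask:
    """Hypothetical task: does nothing on most ticks, polls only when due."""

    def __init__(self):
        self.last_full_check = None
        self.full_checks = 0

    def run(self, now):
        due = (
            self.last_full_check is None
            or now - self.last_full_check >= FULL_CHECK_EVERY
        )
        if not due:
            return False  # throttled: no request leaves the machine
        self.last_full_check = now
        self.full_checks += 1  # a real task would poll the URL here
        return True


def simulate(duration):
    """Run the task on every tick for `duration`; return (ticks, full checks)."""
    task = ConnectivityTask()
    start = datetime(2021, 1, 1)
    ticks = int(duration.total_seconds() // TICK_SECONDS)
    for i in range(ticks):
        task.run(start + timedelta(seconds=i * TICK_SECONDS))
    return ticks, task.full_checks


print(simulate(timedelta(hours=1)))  # 120 ticks, but only 6 full checks
```

So a task registered with a 30-second interval can still produce network traffic only once every 10 minutes, which would be consistent with the observed interval.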

Thanks again! I need some time to understand what it’s doing exactly before I attempt to modify it.

Don’t modify it;

- It’s lost on updates

- Several functions inside the Supervisor blindly rely on that functioning as written

I have a test and a production system. I intend to modify the code used in the test system and observe the results. If there are no ill effects over several days then I’ll consider modifying the production system.

Thanks for the warning about losing changes on updates. I’m aware of this because I run a patch on the homekit_controller integration every time I update the production system.

Modify an integration’s source code inside a docker container.

FWIW, the integration reports light level as a float which results in generating more data than I find to be necessary. The patch simply truncates the value to int. Practically speaking, I’ve found no need for a light level of 165.23 vs simply 165.

UPDATE

I’ve increased the connectivity check’s polling interval to 2 hours with a full version-check occurring every 24 hours. Nothing seems to have been negatively affected. For convenience, I’ve created a shell script to ‘patch’ the code because, as mentioned, the modifications are undone when Supervisor is updated.
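A patch of this kind could look something like the sketch below, written in Python rather than shell. It is only an illustration of the approach: the `patch_constant` helper and the sample text are hypothetical, `RUN_CHECK_CONNECTIVITY` with a value of 30 is the only name taken from this thread, and the 7200 is just an example value (2 hours in seconds). It says nothing about which two integers were actually changed.

```python
import re


def patch_constant(source, name, new_value):
    """Return `source` with the assignment `name = <int>` set to `new_value`."""
    pattern = re.compile(rf"^({re.escape(name)}\s*=\s*)\d+", flags=re.MULTILINE)
    patched, count = pattern.subn(lambda m: f"{m.group(1)}{new_value}", source)
    if count != 1:
        # Refuse to guess if the file no longer looks the way we expect,
        # e.g. after an update renamed or removed the constant.
        raise ValueError(f"expected one assignment to {name}, found {count}")
    return patched


# Made-up stand-in for the file being patched.
sample = "SOME_OTHER_INTERVAL = 120\nRUN_CHECK_CONNECTIVITY = 30\n"
print(patch_constant(sample, "RUN_CHECK_CONNECTIVITY", 7200))
```

Failing loudly when the constant is not found exactly once matters here, because the patch has to be re-applied after every Supervisor update and the file may have changed in the meantime.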

That all works fine on my production system running Home Assistant Supervised on Debian. However, my test system runs Home Assistant OS and it performs two independent connectivity checks.

- Supervisor checks connectivity (in the same way as in Home Assistant Supervised)

- The OS itself performs a connectivity check every 5 minutes (via NetworkManager).

I’ve tried to modify NetworkManager.conf, following the documentation’s instructions, but it seems to reject all modifications to connectivity. I’ve posted an Issue in the Operating System repo to determine if this is by design or a bug.
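To check what interval a given NetworkManager.conf actually asks for, a small parser like the one below may help. It is a sketch under assumptions: the sample file content and the `connectivity_interval` helper are made up, the `[connectivity]` section with an `interval` key (in seconds) is as I understand the NetworkManager documentation, and the 300-second fallback is assumed because it matches the 5-minute checks observed above. It only reads the file; it does not show whether Home Assistant OS honours the setting.

```python
import configparser

DEFAULT_INTERVAL = 300  # seconds; assumed default, matches the observed 5 minutes


def connectivity_interval(conf_text):
    """Return the [connectivity] interval from NetworkManager.conf-style text."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    return parser.getint("connectivity", "interval", fallback=DEFAULT_INTERVAL)


# Hypothetical file content; the URI is a placeholder, not the real check URL.
sample = """
[main]
dhcp=internal

[connectivity]
uri=http://example.invalid/check
interval=0
"""

print(connectivity_interval(sample))          # value set in the sample
print(connectivity_interval("[main]\n"))      # falls back to the assumed default
```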

tl;dr

Currently, I am unable to prevent Home Assistant OS from performing an Internet connectivity check every 5 minutes. In contrast, I’ve had success with Home Assistant Supervised.

UPDATE

Sadly, my simple modification of Supervisor’s source-code is no longer permitted.

The following PR 2789 was implemented in supervisor-2021.04.3 and now checks Supervisor’s source-code for modifications. If it encounters any, it marks the instance as being unsupported and unhealthy.

Although the PR claims the following:

People can run what ever they want, it should just not show as supported.

It didn’t meet its stated goal; you can’t run whatever you want. Now that the system is deemed to be unhealthy it prevents you from installing additional Add-ons.

In addition, of the two systems I have (both running Home Assistant Supervised on Debian) the one on an RPI3 prevented me from opening the existing, installed Add-ons. I’m not sure why it behaved differently from the other (which is installed on a generic Intel-based machine) but it certainly got my attention because that’s how I discovered it was unhealthy due to ‘source_mods’.

I suspect the PR was introduced to protect users from malicious changes to Supervisor. However, anyone able to make such changes can also disable the new self-checking function (thereby rendering the PR moot).

I don’t want to disable the self-check function in order to throttle connectivity/version checks, so I’ve asked the development team to consider making the intervals user-definable. For example, the mods I made (consisting of simply altering two integer values) reduce the connectivity check to every few hours and the version check to once a day.

On a separate note, if you were the victim of malicious changes to Supervisor, there’s no ha supervisor command to replace the existing Supervisor docker image with a fresh copy (update won’t work if you’re already running the latest version, neither will repair). I imagine you’ll have to use docker pull assuming you know something about docker while you’re freaking out that you somehow managed to install a hacked copy of Supervisor.

Just wanted to chime in on this: I’m as frustrated by Home Assistant’s near-compulsive version checking as you are. Hopefully someone will acknowledge this issue and allow the system to chill!


The linked GitHub Issue (above) explains how I mitigate it now. The first post in the Issue’s thread describes the two integer values that I change in tasks.py. The last post describes the Home Assistant CLI command to disable content checking (i.e. refrain from detecting source code modifications). The result is that my instance is flagged as unsupported but not unhealthy. That arrangement tolerates the patched source code while still letting me use the Add-on store and upgrade all software.

Ideally, additional control should be provided over Supervisor’s configuration, including the frequency of connectivity and version checks. If you have not voted for the following Feature Request yet, please consider it:

https://community.home-assistant.io/t/allow-some-degree-of-control-over-supervisor/306830/7

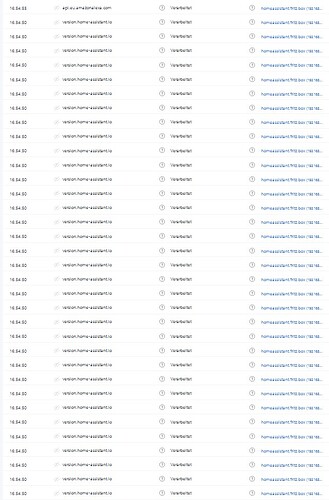

I know this is old, but I stumbled on the same issue today for the version.home-assistant.io domain. Lots and lots of DNS requests in my Pi-Hole.

I thought of one new piece of info that hasn’t been discussed yet.

The frequency of a DNS lookup is related to the TTL of the DNS record. You won’t see a record in the PiHole for every call HA makes to the version.home-assistant.io domain.

Any network client making a request (like Home Assistant) will query DNS to look up the IP address of the remote server. The DNS response will contain a value called the “time-to-live” or TTL. The TTL is the duration that a client should cache the result. If the client makes another request before the TTL expires, the client’s OS should serve up the response from its own DNS cache.

Once the TTL expires, the next call to that server will cause a new DNS lookup.

Looking at the TTL for version.home-assistant.io: MX Toolbox DNS Lookup

It has a rather short TTL of 5 minutes. This explains why we see so many DNS requests. The cache period is very short.
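The effect of the TTL can be sketched with a toy simulation. It assumes a single, well-behaved cache sitting between the client and Pi-hole and evenly spaced requests; the `dns_lookups` function and its parameters are made up for illustration, and only the 5-minute TTL and the 10-minute check interval come from this thread.

```python
def dns_lookups(request_interval, ttl, duration):
    """Count requests that miss a TTL-based cache during `duration` seconds."""
    lookups = 0
    expires_at = 0  # the cache starts out empty
    for now in range(0, duration, request_interval):
        if now >= expires_at:
            lookups += 1            # cache miss: a query reaches the DNS server
            expires_at = now + ttl  # the answer is cached for `ttl` seconds
    return lookups


DAY = 24 * 3600

# One request every 10 minutes with a 5-minute TTL: every request misses.
print(dns_lookups(request_interval=600, ttl=300, duration=DAY))   # 144

# The same requests with a 1-hour TTL: most are answered from the cache.
print(dns_lookups(request_interval=600, ttl=3600, duration=DAY))  # 24
```

In other words, whenever the TTL is shorter than the gap between requests, the cache never helps and each request shows up in the DNS log.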

Too short?

Valid reasons for a short cache period might be that the owner feels they might need to relocate the services, or fail over quickly in the event of an incident.

Looking at Pi-Hole, I’m seeing a LOT of hits to version.home-assistant.io. This is 3,500 over the last 24 hours. That’s crazy and excessive.

Found this thread because I saw an enormous number of DNS requests from HA to my Pihole DNS.

This request for DNS to “version.home-assistant.io” is easily the “top permitted domain” with 5 times as many requests as number 2 in the list.

I currently have 2561 requests made in the last approximately 24 hours.

I find it highly unlikely that HA is unable to function properly, if it is not able to check the version or check if it is online, THAT often.

I’m in the same spot, but I have 18,000 requests to version.home-assistant.io, presumably in the last 24 hours, as I’m pretty sure that’s what my pi-hole shows by default. So that’s a request every 5 seconds.
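To put the daily counts reported so far on the same footing, the average gap between requests can be worked out as below. The helper name is made up, and it assumes each count really covers a 24-hour window and that requests are evenly spread, which bursts would violate.

```python
def mean_interval_seconds(requests, window_hours=24):
    """Average number of seconds between requests over the window."""
    return window_hours * 3600 / requests


# Daily counts quoted in the posts above.
for count in (3500, 2561, 18000):
    print(f"{count} requests/day: one every {mean_interval_seconds(count):.1f} s")
```

For 18,000 requests this gives 4.8 seconds, which is where the "every 5 seconds" figure comes from.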

Hi Everyone,

Here it’s 1,120 requests per 24 hours, perhaps a little too chatty. It is my second most requested address after Google, which is over-requested by moode-audio. Every 10 minutes I get a burst of 20 requests to version.home-assistant.io.

I use Home Assistant as my home automation system precisely so that I don’t rely too much on external services, yet that is not exactly what happens. And I’m not even talking about the CO2-equivalent emissions generated by thousands of servers asking thousands of times a day whether the internet is reachable or whether a new version of Home Assistant is available ;-).

If I add to that count all the arpa DNS queries for my internal addresses (e.g. 184.0.168.192.in-addr.arpa), which produce “not found Domain” responses, Home Assistant is generating one third of my daily DNS traffic.

Some way to reduce this traffic would be highly welcome.

All the best, and happy new year to the developers

I also encountered this with recent releases on a HASSOS-based RPi4. Can’t put my finger on the exact release but it was likely one of the 2021.12.* releases. It even DDoS’ed my Pi-hole instance (on a spare RPi3) because of the 200 req/s limit, so everything went haywire. Back to AGH now, where I at least know where to turn off those limits. Nevertheless, there is something seriously going wrong. Don’t know on which side, though.

Edit: @coissac : As for your internal queries, on AGH you have the possibility to add a block like

[/fritz.box/]192.168.178.1

[/168.192.in-addr.arpa/]192.168.178.1

to your upstream resolver list, which in my case forwards these queries to my router (a FB, obviously).

You have 20x every 10 minutes? I checked my DNS logs and I have 1 query every 10 minutes.

Sounds like a bug, I would expect only 1 call every 10 minutes.

Yeah, could be an ISP thing since I’ve observed similar things for msftconnect.com and the synology online check recently. Will keep an eye out whether things return to normal eventually…