You could train a custom model if you wanted, but it’s quite a bit of work.

Yeah, sounds like it. I think it should be possible to add a button on the event to upload the image with the rectangle, to reduce false positives. I'll wait for somebody to do it, as I have no idea how.

So what do you use now? I use Docker on Win 10, not a VM…

I’m using Win 10 because I have Blue Iris installed on it as well.

I did the passthrough of the GPU.

Everything is way smoother, but I still have huge buffering time gaps. CPU usage is 8% across 8 cores and RAM is still available.

Is there any chance to speed up the buffering?

Thanks!!!

Does anyone know if there is a way to access the most recent clip?

I can access the clips using the web interface, or the media browser in lovelace but I am looking to create a sensor that has the path to the newest clip. I have attempted the http api for this but I have no idea how to extract the api data to get the most recent clip id and build out from there.

Thanks

The HTTP API just returns JSON, so you can use a RESTful sensor in Home Assistant to pull the first value of the returned events array.

Something like this would probably work to get the ID of the first event that has a clip (untested):

    sensor:
      - platform: rest
        name: Latest Frigate Clip ID
        resource: http://yourfrigateaddress:5000/api/events?has_clip=1
        value_template: '{{ value_json[0].id }}'

You should then easily be able to construct the path to the clip with the ID.
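For what it's worth, here is a rough sketch of that last step (untested). It assumes each event in the returned JSON also carries a `camera` field and that clips are stored as `<camera>-<id>.mp4`; please check both against your Frigate version. The sensor name is made up.

```yaml
sensor:
  - platform: rest
    # Hypothetical name; pick whatever you like.
    name: Latest Frigate Clip File
    resource: http://yourfrigateaddress:5000/api/events?has_clip=1
    # Assumption: clip files are named <camera>-<id>.mp4.
    value_template: "{{ value_json[0].camera }}-{{ value_json[0].id }}.mp4"
```

The state is then just the file name, so you would prefix it with wherever your clips folder is exposed.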

Buffering time gaps, you mean delay? 8% with 8 cores is fine; I gave HASS only 4 cores.

I have not changed any other settings and have no problems. There is a delay of a few seconds, which I don't care about.

I am now going through another person's config, in which motionEye, on detecting motion, sends a command to Frigate over MQTT to turn on object detection, and Frigate then sends a command to the motionEye API to start recording to a local network share, as Frigate doesn't have this out of the box. (I have been trying for several days.) This is the only solution that should work.

Or I just leave Frigate's motion detection on, read the MQTT messages, and then send the command to motionEye.

When I have set up all the cameras, I will see which approach gives the lowest CPU usage.

Why not let frigate record the clips? When running both motioneye and frigate, they both have to decode the video stream, so you are doubling up on CPU usage. Frigate has motion detection built in, so it isn’t running detection all the time.

I have sent you a PM :-X with an explanation of why Frigate can't record the way I need :-X <3

Hi, sorry to PM you directly, I have run out of options and ideas on this forum.

I have HassOS on Proxmox and have installed the Frigate NVR add-on in Home Assistant.

I am not able to mount a network share into Frigate NVR.

I am able to mount it in HassOS; in the media sidebar I can see the files that I put on the network share myself.

But when I run the Frigate NVR add-on, it seems to either overwrite or remove the mounted share, or create its own environment folder?

As there are a lot of clips, I want to save them directly on the network share. Otherwise a lot of data is stored inside the HASS VM and it causes trouble, like big backups, running out of free space, etc.

Thank you <3

P.S. The only thing I found is that you need to keep the database local when you use a network share. There is no other documentation on how to set it up so that Frigate NVR can see it.

Actually, motionEye has a setting for stream passthrough.

So now I want to set up amcrest to read motion detection directly from the camera's trigger.

That should then turn on Frigate's detection analysis, which in turn triggers motionEye to save clips to the network share.

So there should not be any CPU doubling, I hope. Still setting it up.

I’d also be interested in figuring out how to map external storage. I’m running hassOs on proxmox as well and only have so much room in the VM so right now I don’t do clips to save space.

I have frigate running under docker on my synology with a coral and it works great. Uses only 20% CPU for 4 1080p streams.

Looking into proxmox, going to try running this and blueiris on it.

After playing around with the settings for a long time, I found that re-streaming to the RTMP feed is causing the issues. As I didn’t manage to find the right settings to fix this, I decided to disable the RTMP re-stream for now.

According to the documentation, I can try to fix the errors:

Some video feeds are not compatible with RTMP. If you are experiencing issues, check to make sure your camera feed is h264 with AAC audio. If your camera doesn’t support a compatible format for RTMP, you can use the ffmpeg args to re-encode it on the fly at the expense of increased CPU utilization.

In which section do I need to specify the parameters? Can that be done globally or do I need to set it for each camera?

More info wanted! Especially how I can copy that to proxmox, ubuntu, docker and frigate!

I had a similar issue with the audio stream of one of my cameras and suggest you try to change “-c copy” to “-c:v copy -c:a aac” in your output args. This will make sure your audio stream is compatible.
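As a sketch of where that could go (untested, and assuming a Frigate version whose config has an `ffmpeg.output_args.rtmp` key with `-c copy -f flv` as its default), it can be set once at the top level for all cameras, or under a single camera. The camera name `cam0` is just a placeholder.

```yaml
# Global: applies to all cameras unless a camera overrides it.
ffmpeg:
  output_args:
    rtmp: -c:v copy -c:a aac -f flv

cameras:
  cam0:  # hypothetical camera name
    ffmpeg:
      # inputs: ... (your existing stream definitions stay as they are)
      # Per-camera override, only needed if a single camera misbehaves.
      output_args:
        rtmp: -c:v copy -c:a aac -f flv
```

You would normally use one or the other, not both. Re-encoding the audio to AAC costs a bit of CPU, so the per-camera form is probably the better choice if only one feed has the problem.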

So I have tested it:

This checks motion detection from the Dahua trigger itself (any motion detection):

configuration.yaml

    amcrest:
      - host: 192.168.5.30
        username: xxx
        password: xxx
        name: "CAM0"
        stream_source: rtsp
        binary_sensors:
          - motion_detected
          - online

Then I trigger Frigate over MQTT to start motion detection.
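A possible way to wire up that step, as an untested sketch. It assumes the amcrest sensor ends up as `binary_sensor.cam0_motion_detected`, that the camera is called `cam0` in Frigate, and that your Frigate version listens on the `frigate/<camera_name>/detect/set` topic with `ON`/`OFF` payloads.

```yaml
automation:
  - alias: Enable Frigate detection on camera motion
    trigger:
      - platform: state
        entity_id: binary_sensor.cam0_motion_detected  # assumed entity id
        to: "on"
    action:
      - service: mqtt.publish
        data:
          topic: frigate/cam0/detect/set  # assumed Frigate camera name "cam0"
          payload: "ON"
```

A second automation with `to: "off"` and payload `OFF` would switch detection back off when the camera stops reporting motion.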

After that, if Frigate reports person >= 1 over MQTT, I send commands to the motionEye web control with:

switches.yaml

    - platform: command_line
      scan_interval: 35
      switches:
        cam9md:
          command_on: 'curl -k "http://192.168.5.6:7999/1/detection/start"'
          command_off: 'curl -k "http://192.168.5.6:7999/1/detection/pause"'
          command_state: 'curl -s "http://192.168.5.6:7999/1/detection/status" | grep -q ACTIVE'

And the motion eye is saving all to network storage.
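The "person >= 1" check could look roughly like this (untested). It assumes Frigate publishes the current person count on `frigate/<camera_name>/person`, that the camera is named `cam9` in Frigate (a made-up name), and that the command line switch shows up as `switch.cam9md`.

```yaml
automation:
  - alias: Start motionEye detection when Frigate sees a person
    trigger:
      - platform: mqtt
        topic: frigate/cam9/person  # assumed Frigate camera name "cam9"
    condition:
      # The payload is expected to be the object count; treat anything
      # that is not a number as 0.
      - condition: template
        value_template: "{{ trigger.payload | int(0) >= 1 }}"
    action:
      - service: switch.turn_on
        entity_id: switch.cam9md
```

A matching automation that calls `switch.turn_off` when the count drops back to 0 would pause motionEye again.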

Everything is fine, but the captured video is laggy, with 80% usage of my 4 vCPUs in Proxmox.

When Frigate was capturing, there were no lags…

So I hope @blakeblackshear will wake up and tell us how to change the storage to a local network share, otherwise it is not very usable… ;-( I will test it with all the cameras when I have more time.

It is strange, as motionEye is caching to RAM, so why are there lags…

Feel free to ask me anything if you are interested.

EDIT 1:

I assigned 8 vCPUs and 8 GB of RAM.

50% usage, still lags…

EDIT 2: WOOHOOO DONE !!! WORKING PERFECTLY !!! WOOOHOOO

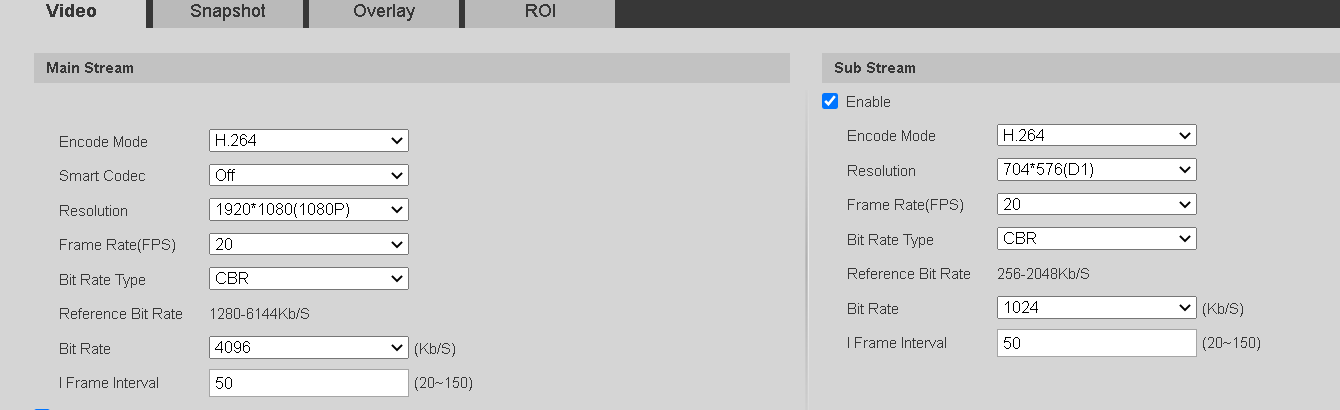

The problem was the motionEye frame rate settings and the Dahua FPS, as per the picture.

NOW WORKING PERFECTLY FINE !!! When I have time, I will set up all the cameras.

It will be a big pain in the ass with proxmox. Someone else will have to help you there. VMs are the enemy of Coral.

:))))))))))))))))))))) SOLVED

Until @blakeblackshear adds network share support, I will use this solution.