Possibly. From what I have found so far, it’s not so much a CPU overhead as a bandwidth overhead: it’s one larger stream from the start, rather than, say, two separate streams. Granted, it still relies on the camera itself supporting it (dubious at best) and on a decoder properly understanding it, which leads to the next main issue: how to tell the ffmpeg/decoder process what to pull from the stream. It is something that my cameras support in theory, and ffmpeg also has some level of support for it in theory. Whether it yields anything useful, only time will tell. At the very least I can manipulate the iframe intervals to get things working well in the current form, then play with SVC and see if it brings any benefits.

Hi @claymen,

I’m trying to follow your explanation. Is your suggestion to reduce the iframe interval to the minimum the camera allows while keeping a higher frame rate?

Is that correct?

Quick question for those who have the Coral USB accelerator: what do you typically see for inference speeds? Mine is currently between 80-150 as shown on the debug page. I was expecting it to be much faster than that, because the regular CPU (NUC 8th gen i5) seems to have consistent speeds between 50-80. I get the following lines on startup, so I am sure that the Coral device is being utilized.

    detector.coral INFO : Starting detection process: 37
    frigate.edgetpu INFO : Attempting to load TPU as usb
    frigate.edgetpu INFO : TPU found

Are you running in a VM?

Yes. I’m running Ubuntu 20.10 as my host OS and HASSOS in a KVM setup through virt-manager.

Does a VM matter? Maybe I missed something in the documentation. Protection mode is set to off.

Edit: Just wanted to add how much I appreciate all the work you put into this project, blakeblackshear. This is easily my most used integration in Home Assistant.

There are industrial machine vision cameras that will give you a raw stream, e.g. FLIR GigE or Basler GigE. These are more expensive than a security camera and you need to provide your own lens and housing.

It’s almost certainly because you are running in a VM. I would recommend trying to run directly on the host with docker and comparing speeds. The documentation definitely advises against running in a VM.

This is the export:

[{"id":"90672d10.e9562","type":"mqtt in","z":"cc80fdd9.36949","name":"","topic":"frigate/porch/person/snapshot","qos":"2","datatype":"auto","broker":"da7b9fe8.ef7f","x":260,"y":180,"wires":[["9bf4f457.8e5e08"]]},{"id":"2006a8fa.a2a618","type":"file","z":"cc80fdd9.36949","name":"","filename":"","appendNewline":false,"createDir":true,"overwriteFile":"false","encoding":"none","x":1000,"y":180,"wires":[[]]},{"id":"9bf4f457.8e5e08","type":"change","z":"cc80fdd9.36949","name":"","rules":[{"t":"set","p":"filename","pt":"msg","to":"","tot":"date"}],"action":"","property":"","from":"","to":"","reg":false,"x":600,"y":180,"wires":[["94353c5f.fa451"]]},{"id":"94353c5f.fa451","type":"function","z":"cc80fdd9.36949","name":"","func":"msg.filename = \"/config/frigatesnaps/\" + msg.filename + \".jpg\";\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","x":830,"y":180,"wires":[["2006a8fa.a2a618"]]},{"id":"da7b9fe8.ef7f","type":"mqtt-broker","name":"mqtt","broker":"192.168.1.180","port":"1883","clientid":"","usetls":false,"compatmode":false,"keepalive":"60","cleansession":true,"birthTopic":"","birthQos":"0","birthPayload":"","closeTopic":"","closeQos":"0","closePayload":"","willTopic":"","willQos":"0","willPayload":""}]

It can’t hurt to try this I guess, but there has to be a more elegant solution (this is just a quick test method, being new to Node Red myself).

I added the set msg.filename etc to give each image a unique ID, rather than being overwritten each time. The frigatesnaps folder from the test now has hundreds of person images from my porch camera.
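
For anyone reading the export rather than importing it, the two middle nodes boil down to something like the following. This is only a rough Python sketch of the idea, not Node-RED code: `snapshot_path` is a made-up helper name, and it assumes the change node's date option puts a millisecond timestamp into msg.filename before the function node wraps it in the folder and extension.

```python
import time

SNAPSHOT_DIR = "/config/frigatesnaps/"  # folder used in the flow's function node


def snapshot_path(timestamp_ms=None):
    """Build a unique snapshot filename from a millisecond timestamp."""
    if timestamp_ms is None:
        # Assumption: mirrors the change node setting msg.filename to "now"
        timestamp_ms = int(time.time() * 1000)
    return f"{SNAPSHOT_DIR}{timestamp_ms}.jpg"


# A fixed timestamp makes the result easy to check by eye
print(snapshot_path(1609459200000))
```

Because the name comes from the timestamp, each incoming snapshot lands in its own file instead of overwriting the previous one.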

Hi, just installed Frigate, looks awesome!

But I would like to get some insights on how these values work?

    objects:
      track:
        - person
      filters:
        person:
          min_area: 5000
          max_area: 100000
          min_score: 0.5
          threshold: 0.6

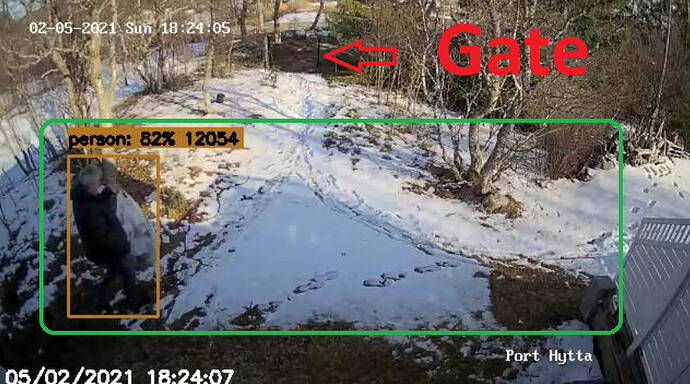

Like, I have a camera up under the roof pointing towards the gate.

    roles:
      - detect

… are set with the substream of the cam with

    width: 640
    height: 360

When testing, I only got the confirmation/best image when the person was almost at the building, not when they were further away. How do min_area/max_area work in combination with the resolution of the substream?

The images show a header with person: 82% 12054. What is the value 12054?

The attached image shows an example. It never detects and scores a person when the person is outside the green frame I added manually, e.g. coming in from the gate. It finally scores when the person is at the side, just about leaving the image at the lower left or lower right…

So, basically, how can I make Frigate detect the person a bit further away?

Sort of; it depends on what your goal is. The issue I have is that decoding 12 streams at 2688x1520 is a lot of processing overhead, especially as it then has to run motion detection on what is effectively 240fps of high-resolution video. I prefer a higher frame rate for CCTV recording, as it has yielded better footage when there have been incidents in the street: I was able to pull plates off a moving car because there were more frames to pick from than there would have been at a lower rate.

In the past this has been fine, as the actual motion detection has run off the secondary stream, which is a much lower resolution (640x360). But with the AI detection I have found that it doesn’t do as well at picking up people who are further away, because it has far fewer pixels to work from. Feeding it all 12 streams at full resolution absolutely hammers the processor even with Quick Sync, as motion detection still has to run on them.
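
To put rough numbers on the difference in load, here is a back-of-the-envelope sketch. It only counts raw pixels per second and ignores codec details entirely; it also assumes the substream runs at the same 20fps as the main stream, which may not be true on every camera. `pixels_per_second` is just an illustrative helper.

```python
def pixels_per_second(width, height, fps, cameras=1):
    """Raw pixel throughput a decoder/motion detector has to look at."""
    return width * height * fps * cameras


# 12 cameras at 20fps, main stream vs. substream (substream fps is an assumption)
main = pixels_per_second(2688, 1520, 20, cameras=12)
sub = pixels_per_second(640, 360, 20, cameras=12)

print(f"main streams: {main:,} px/s")
print(f"sub streams:  {sub:,} px/s")
print(f"ratio: {main / sub:.1f}x")
```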

The problem is that you can’t simply run the 20fps stream at 5fps if your iframe interval is quite high, which is often the case to reduce bandwidth or improve image quality at a given bandwidth. In my example of a 20fps stream with an iframe interval of 20, there are 19 frames that all rely on that iframe, so you can’t just decode 5fps: the decoder needs the iframe plus the frames after it, processed one after the other. This is how h264 and other codecs reduce the video data size, by in effect storing the incremental changes between each frame since the iframe.
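
A small sketch of why dropping frames after decoding saves so little. It assumes the simplest possible layout, one iframe followed by a chain of P-frames with no B-frames, where every P-frame depends on the frame before it. Real encoders can be more complicated, and `frames_decoded_per_gop` is a hypothetical helper, not anything from ffmpeg.

```python
def frames_decoded_per_gop(gop_size, keep_every):
    """Frames a decoder must process per GOP to output every `keep_every`-th frame.

    Assumes a simple iframe + P-frame chain (no B-frames): each P-frame
    depends on the frame before it, back to the iframe that starts the GOP.
    """
    wanted = range(0, gop_size, keep_every)  # positions we want to keep
    last_wanted = max(wanted)
    # Everything from the iframe up to the last wanted frame must be decoded.
    return last_wanted + 1


# 20fps, iframe interval 20, keep every 4th frame (5fps out)
print(frames_decoded_per_gop(20, 4))  # 17 of the 20 frames still get decoded

# 20fps, iframe interval 4, keep every 4th frame (iframes only)
print(frames_decoded_per_gop(4, 4))   # 1 frame per GOP
```

With an interval of 20, getting 5fps out still means decoding 17 of every 20 frames. With an interval of 4, each kept frame is itself an iframe, so nothing else needs decoding.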

So the theory is that you can reduce your iframe interval (in my case from an interval of 20 at 20fps to an interval of 4 at 20fps) and then tell ffmpeg to decode only iframes, which results in an effective 5fps to process. This means the motion/AI detector has far less work to deal with: 60fps in total across the 12 cameras. When it does detect a person in those frames, it can then use MQTT with Node-Red to trigger BlueIris to start recording at the full frame rate (direct to disk).

In a perfect world, the camera would let me run two high-res streams at different frame rates: one at a lower frame rate for motion detection, and one at the higher/full rate. On the cameras I have, that isn’t an option. It may be possible with SVC, but that has its own set of complications.

Bear in mind that this is a very simplified view of it. This article has more info and some images which help to explain it.
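
The arithmetic behind those numbers, as a quick sketch. `keyframe_only_fps` is an illustrative helper. The ffmpeg part is my assumption about how to do the iframe-only decoding: `-skip_frame nokey` is a decoder option that discards non-keyframes and has to be given before `-i`, but I have not verified how it behaves with these particular cameras or inside Frigate.

```python
def keyframe_only_fps(stream_fps, iframe_interval):
    """Frames per second left when only iframes are decoded."""
    return stream_fps / iframe_interval


CAMERAS = 12
STREAM_FPS = 20

per_camera = keyframe_only_fps(STREAM_FPS, 4)
print(f"per camera:  {per_camera:g} fps")
print(f"all cameras: {CAMERAS * per_camera:g} fps (vs {CAMERAS * STREAM_FPS} fps decoding everything)")

# Assumption: ffmpeg decoder option that drops everything except keyframes.
# It is an input option, so it goes before "-i". Untested against these cameras.
KEYFRAME_ONLY_INPUT_ARGS = ["-skip_frame", "nokey"]
```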

https://www.securityinfowatch.com/video-surveillance/article/21124160/real-words-or-buzzwords-h264-and-iframes-pframes-and-bframes-part-2

You may be having the same issue I am. My understanding is that there just aren’t enough pixels that far back for it to reliably determine it’s a person. I found that after swapping to the primary high-resolution stream it would instantly pick up people at a similar sort of distance, which is why my line of thinking has been that it needs more resolution to find people.

Have you tested running it against the high resolution stream?

After reading your comment again and looking at the documentation, maybe I need to clarify. I installed Frigate as an add-on inside my HA VM, so it is set up as a docker container. Is it OK for Home Assistant to be installed as a VM with Frigate as a docker container in HA, without impacting Coral inference speed?

It will work, but you are running a docker container inside a VM that way. I suspect that is impacting the performance of the Coral. I would try running the frigate docker container on the host directly and comparing.

Ah, I see now. I’ll give that a shot and compare performance then.

Test this:

    objects:
      track:
        - person
      filters:
        person:
          min_area: 500
          max_area: 100000
          min_score: 0.5
          threshold: 0.6
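
On why lowering min_area may help: as I understand the Frigate docs, min_area and max_area are limits on the area of the detected bounding box (width x height, in pixels of the detect stream), and the number after the percentage in the snapshot label is that same area. Treat that as my reading rather than gospel. A minimal sketch of the check, with made-up box sizes and a hypothetical `passes_area_filter` helper:

```python
def passes_area_filter(box_width, box_height, min_area, max_area):
    """True if the bounding box area falls inside the configured limits."""
    area = box_width * box_height
    return min_area <= area <= max_area


# Hypothetical 40x40 px person at the gate: area 1600
print(passes_area_filter(40, 40, min_area=5000, max_area=100000))  # False
print(passes_area_filter(40, 40, min_area=500, max_area=100000))   # True

# Hypothetical 98x123 px box close to the camera: area 12054
print(passes_area_filter(98, 123, min_area=5000, max_area=100000))  # True
```

So with min_area at 5000, a small far-away person would be thrown out even if the model did spot them.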

My understanding is that detection is trained on 300x300px squares, which Frigate resizes motion detected areas to, to detect for objects (someone correct me if I’m wrong).

At 640x360, someone walking through your gate is probably 40x40px at a guess on that resolution? This is the key part of the documentation:

For the detect input, choose a camera resolution where the smallest object you want to detect barely fits inside a 300x300px square.

So to detect someone at the gate, I see two options:

- Increase the resolution

- Put another camera closer, where at 640x360 a person fits in 300x300px
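
To get a feel for the first option, here is a rough sketch of how the same person grows in pixels when the detect resolution goes up. The 40x40px starting size is only my guess from above, and `scaled_object_size` is an illustrative helper that assumes the same field of view at every resolution.

```python
def scaled_object_size(obj_w, obj_h, from_res, to_res):
    """Scale an object's pixel size from one stream resolution to another."""
    scale_x = to_res[0] / from_res[0]
    scale_y = to_res[1] / from_res[1]
    return round(obj_w * scale_x), round(obj_h * scale_y)


for res in [(640, 360), (1280, 720), (1920, 1080)]:
    w, h = scaled_object_size(40, 40, (640, 360), res)
    print(f"{res[0]}x{res[1]}: person ~{w}x{h}px, area {w * h}")
```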

I found something similar with a fisheye camera I have outside: I needed to use the maximum resolution on the camera (3072x2048) to detect objects further away and cover the whole back garden (so that the smallest object fits inside 300x300px). The one I have covering the front drive could work with a lower resolution, as it covers a smaller area (the front gate is closer to the camera than the back gate is).

I found that indoors, the minimum that generally worked for me was 1280x720 for detect. This allowed me to pick up someone walking by a doorframe at the far end of the room (a similar situation). Lower resolutions worked in the garage, and where the camera was facing the door that someone was walking through.

Thanks for the input, this will be tested

The cam facing the gate only has up to 640x360 (or 352x288) on the substream, but the main stream does have 1920x1080. Should I test with the main stream, and perhaps more than 5fps?

I also have another camera, but it is a Yunch and I have no documentation on this shitty cam (though it is PTZ and does have 25x optical zoom), so I only have the main stream at 1920x1080.

Frigate is running on an Intel NUC with an i7. I am waiting for my Coral Accelerator, but it won’t arrive in the next few weeks.

That’s more or less what I did. I found no benefit in going above the recommended FPS; even at that rate it can catch a dog running past.

But the detect resolution was key for me: working out the rough size of the object I wanted to capture at the resolution in use. I only actually use a substream for detect on one of my cameras, 1280x720 in the garage (main is 1920x1080).

Ok, so the 500 and 100000, what are those values actually? How do they impact the detection process?

Hi, I’m a Frigate newbie but got an inference speed of around 8.0 on:

CPU: E3-1225 v3 (Dell T20)

VM: ESXi 7.0 bare metal

OS: HASSOS hassos_ova-5.13.vmdk (Debian)

TPU: Google Coral USB using a separate USB PCIe card with passthru

Frigate: HA add-on

So maybe try with the vmdk image in your VM?