The biggest problem is the lack of beta testers. So few people install the betas that it is impossible to catch all bugs before release. It gets even worse because some people often advise not installing .0 releases and waiting until .2 or even .3 instead, so even fewer bugs get caught.

I install the beta every last Thursday of the month (the Wednesday release is too late for me, as I’m usually in bed by then), but since I don’t have many integrations, there’s not much to report.

And I’m the exact opposite of Francis: as my install has matured, I’ve intentionally slowed my pace of accepting change. I won’t beta test because, frankly, I don’t have time, and I prefer to treat my live HA installation like an enterprise production platform. No changes unless necessary.

Basically, remember that unlike a lot of home automation platforms, you DO NOT have to take the update.

Now, I’m a 25-year IT ops vet and will never tell you NOT to update, but you don’t have to update everything every release…

For me, quarterly will probably end up being my update cadence; it seems to offer a good balance of maintenance vs. features and patches.

And in defence of HA updates, in the 3 years I have been running HA I have only had one issue caused by updating. This was fixed with a patch after 2 days.

I always update as soon as possible, I always read the breaking changes while the update is in beta, and prepare for any changes I need to make before updating.

The issue with updating every 4 releases is that you will be up against a lot of breaking changes all at once. This will probably cause more issues than updating every month.

I don’t think any smart home software is as big, or supports as many devices, as HA does. That said, frequent updates are more or less needed to keep everything working.

I think it’s impossible to test all the add-ons (a few thousand, I guess) each time. Patches come because users report a bug or a non-working add-on.

Also… HACS is a community thing, not official HA stuff, so whatever breaks there is the add-on developer’s responsibility. I don’t think Nabu Casa checks those add-ons much. Now and then we even see a patch release of certain HACS add-ons before the official HA update (that’s when it pops up, but we can’t install it because we don’t have the required HA version yet).

One of the “bad” things about less frequent updates would be that if something breaks, we would have to wait longer for an update that fixes it…

Well, it is indeed a double-edged sword, and depending on the situation, frequent updates have good and bad sides… as usual. So, just a few of my thoughts about this:

- frequent updates ensure that bugs not caught during beta tests are resolved as quickly as possible

- frequent updates ensure that HA can keep up with the sometimes quite frequently changing APIs of the devices we integrate (would you be willing to wait 3 months for an update that lets you use your device again?). These changes are usually outside the HA developers’ control.

- Companies introduce new devices every month (if not every week). Some are very cool, but useless until the respective integration is adjusted and released to the community. A good example is Shelly, with tons of devices released over the past few months that would not work with HA unless the integration was updated.

- There are some drawbacks too… one is that updates come with some under-the-hood changes that require reconfiguring our instances (the weather integration removing forecast attributes is one example). If such big changes happen too frequently, it might make HA less attractive, especially to people who prefer a stable installation over new functionality.

- The above point leads me to another conclusion, which I follow personally: changes (especially breaking ones) accumulate relatively fast, so skipping versions and waiting several months to update to the latest version becomes risky. Finding and fixing all such changes across several skipped major versions might be a nightmare. So I update to every new version as soon as it is available. In most cases it brings nothing new to me in terms of functionality, but it ensures that every subsequent move to a new version is easier.

That won’t really help. I do software testing for a living, and I can guarantee that no amount of testing will ever catch all the bugs prior to a release.

I’ve seen some nasty bugs make it out to production, even when something’s been tested by people who do this thing 8 hours a day. Now, compare this scenario to HA, where you’re relying on a (relatively) shrinking base of volunteer testers with no formal experience of the testing process.

Yes, you could extend your release schedule. You could have a longer testing period. You could advise people how they should go about the testing process. It still doesn’t guarantee a release won’t contain bugs. Hotfixes will still be required, and someone else will come along and make a post similar to yours down the line.

What I can almost guarantee is if the release schedule is extended, someone will complain that they have to wait too long for X feature to be released, because you can’t please all the people all the time.

Personally, I think the monthly release schedule is a good compromise between too fast and too slow (we have 2-week release cycles at work, so, yeah).

Hotfixes are being treated with the urgency they deserve, which is why there have been 3 hotfixes so far. The devs could have bundled everything in one hotfix to keep the number low, but that would have just pissed off people who were stuck waiting for their issues to be resolved.

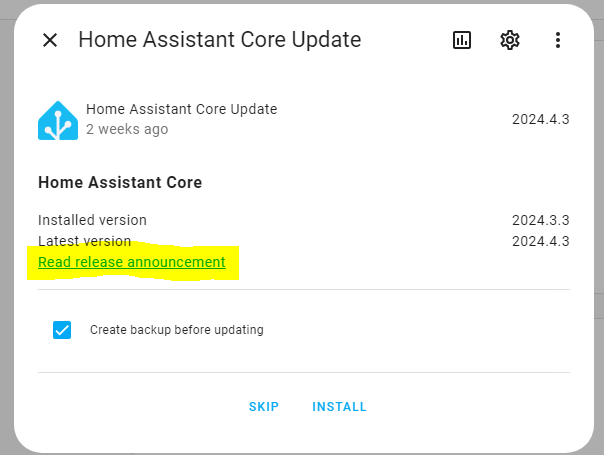

You’ll also find that the frequency of fixes isn’t linear - more issues are discovered in the first couple of weeks of a release than the following weeks. That explains why we’re at 2024.4.3 already.

Ultimately, the decision on when and whether to update to a given version rests with you. If you’re unsure about a particular release version, have a read through the corresponding release thread or GitHub. Take frequent backups, and if you’re still unsure, update to the release prior to that.

You’re totally in control of your update frequency, so there’s really no need for everyone else to follow your pace.

If you’re worried now, this animation showing HA development 2013-2021 will make you want to go and lie down in a darkened room:

https://www.visualsource.net/repo/github.com/home-assistant/core.

Looks like Cold Fusion…

When I started with HA 4 years ago, there were frequent updates that made something stop working.

Most of the time there was a quick fix; sometimes not, and sometimes it was just because I did something wrong.

But now that my installations have matured, something seldom breaks.

Note that HA has also matured a lot over the last 4 years.

Nevertheless, it is good practice to read the breaking changes list before rolling out an update.

More importantly, always make a backup before updating (I have an automated daily backup to my NAS).

Thanks, I hadn’t seen that, but it does cover much of the same ground.

I see what several commenters have said about needing frequent updates to keep up with new hardware, and for bug fixes. I can’t argue with that.

I guess it means we’re pretty much all beta testers, all the time. Maybe that’s inevitable in this space.

I think it would be nice if the forums here had an official Releases category where there would be a post every time there was an update to the core and to the OS. That would give a dedicated place to look to see if any issues had come up.

I mentioned Ubiquiti above, and they do this. I tend to wait until there are a significant number of comments about a release before I install it. If any complaints are about things I don’t have or am not doing then I feel more confident about updating.

Like several people said, backups are key. I’ve decided to make a snapshot or full VM backup before doing the updates. Then I can just go back to the way it was if something goes wrong. I still kind of hold my breath when I click the update button.

It’s typically posted in the Blog

And linked off the update notice

This is part of the reason I run Container rather than HAOS. I can change the image tag and flip between versions quickly and fairly safely. I also run MariaDB as a container and can quickly switch between “versions” as I need to.

So, if a release of HA includes a DB schema upgrade, I clone both the DB and HA containers and config folders and stash them in a backup. Then I pull the new HA version, let it upgrade the DB and test it. Should I need to roll back, it’s as simple as restoring my config directories, changing my docker-compose file, doing another pull and getting back to where I was. I might lose a few hours or a day of stats, but to me it feels a lot faster and safer. I’ve actually used this method on a test system of mine to answer questions about older versions of HA as well.
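For anyone wanting to script the snapshot/retag/roll-back routine described above, here is a minimal Python sketch. It is hypothetical and not something HA or Docker ships: it assumes the HA and database config folders are plain directories on the host, that the containers are stopped while they are copied, and that `docker-compose.yml` pins the image on an unquoted `image: <name>:<tag>` line. The helper names `snapshot_dirs`, `restore_dirs` and `set_image_tag` are made up for illustration.

```python
# Hypothetical helpers sketching the "snapshot, retag, roll back" routine for a
# Container-based HA install. Not part of Home Assistant or Docker.
import re
import shutil
import time
from pathlib import Path


def snapshot_dirs(dirs, backup_root, label=None):
    """Copy each directory into backup_root/<label>/<dir name>; return that folder."""
    label = label or time.strftime("%Y%m%d-%H%M%S")
    dest_root = Path(backup_root) / label
    for d in dirs:
        src = Path(d)
        # copytree creates dest_root and missing parents; it fails if the target exists
        shutil.copytree(src, dest_root / src.name)
    return dest_root


def restore_dirs(snapshot_root, dirs):
    """Replace each live directory with its copy from a snapshot_dirs() snapshot."""
    for d in dirs:
        target = Path(d)
        if target.exists():
            shutil.rmtree(target)
        shutil.copytree(Path(snapshot_root) / target.name, target)


def set_image_tag(compose_text, image, new_tag):
    """Swap the tag on an unquoted 'image: <image>:<tag>' line (assumed compose layout)."""
    pattern = re.compile(
        rf"^([ \t]*image:[ \t]*{re.escape(image)}):\S+[ \t]*$", re.MULTILINE
    )
    new_text, count = pattern.subn(rf"\g<1>:{new_tag}", compose_text)
    if count == 0:
        raise ValueError(f"no 'image: {image}:<tag>' line found")
    return new_text


if __name__ == "__main__":
    compose = (
        "services:\n"
        "  homeassistant:\n"
        "    image: ghcr.io/home-assistant/home-assistant:2024.4.3\n"
        "    restart: unless-stopped\n"
    )
    # Roll back one patch release by pinning the previous tag.
    print(set_image_tag(compose, "ghcr.io/home-assistant/home-assistant", "2024.4.2"))
```

After writing the updated compose file you would still run `docker compose pull` and `docker compose up -d` yourself. Editing the text line by line (rather than parsing YAML) keeps the sketch dependency-free, at the cost of not handling quoted image names or trailing comments.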

I do the same. I have never had to roll back far enough to reach a version with an older database schema. Do you know if HA will detect an incompatible database structure and refuse to start? Recovering my PostgreSQL database is the longest process in my backup/recovery workflow, and I try to minimize doing recoveries of it.

Not really. What’s needed is more (beta) testers! HA is open source, and it is virtually impossible to build test systems for every possible configuration/setup.

Reality proves that most people just want stable software but don’t want to invest their own time in testing betas/RCs. This, along with the complexity and size of the project, leads to bugs in releases, which then require bug-fix releases.

If you had taken part in beta testing, the Roomba integration bug probably wouldn’t have shipped in stable.

Only Roomba owners (like you!) can do this testing!

I’ve only had it happen to me once, when I was still using sqlite3 as the DB engine: it spammed the log files with errors until it finally died. It was actually kinda funny to watch.

Just FYI, I’ve been REALLY tempted to leverage AWS Aurora as my DB engine eventually, especially with their blue/green deployment model.

File-based backups/restores are usually pretty quick on a quality SSD (I only use NVMe drives in my servers and 2.5" SSDs for longer-term storage on my NAS). I can usually restore 40 GB+ in less than 10 minutes (often MUCH faster than that). If I’m pulling from my NAS’s storage, it might take ~15-20 minutes MAX.

True, but that’s just the major release, not the smaller updates, and they don’t mention the OS updates at all.

Major updates have extensive release notes with a section for breaking changes. Every minor update links to the release notes, which then also holds a description of the fixes in that minor update. HAOS updates have links to notes too. I read them every time. What else could they possibly do?

You could have all the beta testers in the world, and issues would still find a way into a release. Please read my reply for a full explanation, based on real-world experience.

Even if you had way more people testing, these are volunteers with a varying amount of time available to test. Most of them would not think to try reinstalling the integration from scratch, going through all the config options and monitoring for stability each time any code potentially affecting their integration gets merged to beta.