I don’t see much in the roadmap that excites me, to be honest. The new voice hardware and z-wave stick are about the only things I can think of that I would use. Everything else seems like extra load on the hardware that I use that I don’t really ever plan to make use of.

After all, the perfect app is no app, as in, opening an app to control your light bulb is less convenient than simply flicking a light switch, or better yet, just automating it.

I get the point, but there are a lot of things in my home now that don’t have a dedicated physical switch; they are only accessible via Home Assistant. So I use the app. Also, many times you can be far away from that switch…

What my family really loves are our wall-mounted tablets. They show a Picture Elements card with almost everything in our home and backyard. We can control everything from there and also check all sensors at a glance with no need to read labels, since they sit at the correct position of the house on the floorplan. Which reminds me of something… What about simplifying this? It’s very painful to add new elements manually to the Picture Elements card, so how about drag & drop?

How many users use an iPad or Android tablet mounted on a wall with Home Assistant in kiosk mode? What interface do they use? How do they interact with it?

On the other hand, I’m really looking forward to seeing the new hardware so we can finally add voice control to our home; it’s the best interface for kids. I just need time to teach them that Alexa has changed her name to Okay Nabu…

For a better quality-of-life experience, can you please also add a “radio migration” feature to the Z-Wave integration (similar to what the Zigbee integration already has) to make migrations between different Z-Wave controller USB radio adapters easy and available from the UI?

It would be great if it were simple for anyone to migrate their Z-Wave network from an old Z-Wave USB radio dongle/stick to the new official Z-Wave hardware once it is released!

It would also be awesome to see more feature parity and a closer user experience in the UI when using both Z-Wave and Zigbee. Today both kind of work the same, but the user experience is very different; just look at adding and removing devices, for example.

From a user-experience point of view, it really shows today that it is not the same UI/frontend developers working on Z-Wave and Zigbee, but it would be much nicer if it at least looked and felt like the UI/frontends for those were designed by the same people.

Please make the UI/frontend user experience more cohesive and more uniform between Z-Wave and Zigbee, …and of course also Matter, which shares many similarities with both of those, QR-code scanning for inclusion being just one.

Hi everyone,

Since you promised to read every reply in this thread (no pressure!), I hope this message about accessibility will find its way to the right hands.

First of all, I’d like to express my sincere gratitude for another year of intensive development of Home Assistant. The ability to categorize automations, scenes, scripts, and other entities, the significant improvements in user experience when creating automations, integrations with AI services from OpenAI, Google, and Anthropic, and the efforts to create a true voice assistant for the home are all features that bring me great joy. I deeply appreciate the enormous amount of work put into these elements of the system.

However, I’d like to bring attention to some accessibility issues I’ve encountered with one of the new features in Home Assistant—the dashboards with sections. I’m currently using HA version 2024.11.2 (latest). As a blind user relying on a screen reader (NVDA in my case), I’ve noticed several significant problems when editing these new dashboards.

The interface is in Polish, but I’ve provided translations to English for clarity. Here’s what NVDA reads when I navigate the dashboard in edit mode using the down arrow key:

Edit user interface

Edit title

Done

Help

Open dashboard menu

Home

Add view

Add badge

Edit

Delete

(empty)

(empty)

(empty)

(empty)

Add card

Edit

Delete

(empty)

(empty)

(empty)

(empty)

Add card

Create section

In the above, lines where NVDA reads “puste” (which means “empty” in Polish) are indicated as “(empty)”.

Main issues:

- Sections are not announced by screen readers: NVDA does not read the names or indicate the presence of sections. This makes it impossible to identify or manage them effectively.

- Cards within sections are inaccessible: The screen reader doesn’t provide any details about the cards added to sections. It only announces generic options like “Add card,” “Edit,” or “Delete,” without any context about which card or section these actions pertain to.

- Similar problems with badges: The same issues occur with badges on the dashboard, making it difficult to interact with them.

- Lack of structure and hierarchy: There’s no logical hierarchy or landmarks in the interface for screen readers to follow. This results in a confusing experience where all elements seem equally important, complicating navigation and editing.

Potential solutions could include:

- Adding proper ARIA landmarks and roles to sections and cards

- Implementing semantic HTML structure with appropriate headings

- Providing context for action buttons (e.g., “Edit Section: Living Room” instead of just “Edit”)

- Ensuring proper focus management when adding/editing sections and cards
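To make the third suggestion above more concrete, here is a minimal Python sketch of how contextual labels and headings could change what a screen reader announces. This is only an illustrative model, not Home Assistant frontend code: the `Card` and `Section` classes and the `action_label` and `reading_order` functions are hypothetical names made up for this example, and the exact label wording is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    title: str


@dataclass
class Section:
    title: str
    cards: list[Card] = field(default_factory=list)


def action_label(action: str, kind: str, name: str) -> str:
    """Build a contextual accessible name, e.g. 'Edit Section: Living Room'."""
    return f"{action} {kind}: {name}"


def reading_order(sections: list[Section]) -> list[str]:
    """Return the lines a screen reader could announce, top to bottom, in edit mode.

    Hypothetical model: each section gets a heading, its action buttons name
    the section they act on, and each card is announced by its title.
    """
    lines: list[str] = []
    for section in sections:
        lines.append(f"Heading: {section.title}")
        lines.append(action_label("Edit", "Section", section.title))
        lines.append(action_label("Delete", "Section", section.title))
        for card in section.cards:
            lines.append(f"Card: {card.title}")
        lines.append(f"Add card to Section: {section.title}")
    lines.append("Create section")
    return lines


if __name__ == "__main__":
    dashboard = [
        Section("Living Room", [Card("Ceiling light"), Card("Thermostat")]),
        Section("Backyard"),
    ]
    for line in reading_order(dashboard):
        print(line)
```

Compared with the reading order quoted earlier, every announced line in this model carries its own context, so there are no “(empty)” entries and no bare “Edit” or “Delete” buttons.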

Voice Assistant Hardware Suggestion:

Also, as a blind user who heavily relies on voice control, I’m incredibly excited about the upcoming voice assistant hardware. I currently have 14 Google Nest Mini speakers throughout my house, and I can’t wait to replace them with Home Assistant’s solution. It would be amazing if you could consider offering a version with a form factor similar to Google Nest Mini, as I have them mounted in special ceiling brackets. The ability to simply swap them out without requiring significant modifications to the mounting solution would be a real game-changer. I also hope the sound quality will be at least as good as the Google Nest Mini. This would make the transition much smoother for users like me who have already invested in smart speaker infrastructure.

By addressing these accessibility issues and considering the form factor suggestions for voice hardware, Home Assistant would become even more accessible and user-friendly. Thank you again for all your hard work and for making Home Assistant such an amazing platform.

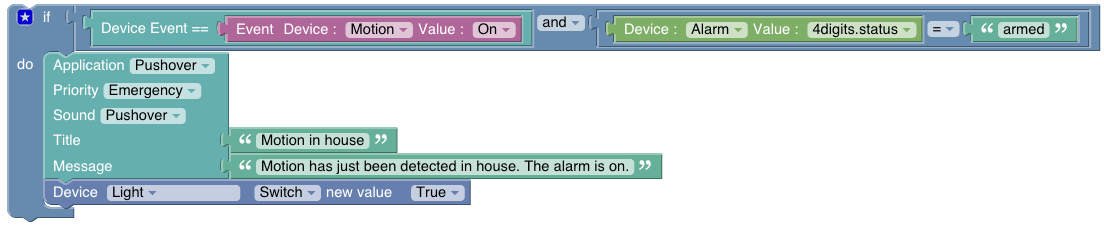

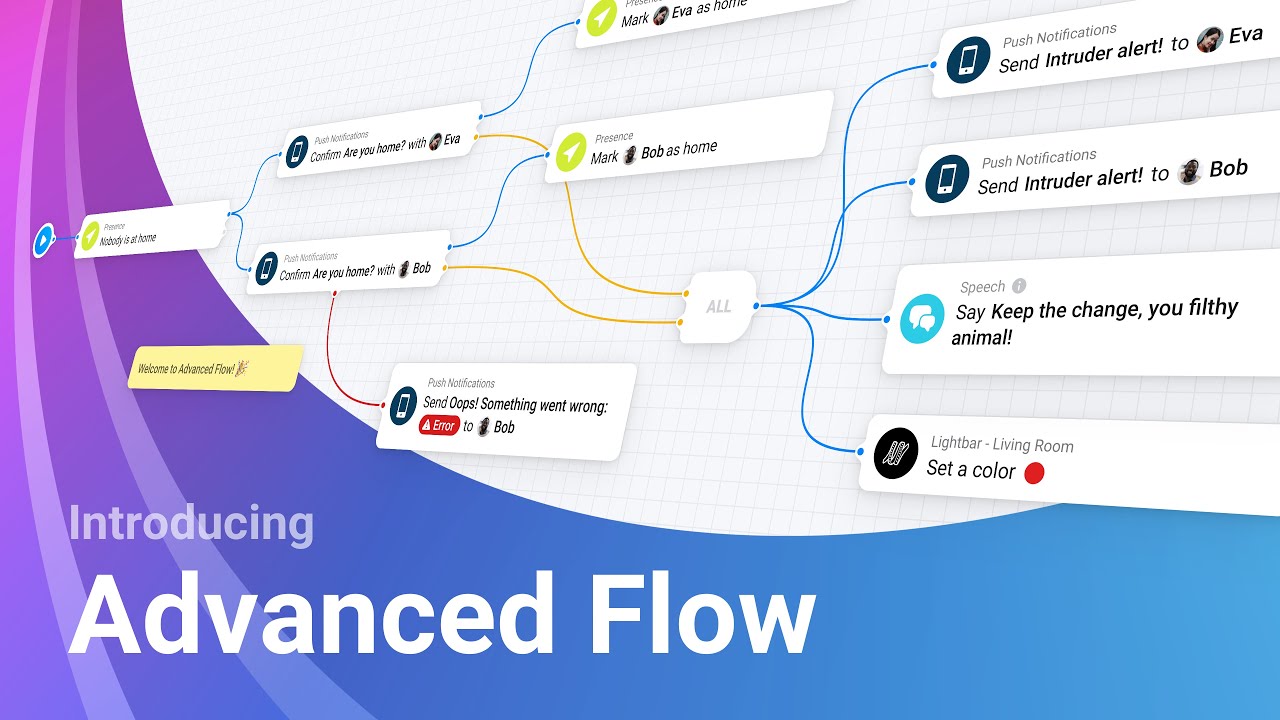

One huge request/idea: is there any chance of the lead developers looking into adding some kind of optional visual programming system (VPS) / visual programming language (VPL), also known as diagrammatic programming, graphical programming, or block coding? It could serve as an advanced flows option and an alternative to simple flows in the automation editor, similar to Google’s Blockly (the block style also seen in educational platforms such as Scratch and Snap!) or Node-RED.

That is, a block-style, flow-based visual editor UI for automations and scripts, using graphical blocks in a drag-and-drop frontend that is included by default in Home Assistant’s frontend interface.

As a proof of concept, Blockly-style visual programming is available in both OpenHAB and Domoticz (competing home automation platforms):

Advanced flows scripting in Homey (Homey Pro), a commercial home automation platform, has on the other hand implemented Node-RED-style visual programming:

A Blockly-style visual programming interface has been requested many times before:

Please also consider adding an ESP32-C6 or ESP32-H2 to the same PCB as another co-processor, one that can be more or less dedicated as a Thread Border Router (for Thread-based Matter devices) or as a Serial-over-IP remote Zigbee Coordinator. This follows the same concept as Espressif’s ESP Thread Border Router / Zigbee Gateway board reference hardware (which combines one ESP32-S3 with one ESP32-H2 for this purpose), which is also sold as both a Thread Border Router and a Zigbee Gateway development board.

Having an extra module with a Thread/Zigbee radio would allow users who have many of these devices to use a few as Thread Border Routers, one as a Zigbee Coordinator, and the rest as stand-by fail-over nodes to which the config can be restored, for redundancy and higher robustness. Depending on how many a user has, some could also be used as a Bluetooth Proxy (though it is not a good idea to run multi-protocol on the same microcontroller/radio, as most already know, so that is another excuse to buy many of them).

That second ESP32 would add more available GPIO pins, so it could also act as a multi-sensor (if given the ability to add temperature/humidity, mmWave presence, and light sensors, etc.) that would not directly take resources from the main ESP32 that runs the voice assistant.

I suggest using a concept similar to the two ESP32s in the ESP Thread Border Router / Zigbee Gateway board reference hardware, which combines an ESP32-S3 with an ESP32-H2 radio module:

I also have many variants of Google Home smart speakers and various Google Nest smart display models too, so it would be very nice to get a voice satellite model with similarly sized screens (one with a 7-inch screen and one with a 10-inch screen). It would be awesome if there could just be several drop-in replacement PCBs for the most common models.

A cool option would therefore be to be able to buy a ready-made, updated replacement custom PCB variant of Onju-Voice with an XMOS accelerator chip, for drop-in circuit board swapping in older and newer hardware generations of the Google Nest Mini (second generation with barrel-plug power supply) / Google Home Mini (first generation with Micro-USB power supply). Check out:

And the very much related request/discussion here:

Question about Assist. Having it all working locally is great, but tbh, the hardware for it is not the best yet. Seeing that the Sonos integration is 33rd based on usage (pretty high, considering there are preinstalled integrations such as Sun, Input boolean, Template…) means there are a lot of users with Sonos. I guess Sonos will not be keen on allowing more assistants to run on their speakers, but the question is: did the HA team at least try reaching out to them to check whether it’s possible to have HA Assist on Sonos speakers?

Home Assistant is developing great! I’m really happy that it focuses more on aesthetics and such. I love the possibilities to set things up in the UI. However, I hope the path for manual editing doesn’t get closed. Especially for bulk tasks, it is a real time saver!

My wishes, besides improvements: fewer breaking changes. I still sometimes get the impression that a breaking change is among the first options considered, not the last.

What I am wondering:

Who decides about such roadmaps? (Like said, I like what’s on display)

Who decides (maybe finally), which features get implemented, which not?

I remember e.g. a call for sharing our dashboards and wishes, though I couldn’t participate I really liked that.

But for other things, I missed that - or it didn’t happen.

Wouldn’t it be a good move, to let the community decide more, if they e.g. prefer focussing on voice input, or dashboard improvement?

And - who can get contacted for general, really general, questions?

I asked twice about the statistical data we can decide to share and never got a reply. Who should be contacted directly regarding such questions?

You guys are awesome. Thanks for all the great functionality that Home Assistant and its ecosystem provide!

I don’t get it (or maybe it’s that I do get it?), I’ll take ‘Okay Nabu’ over ‘Okay Google’ any day. I have my own wake word in mind, but I get that the back end has to be built first with consistent results… then you can integrate differing wake words (read: triggers). With the direction HA is going, it’s only a matter of time before I can say ‘Hey C-3PO, what’s up’ and get to hear a breakdown of what the day looks like.

Too many syllables. (Apart from that, I think you are spot on)

Hi, first off: thank you for writing this and putting effort into communication and clarity. Really appreciate it!

Question: Can someone please explain why HA devs put so much effort into the development of ZHA when Zigbee2MQTT seems more capable? Most reviews favor Z2M over ZHA, at least those that I have read. Wouldn’t it make sense to pool resources into only Z2M? I mean, the old Z-Wave implementation was dropped in favor of Z-Wave JS. You can always play the choice card, but since both are open source it feels unnecessary. What am I missing?

They only write/maintain one of those.

I just want to chime in that I’m also very much looking forward to the voice hardware. I can relate to much of what I’m reading about the choice of wake word; I really hope it will be easy to change or choose another one.

Yes, same with zwave js. That started external and grew past their own implementation so in time their own implementation was dropped and zwave js became the official one. Right?

Whether the maintainer of the integration WANTS to be in core is step 1. HA cannot go and suck up people’s projects… Besides the fact that they don’t have enough paid core devs to do everything already.

I’m fine with Z2M and Domo and all the other Zigbee integrations (I think there are at LEAST three now) being options.

I personally use Z2M myself and am fine with ZHA being the one of choice.

OpenZWave made way for Z-Wave JS, as far as I understand, because Z-Wave JS was actively being maintained and brought up to modern needs while OZW wasn’t, so there was a need…

Here you have two or more perfectly good ways and one is well maintained in Core and one is well maintained out of core.

Pick which works best for you.

I’ll just come out and say it. The voice hardware will sell no matter what. This very much feels like a vocal minority. Nobody liked Google, Alexa, or Siri at launch, and this isn’t any different. Sure, you can ask people and they will say they hate it. Hell, you get people complaining about “Hey” vs. “OK” after all these years. After a few voice commands that all goes away. Also, correct me if I’m wrong, but the team never said “no, not going to happen”, right? So what’s the problem, lol?

I think you’re making incorrect assumptions. I both have some concerns about how the wake word decision was made and will also buy several of the voice hardware devices.

Having just committed my first blueprint (I’d made some in the past for myself only), I can agree with the issue about making blueprints easier to use.

I think a major part of that is having some built-in way to package multiple elements in a blueprint. Some automations need to read and write data to helpers to work around the limited scope of variables within an automation, and you may want to split long code that you use often into separate scripts that can be called from the main automation with certain parameters/fields.

A framework that would allow such things within the blueprint YAML, or a way to package the helpers and scripts together with the blueprint, would make it easier to write and use blueprints IMO.