I have a situation where the page I want to scrape has JSON embedded in a script tag. The script tag has an id, so I can select it. The data is hockey statistics, so it is very large and has to go into an attribute rather than the state (a sensor state is limited to 255 characters). I would have thought I could do this in one step, but so far I have not been able to figure out the "secret".

What I have (note: I use includes, so this YAML is in a file called multiscrape.yaml):

```yaml
- name: SOS scraper
  resource: https://www.dailyfaceoff.com/nhl-weekly-schedule
  scan_interval: 36000
  sensor:
    - unique_id: hockey_strength_of_schedule
      name: Hockey Strength of Schedule
      select: '#__NEXT_DATA__'
      value_template: '{{ now() }}'
      attributes:
        - name: props
          select: '#__NEXT_DATA__'
          value_template: >
            {{ value.replace("'", '"') }}
```
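For readers unfamiliar with what `select: '#__NEXT_DATA__'` hands to the template, here is a rough stand-alone Python sketch of the same idea using only the standard library: it finds the element whose id is `__NEXT_DATA__` and returns its text, which is the raw JSON as a plain string. The `ScriptById` class, `select_text` function and sample HTML are hypothetical, and this does not claim to mirror how multiscrape works internally.

```python
from html.parser import HTMLParser


class ScriptById(HTMLParser):
    """Collect the text inside the element whose id matches target_id (hypothetical helper)."""

    def __init__(self, target_id: str) -> None:
        super().__init__()
        self.target_id = target_id
        self._inside = False
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Start collecting once we reach the element with the requested id
        if dict(attrs).get("id") == self.target_id:
            self._inside = True

    def handle_endtag(self, tag):
        # A <script> has no child elements, so the first end tag after it closes it
        if self._inside:
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.chunks.append(data)


def select_text(html: str, element_id: str) -> str:
    """Return the text content of the element with the given id."""
    parser = ScriptById(element_id)
    parser.feed(html)
    return "".join(parser.chunks)


# Hypothetical, heavily shortened page; the real page is far larger.
SAMPLE_HTML = (
    '<html><body>'
    '<script id="__NEXT_DATA__" type="application/json">'
    '{"props": {"pageProps": {"teams": ["BOS", "TOR"]}}}'
    '</script></body></html>'
)

raw = select_text(SAMPLE_HTML, "__NEXT_DATA__")
print(raw)  # the JSON text, still a plain string with double quotes
```

The point is that what the selector yields is already JSON text with double quotes; it only becomes a structured object once something parses it.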

I have tried everything to parse `value` using `from_json`, but the result just does not look or behave correctly. So, as shown above, I store the JSON string in an attribute on this sensor and then use a template to parse it, which works perfectly (again using includes, so this is in my template.yaml):

```yaml
###
### Hockey Weekly Schedule
###
- name: Hockey Weekly schedule
  unique_id: hockey_weekly_schedule
  state: "{{ now() }}"
  attributes:
    sos: "{{ (state_attr('sensor.hockey_strength_of_schedule','props') | from_json) }}"
```
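In plain Python terms, the workaround is a two-step round trip: the scraper keeps the JSON as text, and the template later parses that text, which is roughly what `from_json` does. The standard-library sketch below shows that, and also one risk of the `value.replace("'", '"')` step: JSON strings are always double-quoted, so on valid JSON the replace can only touch apostrophes inside string values, and any such apostrophe breaks parsing. The sample strings, the player name and the `parses_after_replace` helper are hypothetical.

```python
import json


def parses_after_replace(text: str) -> bool:
    """Return True if text is still valid JSON after replacing ' with " (hypothetical helper)."""
    try:
        json.loads(text.replace("'", '"'))
        return True
    except json.JSONDecodeError:
        return False


# Hypothetical attribute contents: valid JSON text, as scraped from the script tag.
stored = '{"teams": ["BOS", "TOR"], "note": "no apostrophes here"}'

# Step 2 of the workaround: parse the stored string into a real dict.
parsed = json.loads(stored)
print(type(parsed).__name__, parsed["teams"])

# With no apostrophes in the data, the replace changes nothing and parsing still works.
print(parses_after_replace(stored))

# An apostrophe inside a string value (hypothetical name) becomes an extra double
# quote, which ends the string early and makes the JSON invalid.
risky = '{"player": "Ryan O\'Reilly"}'
print(parses_after_replace(risky))
```

If the scraped text is already valid JSON, the replace is not needed for parsing, and leaving it out avoids that failure mode.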

This works perfectly, but I would have thought I could just use the `from_json` filter in the multiscrape config. When I do, even with the replace of `'` with `"`, it just returns the string with single quotes back in place. I am also confused about why `select` is needed twice, or maybe I just don't understand how it all works.
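One possible explanation for the single quotes, offered as an assumption rather than something confirmed from the multiscrape source: if the integration turns the rendered attribute template back into a plain string, a dict produced by `from_json` would be stringified with Python's `str()`, and Python writes dicts with single quotes. The standard-library sketch below shows that difference; `json.dumps` is the call that produces double-quoted JSON text. The sample dict is hypothetical.

```python
import json

# A small, hypothetical stand-in for what from_json would return.
data = {"team": "BOS", "games": 4}

# Python's own string form of a dict uses single quotes; this is not valid JSON.
as_python_str = str(data)
print(as_python_str)  # {'team': 'BOS', 'games': 4}

# json.dumps produces proper JSON text with double quotes.
as_json_text = json.dumps(data)
print(as_json_text)  # {"team": "BOS", "games": 4}

# The single-quoted form cannot be parsed back as JSON.
try:
    json.loads(as_python_str)
except json.JSONDecodeError as err:
    print("not JSON:", err.msg)
```

Under that assumption, the workaround succeeds because the multiscrape attribute only ever holds the JSON text, and the conversion to a structured value happens in the template sensor instead.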

What I mean is, if I use this:

```yaml
- name: SOS scraper test
  resource: https://www.dailyfaceoff.com/nhl-weekly-schedule
  scan_interval: 360000
  sensor:
    - unique_id: hockey_strength_of_schedule_test
      name: Hockey Strength of Schedule Test
      select: '#__NEXT_DATA__'
      value_template: '{{ now() }}'
      attributes:
        - name: sos
          select: '#__NEXT_DATA__'
          value_template: >
            {{ value.replace("'", '"') | from_json }}
```

I get this:

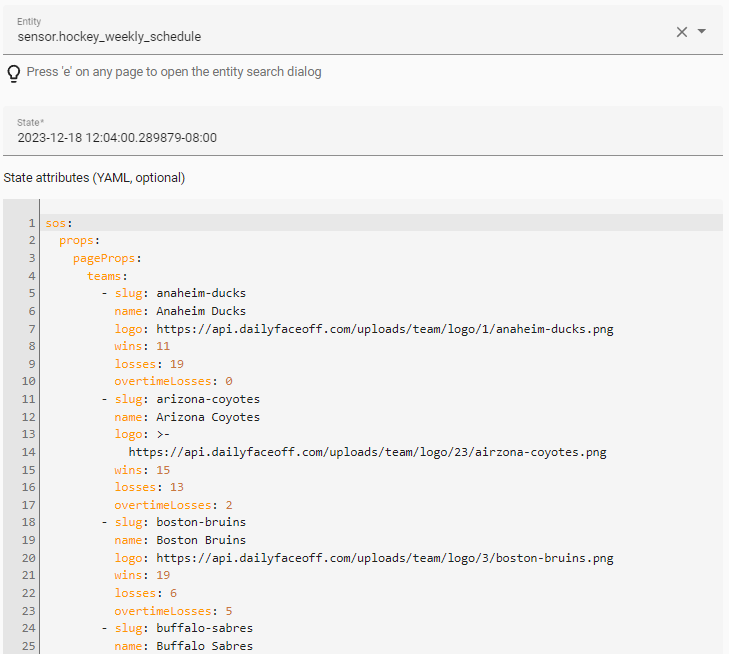

But for my workaround, I get this:

Any thoughts on what is wrong? The workaround is fine, but why have two sensors when one should do?