Using GPT-4o-Mini-Search-Preview with Voice Assist PE – Easy Workaround

This is a game changer for me. I found a very simple and effective way to integrate gpt-4o-mini-search-preview with my Voice Assist PE.

What can I do with it?

Voice Assist PE can now answer pretty much any question that needs current information, using a live web search. Pretty cool!

Examples:

- “How did Bayern play today?”

- “Is the cocktail bar Mojito open today?”

- “What’s the weather tomorrow?” (the location can be passed along in the API request)

To send a question to gpt-4o-mini-search-preview, you just start it with a specific trigger word or phrase.
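Conceptually, the trigger phrase works like a prefix match with a wildcard slot: everything after the phrase becomes the question. The Python sketch below only illustrates that idea. It is not Home Assistant's actual sentence matcher (which is more forgiving about punctuation and wording), and `TEMPLATES`, `template_to_regex` and `extract_prompt` are hypothetical names; the two templates are copied from the automation further down.

```python
import re
from typing import Optional

# Hypothetical copies of the two sentence templates used in the automation.
TEMPLATES = [
    "I have a question {websearch_prompt}",
    "Question {websearch_prompt}",
]


def template_to_regex(template: str):
    """Turn 'prefix {slot}' into a case-insensitive regex with a named group."""
    prefix, _, rest = template.partition("{")
    slot = rest.rstrip("}")
    # The trigger phrase must come first; at least one space, ':' or ','
    # must separate it from the question captured in the named group.
    return re.compile(
        rf"^\s*{re.escape(prefix.strip())}[\s:,]+(?P<{slot}>.+?)\s*$",
        re.IGNORECASE,
    )


PATTERNS = [template_to_regex(t) for t in TEMPLATES]


def extract_prompt(utterance: str) -> Optional[str]:
    """Return the captured question, or None if no trigger phrase matched."""
    for pattern in PATTERNS:
        match = pattern.match(utterance)
        if match:
            return match.group("websearch_prompt")
    return None


print(extract_prompt("Question: How did Bayern play today?"))
# → How did Bayern play today?
```

A sentence without a trigger phrase, such as “What’s the weather tomorrow?”, returns `None` here, which mirrors how those requests stay in the normal Assist pipeline.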

How it works:

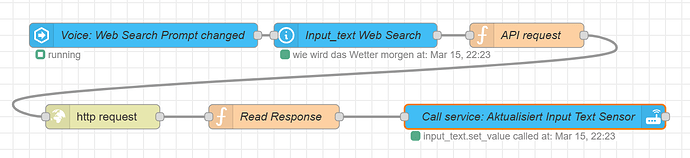

The core idea is simple: I set up a separate gpt-4o-mini-search-preview flow (a Home Assistant automation plus Node-RED) that runs outside the normal Assist pipeline.

- Define a trigger phrase, e.g.:
  - “I have a question: How did Bayern play today?”
  - “Question: How did Bayern play today?”
- A voice command starting with the trigger phrase fires an automation.
- The automation saves the prompt (everything after the trigger phrase) to a text helper (`input_text`).
- Node-RED reads the prompt and sends an OpenAI API request:
  - Model: gpt-4o-mini-search-preview
  - Location (if needed) is defined in the request
- Node-RED saves the response to a second text helper.
- The automation detects the updated response and continues.
- Voice Assist PE announces the response via TTS using `assist_satellite.announce`.
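The Node-RED part is built from nodes rather than code, so as an illustration only, here is a Python stand-in showing roughly what the request to OpenAI's Chat Completions endpoint could look like. The `web_search_options.user_location` structure follows OpenAI's web search documentation for the search-preview models as I understand it (check the current docs); the function names, the system instruction asking for short spoken answers, and the example location are assumptions, not part of the original flow. In Node-RED the same JSON body would go into an http request node or a function node.

```python
import json
import os
import urllib.request
from typing import Optional

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_payload(prompt: str, city: Optional[str] = None,
                  country: Optional[str] = None) -> dict:
    """Chat Completions body for the search model, with an optional location."""
    payload = {
        "model": "gpt-4o-mini-search-preview",
        "messages": [
            # Assumed instruction: keep answers short enough to be spoken aloud.
            {"role": "system",
             "content": "Answer in one or two short sentences suitable for text-to-speech."},
            {"role": "user", "content": prompt},
        ],
    }
    if city or country:
        approximate = {}
        if city:
            approximate["city"] = city
        if country:
            approximate["country"] = country  # two-letter ISO code, e.g. "DE"
        payload["web_search_options"] = {
            "user_location": {"type": "approximate", "approximate": approximate}
        }
    return payload


def extract_answer(response: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response."""
    return response["choices"][0]["message"]["content"].strip()


def ask(prompt: str, city: Optional[str] = None,
        country: Optional[str] = None) -> str:
    """Send the request; expects OPENAI_API_KEY in the environment."""
    request = urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(build_payload(prompt, city, country)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as resp:
        return extract_answer(json.load(resp))
```

For example, `ask("How did Bayern play today?", city="Munich", country="DE")` should return the model's answer as plain text, ready to be written back to the response helper. Leaving out the location simply omits `web_search_options`, which is enough for questions like the Bayern one.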

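Automation:

```yaml
alias: "Voice: Web Search"
description: ""
triggers:
  - trigger: conversation
    command:
      - I have a question {websearch_prompt}
      - Question {websearch_prompt}
conditions: []
actions:
  # Hand the spoken question over to Node-RED via a text helper
  - action: input_text.set_value
    metadata: {}
    data:
      value: "{{ trigger.slots.websearch_prompt }}"
    target:
      entity_id: input_text.voice_web_search_prompt
  # Wait until Node-RED writes the answer back to the response helper
  - wait_for_trigger:
      - trigger: state
        entity_id:
          - input_text.voice_web_search_prompt_response
    # Give up if no answer arrives, so the automation doesn't stay blocked
    timeout: "00:01:00"
    continue_on_timeout: false
  # Read the answer out loud on the Voice PE
  - action: assist_satellite.announce
    metadata: {}
    data:
      message: "{{ states('input_text.voice_web_search_prompt_response') }}"
    target:
      # Replace with your own Voice PE satellite entity
      entity_id: assist_satellite.home_assistant_voice_xxxx_assist_satellit
mode: single
```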
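One thing to watch with this setup: the answer travels through an `input_text` helper, and Home Assistant caps entity states at 255 characters (the helper's `max` option defaults to 100, so it is worth raising it to 255). A value longer than the helper allows is rejected, in which case the automation above just keeps waiting. The search model's answers can also contain markdown-style source links, which sound odd when read aloud. Below is a minimal Python sketch, assuming you clean the text in Node-RED right before writing it to `input_text.voice_web_search_prompt_response`; `prepare_for_tts` is a hypothetical helper, and in Node-RED you would port the same logic to a function node.

```python
import re

# Home Assistant caps entity states at 255 characters, so an input_text
# helper cannot hold more than that (its `max` option defaults to 100).
STATE_LIMIT = 255

# Markdown link: [label](url)
MD_LINK = re.compile(r"\[([^\]]*)\]\([^)]*\)")


def prepare_for_tts(answer: str, limit: int = STATE_LIMIT) -> str:
    """Replace markdown links with their label and trim to fit an input_text."""
    text = MD_LINK.sub(r"\1", answer)          # keep the link label, drop the URL
    text = re.sub(r"\s+", " ", text).strip()   # collapse newlines and runs of spaces
    if len(text) <= limit:
        return text
    # Prefer ending on the last complete sentence that fits within the limit.
    window = text[: limit + 1]
    end = max(window.rfind(". "), window.rfind("! "), window.rfind("? "))
    if end > 0:
        return text[: end + 1]
    # Otherwise cut at the last word boundary before the limit.
    return text[:limit].rsplit(" ", 1)[0]


print(prepare_for_tts("Bayern won 2-1 ([kicker.de](https://www.kicker.de/x))."))
# → Bayern won 2-1 (kicker.de).
```

Cutting at a sentence boundary keeps the announcement natural; the word-boundary fallback only kicks in when the first sentence alone is longer than the limit.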

Node-RED flow: