Make sure your assistant has a speech-to-text engine, otherwise you’ll only get keyboard input.

Does this only happen with area names?

It is using the phone. Every other feature works as normal.

Super!

Please add passkeys aswell this year.

For everybody’ safety.

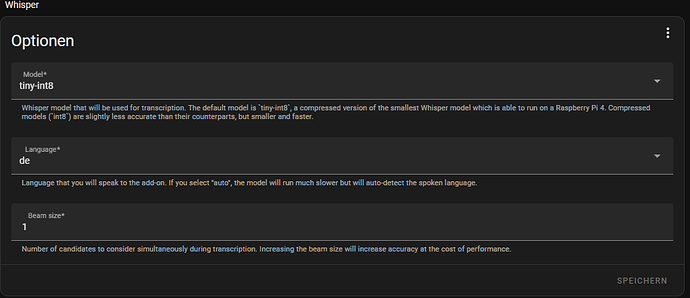

thanks man, I now installed both addons (whisper/piper). And the integration wyoming - mic icon shows up

I guess at the moment only english is possible. If you have a entity like: “Kinderzimmer Licht” + (cmd: on / off), it seems not to work properly.

Maybe you just have to pronounce it accordingly: Kindörsimmör Lickt

Has anyone seen the work by Justin Alvey @justLV on Twitter?

Basically replacing the pcb inside a nest mini with an esp32 s3 to setup his own voice assistant!

I would imagine it has everything needed for assist

I have not crunched the numbers, I am sure others have. An ESP to replace the brains of an existing smart speaker is a great idea even if it is not close to the capability of the original hardware. It is likely to be better than it would have been a year or two ago, and will likely get better over time with newer more capable hardware and software. I am pretty sure the hardware costs of the existing smart speaker is well in access of what they are actually sold for as the speaker is a conduit to provide the manufacturer more than a profit for the hardware. I have heard it said many times when it comes to smart speakers, you are not the consumer, you are the product. We have all seen the rise and fall of products that were produced to possibly upend the industry with a privacy focused, self hosted smart speaker. Then people are disappointed when it is not as economical or capable as those devices provide by the big suppliers. It has become obvious that the days may be numbered for the existing smart speaker market in its current form as many of the big companies are realizing significant losses in revenue in this area. I think the time is right for new approaches and hardware in this area, and I trust the will, knowledge, and desire of many will shift our outlook and expectations with smart speakers. I am excited to see the new ideas and the rapid pace that has been shown within the “Year of the voice”. It may not be able to do some of the things the current smart speaker may be able to do yet, but some of those things we likely don’t need and actually don’t want.

I’d like to see Bluetooth integration extended to support bluetooth headset profiles: A2DP (and aptX), AVRCP. There are plenty of smart speakers and other devices with speakers and microphones that could be extremely helpful in voice integration. Exposing raw messaging over a BT serial port profile (SPP) could also enable an entire class of integrations for bluetooth devices - like the various pixel speaker displays. Eventually it could be extended into protocol/device definitions like with the passive BLE sensors. And lots of it can be punted down to the OS layer with bluez.

It’s not directly related, but it’s close enough that I think it deserves attention.

For using piper/whisper locally, do you still need “https” to work?

Not when using Android.

I don’t get, why nobody is saying it: it is a browser limitation, not a HA limitation.

All modern browsers force you to use https, if you want to use the microphone of your computer, started from a website.

All other ways, like the companion app under Android do work with http (no ‘s’) while using the mic. If you don’t use a mic, you can work with http only.

I remembered someone connected Assist to his Google devices like a Google Home Mini, saying the phrase ‘Hey Google, Assist’ and then Assist could be used by voice via the Google servers. I am waiting for this function, is this still something that is going live sometime soon? Thanks in advance, just updated to Nabu Casa, keep up the good work!!

Sounds great, love these updates! I can’t wait for wake word support so I can replace my Nest speakers with ESP-32 or gut them and swap the brains out!

Great progress, thanks!

Unfortunately once you are not using English it is still mostly unusable for voice, only for text.

At least for my native language (Czech) the language model in Whisper is not good enough for regular usage.

So far I have tried only the base model which usually needs multiple very slow attempts for it to recognize even simple words. I can try medium-int8 (which is the best offered), and see if my HW will handle it and if it will improve anything.

A friend of mine reports the same for German. Running HA on a laptop , not a pi.

Is it possible, that aliases are not fully working?

I tested them recently in an automation using sentence wildcards and I always have to use the actual name, not the alias. Otherwise it does not work.

Is it possible in the voice intents to get the entity that received the speech? As I create Esphome based devices to listen for voice commands, I would want the command to behave differently based on the room it’s in. ‘Turn on the light’ should do different things based on what device I say it to.

So we need to subscribe to use it ?