I got the original code from Discord. I can’t locate the original message, but if I find it I will link it. A quick look on GitHub and I don’t see it there either. Things may have changed, but I still use the code I linked you to and it is working for me. Good luck!

And just after I posted, I found the GitHub link that I originally tried the code from.

Is anyone getting climate requests working? I have a Z2M TRV. Asking “set lounge radiator to 19” gets the response “Sorry, I didn’t understand that”.

Using an M5Stack Atom Echo.

Not a fix, but I was having the same issue with “ok nabu”. I switched to “hey rhasspy” and it works better.

Hi all,

I wanted to try the porcupine1 wake word engine, and I also generated a custom wake word on Picovoice.

It did a good job and I downloaded the .ppn file.

Is there a way to use this in the porcupine add-on?

Thanks,

Merc

Thanks will35.

I hadn’t found that one despite googling quite a bit.

Cheers,

Merc

I have a bunch of unused Android phones and tablets. They all have the HA Companion App installed.

It would be fantastic if the HA app supported wake word detection. Is that on the roadmap?

It’s in the works, per Mike’s earlier comment in this thread:

Not sure if I should start a new thread, but I have an existing ESP32 that was acting as a Bluetooth proxy, and I wanted to add voice detection to it, so I added the code below. It doesn’t have any errors (it compiles fine and the serial log shows no errors while running), but I don’t get any wake word response from it, so I’m not sure where to go next…

Code ‘borrowed’ from this forum.

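ESPHome code:

```yaml
# I2S bus for the external microphone
i2s_audio:
  - id: i2s_in
    i2s_lrclk_pin: GPIO19
    i2s_bclk_pin: GPIO18

# Standard (non-PDM) I2S microphone, data on GPIO23
microphone:
  - platform: i2s_audio
    adc_type: external
    pdm: false
    id: mic_i2s
    i2s_audio_id: i2s_in
    i2s_din_pin: GPIO23

voice_assistant:
  id: va
  microphone: mic_i2s
  noise_suppression_level: 2  # valid range 0 (off) to 4 (max)

# Switch exposed to Home Assistant to toggle continuous wake word listening.
# With the wake word enabled, the ESP streams audio to Home Assistant,
# which runs the wake word detection.
switch:
  - platform: template
    name: Use wake word
    id: use_wake_word
    optimistic: true
    restore_mode: RESTORE_DEFAULT_ON
    entity_category: config
    on_turn_on:
      - lambda: id(va).set_use_wake_word(true);
      - if:
          condition:
            not:
              - voice_assistant.is_running
          then:
            - voice_assistant.start_continuous
    on_turn_off:
      - voice_assistant.stop
      - lambda: id(va).set_use_wake_word(false);
```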

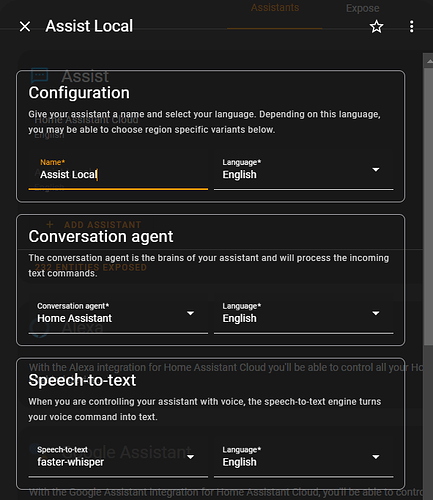

Voice Assist settings:

ESPHome logs:

```
[10:15:40][D][voice_assistant:529]: Event Type: 0
[10:15:40][D][voice_assistant:529]: Event Type: 2
[10:15:40][D][voice_assistant:619]: Assist Pipeline ended
[10:15:40][D][voice_assistant:422]: State changed from STREAMING_MICROPHONE to IDLE
[10:15:40][D][voice_assistant:428]: Desired state set to IDLE
[10:15:40][D][voice_assistant:422]: State changed from IDLE to START_PIPELINE
[10:15:40][D][voice_assistant:428]: Desired state set to START_MICROPHONE
[10:15:40][D][voice_assistant:206]: Requesting start…
[10:15:40][D][voice_assistant:422]: State changed from START_PIPELINE to STARTING_PIPELINE
[10:15:40][D][voice_assistant:443]: Client started, streaming microphone
[10:15:40][D][voice_assistant:422]: State changed from STARTING_PIPELINE to STREAMING_MICROPHONE
[10:15:40][D][voice_assistant:428]: Desired state set to STREAMING_MICROPHONE
[10:15:40][D][voice_assistant:529]: Event Type: 1
[10:15:41][D][voice_assistant:532]: Assist Pipeline running
[10:15:41][D][voice_assistant:529]: Event Type: 9
[10:15:46][D][voice_assistant:529]: Event Type: 0
[10:15:46][D][voice_assistant:529]: Event Type: 2
[10:15:46][D][voice_assistant:619]: Assist Pipeline ended
[10:15:46][D][voice_assistant:422]: State changed from STREAMING_MICROPHONE to IDLE
[10:15:46][D][voice_assistant:428]: Desired state set to IDLE
[10:15:46][D][voice_assistant:422]: State changed from IDLE to START_PIPELINE
[10:15:46][D][voice_assistant:428]: Desired state set to START_MICROPHONE
[10:15:46][D][voice_assistant:206]: Requesting start…
[10:15:46][D][voice_assistant:422]: State changed from START_PIPELINE to STARTING_PIPELINE
[10:15:46][D][voice_assistant:443]: Client started, streaming microphone
[10:15:46][D][voice_assistant:422]: State changed from STARTING_PIPELINE to STREAMING_MICROPHONE
[10:15:46][D][voice_assistant:428]: Desired state set to STREAMING_MICROPHONE
[10:15:46][D][voice_assistant:529]: Event Type: 1
[10:15:46][D][voice_assistant:532]: Assist Pipeline running
[10:15:46][D][voice_assistant:529]: Event Type: 9
[10:15:51][D][voice_assistant:529]: Event Type: 0
[10:15:51][D][voice_assistant:529]: Event Type: 2
[10:15:51][D][voice_assistant:619]: Assist Pipeline ended
[10:15:51][D][voice_assistant:422]: State changed from STREAMING_MICROPHONE to IDLE
[10:15:51][D][voice_assistant:428]: Desired state set to IDLE
[10:15:51][D][voice_assistant:422]: State changed from IDLE to START_PIPELINE
[10:15:51][D][voice_assistant:428]: Desired state set to START_MICROPHONE
[10:15:51][D][voice_assistant:206]: Requesting start…
[10:15:51][D][voice_assistant:422]: State changed from START_PIPELINE to STARTING_PIPELINE
[10:15:51][D][voice_assistant:443]: Client started, streaming microphone
[10:15:51][D][voice_assistant:422]: State changed from STARTING_PIPELINE to STREAMING_MICROPHONE
[10:15:51][D][voice_assistant:428]: Desired state set to STREAMING_MICROPHONE
[10:15:51][D][voice_assistant:529]: Event Type: 1
[10:15:51][D][voice_assistant:532]: Assist Pipeline running
[10:15:51][D][voice_assistant:529]: Event Type: 9
[10:15:56][D][voice_assistant:529]: Event Type: 0
[10:15:56][D][voice_assistant:529]: Event Type: 2
[10:15:56][D][voice_assistant:619]: Assist Pipeline ended
[10:15:56][D][voice_assistant:422]: State changed from STREAMING_MICROPHONE to IDLE
[10:15:56][D][voice_assistant:428]: Desired state set to IDLE
[10:15:56][D][voice_assistant:422]: State changed from IDLE to START_PIPELINE
[10:15:56][D][voice_assistant:428]: Desired state set to START_MICROPHONE
[10:15:56][D][voice_assistant:206]: Requesting start…
[10:15:56][D][voice_assistant:422]: State changed from START_PIPELINE to STARTING_PIPELINE
[10:15:56][D][voice_assistant:443]: Client started, streaming microphone
[10:15:56][D][voice_assistant:422]: State changed from STARTING_PIPELINE to STREAMING_MICROPHONE
[10:15:56][D][voice_assistant:428]: Desired state set to STREAMING_MICROPHONE
[10:15:56][D][voice_assistant:529]: Event Type: 1
[10:15:56][D][voice_assistant:532]: Assist Pipeline running
[10:15:56][D][voice_assistant:529]: Event Type: 9
[10:16:01][D][voice_assistant:529]: Event Type: 0
[10:16:01][D][voice_assistant:529]: Event Type: 2
[10:16:01][D][voice_assistant:619]: Assist Pipeline ended
[10:16:01][D][voice_assistant:422]: State changed from STREAMING_MICROPHONE to IDLE
[10:16:01][D][voice_assistant:428]: Desired state set to IDLE
[10:16:01][D][voice_assistant:422]: State changed from IDLE to START_PIPELINE
[10:16:01][D][voice_assistant:428]: Desired state set to START_MICROPHONE
[10:16:01][D][voice_assistant:206]: Requesting start…
[10:16:01][D][voice_assistant:422]: State changed from START_PIPELINE to STARTING_PIPELINE
[10:16:01][D][voice_assistant:443]: Client started, streaming microphone
[10:16:01][D][voice_assistant:422]: State changed from STARTING_PIPELINE to STREAMING_MICROPHONE
[10:16:01][D][voice_assistant:428]: Desired state set to STREAMING_MICROPHONE
[10:16:01][D][voice_assistant:529]: Event Type: 1
[10:16:01][D][voice_assistant:532]: Assist Pipeline running
[10:16:01][D][voice_assistant:529]: Event Type: 9
```

Any tips on what to test?

Currently the code on GitHub uses just a single part of the Espressif ADF and has the VAD set to maximum, which is the most restrictive setting, probably to avoid false detections.

There also seems to be little information on the initial input volume or AGC. An option to trigger a capture of the raw PCM, so you can take a quick look at it in something like Audacity, would tell you a lot, and a configurable VAD threshold would probably be a good idea too.
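To show what checking the input volume against a tunable threshold could look like, here is a rough Python sketch that measures each frame of raw PCM in dBFS and flags frames louder than an adjustable threshold. It assumes 16 kHz, 16-bit signed little-endian mono audio (an assumption, not something confirmed for this device), and the simple energy threshold in the hypothetical `speech_frames` helper is only a stand-in for illustration, not the Espressif ADF VAD.

```python
import math
import struct

# ASSUMPTION: 16 kHz, 16-bit signed little-endian, mono PCM.
SAMPLE_RATE = 16000
FRAME_MS = 30
SAMPLES_PER_FRAME = SAMPLE_RATE * FRAME_MS // 1000  # 480 samples per 30 ms frame


def frame_dbfs(frame: bytes) -> float:
    """Return the RMS level of one PCM frame in dBFS (0 dBFS = full scale)."""
    count = len(frame) // 2
    if count == 0:
        return float("-inf")
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    rms = math.sqrt(sum(s * s for s in samples) / count)
    if rms == 0:
        return float("-inf")  # digital silence
    return 20 * math.log10(rms / 32768)


def speech_frames(pcm: bytes, threshold_dbfs: float = -40.0) -> list[bool]:
    """Hypothetical energy-threshold VAD: True for each full frame above threshold_dbfs.

    A simple stand-in to illustrate a configurable threshold; NOT the Espressif ADF VAD.
    """
    frame_bytes = SAMPLES_PER_FRAME * 2
    return [
        frame_dbfs(pcm[i : i + frame_bytes]) > threshold_dbfs
        for i in range(0, len(pcm) - frame_bytes + 1, frame_bytes)
    ]


if __name__ == "__main__":
    silence = bytes(SAMPLES_PER_FRAME * 2)
    tone = struct.pack(f"<{SAMPLES_PER_FRAME}h", *([8000, -8000] * (SAMPLES_PER_FRAME // 2)))
    print(round(frame_dbfs(tone), 1))       # -12.2
    print(speech_frames(silence + tone))    # [False, True]
```

Comparing the dBFS values from a capture of your room's background noise against a capture of you speaking would show whether the mic level is sensible before worrying about where the VAD threshold sits.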

I guess you could search for “Event Type: 2”, as it must be documented somewhere, but you will probably have to trawl through the code.
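If it saves some trawling: the numbers appear to come from the `VoiceAssistantEvent` enum in ESPHome's `api.proto`. The mapping below is my reading of that enum and should be checked against the ESPHome version you are running; `decode_events` is a hypothetical helper for annotating a pasted log, not part of ESPHome.

```python
import re

# ASSUMPTION: values as I understand ESPHome's VoiceAssistantEvent enum (api.proto).
# Verify against your ESPHome version; higher values are omitted here.
VOICE_ASSISTANT_EVENTS = {
    0: "ERROR",
    1: "RUN_START",
    2: "RUN_END",
    3: "STT_START",
    4: "STT_END",
    5: "INTENT_START",
    6: "INTENT_END",
    7: "TTS_START",
    8: "TTS_END",
    9: "WAKE_WORD_START",
    10: "WAKE_WORD_END",
}

# Matches lines like "[10:15:40][D][voice_assistant:529]: Event Type: 0"
EVENT_RE = re.compile(r"^\[(?P<time>[\d:]+)\].*Event Type: (?P<type>\d+)")


def decode_events(log_text: str) -> list[tuple[str, str]]:
    """Return (timestamp, event name) for every 'Event Type: N' line in a log."""
    events = []
    for line in log_text.splitlines():
        match = EVENT_RE.match(line.strip())
        if match:
            code = int(match.group("type"))
            events.append((match.group("time"), VOICE_ASSISTANT_EVENTS.get(code, f"UNKNOWN({code})")))
    return events


if __name__ == "__main__":
    sample = """[10:15:40][D][voice_assistant:529]: Event Type: 1
[10:15:41][D][voice_assistant:532]: Assist Pipeline running
[10:15:41][D][voice_assistant:529]: Event Type: 9
[10:15:46][D][voice_assistant:529]: Event Type: 0
[10:15:46][D][voice_assistant:529]: Event Type: 2"""
    for time, name in decode_events(sample):
        print(time, name)
```

If that mapping holds, each cycle in the log above reads RUN_START, WAKE_WORD_START, then about five seconds later ERROR and RUN_END, which would suggest the pipeline is erroring out during the wake word stage rather than never starting.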

I know of this software but how do you suggest using it in this instance?

Dunno, as it’s often an omission, but an option to record to file is a godsend for debugging, for setup (getting optimal volumes) and for testing.

As in record to file using the ESP? No idea how I would do that…

Nope, you’re getting events, so what audio are you receiving?

But a websockets-to-ALSA source, or an Android app, to actually test the equipment during setup would also be wise.

The events are coming through just during ambient background sound. Where do I see/hear what the ESP is actually picking up?

Well, googling ALSA didn’t help me (just a heap of Australian companies using that acronym). Sorry, can you please explain?

https://wiki.archlinux.org/title/Advanced_Linux_Sound_Architecture

Or PortAudio, which is a cross-platform audio library.

Currently you can hack it: the input to the KWS is a chunked raw audio stream, so at that point in the code you can pass it to stdout and pipe it into aplay on Linux, or save it as a file.
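A rough sketch of that hack in Python: given an iterable of raw PCM chunks from wherever you tap the stream, write them either to a WAV file you can open in Audacity, or raw to stdout for aplay. The 16 kHz / 16-bit / mono format is an assumption, and `save_chunks_as_wav`, `dump_chunks_to_stdout` and `tap.py` are hypothetical names used only for illustration.

```python
import sys
import wave
from collections.abc import Iterable

# ASSUMPTION: the tapped stream is 16 kHz, 16-bit signed little-endian, mono.
SAMPLE_RATE = 16000
SAMPLE_WIDTH = 2  # bytes per sample
CHANNELS = 1


def save_chunks_as_wav(chunks: Iterable[bytes], path: str) -> int:
    """Write raw PCM chunks into a WAV file that Audacity can open.

    Returns the number of audio frames in the file, read back as a sanity check.
    """
    with wave.open(path, "wb") as wav:
        wav.setnchannels(CHANNELS)
        wav.setsampwidth(SAMPLE_WIDTH)
        wav.setframerate(SAMPLE_RATE)
        for chunk in chunks:
            wav.writeframes(chunk)
    with wave.open(path, "rb") as wav:
        return wav.getnframes()


def dump_chunks_to_stdout(chunks: Iterable[bytes]) -> None:
    """Write raw PCM to stdout, e.g.:

    python tap.py | aplay -t raw -r 16000 -f S16_LE -c 1
    """
    for chunk in chunks:
        sys.stdout.buffer.write(chunk)
    sys.stdout.buffer.flush()
```

The file version gets a WAV header only so that Audacity opens it without asking about the format (raw data can also be loaded via Audacity's raw import); the stdout version stays raw, so aplay needs the format spelled out on the command line.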

I guess it really needs development, as it doesn’t currently have a setup app or debug output, as far as I know (I’m not a user).

Seems extremely complicated…

Until it’s added, it likely needs a smattering of Python, apols.

Do that as is there is a debug