Yes, or at least it’s not creating them with my config.

You are right, it looks like NAT traversal might be a separate issue. I can see the stream when running in Docker with host network access. I will dig more and update here if I find a solution, thanks.

OK, it was way easier than I thought. I enabled the integrated go2rtc web UI in the HA config and added the doorbell as a Generic Camera in the integrations panel. go2rtc now shows:

…and I have the entity.

The doorbell now lights up, but I can’t hear myself. I’m accessing HA via a domain with a Let’s Encrypt certificate. Any idea what’s wrong?

Edit: modifying the go2rtc config to include #audio=opus#audio=copy didn’t help.

I would say that is an issue with ffmpeg. Check the original post to make sure the ffmpeg command is exactly the same. I see you have a bad query parameter at the end.

browser-mod is only used to create the popups. This is using the default picture card (for the first popup, without 2-way audio) and the webrtc card (for the second popup, with 2-way audio).

I’m not sure whether the card you shared provides 2-way audio, but you could modify the existing blueprint code to use it.

Ok, so as @victorigualada suggested, my issue was networking. HA is running in a Docker container with a limited set of exposed ports. go2rtc needs to run either with a large range of UDP ports exposed or with network_mode: host.

The only solution I was able to find is to run go2rtc in a separate Docker container with `network_mode: host`, and with this in the HA config:

go2rtc:
  url: http://172.17.0.1:1984

This go2rtc can be a standalone instance or the one bundled with Frigate. With a standalone one, it’s not possible to use custom:advanced-camera-card since it doesn’t create entities, but you can still use custom:webrtc-camera as in this blueprint. Just remove the webrtc integration and set the correct go2rtc IP when adding it back.

To make it work outside my network, I also had to forward the WebRTC port (8555) to my server and add this to the go2rtc config:

webrtc:
  candidates:
    - stun:8555 # if you have dynamic public IP-address
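After forwarding port 8555, it can help to confirm from outside the LAN (for example from a laptop on a phone hotspot) that the port actually accepts connections. Below is a minimal Python sketch of such a check. It assumes go2rtc is listening on TCP 8555 (its WebRTC listener typically covers both TCP and UDP on that port); it only tests TCP, since UDP is connectionless and cannot be verified this way. The function name and the command-line usage are hypothetical.

```python
import socket


def port_accepts_tcp(host: str, port: int = 8555, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Only TCP is tested; the UDP side of the WebRTC port cannot be checked this way.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, host unreachable, or DNS failure
        return False


if __name__ == "__main__":
    import sys

    # Hypothetical usage: python check_port.py my.public.hostname
    target = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    print(port_accepts_tcp(target))
```

Running it from inside the same LAN may give misleading results if the router does not support hairpin NAT, so testing from an external network is the more reliable signal.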

The blueprint’s custom:webrtc-camera defaulted to MSE mode, so I had to add mode: webrtc. Thanks @victorigualada

Hi everyone,

I’m not an expert, but I recently bought a Reolink WiFi doorbell after reading this post. It seemed like the perfect solution I had been looking for for years.

After spending a whole day working on the integration, I was able to see the video and receive a popup notification on my Home Assistant device when the doorbell rings, along with hearing what is said on the doorbell. However, I couldn’t find any way to talk back from my device to the doorbell.

I tried several configurations, and after all that, my good friend ChatGPT explained the following to me:

"This doorbell does NOT support two-way audio (talkback) via RTSP, ONVIF, or HTTP.

You can only send audio to it using the official Reolink app or the Reolink Client for Windows.

This is because Reolink uses a proprietary protocol for the audio return channel (talkback).

Although it exposes an RTSP stream for incoming video and audio, it does not provide an RTSP channel for outgoing audio."

If anyone has managed to talk to the doorbell, a detailed explanation of how it was done would be greatly appreciated.

That seems to me like an LLM hallucination.

Is the device you are showing the popup on connected to the HA instance through HTTPS? That’s required in order to use the device’s microphone.

Established a connection through Nabu Casa.

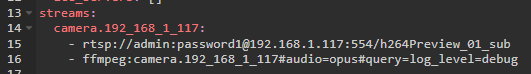

Ok, I managed to get two-way audio working with the following configuration in go2rtc, but the delay is high and the audio cuts out after the first word.

- rtsp://xxx:<password>@<doorbell-ip>:554/h264Preview_01_sub
- ffmpeg:rtsp://xxx:<password>@<doorbell-ip>:554/h264Preview_01_sub#audio=opus

Well, ChatGPT was partly wrong: it is possible to send audio to the doorbell, but the results are still not good enough for me to install the device at home.

I’d suggest not trusting any LLM with information analysis at that level.

About your configuration:

- You should be able to use `ffmpeg:camera.2way_audio_doorbell#audio=opus#audio=copy`, where `camera.2way_audio_doorbell` is the name of your stream, as in the example.
- You are not using `audio=copy`, which tells `ffmpeg` to preserve the original audio without re-encoding it. That is faster, and its absence might be why you experience the delay, especially on a slow server like a Raspberry Pi.
- I see you have a WiFi doorbell, so make sure it has a good connection.
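Typos in these source strings (a missing `#`, or `fmpeg:` instead of `ffmpeg:`) are easy to make by hand. As an illustration only, here is a small Python helper that builds the `ffmpeg:` source string from the stream name, mirroring the `#audio=opus#audio=copy` pattern used in this thread. The helper name and its defaults are hypothetical, not part of go2rtc or the blueprint.

```python
def ffmpeg_source(stream_name: str, audio_codecs: tuple[str, ...] = ("opus", "copy")) -> str:
    """Build a go2rtc ffmpeg source string such as
    'ffmpeg:camera.2way_audio_doorbell#audio=opus#audio=copy'.

    Each codec becomes one '#audio=<codec>' fragment, appended in order.
    """
    if not stream_name:
        raise ValueError("stream_name must not be empty")
    params = "".join(f"#audio={codec}" for codec in audio_codecs)
    return f"ffmpeg:{stream_name}{params}"


if __name__ == "__main__":
    # Uses the stream name from the blueprint example
    print(ffmpeg_source("camera.2way_audio_doorbell"))
```

The printed value can be pasted as the second entry under the stream in the go2rtc `streams:` config.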

ChatGPT was not just “partly wrong”; it was 100% wrong about all the claims you posted. I would suggest not trusting it for anything you are not ready to verify manually.

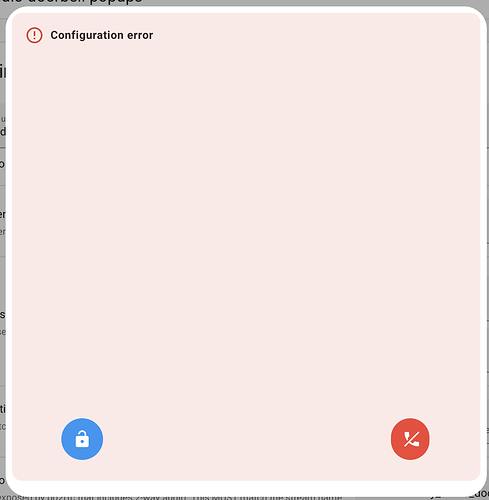

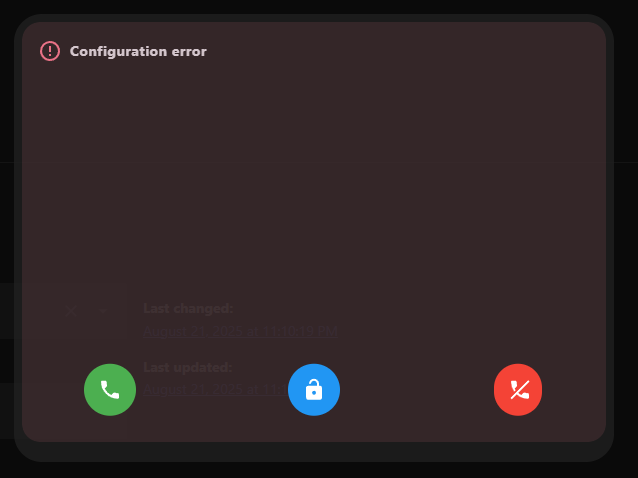

Has anybody been able to get this working with a Unifi Protect G4 Doorbell? I’m able to view the feed, but when accepting the call I get a configuration error on the second popup.

Thanks!

This was already reported in another issue, but the reporter stopped responding, so there was no way to dig deeper.

I would say that this is a problem with the go2rtc stream. If you are using Docker you should check a few comments above on how @RipperChile fixed it.

If you are not, check that the stream name matches the one in the blueprint.

How is your experience with two-way audio in the Frigate setup?
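One way to compare the names is to ask go2rtc which streams it knows about. The Python sketch below assumes go2rtc's HTTP API exposes `GET /api/streams` returning a JSON object keyed by stream name, and reuses the `http://172.17.0.1:1984` address from the HA config earlier in this thread as a default; adjust both to your setup. The function names are hypothetical.

```python
import json
import urllib.request

# Assumed go2rtc API address (taken from the HA config earlier in the thread)
GO2RTC_URL = "http://172.17.0.1:1984"


def fetch_stream_names(base_url: str = GO2RTC_URL, timeout: float = 5.0) -> set[str]:
    """Return the stream names reported by go2rtc.

    Assumes GET /api/streams returns a JSON object whose keys are stream names.
    """
    with urllib.request.urlopen(f"{base_url}/api/streams", timeout=timeout) as resp:
        data = json.load(resp)
    return set(data.keys())


def missing_streams(available: set[str], expected: list[str]) -> list[str]:
    """Return the expected names (e.g. from the blueprint) that go2rtc does not know about."""
    return [name for name in expected if name not in available]


if __name__ == "__main__":
    names = fetch_stream_names()
    # An empty list means every stream the blueprint expects exists in go2rtc
    print(missing_streams(names, ["camera.2way_audio_doorbell"]))
```

Stream names are compared exactly, so a name that differs only by a prefix or a typo (for example `2way_audio_doorbell` vs `camera.2way_audio_doorbell`) is reported as missing.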

Amazing solution! Regarding the gate entity, how would I go about setting this up for a Nuki lock?

I have the same issue with a Reolink. Did you manage to fix it?

Not yet; I gave up for now. I heard there might be some PRs open in Home Assistant to support two-way audio natively. That may or may not work, however, as I’m not sure the Unifi streams support it.

This guide focuses on the Reolink doorbell, so for Unifi you would have to adapt the RTSP stream defined in go2rtc.

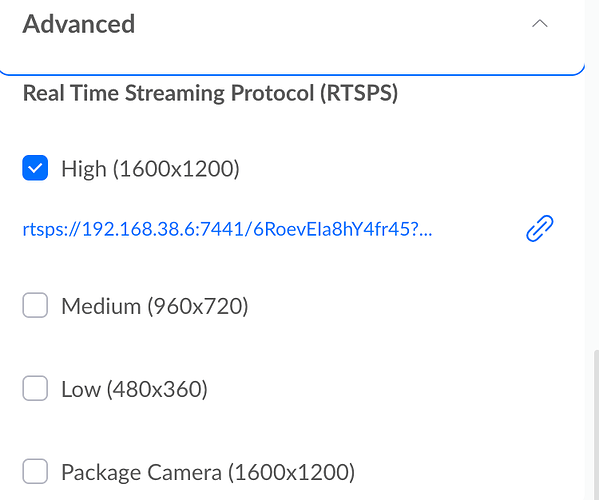

There are a few posts about this. One states the following:

In Protect, go to Unifi Devices > [camera] > Settings > Advanced and enable the desired stream resolution. Protect will present you with an RTSPS URL to copy. To convert the RTSPS URL to the available RTSP URL: 1) change the protocol from “rtsps” to “rtsp”; 2) change the port from “7441” to “7447”; 3) delete the “?” and everything that follows.

e.g.

From: rtsps://192.168.1.2:7441/irwurnfayer?enableSrtp

To: rtsp://192.168.1.2:7447/irwurnfayer
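The three conversion steps quoted above are mechanical, so they can be sketched in a few lines of Python using only the standard library. This is an illustration under the assumption that the URL Protect gives you has the shape shown in the example; the function name is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def rtsps_to_rtsp(url: str) -> str:
    """Apply the quoted steps: 1) rtsps -> rtsp, 2) port 7441 -> 7447,
    3) drop the '?' and everything after it."""
    parts = urlsplit(url)
    if parts.scheme != "rtsps":
        raise ValueError(f"expected an rtsps:// URL, got {url!r}")
    netloc = parts.netloc
    if parts.port == 7441:
        # Replace only the trailing port, keeping the host part untouched
        netloc = netloc.rsplit(":", 1)[0] + ":7447"
    # Empty query and fragment: removes '?enableSrtp' and anything after it
    return urlunsplit(("rtsp", netloc, parts.path, "", ""))


if __name__ == "__main__":
    print(rtsps_to_rtsp("rtsps://192.168.1.2:7441/irwurnfayer?enableSrtp"))
```

With the example URL from the post, this produces `rtsp://192.168.1.2:7447/irwurnfayer`, matching the “To:” line.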

This is an image I found online:

So:

- You need to go to the Unifi phone app (or web UI, not sure) and find the `RTSPS` stream URL.
- Copy that URL and follow the above steps to get the `RTSP` URL instead of the `RTSPS` one.
- Edit the `go2rtc` config and replace the `streams` entry with the corrected URL:

streams:
  camera.2way_audio_doorbell:
    - rtsp://192.168.1.2:7447/irwurnfayer # replace this line with the URL obtained above
    - ffmpeg:camera.2way_audio_doorbell#audio=opus#audio=copy

This might need some tweaking. I can’t test this as I don’t own a G4 Unifi doorbell.

I am struggling to get this to work.

I fixed the first big issue with the listen :8555/tcp setting.

The new issue is with the camera stream shown before selecting Accept or Ignore.

I am using the fluent camera stream: camera.reolink_doorbell_fluent

Any ideas?

This blueprint doesn’t work in any way. Waste of time.

I got the same error, and still can’t get the two way audio after I pick up.

I’m using the Fully Kiosk browser on a wall-mounted tablet. If I use my local HA IP with an http address, I get the first popup with the error and the stream without 2-way audio. If I use the Nabu Casa https URL, the popup doesn’t appear at all. So I think I have some problem with the https setup, but I’m not entirely sure what else I should do.