This is here in case somebody else stumbles upon this problem in the future. It took me two days to find a solution for this very simple and common use case: setting the temperature of a climate device via Assist and the OpenAI integration.

My initial situation and my problem

- I have Assist set up with the OpenAI integration for conversation, using gpt-4o and gpt-4o-mini.

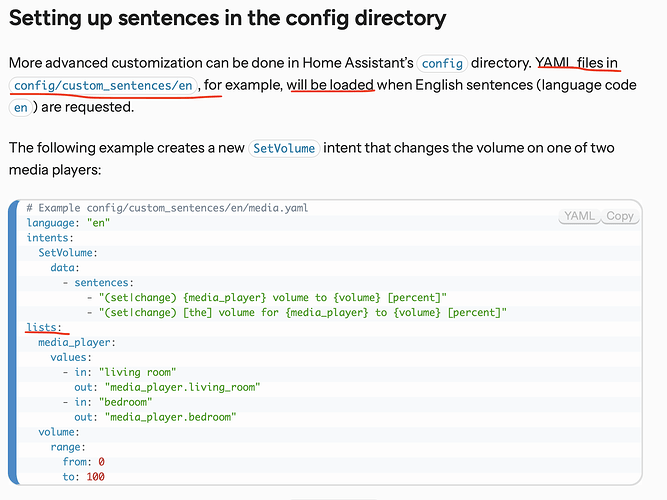

- There is documentation on how to use custom_sentences, but it doesn't apply to the OpenAI integration, since that integration doesn't use custom sentences.

- Instead, it acts directly on intents. There is also documentation about intents: the built-in intents, the intent integration docs, and the intent repository.

- About the intents:

  - Built-in intents work fine; the OpenAI model can access all built-in intents correctly.

  - But when I create custom intents, the model cannot pass parameters to them correctly. Example:

In configuration.yaml, following the intent docs:

```yaml
intent_script:
  ClimateAreaSetTemperature:
    description: "Set the temperature for the specified area"
    action:
      - service: climate.set_temperature
        data:
          area_id: "{{area}}"
          temperature: "{{temp}}"
    speech:
      text: "The temperature for {{area}} has been set to {{temp}} degrees."
```

The error I get is:

```json
{
  "error": "MultipleInvalid",
  "error_text": "not a valid value @ data['temp']"
}
```

I debugged this in great detail, tried endless variations, and made sure that the model calls the intent correctly. If you don't pass parameters, the intent executes. As soon as you pass parameters, you always get errors.

The Assist chat is actually a good place to debug this together with the model. It uses the "tool_uses" function to make function calls to Home Assistant, and it can list every function it is able to call. All the built-in intents are listed, and so is your custom intent, together with the parameters it expects. It can also tell you exactly what it did. Here is an example from my chat with it:

Here is the function call I made along with the error received:

### Function Call:

```json
{
  "name": "light.freddy",
  "brightness_pct": 30
}
```

### Error:

```json
{
  "error": "MultipleInvalid",
  "error_text": "not a valid value @ data['brightness_pct']"
}
```

Summary: custom intents with OpenAI only work if you don't need to pass parameters to the intent. Otherwise you always get the MultipleInvalid error, no matter how you pass the parameter.

My solution to this

Finally, I found out that the model has access not only to the intents but also to the scripts available in Home Assistant. With scripts, parameter passing works perfectly.

- Simply create a script and expose it to Assist.

- Give it a good description so that the GPT model knows how to use it.

Example of a script that the OpenAI model understands well:

```yaml
description: >-
  A script to set the temperature for a specific climate device via its
  entity_id. Use this if the temperature should be set and figure out the
  correct entity_id from the available entities.
fields:
  entityid:
    description: The entity id that should be used.
    example: thermo_livingroom
  temperature:
    description: The temperature between 0 and 30 that should be set for the area.
    example: 20
sequence:
  - action: climate.set_temperature
    metadata: {}
    data:
      temperature: "{{ temperature }}"
    target:
      entity_id: climate.{{ entityid }}
alias: set_temperature_entity
icon: mdi:oil-temperature
```
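Script fields also accept `required` and `selector` options. As an untested refinement, the `fields` block of the script above could state the expected types and the 0 to 30 range explicitly. Whether the selector limits actually reach the model depends on how your Home Assistant version exposes script fields to the LLM, so treat this as a sketch rather than a required change.

```yaml
fields:
  entityid:
    # Only the object id is expected; the script prepends "climate." itself.
    description: The entity id (without the "climate." prefix) that should be used.
    example: thermo_livingroom
    required: true
    selector:
      text:
  temperature:
    description: The temperature between 0 and 30 that should be set.
    example: 20
    required: true
    # Range taken from the description in the original script.
    selector:
      number:
        min: 0
        max: 30
```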

Thus, when you prompt the model in the Assist chat, it gets:

- A list of all entities exposed to it

- A list of function calls it can make, built from:

  - the available built-in and custom intents

  - the available scripts

- The prompt template you provide in the OpenAI integration configuration

With all this information, it decides which function call to make.

And thanks to the script, parameters are passed successfully.
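Before involving the model, it can help to check that the script itself works by calling it manually, for example from Developer Tools > Actions in YAML mode. This sketch assumes the script's entity id is `script.set_temperature_entity` (the UI usually derives it from the alias, but check yours) and that `climate.thermo_livingroom` exists, which is just the example value from the script's fields.

```yaml
# Same parameters the model is expected to pass.
action: script.set_temperature_entity
data:
  entityid: thermo_livingroom
  temperature: 20
```

If this call sets the temperature, any remaining problem lies in how the model picks the parameters, which the script description and prompt template can steer.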

My prompt template

Here is my prompt template. It also makes it possible to execute multiple function calls to Home Assistant from a single prompt, by avoiding the multi_tool_use.parallel function, which currently doesn't work correctly with Home Assistant.

> You are an assistant for Home Assistant. You help users control devices or perform custom actions by invoking intents or scripts with the correct parameters.
>
> When setting parameters, always be very careful to check the entities that are available and select the correct one with the correct formatting. When the user mentions areas but an entity is required for the function call, figure out the correct entity for that area.
>
> Important: you can only do one function call via "tool_uses" at once. NEVER USE "multi_tool_use.parallel". If you need to do multiple function calls, make them one after another via "tool_uses" and wait for each to execute before proceeding with the next one.

Summary

If you want to use OpenAI together with Home Assistant, simply install the OpenAI integration and expose the devices and entities you want it to access. It supports default actions like turning on lights out of the box. For anything that is not built in, don't use custom intents; use scripts. Create scripts, expose them to Assist, and the GPT model will be able to use them and decide on its own when to call them. Now nothing can stop you from setting up your perfect AI voice assistant that does whatever is possible via natural language with GPT and Home Assistant.

Edit

Executing a script always returns success: true as the response, so the assistant does not know whether the function call actually succeeded. The only way I found to get some kind of response is to have the script write a result to a helper (an input_text), which you use for all function calls:

Example of an action in a script:

```yaml
- action: input_text.set_value
  data:
    value: Ok this was a test
  target:
    entity_id: input_text.result_output
```
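The `input_text.result_output` helper has to exist first. You can create a text helper in the UI under Settings > Devices & services > Helpers, or define it in configuration.yaml roughly like this. The `name` is arbitrary; `max: 255` is the largest length an input_text allows (the default is 100), which leaves more room for longer result messages.

```yaml
input_text:
  result_output:
    name: Result output
    # Highest value input_text accepts; default would be 100 characters.
    max: 255
```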

Then you need a custom intent, since custom intents work fine for returning data as long as you don't need to pass data to them. Add this to your configuration.yaml:

```yaml
intent_script:
  GetResult:
    description: Gets the result of the last function call
    speech:
      text: "The result is {{ states('input_text.result_output') }}"
```

Then add to the prompt template of the OpenAI integration that it should always call the GetResult function after every other function call.
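Putting the pieces together, the temperature script could write its own result. The sketch below is a hypothetical variation of the `sequence` from the script earlier: it first writes a failure message, then runs the climate action, and only overwrites the helper with a success message if that action did not raise an error (by default a failing action stops the script). It assumes the script has finished running before the model calls GetResult; the message texts are illustrative.

```yaml
sequence:
  # Pre-write a failure message; it stays if the next step errors out.
  - action: input_text.set_value
    target:
      entity_id: input_text.result_output
    data:
      value: "Setting the temperature for climate.{{ entityid }} failed"
  - action: climate.set_temperature
    data:
      temperature: "{{ temperature }}"
    target:
      entity_id: climate.{{ entityid }}
  # Only reached when the action above succeeded.
  - action: input_text.set_value
    target:
      entity_id: input_text.result_output
    data:
      value: "Temperature for climate.{{ entityid }} set to {{ temperature }}"
```

This confirms only that Home Assistant accepted the action, not that the device reached the temperature.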