If you are still with me, we are going to discuss how to integrate with Node-RED. First off, I will be using pictures instead of exporting my flow, because my flow is specific to my slot values and my house. But this should get you far enough down the path to take it on your own.

This is what we will be talking through.

We have already discussed our HTTP In node, so let's jump over to the Function node. The Function node is going to do us a solid and copy the inbound JSON object to an area of the message object (msg) that makes sense for us to use later.

msg.request = msg.req.body || {};

msg.request.query = JSON.parse(msg.req.query.jsonobj);

return msg;
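If the jsonobj query parameter is ever missing or malformed, JSON.parse will throw and the message never reaches the HTTP Response node. Here is a minimal defensive sketch of the same copy step. The wrapper function copyRequest and the parseError field are names I made up for illustration; inside a real Function node you would use only the body of the function.

```javascript
// Hypothetical helper: the same copy step as above, with guards added.
function copyRequest(msg) {
    // Start from a copy of the request body, or an empty object if there is none.
    msg.request = Object.assign({}, msg.req.body);
    try {
        // jsonobj arrives as a JSON string in the query string.
        msg.request.query = JSON.parse(msg.req.query.jsonobj);
    } catch (err) {
        // Missing or malformed JSON: keep the flow alive and record why.
        msg.request.query = {};
        msg.request.parseError = err.message;
    }
    return msg;
}
```

With this in place, a later node could check msg.request.parseError and send Alexa a friendly "I did not understand that" instead of letting the request time out.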

Our Switch Node looks like this:

Note: the stuff on the “right side” of the equals should exactly match your slot values for “entity”. And note that the “left side” of the equals is the location that we copied data to (msg.request.query.entity). We work from this copy because the “HTTP Response” node needs the original message object to stay as close to “identical” as possible to what was received, which is what you would find in “Debug 12” (the first output, anyway).

My “Lights Get Action” Switch looks like this:

Again, note that these are the slot values I defined for “action”, and that the property row (the “left side” of the comparison) is now msg.request.query.action. We have now created some “logic” that lets us act on what was said to Alexa, built into our JSON object, and passed to Node-RED.
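If it helps to see the two Switch nodes as code, here is a simplified model of what they are doing. It is only a sketch: the slot values other than “kitchen”, “on”, and “off” are placeholders I made up, and the function switchOutput is hypothetical, not part of Node-RED.

```javascript
// Placeholder slot values; replace these with the ones defined in your skill.
const ENTITY_RULES = ["kitchen", "living room"];
const ACTION_RULES = ["on", "off"];

// Simplified model of a Switch node with "==" rules: returns the index of the
// output whose rule equals the value, or -1 when no rule matches.
function switchOutput(value, rules) {
    return rules.indexOf(value);
}

// First Switch: which entity was spoken? Second Switch: which action?
const query = { entity: "kitchen", action: "off" };
const entityOutput = switchOutput(query.entity, ENTITY_RULES);
const actionOutput = switchOutput(query.action, ACTION_RULES);
console.log(entityOutput, actionOutput);
```

Note that “Kitchen” with a capital K would not match “kitchen” here, which is why the rule values need to match your slot values exactly.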

As we follow the “Link Out” node for both “on” and “off” (I made the design choice to separate the two for various reasons, although you could compress them into one “flow”), we will see that I link to a new Tab with these activities (ignore the Fan Nodes, I like to use them to keep my wires “clean”):

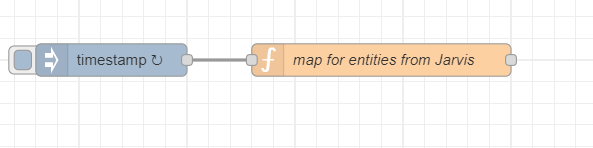

Before we jump into the Function node from above, we have to take a detour and build a data object for us to use. A lot of the paths above are “duplicated” but unique, so I am only going to show you the pattern I have implemented and let you customize it. We now have to think about how we do these interactions: how do I map the spoken word “kitchen” to “light.kitchen_overhead”? I have created a mapping object in another flow. This is down and dirty to start, but a picture is worth 1000 words:

You will have to have something like this, unless you want to be doing it all in a switch node. I chose this route for reusability as this “mapping” object gets stored in the global scope and can be used anywhere.
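As a rough sketch of the idea, a Function node (kicked off once by an Inject node, for example) could build the mapping and store it in global context. Only the kitchen entry comes from the example above; the other keys and entity IDs are made up. The globalContext object is a stand-in so the snippet runs outside Node-RED; inside a Function node you would call global.set and global.get directly.

```javascript
// Stand-in for Node-RED's global context so this runs outside a Function node.
const store = {};
const globalContext = {
    get: (key) => store[key],
    set: (key, value) => { store[key] = value; },
};

// Spoken slot value -> Home Assistant entity ID.
const mappings = {
    "kitchen": "light.kitchen_overhead",      // from the example above
    "living room": "light.living_room_lamp",  // hypothetical
    "office": "light.office_desk",            // hypothetical
};

// In Node-RED this line would be: global.set("mappings", mappings);
globalContext.set("mappings", mappings);
```

The keys should be spelled exactly like your “entity” slot values, since they are looked up with whatever Alexa passes along.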

Back to the Function Node:

The “function 3” node looks like this:

const mappings = global.get("mappings");

const entity = mappings[msg.request.query.entity];

msg.payload = { target: { entity_id: entity } };

return msg;

This code gets the mapping object from the global scope and then looks up the “HA entity identifier” in it. It then puts the “HA entity identifier” into payload.target.entity_id, which allows the Call Service node to just “pick it up”.
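One thing the lookup does not handle is a spoken entity that is not in the mapping, in which case entity_id ends up undefined. Here is a minimal sketch of a guarded version. The function name buildServicePayload is hypothetical, and the mappings argument stands in for the result of global.get("mappings").

```javascript
// Hypothetical helper; in Node-RED, `mappings` would come from global.get("mappings").
function buildServicePayload(msg, mappings) {
    const spoken = msg.request.query.entity;
    const entityId = mappings ? mappings[spoken] : undefined;
    if (!entityId) {
        // Returning null from a Function node stops the flow for this message.
        return null;
    }
    // Same shape as above, so the Call Service node can pick it up.
    msg.payload = { target: { entity_id: entityId } };
    return msg;
}
```

Keep in mind that stopping the flow also means the HTTP Response node never fires, so in practice you would probably want to route the unknown case to its own response instead of just returning null.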

My “Call Service Node” looks like this:

The next Function node (“Build Alexa Response”) is configured like this:

var speak = "I have turned "+msg.request.query.action+" the "+msg.request.query.entity+" lights.";

msg.payload = {"response":speak};

return msg;
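To make the shape of that payload concrete, here is the same logic wrapped in a function and run against a sample message. The wrapper name buildAlexaResponse and the sample values are mine, for illustration only.

```javascript
// Hypothetical wrapper around the "Build Alexa Response" logic.
function buildAlexaResponse(msg) {
    const speak = "I have turned " + msg.request.query.action +
        " the " + msg.request.query.entity + " lights.";
    msg.payload = { response: speak };
    return msg;
}

// Sample message, as it would look after the earlier copy step.
const sample = { request: { query: { entity: "kitchen", action: "on" } } };
console.log(JSON.stringify(buildAlexaResponse(sample).payload));
// prints {"response":"I have turned on the kitchen lights."}
```

That single response property is all the Lambda side needs to read back.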

Fast forward through the “fan nodes”, and we end our “flow” with the “HTTP Response” node:

The response it sends back is then placed into the variable resplights by the Lambda call in our HouseLightsIntent:

const resplights = await fetchURLwithJSON(houseLightsURL, jsonData);

This resplights variable will have a property called response, which we reference in the next line of our Lambda function:

speakOutput = JSON.stringify(resplights.response) + ' If you are finished, please tell me goodbye';
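One small detail worth knowing: if response is already a plain string, JSON.stringify wraps it in double quotes. The sketch below shows the difference, assuming fetchURLwithJSON (from the earlier part, not shown here) hands back the already-parsed JSON body. Either form may be fine for Alexa; this is only to show what ends up in the string.

```javascript
// Assumed shape of the parsed reply from Node-RED.
const resplights = { response: "I have turned on the kitchen lights." };
const suffix = " If you are finished, please tell me goodbye";

// JSON.stringify on a string keeps the surrounding double quotes.
const quoted = JSON.stringify(resplights.response) + suffix;

// Using the string directly leaves the quotes out.
const plain = resplights.response + suffix;

console.log(quoted);
console.log(plain);
```

If you ever see stray quote marks in the text on the “Test” tab, this is the likely reason.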

If I have done a good job describing this, and you have followed along through all 4 parts, then you should be able to go to the “Test” tab of your Skill Console and type your utterance in (don’t forget the “tell / ask / open” keywords as part of your complete test statement). For example, when testing I use: “tell house jarvis to turn kitchen lights on”. Pressing Enter should talk to your Node-RED endpoint, and Alexa should respond to you.