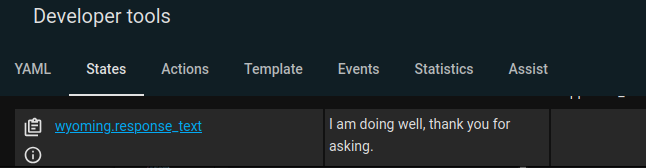

So I picked up the HA Voice Preview Edition, and it works well enough to capture and process commands, but the speaker is too anemic to be heard unless I’m up close to it. Can’t we send that output elsewhere? I can trigger an automation off of tts.piper changing state (its state seems to simply be the last time it did something) or off of assist_satellite.home_assistant_voice_09e66a_assist_satellite changing to “Responding”, but I can’t find an attribute on any of these entities that holds the output text (short of setting everything to debug and then digging through the logs). The logs refer to a chat_log, but this doesn’t seem to be accessible anywhere.

I tried things like this, which don’t work:

    - id: 'id_19.1'
      alias: "Capture TTS Text"
      trigger:
        - platform: event
          event_type: call_service
          event_data:
            domain: tts
            service: speak
      condition: []
      action:
        - service: input_text.set_value
          target:
            entity_id: input_text.say_this
          data:
            value: "{{ trigger.event.data.service_data.message }}"

Kind of irrelevant to the first part, but the rest of the setup is this: I’m running in UTM on a Mac Mini, so I don’t need the cloud for local processing, and this Grok integration handles more complex queries really well. I have an automation that watches input_text.say_this; when it changes, the automation SSHes a command to the host OS that uses the macOS text-to-speech command say to speak the text to whichever AirPlay speakers are attached to Airfoil at the time.
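For anyone wanting to copy the second half, it could look roughly like the sketch below. This is not my exact config: the shell_command name, SSH user, hostname, and key path are placeholders I made up for illustration, and it assumes key-based SSH from the HA VM to the Mac is already working.

```yaml
# Rough sketch only. say_on_mac, the user, the host, and the key path
# are hypothetical placeholders, not real values from my setup.
shell_command:
  # Runs macOS `say` on the host over SSH with the current helper text.
  # Quoting here is naive: text containing quotes or shell
  # metacharacters would need escaping before it reaches the Mac.
  say_on_mac: >-
    ssh -i /config/.ssh/id_ed25519 user@mac-mini.local
    say "{{ states('input_text.say_this') }}"

automation:
  - alias: "Speak captured text on the Mac"
    trigger:
      # Fires whenever the helper's text changes
      - platform: state
        entity_id: input_text.say_this
    condition: []
    action:
      - service: shell_command.say_on_mac
```

Because the trigger is a state change, setting the helper to the same text twice in a row would not fire it a second time.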

Maybe this is an enhancement request or is already in the pipeline?