Overview

This is an attempt to control entities via OpenAI.

I have created a custom component called extended_openai_conversation.

As the name suggests, it is derived from openai_conversation, with a couple of new features added.

Additional Features

- Ability to call Home Assistant services
- Ability to create automations
- Ability to get data from an API or web page

How it works

Extended OpenAI Conversation uses the function calling feature of the OpenAI API to call Home Assistant services.

Since the “gpt-3.5-turbo” model already knows how to call Home Assistant services in general, you only have to let the model know which devices you have by exposing entities.
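The round trip can be sketched in Python. This is a minimal, hypothetical illustration, not the component's source. The message is a hand-written dict shaped like the `function_call` field of an OpenAI chat completion. `FakeServices` stands in for `hass.services` and records calls instead of executing them. The `"Success"` return value is also an assumption of this sketch.

```python
import asyncio
import json

# Hand-written example (hypothetical): an assistant message asking to call the
# execute_services function. OpenAI sends `arguments` as a JSON-encoded string.
MESSAGE = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "execute_services",
        "arguments": json.dumps(
            {"list": [{"domain": "light", "service": "turn_on",
                       "service_data": {"entity_id": "light.kitchen"}}]}
        ),
    },
}


class FakeServices:
    """Hypothetical stand-in for hass.services: records calls, runs nothing."""

    def __init__(self):
        self.calls = []

    async def async_call(self, domain, service, service_data=None):
        self.calls.append((domain, service, service_data))


async def execute_services(services, arguments):
    # Forward each requested call to async_call (return value is an assumption).
    for item in arguments["list"]:
        await services.async_call(item["domain"], item["service"], item["service_data"])
    return "Success"


# Maps a spec name to the code that runs it.
HANDLERS = {"execute_services": execute_services}


async def dispatch(services, message):
    """Decode the JSON arguments and route the call to the matching handler."""
    call = message["function_call"]
    return await HANDLERS[call["name"]](services, json.loads(call["arguments"]))


services = FakeServices()
print(asyncio.run(dispatch(services, MESSAGE)))  # Success
print(services.calls)  # [('light', 'turn_on', {'entity_id': 'light.kitchen'})]
```

The name-to-handler table corresponds loosely to the “Functions” option described below, which maps each function spec to a function.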

Installation

1. Install via HACS, or by copying the extended_openai_conversation folder into <config directory>/custom_components.
2. Restart Home Assistant.
3. Go to Settings > Devices & Services.
4. In the bottom right corner, select the Add Integration button.
5. Follow the instructions on screen to complete the setup (an API key is required).
6. Go to Settings > Voice Assistants.
7. Click to edit the Assistant (named “Home Assistant” by default).
8. Select “Extended OpenAI Conversation” from the “Conversation agent” tab.
9. After installation, expose entities from “http://{your-home-assistant}/config/voice-assistants/expose”.

Examples

1. Add to shopping cart

2. Send messages to messenger

3. Add Automation

4. Play Netflix on TV

5. Play YouTube on TV

Configuration

Options

Options can be customized by clicking a button in Edit Assist.

Options include the OpenAI Conversation options and two new options:

- Maximum Function Calls Per Conversation: limits the number of function calls in a single conversation. (Sometimes a function is called over and over again, possibly running into an infinite loop.)
- Functions: a list of mappings from function spec to function.
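The function call limit can be pictured as a counter around the model/function loop. The sketch below is hypothetical. `get_reply` and `call_function` stand in for the OpenAI request and the function executor. Raising `RuntimeError` is this sketch's choice, not necessarily how the component reports that the limit was reached.

```python
def run_conversation(get_reply, call_function, max_function_calls):
    """Ask the model repeatedly, executing function calls until it answers.

    get_reply(history) must return {"function_call": ...} or {"content": str}.
    """
    history = []
    n_calls = 0
    while True:
        reply = get_reply(history)
        if "function_call" not in reply:
            return reply["content"]  # a normal answer ends the conversation
        if n_calls >= max_function_calls:
            # Hypothetical handling: stop instead of looping forever.
            raise RuntimeError(f"more than {max_function_calls} function calls")
        n_calls += 1
        history.append(call_function(reply["function_call"]))


def stubborn_model(history):
    # Always asks for another function call: the infinite-loop case.
    return {"function_call": {"name": "execute_services", "arguments": "{}"}}


def polite_model(history):
    # Answers once two function results are in the history.
    if len(history) >= 2:
        return {"content": "Done."}
    return {"function_call": {"name": "execute_services", "arguments": "{}"}}


print(run_conversation(polite_model, lambda call: "ok", max_function_calls=3))  # Done.
try:
    run_conversation(stubborn_model, lambda call: "ok", max_function_calls=3)
except RuntimeError as err:
    print(err)  # more than 3 function calls
```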

Functions

Supported function types

- native: built-in function provided by “extended_openai_conversation”.
  - Currently supported native functions and parameters are:
    - execute_service
      - domain(string): domain to be passed to hass.services.async_call
      - service(string): service to be passed to hass.services.async_call
      - service_data(string): service_data to be passed to hass.services.async_call
    - add_automation
      - automation_config(string): An automation configuration in a yaml format
- script: A list of services that will be called
- template: The value to be returned from function.
- rest: Getting data from REST API endpoint.
- scrape: Scraping information from website
- composite: A sequence of functions to execute.

Below is a default configuration of functions.

```yaml
- spec:
    name: execute_services
    description: Use this function to execute service of devices in Home Assistant.
    parameters:
      type: object
      properties:
        list:
          type: array
          items:
            type: object
            properties:
              domain:
                type: string
                description: The domain of the service
              service:
                type: string
                description: The service to be called
              service_data:
                type: object
                description: The service data object to indicate what to control.
                properties:
                  entity_id:
                    type: string
                    description: The entity_id retrieved from available devices. It must start with domain, followed by dot character.
                required:
                - entity_id
            required:
            - domain
            - service
            - service_data
  function:
    type: native
    name: execute_service
```
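The spec's description asks the model to use an `entity_id` that starts with the domain followed by a dot. As written, this is only a hint to the model. A client-side check of one `list` entry could look like the hypothetical helper below. `validate_service_call` is not part of the component.

```python
def validate_service_call(item):
    """Return an error message for one `list` entry, or None if it looks valid.

    Hypothetical helper mirroring the constraints in the execute_services spec.
    """
    missing = [key for key in ("domain", "service", "service_data") if key not in item]
    if missing:
        return f"missing required keys: {', '.join(missing)}"
    entity_id = item["service_data"].get("entity_id")
    if not isinstance(entity_id, str):
        return "service_data.entity_id must be a string"
    # "It must start with domain, followed by dot character."
    if not entity_id.startswith(item["domain"] + "."):
        return f"{entity_id} does not start with {item['domain']}."
    return None


print(validate_service_call(
    {"domain": "light", "service": "turn_on", "service_data": {"entity_id": "light.kitchen"}}
))  # None
print(validate_service_call(
    {"domain": "light", "service": "turn_on", "service_data": {"entity_id": "switch.fan"}}
))  # switch.fan does not start with light.
```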

Function Usage

Below are example function configurations.

Copy and paste the YAML configuration below into “Functions”, and OpenAI will then be able to call your function.

1. template

1-1. Get current weather

```yaml
- spec:
    name: get_current_weather
    description: Get the current weather in a given location
    parameters:
      type: object
      properties:
        location:
          type: string
          description: The city and state, e.g. San Francisco, CA
        unit:
          type: string
          enum:
          - celsius
          - fahrenheit
      required:
      - location
  function:
    type: template
    value_template: The temperature in {{ location }} is 25 {{unit}}
```
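A template function returns its `value_template` rendered with the arguments chosen by the model. Home Assistant renders templates with Jinja2. To stay dependency-free, the Python sketch below swaps in a simple regex that handles only bare `{{ name }}` placeholders. It substitutes an empty string for a missing argument, which is this sketch's choice and not a claim about Home Assistant's behavior.

```python
import re

# Matches {{ name }} with optional spaces; no filters or expressions (simplification).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template, arguments):
    """Replace each {{ name }} with the matching argument (empty if missing)."""
    return PLACEHOLDER.sub(lambda m: str(arguments.get(m.group(1), "")), template)


value_template = "The temperature in {{ location }} is 25 {{unit}}"
print(render(value_template, {"location": "San Francisco, CA", "unit": "celsius"}))
# The temperature in San Francisco, CA is 25 celsius
```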

2. script

2-1. Add item to shopping cart

```yaml
- spec:
    name: add_item_to_shopping_cart
    description: Add item to shopping cart
    parameters:
      type: object
      properties:
        item:
          type: string
          description: The item to be added to cart
      required:
      - item
  function:
    type: script
    sequence:
    - service: shopping_list.add_item
      data:
        name: '{{item}}'
```

2-2. Send messages to another messenger

To accomplish “send it to Line” as in example 3, register a notify function like the one below.

```yaml
- spec:
    name: send_message_to_line
    description: Use this function to send message to Line.
    parameters:
      type: object
      properties:
        message:
          type: string
          description: message you want to send
      required:
      - message
  function:
    type: script
    sequence:
    - service: script.notify_all
      data:
        message: "{{ message }}"
```

2-3. Get events from calendar

To pass the result of a service call to OpenAI, set the response variable to _function_result.

```yaml
- spec:
    name: get_events
    description: Use this function to get list of calendar events.
    parameters:
      type: object
      properties:
        start_date_time:
          type: string
          description: The start date time in '%Y-%m-%dT%H:%M:%S%z' format
        end_date_time:
          type: string
          description: The end date time in '%Y-%m-%dT%H:%M:%S%z' format
      required:
      - start_date_time
      - end_date_time
  function:
    type: script
    sequence:
    - service: calendar.list_events
      data:
        start_date_time: "{{start_date_time}}"
        end_date_time: "{{end_date_time}}"
      target:
        entity_id: calendar.test
      response_variable: _function_result
```
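For reference, here is what the '%Y-%m-%dT%H:%M:%S%z' format named in the get_events spec produces with Python's `strftime`. The one-day window helper is a hypothetical illustration of valid arguments, not something the component provides. Note that `%z` writes the UTC offset without a colon (`+0900`).

```python
from datetime import date, datetime, timedelta, timezone

FORMAT = "%Y-%m-%dT%H:%M:%S%z"  # the format named in the get_events spec


def day_window(day, tz):
    """Return (start_date_time, end_date_time) strings covering one local day."""
    start = datetime(day.year, day.month, day.day, tzinfo=tz)
    end = start + timedelta(days=1)
    return start.strftime(FORMAT), end.strftime(FORMAT)


# Example: 1 October 2023 in a fixed UTC+9 offset (chosen arbitrarily).
start, end = day_window(date(2023, 10, 1), timezone(timedelta(hours=9)))
print(start)  # 2023-10-01T00:00:00+0900
print(end)    # 2023-10-02T00:00:00+0900
```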

2-4. Play YouTube on TV

```yaml
- spec:
    name: play_youtube
    description: Use this function to play Youtube.
    parameters:
      type: object
      properties:
        video_id:
          type: string
          description: The video id.
      required:
      - video_id
  function:
    type: script
    sequence:
    - service: webostv.command
      data:
        entity_id: media_player.{YOUR_WEBOSTV}
        command: system.launcher/launch
        payload:
          id: youtube.leanback.v4
          contentId: "{{video_id}}"
    - delay:
        hours: 0
        minutes: 0
        seconds: 10
        milliseconds: 0
    - service: webostv.button
      data:
        entity_id: media_player.{YOUR_WEBOSTV}
        button: ENTER
```

2-5. Play Netflix on TV

```yaml
- spec:
    name: play_netflix
    description: Use this function to play Netflix.
    parameters:
      type: object
      properties:
        video_id:
          type: string
          description: The video id.
      required:
      - video_id
  function:
    type: script
    sequence:
    - service: webostv.command
      data:
        entity_id: media_player.{YOUR_WEBOSTV}
        command: system.launcher/launch
        payload:
          id: netflix
          contentId: "m=https://www.netflix.com/watch/{{video_id}}"
```

3. native

3-1. Add automation

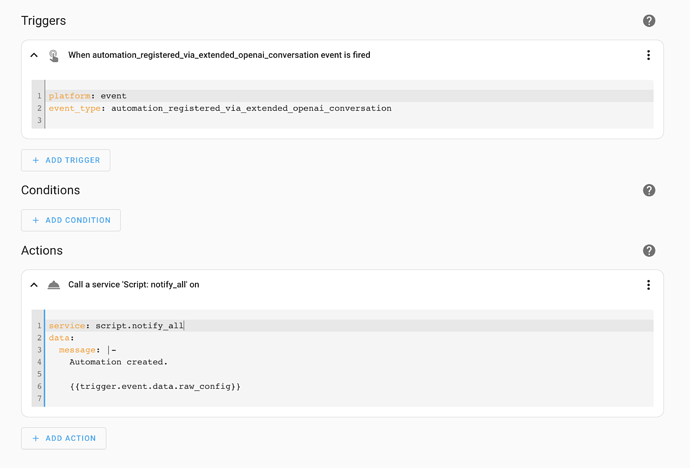

Before adding automations, I highly recommend setting up a notification on the automation_registered_via_extended_openai_conversation event and keeping “Extended OpenAI Assistant” and “Assistant” as separate assistants.

(An automation can still be added even when the conversation fails, because the failure lies in getting the response message, not in creating the automation.)

Copy and paste the configuration below into “Functions”.

For English

```yaml
- spec:
    name: add_automation
    description: Use this function to add an automation in Home Assistant.
    parameters:
      type: object
      properties:
        automation_config:
          type: string
          description: A configuration for automation in a valid yaml format. Next line character should be \n. Use devices from the list.
      required:
      - automation_config
  function:
    type: native
    name: add_automation
```

For Korean

```yaml
- spec:
    name: add_automation
    description: Use this function to add an automation in Home Assistant.
    parameters:
      type: object
      properties:
        automation_config:
          type: string
          description: A configuration for automation in a valid yaml format. Next line character should be \\n, not \n. Use devices from the list.
      required:
      - automation_config
  function:
    type: native
    name: add_automation
```
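The English and Korean specs differ only in how the model is told to write line breaks. Function-call arguments arrive as a JSON string, so one level of escaping decides whether the decoded YAML contains real line breaks. The sketch below decodes two hypothetical argument strings to show the difference. The `fix_literal_newlines` option is a hypothetical normalization and does not describe how the component parses the config.

```python
import json

# Hypothetical `arguments` strings as a model might emit them (raw strings,
# so the backslashes below are exactly what the JSON text contains).
escaped_once = r'{"automation_config": "alias: Test\ntrigger: []\naction: []"}'
escaped_twice = r'{"automation_config": "alias: Test\\ntrigger: []\\naction: []"}'


def decode_config(arguments, fix_literal_newlines=False):
    """Decode the JSON arguments and return the automation YAML text."""
    config = json.loads(arguments)["automation_config"]
    if fix_literal_newlines:
        # Hypothetical normalization: turn a literal backslash-n into a newline.
        config = config.replace("\\n", "\n")
    return config


print(decode_config(escaped_once).splitlines())
# ['alias: Test', 'trigger: []', 'action: []']
print(decode_config(escaped_twice).splitlines())
# ['alias: Test\\ntrigger: []\\naction: []']
```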

4. scrape

4-1. Get current HA version

Scrape the version from the webpage “https://www.home-assistant.io”.

Unlike the Home Assistant scrape integration, a “value_template” is added at the root level, and the data scraped by the sensors is passed into it.


```yaml
- spec:
    name: get_ha_version
    description: Use this function to get Home Assistant version
    parameters:
      type: object
      properties:
        dummy:
          type: string
          description: Nothing
  function:
    type: scrape
    resource: https://www.home-assistant.io
    value_template: "version: {{version}}, release_date: {{release_date}}"
    sensor:
    - name: version
      select: ".current-version h1"
      value_template: '{{ value.split(":")[1] }}'
    - name: release_date
      select: ".release-date"
      value_template: '{{ value.lower() }}'
```
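The two-stage templating, per-sensor first and then root, can be traced in Python. The raw texts for the two CSS selectors are hypothetical, since the live page content may differ. Each Jinja `value_template` is replaced by its Python equivalent.

```python
# Hypothetical raw texts for the two CSS selectors; the live page may differ.
RAW = {
    ".current-version h1": "Current Version: 2023.10.1",
    ".release-date": "October 4, 2023",
}

# Each sensor: (name, selector, Python equivalent of its value_template).
SENSORS = [
    ("version", ".current-version h1", lambda value: value.split(":")[1]),
    ("release_date", ".release-date", lambda value: value.lower()),
]


def scrape_result(raw):
    """Apply each sensor template, then pass the results to the root template."""
    values = {name: template(raw[selector]) for name, selector, template in SENSORS}
    # Root value_template: "version: {{version}}, release_date: {{release_date}}"
    return "version: {version}, release_date: {release_date}".format(**values)


print(scrape_result(RAW))  # version:  2023.10.1, release_date: october 4, 2023
```

The double space after "version:" comes from `split(":")` keeping the leading space. Adding Jinja's `trim` filter to the sensor template would remove it.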

5. rest

5-1. Get friend names

- Sample URL: https://jsonplaceholder.typicode.com/users

```yaml
- spec:
    name: get_friend_names
    description: Use this function to get friend_names
    parameters:
      type: object
      properties:
        dummy:
          type: string
          description: Nothing.
  function:
    type: rest
    resource: https://jsonplaceholder.typicode.com/users
    value_template: '{{value_json | map(attribute="name") | list }}'
```
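The `value_template` receives the parsed JSON response as `value_json` and keeps only each user's `name`. The Python equivalent below runs offline on a hypothetical, trimmed body in the shape of the sample URL's response. The real entries have more fields and different names.

```python
import json

# Hypothetical, trimmed response body in the shape returned by
# https://jsonplaceholder.typicode.com/users (real entries have more fields).
BODY = '[{"id": 1, "name": "Alice Example"}, {"id": 2, "name": "Bob Example"}]'


def friend_names(body):
    """Python equivalent of '{{ value_json | map(attribute="name") | list }}'."""
    value_json = json.loads(body)
    return [user["name"] for user in value_json]


print(friend_names(BODY))  # ['Alice Example', 'Bob Example']
```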

6. composite

6-1. Search YouTube Music

When using ytube_music_player, the result of the ytube_music_player.search service is stored in the search attribute of the sensor.ytube_music_player_extra entity.

```yaml
- spec:
    name: search_music
    description: Use this function to search music
    parameters:
      type: object
      properties:
        query:
          type: string
          description: The query
      required:
      - query
  function:
    type: composite
    sequence:
    - type: script
      sequence:
      - service: ytube_music_player.search
        data:
          entity_id: media_player.ytube_music_player
          query: "{{ query }}"
    - type: template
      value_template: >-
        media_content_type,media_content_id,title
        {% for media in state_attr('sensor.ytube_music_player_extra', 'search') -%}
          {{media.type}},{{media.id}},{{media.title}}
        {% endfor%}
```
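The template step turns the search attribute into a header followed by one comma-separated line per result, which the model can then read. The Python below mirrors that loop on made-up data. The shape of the `search` attribute (dicts with `type`, `id` and `title`) is inferred from the template, and Jinja's exact whitespace output is not reproduced.

```python
def search_results_csv(search):
    """Header plus one 'type,id,title' line per search result."""
    lines = ["media_content_type,media_content_id,title"]
    for media in search:
        lines.append(f"{media['type']},{media['id']},{media['title']}")
    return "\n".join(lines)


# Made-up results, assumed to match the `search` attribute's shape.
SEARCH = [
    {"type": "track", "id": "abc123", "title": "First Song"},
    {"type": "playlist", "id": "PL456", "title": "Evening Mix"},
]
print(search_results_csv(SEARCH))
```

Like the template, this does not escape commas inside titles. Python's `csv` module would quote them if that ever matters.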

Practical Usage

See more practical examples.

Logging

To monitor logs of API requests and responses, add the following config to the configuration.yaml file:

```yaml
logger:
  logs:
    custom_components.extended_openai_conversation: info
```
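The keys under `logs` are Python logger names. The sketch below uses only the standard library, not Home Assistant's code. It shows roughly what the setting amounts to: one logger set to INFO, with modules below it inheriting that level. The child module name `helpers` is hypothetical.

```python
import logging

PARENT = "custom_components.extended_openai_conversation"

# Roughly what `custom_components.extended_openai_conversation: info` asks for.
logging.getLogger(PARENT).setLevel(logging.INFO)

# A module logger below it (hypothetical name) has no level of its own,
# so it inherits INFO from the parent.
child = logging.getLogger(PARENT + ".helpers")
print(logging.getLevelName(child.getEffectiveLevel()))  # INFO
```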