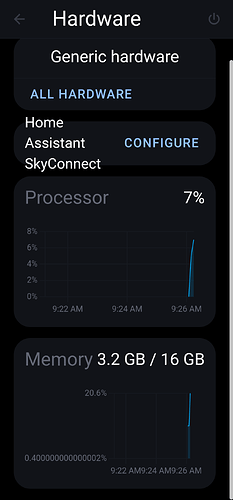

My HA is installed on an Intel mini PC with a 250 GB SSD and 16 GB of RAM. After the last update I have only around 25% of the disk available, and a couple of times it has shown the warning "Less than 1GB memory available".

Does anybody else have the same issue? What should I do to free up disk space and speed up my HA?

I already deleted the DB file (no change), and I have the DB purge set to keep only 4 days of history. I am not an expert in all these topics, so please forgive me if I wrote something incorrectly.
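To confirm whether the recorder database is really what is filling the disk, a quick size check helps. The sketch below is a hypothetical helper (`db_usage`, not part of Home Assistant); it assumes the SSH add-on exposes the config folder at `/homeassistant` (as in the `du` listing later in this thread; some setups use `/config`) and that the default database file name `home-assistant_v2.db` is in use. The `-wal` and `-shm` files next to it are SQLite's write-ahead log and shared-memory files.

```shell
# Hypothetical helper: size of the recorder database and its SQLite side files.
# Assumes the config folder is mounted at /homeassistant (pass /config otherwise)
# and the default database name home-assistant_v2.db.
db_usage() {
  local cfg="${1:-/homeassistant}"
  # Quoted pattern: find does the matching, so no match is not a shell error.
  find "$cfg" -maxdepth 1 -name 'home-assistant_v2.db*' -exec du -h {} +
}
```

Run it as `db_usage` (or `db_usage /config`). If these files are small, the space is being used somewhere else.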

Hello Yevgeniy,

Disk space being eaten up - #9 by mjvanderveen.

Could be your problem, or just too many backups in general on the drive.

Thank you for the link and the suggestion. I deleted 2 backups from my disk before I took the screenshot.

Usually I keep 4 backups on Google Drive and the last 2 on the disk, just in case. It still shows 86% of the disk space occupied. Any other recommendations? I am open to any solution except erasing HA and starting from scratch.
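Before deleting anything, it helps to see what the local backups actually take up. A minimal sketch, assuming you are in the SSH add-on, where local backups appear under `/backup` (as in the `du` listing later in the thread); the helper name `backup_usage` is hypothetical. Where possible, delete backups from the Backups page in the UI.

```shell
# Hypothetical helper: total size of the local backup folder, then each
# backup archive with its size, newest first.
backup_usage() {
  local dir="${1:-/backup}"
  du -sh "$dir"   # one total for the whole folder
  ls -lht "$dir"  # -h human-readable sizes, -t newest first
}
```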

Did you look at the link in that message?

Not the same problem.

Yes, I did. I haven't tried it yet because I don't quite understand what to do. I have SSH installed, but I don't understand which commands I have to use there. I need detailed step-by-step instructions (I am not familiar with SSH commands).

Also, will that work with HA OS installed on a PC, or is it just for a Docker container installation?

Thank you

Just type or paste into the terminal:

```shell
du -a / | sort -n -r | head -n 20
```
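The pipeline lists every file and folder with its size (normally in 1K blocks, `du -a`), sorts largest first (`sort -n -r`) and keeps the top 20 (`head -n 20`). Because a folder's size includes everything inside it, the first lines will always be `/` and its biggest parent folders; read down the list to the deepest large entry to find what is actually using the space. A sketch of the same pipeline wrapped in a hypothetical `top_usage` helper, so it can be pointed at a single folder with error messages from `/proc` and similar hidden:

```shell
# Same pipeline as above, parameterised: top_usage [path] [count]
top_usage() {
  # du -a      : every file and directory with its size
  # sort -n -r : numeric sort, largest first
  # head -n    : keep only the first lines
  # 2>/dev/null drops "Permission denied" / "No such file" noise
  du -a "${1:-/}" 2>/dev/null | sort -n -r | head -n "${2:-20}"
}
```

For example, `top_usage /homeassistant 30` looks only inside the config folder.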

Thank you very much for the help, @Sir_Goodenough and @Sandalman. I followed your recommendation: with the `rm -rf /root/backup/*` command I deleted 4 backup files and gained another 15% of storage. Then I ran `du -a / | sort -n -r | head -n 20`, and this is what I got:

What should I do with this information, and which files are safe to delete?

Also, for the 5th time this week (since the last update), I got this message:

But processor and memory usage are both below 20%.

My issue is very similar but more acute. I have HAOS on Proxmox, installed on an i5 NUC. I have 37 GB allocated to the HA VM, but `df` says the disk is full while `du` says I have used only 4.7 GB.

```
du -h -d 1 / | sort -h
0       /dev
0       /proc
0       /sys
4.0K    /addons
4.0K    /lib64
4.0K    /mnt
4.0K    /opt
4.0K    /srv
12.0K   /home
52.0K   /command
60.0K   /ssl
80.0K   /data
84.0K   /tmp
356.0K  /sbin
632.0K  /run
1.4M    /lib
1.7M    /etc
2.5M    /bin
2.7M    /addon_configs
5.7M    /package
14.7M   /root
21.9M   /media
95.1M   /share
298.4M  /usr
465.2M  /var
1.4G    /homeassistant
2.4G    /backup
4.7G    /
```

and df:

```
df -h
Filesystem                Size      Used Available Use% Mounted on
overlay                  35.7G     34.8G         0 100% /
/dev/sda8                35.7G     34.8G         0 100% /homeassistant
/dev/sda8                35.7G     34.8G         0 100% /addon_configs
/dev/sda8                35.7G     34.8G         0 100% /addons
/dev/sda8                35.7G     34.8G         0 100% /share
/dev/sda8                35.7G     34.8G         0 100% /media
devtmpfs                  2.9G         0      2.9G   0% /dev
tmpfs                     2.9G         0      2.9G   0% /dev/shm
/dev/sda8                35.7G     34.8G         0 100% /data
/dev/sda8                35.7G     34.8G         0 100% /backup
/dev/sda8                35.7G     34.8G         0 100% /ssl
/dev/sda8                35.7G     34.8G         0 100% /run/audio
tmpfs                     2.9G         0      2.9G   0% /dev/shm
tmpfs                     1.2G      2.1M      1.2G   0% /run/dbus
tmpfs                     1.2G      2.1M      1.2G   0% /run/docker.sock
/dev/sda8                35.7G     34.8G         0 100% /etc/asound.conf
/dev/sda8                35.7G     34.8G         0 100% /etc/resolv.conf
/dev/sda8                35.7G     34.8G         0 100% /etc/hostname
/dev/sda8                35.7G     34.8G         0 100% /etc/hosts
tmpfs                     1.2G      2.1M      1.2G   0% /run/log/journal
/dev/sda8                35.7G     34.8G         0 100% /etc/pulse/client.conf
/dev/sda8                35.7G     34.8G         0 100% /var/log/journal
tmpfs                     2.9G         0      2.9G   0% /proc/asound
tmpfs                     2.9G         0      2.9G   0% /proc/acpi
devtmpfs                  2.9G         0      2.9G   0% /proc/interrupts
devtmpfs                  2.9G         0      2.9G   0% /proc/kcore
devtmpfs                  2.9G         0      2.9G   0% /proc/keys
devtmpfs                  2.9G         0      2.9G   0% /proc/timer_list
tmpfs                     2.9G         0      2.9G   0% /proc/scsi
tmpfs                     2.9G         0      2.9G   0% /sys/firmware
```

It looks like the OS is reporting disk space incorrectly, but it stops HA from making a backup unless I delete old backups to free up space.

Can anyone point me to what is happening here? Maybe I need to reinstall?

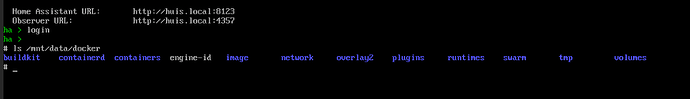

Try running these commands from the HA console in Proxmox. If there is a mismatch, it is probably because you ran them from one of the SSH add-ons, and those run in a container.
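One way to see the mismatch for yourself: `df` reports usage of the whole filesystem, while `du` only counts the files visible from where it runs. A minimal sketch (the helper name `compare_usage` is hypothetical). Run from an SSH add-on, `du` would see only that container's view, so data such as Docker images or other add-ons' folders can be counted by `df` but not by `du`.

```shell
# Hypothetical helper: put df and du side by side for one path.
compare_usage() {
  local target="${1:-/}"
  echo "df (whole filesystem that holds $target):"
  df -h "$target"
  echo "du (only files visible from here under $target):"
  du -sh "$target" 2>/dev/null
}
```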

Thanks for your reply.

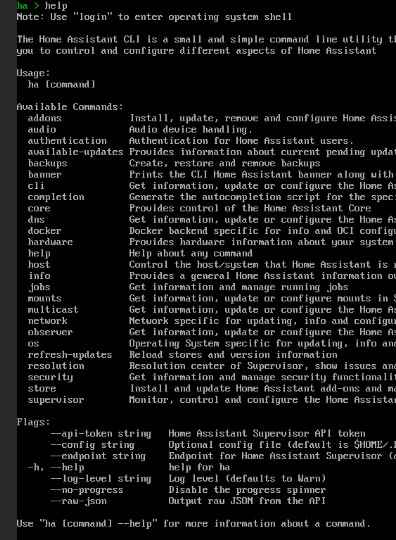

How do I get to a “normal” CLI in the Proxmox console? I only have access to the `ha` prompt with its limited commands.

Type `login` (no password needed).

Thanks. That’s a great help.

I found a heap of stuff in /mnt/data/supervisor (20.3 GB) and /mnt/data/docker (22.3 GB).
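From the host shell (after typing `login` at the `ha` prompt in the Proxmox console), the same approach works one level at a time. A sketch with a hypothetical `drill` helper; `/mnt/data` is simply where the large `supervisor` and `docker` folders turned up in this case:

```shell
# Hypothetical helper: drill [path] [count]
# One level deep, human-readable sizes, biggest entries at the bottom.
drill() {
  du -h -d 1 "${1:-/mnt/data}" 2>/dev/null | sort -h | tail -n "${2:-10}"
}
```

For example, `drill /mnt/data`, then `drill /mnt/data/supervisor/addons/data`, following the largest folder each time.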

Now I have something I can investigate.


In `/mnt/data/supervisor/addons/data/5c53de3b_esphome/cache/platformio` I have:

```
du -h -d 1 | sort -h
15.7M   ./platforms
663.8M  ./cache
7.3G    ./packages
8.0G
```

Am I OK to delete everything in `/mnt/data/supervisor/addons/data/5c53de3b_esphome/cache/`, knowing that it will be repopulated the next time there is an update or edit?

EDIT:

OK, trying “Clean build files” in ESPHome for each device first.

“Clean build files” has not released any disk space, so my question is: is it safe to delete everything in `/mnt/data/supervisor/addons/data/5c53de3b_esphome/cache/`?

Also, there are 1240 folders in `/mnt/data/docker` dating back 2.5 years. Is there any way to know what can be deleted to free up space?

Sorry, the 1240 folders are in the `overlay2` folder. My reading suggests that I can safely run `docker system prune -a`, which will remove stopped containers etc.
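Before pruning, `docker system df` shows how much space images, containers, volumes and build cache use, and how much of it is reclaimable. The sketch below (hypothetical helper names, assuming the `docker` CLI is available in the HAOS host shell) only previews, or removes dangling images with `docker image prune`. It deliberately does not run `docker system prune -a`: with `-a`, Docker also removes every image not used by a remaining container, which on HAOS may include images for add-ons that are not currently running and would then have to be downloaded again.

```shell
# Hypothetical helpers for the HAOS host shell.

# Read-only: space used by images, containers, volumes and build cache,
# including a RECLAIMABLE column.
docker_usage() {
  docker system df
}

# Conservative cleanup: remove only dangling (untagged) images, without prompting.
docker_cleanup_dangling() {
  docker image prune -f
}
```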

Yes, that might work.

Thanks

And is it safe to delete everything in /mnt/data/supervisor/addons/data/5c53de3b_esphome/cache/ ?

I think so, but make a backup first.
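A sketch of that cleanup, assuming the add-on slug `5c53de3b_esphome` from this thread, that the ESPHome add-on has been stopped from its add-on page, and that a backup exists. The helper name `clear_cache` is hypothetical. It empties the cache folder but keeps the folder itself; PlatformIO should download the platforms and packages it needs again on the next compile, so the first build afterwards will likely be slower.

```shell
# Hypothetical helper for the HAOS host shell. Stop the ESPHome add-on and
# take a backup before running it.
clear_cache() {
  local cache="${1:-/mnt/data/supervisor/addons/data/5c53de3b_esphome/cache}"
  # Refuse to continue if the path is wrong, rather than deleting elsewhere.
  [ -d "$cache" ] || { echo "not found: $cache" >&2; return 1; }
  du -sh "$cache"   # size before
  # Delete everything inside the folder (hidden entries too), not the folder itself.
  find "$cache" -mindepth 1 -maxdepth 1 -exec rm -rf {} +
  du -sh "$cache"   # size after
}
```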

It seems that ESPHome needs some sort of cache-clearing script built in. I have 11 ESPHome devices configured, 8 of them active, and 2 of them have large YAML files (369 and 207 lines). This seems to result in about 1.0 GB of files in the cache folder after each round of updates.

That seems to require me to do this cache deletion every month to stay within my 37 GB disk allocation. What do you think about making this a feature request? “Clean build files” does not do this.
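Until something like that exists, the monthly routine can at least start with a check rather than a blind delete. A minimal sketch; the helper name `cache_over_limit`, the default path (the slug from this thread) and the 1024 MB limit are all assumptions. It only reports and sets its exit status; clearing the cache stays a manual step.

```shell
# Hypothetical helper: cache_over_limit [dir] [limit_mb]
# Exit status: 0 = over the limit, 1 = within it, 2 = folder unreadable.
cache_over_limit() {
  local dir="${1:-/mnt/data/supervisor/addons/data/5c53de3b_esphome/cache}"
  local limit_mb="${2:-1024}"
  local used_kb used_mb
  # du -s -k: one total for the folder in KiB; cut keeps the number column.
  used_kb=$(du -sk "$dir" 2>/dev/null | cut -f1)
  [ -n "$used_kb" ] || { echo "cannot read $dir" >&2; return 2; }
  used_mb=$((used_kb / 1024))
  echo "$dir: ${used_mb} MB used (limit ${limit_mb} MB)"
  [ "$used_mb" -gt "$limit_mb" ]
}
```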