Hi everyone!

I migrated HA to an SSD yesterday, and it’s not working very well at all… HA feels really slow, it doesn’t start add-ons on reboot, and it crashes anywhere from 5-10 minutes to a few hours after a restart.

I followed this guide for the migration.

The migration went pretty well, except that most of my add-ons were not carried over and I had to do a partial restore for them. I also noticed that the backup I took just before switching to the SSD was much larger than the one created when I set up the Home Assistant Google Drive Backup add-on: the first was 297.3 MB, while the one created after the restore was only 106 MB. To me this indicates that not everything was carried over. It might just be the missing add-ons… or something else, which is causing this problem!

I have tried searching the logs in HA after restarting it (which has to be done by pulling the power to the RPI), but I can’t find anything relevant. Perhaps I’m looking in the wrong place.

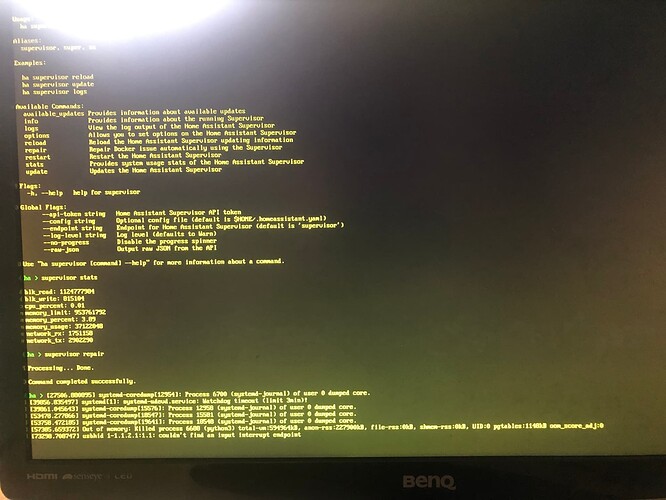

Connecting a monitor and a keyboard to the RPI gave me this:

I performed the “supervisor repair” yesterday and that seemed to do the trick, but apparently not… The RPI is unresponsive and has to be rebooted by cutting power.

When HA is up and running, I can access the RPI through the SSH terminal, if that helps with troubleshooting.

While I was typing this, HA went down and became unreachable, and the RPI wouldn’t take input from the keyboard. It came back up by itself for a while and is now down again… I can, however, access deCONZ from VNC Viewer. Sometimes even that isn’t possible, and then none of my switches, lights, sensors, etc. work.

BTW: Is there a way to access the RPI through VNC from a Windows PC? I know it can be done through HA and the terminal add-on, but since HA keeps going down, that method is unreliable…

System setup:

- Raspberry Pi 3B+, connected to the router by Ethernet cable
- Official power supply, 5.1 V / 2.5 A
- ConBee II (no extension cable)
- SSD: Kingston SA400S37 120GB (on an extension/data cable)
- TooQ case for the SSD
- Home Assistant Core 2022.6.7
- Home Assistant Supervisor 2022.05.3
- Home Assistant OS 8.2

The RPI power checker in HA shows no problems: it has been a solid green light for the past week.

System health in HA (when it’s up…) gives me this:

|Version|core-2022.6.7|
| --- | --- |
|Installation Type|Home Assistant OS|
|Development|false|
|Supervisor|true|
|Docker|true|
|User|root|
|Virtual Environment|false|
|Python Version|3.9.12|
|Operating System Family|Linux|
|Operating System Version|5.15.32-v8|
|CPU Architecture|aarch64|
|Timezone|Europe/Stockholm|

### Home Assistant Community Store

|GitHub API|ok|
| --- | --- |
|Github API Calls Remaining|5000|
|Installed Version|1.17.2|
|Stage|running|
|Available Repositories|1062|
|Installed Repositories|6|

### Home Assistant Cloud

|Logged In|true|
| --- | --- |
|Subscription Expiration|1 January 2018 01:00|
|Relayer Connected|false|
|Remote Enabled|true|
|Remote Connected|false|
|Alexa Enabled|false|
|Google Enabled|false|
|Remote Server||
|Reach Certificate Server|ok|
|Reach Authentication Server|ok|
|Reach Home Assistant Cloud|ok|

### Home Assistant Supervisor

|Host Operating System|Home Assistant OS 8.2|
| --- | --- |
|Update Channel|stable|
|Supervisor Version|supervisor-2022.05.3|
|Agent Version|1.2.1|
|Docker Version|20.10.14|
|Disk Total|111.1 GB|
|Disk Used|9.8 GB|
|Healthy|true|
|Supported|true|
|Board|rpi3-64|
|Supervisor API|ok|
|Version API|ok|
|Installed Add-ons|deCONZ (6.14.1), Grafana (7.2.0), Home Assistant Google Drive Backup (0.108.2), SSH & Web Terminal (9.0.1), File editor (5.3.3), Check Home Assistant configuration (3.9.0), ESPHome (2022.5.1), InfluxDB (4.2.1), Samba share (9.5.1)|

### Dashboards

|Dashboards|1|
| --- | --- |
|Resources|1|
|Views|1|
|Mode|storage|

### Recorder

|Oldest Run Start Time|17 June 2022 08:17|
| --- | --- |
|Current Run Start Time|26 June 2022 10:41|
|Estimated Database Size (MiB)|691.97 MiB|
|Database Engine|sqlite|
|Database Version|3.34.1|

### Core Statistics

Processor usage: 2.6 %

Memory usage: 23.5 %

### Supervisor Statistics

Processor usage: 30.6 %

Memory usage: 6.6 %

Not sure where to look for errors, so any help would be appreciated!