Hello. I develop an integration for managing a user's energy from the supplier Octopus Energy. Unfortunately, for most users the data is not available in real time; it usually becomes available the next day. I have a sensor that represents the accumulated consumption for the previous day, which changes daily. This sensor has a `device_class` of `energy`, a `state_class` of `total` and a `unit_of_measurement` of `kWh`. The `last_reset` is set to the start of the day that the accumulation represents.

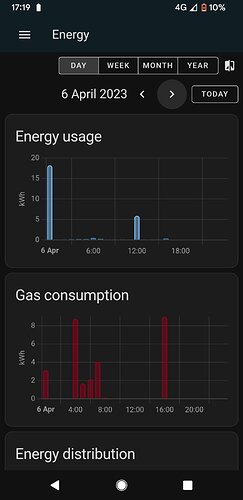

I’m now trying to import this data in the 30-minute increments that are available, so that it appears nicely in the Energy Dashboard. As you can see below, I’m successfully importing the data.

However, in doing so I still get spikes for today that represent the accumulation, and these are what I’m trying to remove.

Is this even possible? Below is the logic for importing the statistics. I’d appreciate any advice on whether this can be done.

```python
import logging
from datetime import datetime, timedelta

from homeassistant.components.recorder import get_instance
from homeassistant.components.recorder.models import StatisticData, StatisticMetaData
from homeassistant.components.recorder.statistics import (
    async_import_statistics,
    statistics_during_period,
)
from homeassistant.core import HomeAssistant

_LOGGER = logging.getLogger(__name__)


async def async_import_statistics_from_consumption(hass: HomeAssistant, now: datetime, unique_id: str, name: str, consumptions, unit_of_measurement: str, consumption_key: str):
    statistic_id = f"sensor.{unique_id}".lower()

    # Our sum needs to be based from the last total, so we need to grab the last record from the previous day
    last_stat = await get_instance(hass).async_add_executor_job(
        statistics_during_period,
        hass,
        consumptions[0]["from"] - timedelta(days=7),
        consumptions[0]["from"],
        {statistic_id},
        "hour",
        None,
        {"sum"}
    )

    statistics = []
    last_reset = consumptions[0]["from"].replace(minute=0, second=0, microsecond=0)
    sum = last_stat[statistic_id][-1]["sum"] if statistic_id in last_stat and len(last_stat[statistic_id]) > 0 else 0
    state = 0

    _LOGGER.debug(f'last_stat: {last_stat}; sum: {sum}; last_reset: {last_reset}')

    for index in range(len(consumptions)):
        charge = consumptions[index]
        start = charge["from"].replace(minute=0, second=0, microsecond=0)

        sum += charge[consumption_key]
        state += charge[consumption_key]

        _LOGGER.debug(f'index: {index}; start: {start}; sum: {sum}; state: {state}; added: {(index) % 2 == 1}')

        # Two 30-minute readings make up one hourly statistic, so only add a record on every second reading
        if index % 2 == 1:
            statistics.append(
                StatisticData(
                    start=start,
                    last_reset=last_reset,
                    sum=sum,
                    state=state
                )
            )

    # Fill up to now to wipe the capturing of the data on the entity
    target = now - timedelta(hours=1)
    current = start + timedelta(hours=1)
    while (current < target):
        statistics.append(
            StatisticData(
                start=current,
                last_reset=last_reset,
                sum=sum,
                state=state
            )
        )

        _LOGGER.debug(f'start: {current}; sum: {sum}; state: {state};')
        current = current + timedelta(hours=1)

    metadata = StatisticMetaData(
        has_mean=False,
        has_sum=True,
        name=name,
        source='recorder',
        statistic_id=statistic_id,
        unit_of_measurement=unit_of_measurement,
    )

    async_import_statistics(hass, metadata, statistics)
```

You need to have previous sums, otherwise you end up with even weirder results. I think the issue is the difference between long-term and short-term statistics in HA. However, there’s no way of injecting short-term statistics, so this might just be the best I can do.
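To illustrate why the previous sum matters, here is a minimal sketch in plain Python with no Home Assistant APIs involved. It assumes, as I understand it, that each hour's usage is derived from the change in `sum` between consecutive statistic rows. The helper names `hourly_sums` and `deltas`, the `stored_total` value and the sample readings are all made up for illustration.

```python
from datetime import datetime, timedelta


def hourly_sums(readings, previous_sum=0.0):
    """Collapse half-hourly (start, kwh) readings into hourly (start, sum) rows.

    `previous_sum` is the last cumulative total that is already stored.
    """
    rows = []
    running = previous_sum
    for index, (start, kwh) in enumerate(readings):
        running += kwh
        if index % 2 == 1:  # the second half hour closes the hourly bucket
            hour = start.replace(minute=0, second=0, microsecond=0)
            rows.append((hour, running))
    return rows


def deltas(rows, previous_sum):
    """Per-hour usage, taken as the change in the cumulative sum."""
    usage = []
    last = previous_sum
    for _, total in rows:
        usage.append(total - last)
        last = total
    return usage


day = datetime(2023, 1, 2)
# Four half-hourly readings of 0.25 kWh each, i.e. two hours of data
readings = [(day + timedelta(minutes=30 * i), 0.25) for i in range(4)]

stored_total = 100.0  # hypothetical sum already present in the statistics

continued = hourly_sums(readings, previous_sum=stored_total)
restarted = hourly_sums(readings, previous_sum=0.0)

print(deltas(continued, stored_total))  # [0.5, 0.5]
print(deltas(restarted, stored_total))  # [-99.5, 0.5]
```

When the imported rows continue from the stored total, each hour shows only what was actually used. When they restart from zero, the first hour shows a large negative jump, which is the kind of weird result I was seeing without the previous sums.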
