I tried it and it works with llama3.2-vision.

You don’t need to forward the port unless you want to access Ollama directly from outside your network. Since HA and Ollama both run on the same machine, you can likely reach Ollama from HA at http://localhost:11434.

Since HA runs in a Docker container, you probably need to change its network mode if you haven’t done so already; otherwise `localhost` inside the container refers to the container itself, not to the machine running Ollama. I’m not a Docker expert, but I think you can achieve this with `--network="host"` for `docker run`, or with `network_mode: "host"` in Docker Compose.
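To check whether Ollama is reachable at a given base URL (for example from inside the HA container), a small Python sketch like this one can query Ollama's `/api/tags` endpoint, which lists the installed models. The default base URL and the helper names are assumptions for illustration only.

```python
import json
import urllib.request

# Assumed default: Ollama's standard port on the same machine as HA.
DEFAULT_BASE_URL = "http://localhost:11434"


def parse_model_names(payload: bytes) -> list[str]:
    """Extract model names from an Ollama /api/tags JSON response body."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_ollama_models(base_url: str = DEFAULT_BASE_URL, timeout: float = 5.0) -> list[str]:
    """Return installed model names; raises OSError (e.g. URLError) if unreachable."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read())
```

If `list_ollama_models()` fails, calling `list_ollama_models("http://127.0.0.1:11434")` tries the loopback IP explicitly; a connection error from both usually points at the container's network mode rather than at Ollama itself.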

The model is not aware of what hardware it is running on or which ports are exposed, so you can ignore that.

Thanks for pointing this out, Valentin. I clearly wasn’t aware of it.

Using 127.0.0.1 as the IP address enabled me to configure the integration. Now let’s see what kind of trouble I can get myself into…

Hi man. You don’t need to go to all this trouble; just check Ollama’s FAQ for answers. I have also run into this before.

You’re welcome! If you have any questions, don’t hesitate to ask!

Right now I get a notification from Home Assistant that motion was detected by a camera, and then it’s updated a second or two later with the AI description.

For at least one of my cameras, I’m OK just waiting for the AI version. Is there a way to suppress the first notification?

I’m using the 1.3.1 version of the template.

The update should happen silently so you should only get one message. Are you on Android?

I am on iOS. The update is happening silently, which is awesome. I may just be looking at my phone too quickly, so I’m catching the initial notification. I was hoping to delay the notification by a few seconds so that it waits for the updated one.
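One way to get a single, slightly delayed notification is to wait a few seconds for the AI description and send only one message, falling back to a plain motion alert if the description doesn't arrive in time. The sketch below shows only that logic with `asyncio`; `send_notification`, the fallback text, and the timeout are hypothetical, not part of the blueprint or the Home Assistant API. In an HA automation the same idea would roughly correspond to a wait step with a `timeout` placed before the notify action.

```python
import asyncio
from typing import Awaitable, Callable


async def notify_once(
    description: Awaitable[str],
    send_notification: Callable[[str], Awaitable[None]],
    timeout_s: float = 5.0,
    fallback: str = "Motion detected",
) -> str:
    """Send exactly one notification: the AI description if it arrives
    within timeout_s seconds, otherwise the plain fallback message."""
    try:
        message = await asyncio.wait_for(description, timeout=timeout_s)
    except asyncio.TimeoutError:
        # The description took too long; don't make the user wait forever.
        message = fallback
    await send_notification(message)
    return message
```

The trade-off is latency: every notification arrives up to `timeout_s` seconds later, in exchange for never seeing the pre-AI version.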

For anyone using Node-RED and calling this via a service node: if you make changes to the prompt or anything else, you need to restart Node-RED. Deploying won’t pick up the updates, at least when using Ollama.

I am now getting just a snapshot, so I’d say the update is working much better. However, it now appears that the snapshot I get is from the previous event. For example, I have a camera on my back door that tells me when my dog is back there so I can let her in or out. When she is ready to come in, I am notified about a dog at the back door, except the snapshot shows her waiting to be let out.

edit: I just noticed that the HA integration wasn’t running the latest version. I will update that and check in later.

Hi, I downloaded version 1.3.1 and created an API key in Google AI Studio.

When I continue the installation and paste in the API key, I get an “invalid key” message.

What could I be doing wrong? I’m on the free plan.

SOLVED → It takes a while before a new key starts working.
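Since a freshly created key can be rejected for a while, a scripted key check could retry with a growing delay instead of concluding the key is wrong. The sketch below is generic: `check_key` is a hypothetical callable (for example, a request that uses the key and reports success), and the attempt count and delays are arbitrary assumptions.

```python
import time
from typing import Callable


def wait_for_key(
    check_key: Callable[[], bool],
    attempts: int = 5,
    initial_delay_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Retry check_key with exponential backoff; return True once it succeeds."""
    delay = initial_delay_s
    for attempt in range(attempts):
        if check_key():
            return True
        if attempt < attempts - 1:
            sleep(delay)  # give the new key time to become active
            delay *= 2
    return False
```

The `sleep` parameter is injectable so the loop can be exercised without actually waiting.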

Hi. I’m looking to get Ring working with this. I have Scrypted configured to work with Ring, and Scrypted captures clips. I don’t use their paid NVR tool. Is there a way to point this at the location where Scrypted stores the clips? Getting the Generic Camera setup working seems extremely complicated, so I was wondering whether there is a way to use this with some combination of Frigate, Scrypted, and Ring MQTT in HA.

I think I got this working; I will test it over the weekend. Scrypted gives you an RTSP link to the camera that can be added as a Generic Camera in HA. I have that set up and the image is being captured; now I need to test whether Ollama processes it.

EDIT: It works. I need to upgrade the RAM on the server running Ollama, but Ring → Scrypted → RTSP URL → Generic Camera works. Also, the page on the website about Ring is extremely confusing.

@zuzzy has written an excellent guide on how to get Ring cameras working with LLM Vision. You can read it here:

Thanks. I found that to be a very confusing write-up, and it points to a very lengthy way of adding the Ring cams as generic cameras. I was able to add the Ring cams as generic cameras via Scrypted.

I wasn’t able to get `input_text` to work. Is that the best way to conditionally execute an action based on the LLM response (e.g. send a notification only if there is suspicious activity)?
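Instead of storing the text in an `input_text` helper, the response can be checked directly. In an HA script this would typically mean saving the action's result with `response_variable` and testing it in a condition. The Python sketch below shows only the decision logic and rests on two assumptions: the analyzer's response is a dict with a `response_text` key (check the actual shape in your trace), and the prompt asks the model to begin its answer with YES or NO. A fixed answer format avoids the false positives a plain keyword search hits on replies like "No suspicious activity".

```python
def should_notify(response: dict) -> bool:
    """Return True if the model's answer starts with YES.

    Assumes (1) the response dict has a 'response_text' key and
    (2) the prompt says something like: "Is there suspicious activity?
    Start your answer with YES or NO."
    """
    text = response.get("response_text", "").strip()
    first_word = text.split(maxsplit=1)[0] if text else ""
    # Tolerate punctuation and casing, e.g. "yes:" or "Yes."
    return first_word.strip(".,:;!").upper() == "YES"
```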

@valentinfrlch, a question: I have more than one llama3.2-vision model in Ollama: `llama3.2-vision:90b` and `llama3.2-vision:latest` (the 11b model).

In the blueprint, which model name should I enter if I want the system to use only the 90b model? When I enter `llama3.2-vision:90b`, I get an error, but if I keep it as just `llama3.2-vision`, it works. I just don’t know which model it uses.

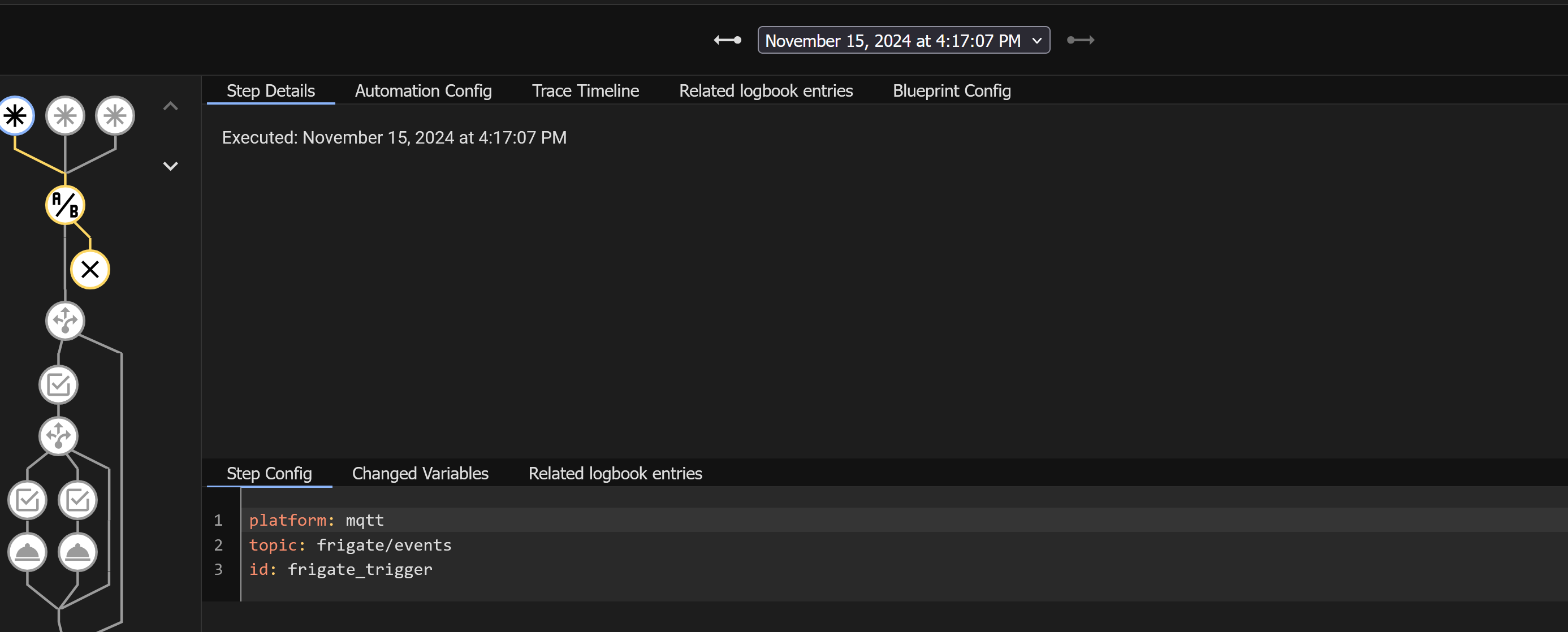

I seem to be having trouble getting the AI event summary script to execute.

Here are my trace log and config details:

I thought maybe this had to do with my cooldown. I tried cooldowns from 1 minute to 10 minutes, and it never makes it past this step.

Anyone have any suggestions?

What error do you get? The model name is passed to Ollama directly, so it should be the same model name that you pass to `ollama run`.
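Ollama model names have the form `name:tag`, and a name without a tag refers to the `:latest` tag, so `llama3.2-vision` on its own resolves to `llama3.2-vision:latest` (the 11b model in the setup above). The sketch below normalizes a name that way, checks it against the installed models, and builds a request body for Ollama's `/api/generate` endpoint. The helper names are hypothetical; the payload fields (`model`, `prompt`, `images` as base64 strings, `stream`) follow Ollama's REST API.

```python
import base64
import json


def normalize_model_name(name: str) -> str:
    """Append Ollama's default ':latest' tag when no tag is given."""
    name = name.strip()
    # Only the last path segment can carry a tag (a registry host may contain ':').
    last_segment = name.rsplit("/", 1)[-1]
    return name if ":" in last_segment else f"{name}:latest"


def resolve_model(requested: str, installed: list[str]) -> str:
    """Return the fully tagged model name, or raise if it isn't installed."""
    full = normalize_model_name(requested)
    installed_full = {normalize_model_name(m) for m in installed}
    if full not in installed_full:
        raise ValueError(f"{full!r} not installed; available: {sorted(installed_full)}")
    return full


def build_generate_payload(model: str, prompt: str, image_bytes: bytes) -> str:
    """JSON body for POST /api/generate with one base64-encoded image."""
    return json.dumps({
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    })
```

Running `ollama list` shows the exact installed names to compare against.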

Did you set the camera entities? It will only send notifications if the event has been triggered by one of the camera entities you set.
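The two conditions mentioned here and in the question (the trigger must come from one of the configured camera entities, and the cooldown must have elapsed) can be modeled roughly as below. This is a simplified sketch of that gating logic under those assumptions, not the blueprint's actual implementation, and the entity IDs in the usage are hypothetical. It shows why changing the cooldown alone cannot help: a trigger from an unlisted camera is rejected before the cooldown is even considered.

```python
from datetime import datetime, timedelta
from typing import Optional


class EventGate:
    """Decide whether a motion event should trigger an AI summary."""

    def __init__(self, camera_entities: set[str], cooldown: timedelta):
        self.camera_entities = camera_entities
        self.cooldown = cooldown
        self._last_run: Optional[datetime] = None

    def should_run(self, triggered_by: str, now: datetime) -> bool:
        # Ignore triggers from cameras that weren't configured.
        if triggered_by not in self.camera_entities:
            return False
        # Skip events that arrive before the cooldown has elapsed.
        if self._last_run is not None and now - self._last_run < self.cooldown:
            return False
        self._last_run = now
        return True
```

For example, `EventGate({"camera.back_door"}, timedelta(minutes=1)).should_run("camera.front_door", now)` is always `False`, whatever the cooldown.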