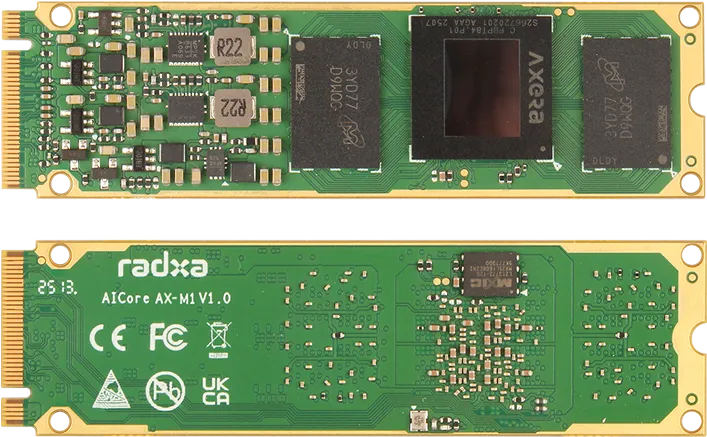

The M5Stack LLM-8850 is a new M.2 M-Key (2242 NGFF socket) AI accelerator card based on the Axera AX8850 SoC, which has a 24 TOPS @ INT8 NPU:

This new LLM-8850 M.2 AI accelerator module is a less expensive alternative that competes with the Hailo-8 based Raspberry Pi AI Kit and the Waveshare Hailo-8 M.2 AI Accelerator Module. Just like those, it is designed primarily for “Edge AI” on single-board computers such as the Raspberry Pi 5 / CM5, Rockchip RK3588 SBCs (for example NanoPC-T6, NanoPi R6C, Orange Pi 5 Plus, Banana Pi BPI-M7), and small-form-factor x86-64 mini PCs (like the Intel NUC series) with a spare M.2 Key-M socket.

On paper, however, the Axera AX8850 SoC edge NPU coprocessor is far superior for general AI acceleration / generative AI, and thus more flexible than the Hailo-8 M.2 cards, which are more or less optimized for computer vision applications.

Wondering if we could get support for this by default in the official Home Assistant Operating System (Linux distribution)?

The M5Stack LLM-8850 card uses a PCIe 2.0 ×2 interface and has 8GB of onboard RAM (64-bit LPDDR4x @ 4266 Mbps). Its NPU is capable of up to 24 TOPS @ INT8, and the Axera AX8850 SoC it is based on also has an octa-core Cortex‑A55 CPU at 1.7 GHz. It supports H.265/H.264 8Kp30 video encoding and 8Kp60 video decoding, with up to 16 channels of 1080p video, so besides real-time AI object detection (similar to the Google Coral M.2 and mini-PCIe Edge TPU coprocessor cards), another use case would be on-the-fly video transcoding acceleration for Frigate NVR. The main downside (other than it only having 8GB of memory) is that the M5Stack LLM-8850 card runs hotter and so needs a fan for active cooling: at maximum load it uses 7W @ 3.3V and runs at 70 °C (so it would probably get way too hot to run inside the Home Assistant Yellow enclosure).
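To put those spec-sheet numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It only derives theoretical peaks from the figures quoted above (64-bit LPDDR4x @ 4266 Mbps, PCIe 2.0 ×2, 7W @ 3.3V); these are not measurements. The function names are my own, and the PCIe numbers (5 GT/s per lane, 8b/10b encoding) are the generic PCIe 2.0 values, not anything specific to this card.

```python
# Back-of-the-envelope figures derived from the quoted specs.
# These are theoretical peaks, not measured results.

def lpddr_peak_bandwidth_gb_s(bus_width_bits: int, rate_mbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s (decimal) for a given bus width and per-pin data rate."""
    return bus_width_bits * rate_mbps_per_pin / 8 / 1000


def pcie2_bandwidth_gb_s(lanes: int) -> float:
    """Peak PCIe 2.0 payload bandwidth per direction in GB/s.

    Assumes the generic PCIe 2.0 values: 5 GT/s per lane with 8b/10b encoding.
    Protocol overhead is ignored, so real throughput will be lower.
    """
    raw_gt_per_s = 5.0
    encoding_efficiency = 8 / 10
    return lanes * raw_gt_per_s * encoding_efficiency / 8


def current_amps(power_watts: float, voltage_volts: float) -> float:
    """Current draw in amps for a given power at a given supply voltage."""
    return power_watts / voltage_volts


if __name__ == "__main__":
    print(f"LPDDR4x peak bandwidth: {lpddr_peak_bandwidth_gb_s(64, 4266):.3f} GB/s")
    print(f"PCIe 2.0 x2 peak bandwidth: {pcie2_bandwidth_gb_s(2):.1f} GB/s per direction")
    print(f"Current at full load: {current_amps(7, 3.3):.2f} A on the 3.3V rail")
```

So the onboard memory is much faster than the link to the host (roughly 34 GB/s versus 1 GB/s per direction), which is why a model has to fit in the card's own 8GB, and the host's M.2 slot needs to be able to supply a bit over 2 A at 3.3V.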

M5Stack sells the Axera AX8850 M.2 module for US$99 in its own online store (and on its own AliExpress store):

PS: M5Stack is a subsidiary of Espressif (of ESP32/ESP8266 fame):