I did get an error this morning (I haven’t checked whether there were more, but I saw these after a restart):

Looks like these relate to the manual fixes mentioned above?

Unhandled database error while processing task CommitTask(): (MySQLdb.DataError) (1406, "Data too long for column 'event_type' at row 1") [SQL: INSERT INTO events (event_type, event_data, origin, origin_idx, time_fired, context_id, context_user_id, context_parent_id, data_id) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)] [parameters: ('recorder_5min_statistics_generated', None, None, 0, datetime.datetime(2022, 12, 13, 9, 30, 10, 480968, tzinfo=datetime.timezone.utc), '01GM5D3XNGB91RANM0XT1CGHRQ', None, None, None)] (Background on this error at: https://sqlalche.me/e/14/9h9h)

Unhandled database error while processing task CommitTask(): (MySQLdb.DataError) (1406, "Data too long for column 'event_type' at row 1") [SQL: INSERT INTO events (event_type, event_data, origin, origin_idx, time_fired, context_id, context_user_id, context_parent_id, data_id) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)] [parameters: ('recorder_5min_statistics_generated', None, None, 0, datetime.datetime(2022, 12, 13, 9, 35, 10, 427349, tzinfo=datetime.timezone.utc), '01GM5DD2JVKS40GFT2K9RQ38TE', None, None, None)] (Background on this error at: https://sqlalche.me/e/14/9h9h)

Unhandled database error while processing task CommitTask(): (MySQLdb.DataError) (1406, "Data too long for column 'event_type' at row 1") [SQL: INSERT INTO events (event_type, event_data, origin, origin_idx, time_fired, context_id, context_user_id, context_parent_id, data_id) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)] [parameters: ('recorder_5min_statistics_generated', None, None, 0, datetime.datetime(2022, 12, 13, 9, 40, 10, 465530, tzinfo=datetime.timezone.utc), '01GM5DP7K13H400BZ997D4AXE3', None, None, None)] (Background on this error at: https://sqlalche.me/e/14/9h9h)

Unhandled database error while processing task CommitTask(): (MySQLdb.DataError) (1406, "Data too long for column 'event_type' at row 1") [SQL: INSERT INTO events (event_type, event_data, origin, origin_idx, time_fired, context_id, context_user_id, context_parent_id, data_id) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)] [parameters: ('recorder_5min_statistics_generated', None, None, 0, datetime.datetime(2022, 12, 13, 9, 45, 10, 492551, tzinfo=datetime.timezone.utc), '01GM5DZCJWRZ033YA03ZYEAKFW', None, None, None)] (Background on this error at: https://sqlalche.me/e/14/9h9h)

Unhandled database error while processing task CommitTask(): (MySQLdb.DataError) (1406, "Data too long for column 'event_type' at row 1") [SQL: INSERT INTO events (event_type, event_data, origin, origin_idx, time_fired, context_id, context_user_id, context_parent_id, data_id) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)] [parameters: ('recorder_5min_statistics_generated', None, None, 0, datetime.datetime(2022, 12, 13, 9, 50, 10, 441131, tzinfo=datetime.timezone.utc), '01GM5E8HG9SPAQHDXGM0GNG8XY', None, None, None)] (Background on this error at: https://sqlalche.me/e/14/9h9h)

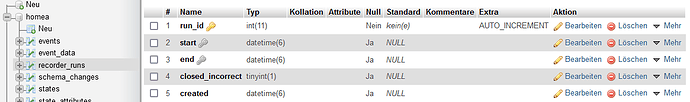

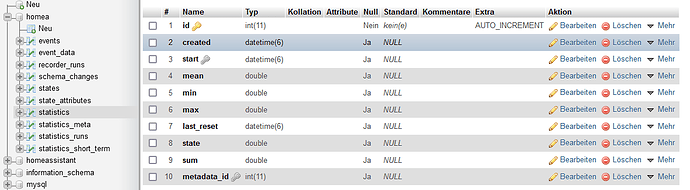

So I just changed the allowed length of the column ‘event_type’ from 32 to 64. That should resolve it, if I understand the error message properly.
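As a quick sanity check of that reading, here is a minimal Python sketch that compares the event name from the log with the old and new column limits. The constant and function names are made up for illustration, and the `ALTER TABLE` statement in the comment is only an assumption about how such a change might be written in MySQL/MariaDB, not necessarily what was run here.

```python
# Event name copied from the failing INSERT in the log above.
EVENT_TYPE = "recorder_5min_statistics_generated"

# Limits taken from the post: the column allowed 32 characters, now 64.
OLD_LIMIT = 32
NEW_LIMIT = 64


def fits(value: str, limit: int) -> bool:
    """Return True if the value fits in a VARCHAR(limit) column (length in characters)."""
    return len(value) <= limit


# Assumption: a change like this could be expressed in MySQL/MariaDB as
#   ALTER TABLE events MODIFY event_type VARCHAR(64);
# MODIFY redefines the whole column, so check the existing definition
# (e.g. with SHOW CREATE TABLE events) and take a backup first.

if __name__ == "__main__":
    print(f"length of event name: {len(EVENT_TYPE)}")
    print(f"fits in {OLD_LIMIT}: {fits(EVENT_TYPE, OLD_LIMIT)}")
    print(f"fits in {NEW_LIMIT}: {fits(EVENT_TYPE, NEW_LIMIT)}")
```

The event name is 34 characters long, so it overflows a 32-character column but fits comfortably in 64, which matches the "Data too long" error.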