2023’s Year of the Voice built a solid foundation for letting users control Home Assistant by speaking in their own language.

We continue with improvements to Assist, including:

- Sentence trigger responses

- Improved errors and debugging

Oh, and “one more thing”: on-device, open source wake word detection in ESPHome! 🥳🥳🥳

Check out this video of the new microWakeWord system running on an ESP32-S3-BOX-3 alongside one doing wake word detection inside Home Assistant:

On-device vs. streaming wake word

microWakeWord

Thanks to the incredible microWakeWord created by Kevin Ahrendt, ESPHome can now perform wake word detection on devices like the ESP32-S3-BOX-3. You can install it on your S3-BOX-3 today to try it out.

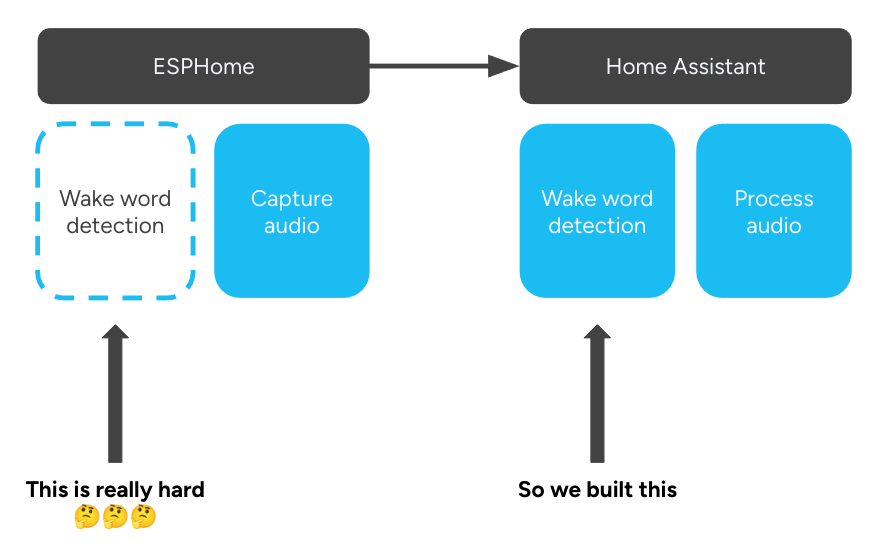

Back in Chapter 4, we added wake word detection using openWakeWord. Unfortunately, openWakeWord was too large to run on low-power devices like the S3-BOX-3, so we chose to run wake word detection inside Home Assistant instead.

Doing wake word detection in HA allows tiny devices like the M5 ATOM Echo Development Kit to simply stream audio and let all of the processing happen elsewhere. This is great, as it lets low-powered devices with a simple ESP32 chip become voice assistants even though they lack the processing power to detect wake words themselves. The downside is that every additional voice assistant adds CPU load in HA as well as network traffic.
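To put the network cost of streaming in rough numbers, the sketch below estimates the raw audio bandwidth when every satellite streams continuously to HA for wake word detection. It assumes uncompressed 16 kHz, 16-bit, mono PCM audio and ignores protocol overhead; both are simplifying assumptions for illustration, not measured figures.

```python
# Rough estimate of the cost of streaming audio to HA for wake word detection.
# Assumptions (illustrative only): raw PCM, 16 kHz, 16-bit samples, mono,
# no compression, protocol overhead ignored.

SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2  # 16-bit samples
CHANNELS = 1


def stream_kbps(devices: int) -> float:
    """Total raw audio bitrate in kilobits per second for N streaming devices."""
    bits_per_second = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * 8 * CHANNELS
    return devices * bits_per_second / 1000


def gigabytes_per_day(devices: int) -> float:
    """Total raw audio volume per day in gigabytes (10**9 bytes) for N devices."""
    bytes_per_second = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS
    return devices * bytes_per_second * 86_400 / 1e9


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(f"{n:>2} device(s): {stream_kbps(n):7.0f} kbit/s, "
              f"{gigabytes_per_day(n):6.2f} GB/day")
```

Under these assumptions one streaming satellite needs about 256 kbit/s (roughly 2.76 GB per day), and the total grows linearly with every device added. On-device detection sidesteps this, since audio only needs to be streamed once the wake word has been heard.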

Enter microWakeWord. After listening to an interview with Paulus Schoutsen (founder of Home Assistant) on the Self Hosted podcast, Kevin Ahrendt created a model based on Google’s Inception neural network. As an existing contributor to ESPHome, Kevin was able to get this new model running on the ESP32-S3 chip inside the S3-BOX-3! (It also works on the now-discontinued S3-BOX and S3-BOX-Lite.)
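This post does not describe microWakeWord's internals beyond its Inception-based model, so the following is only a generic illustration of how a streaming detector commonly turns per-frame model probabilities into a single wake decision: average the most recent scores over a sliding window and fire when the average crosses a cutoff. The class name, window size, and cutoff are hypothetical and are not microWakeWord's actual code or defaults.

```python
from __future__ import annotations

from collections import deque


class SlidingWindowDetector:
    """Hypothetical post-processing: fire when the mean of recent scores passes a cutoff."""

    def __init__(self, window_size: int = 5, cutoff: float = 0.8) -> None:
        self.cutoff = cutoff
        # Only the most recent `window_size` probabilities are kept.
        self.scores: deque[float] = deque(maxlen=window_size)

    def feed(self, probability: float) -> bool:
        """Add one model output (0.0 to 1.0) and report whether the wake word fired."""
        self.scores.append(probability)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough history yet
        average = sum(self.scores) / len(self.scores)
        if average >= self.cutoff:
            self.scores.clear()  # reset so one utterance triggers only once
            return True
        return False


if __name__ == "__main__":
    detector = SlidingWindowDetector(window_size=3, cutoff=0.8)
    frames = [0.1, 0.2, 0.9, 0.95, 0.97, 0.3]  # made-up model outputs
    print([detector.feed(p) for p in frames])
    # prints [False, False, False, False, True, False]
```

Averaging over a window smooths out single-frame spikes, trading a little latency for fewer false wakes; a larger window or higher cutoff pushes further in that direction.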

Kevin has trained three models for the launch of microWakeWord:

- “okay nabu”

- “hey jarvis”

- “alexa”

You can try these out yourself now by following the ESP32-S3-BOX tutorial. Changing the default “okay nabu” wake word will require adjusting your ESPHome configuration and recompiling the firmware, which may take a long time and requires a machine with more than 2GB of RAM.

We’re grateful to Kevin for developing microWakeWord, and making it a part of the open home!

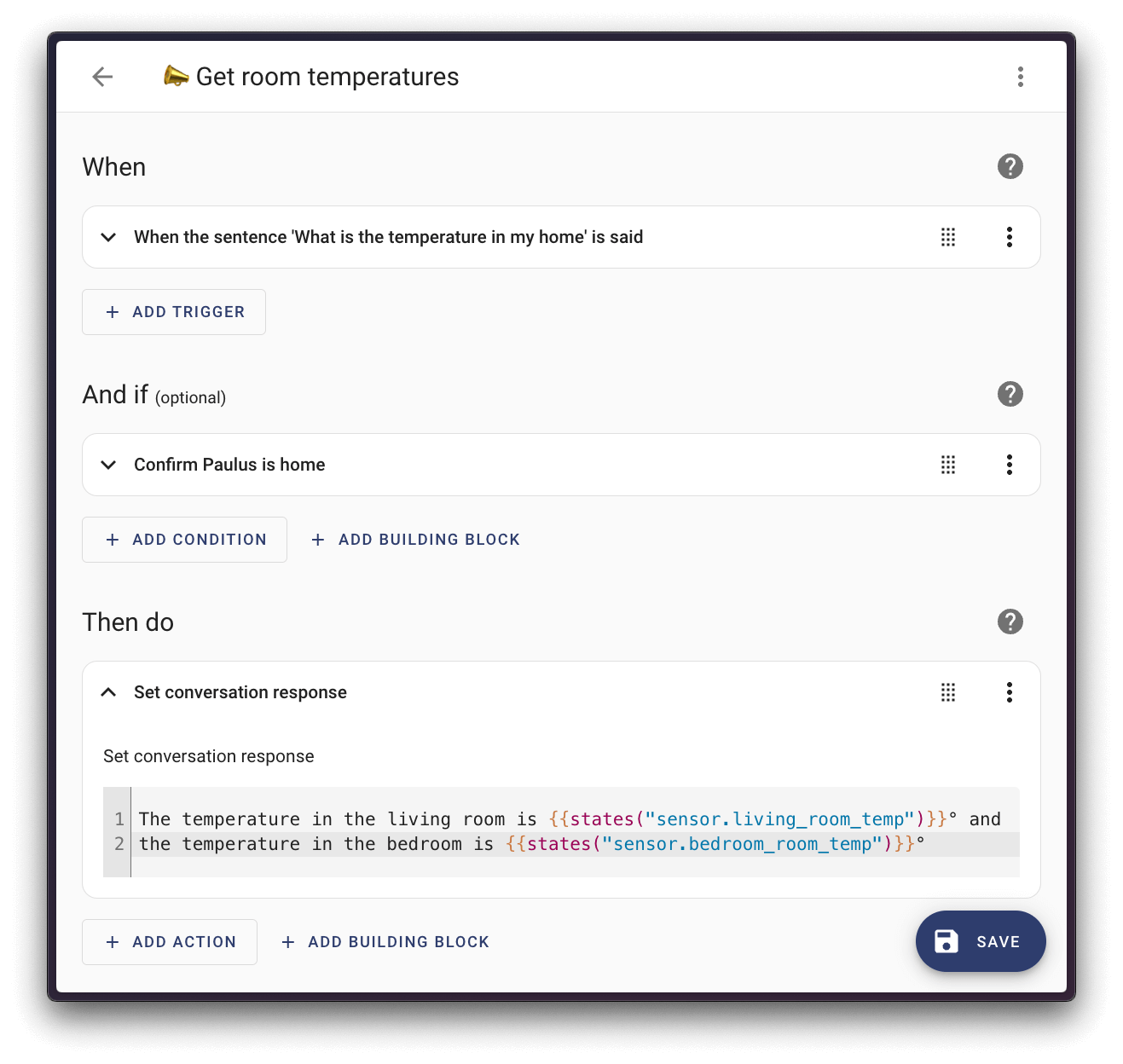

Sentence trigger responses

Adding custom sentences to Assist is as easy as adding a sentence trigger to an automation. This allows you to trigger any action in Home Assistant with whatever sentences you want.

Now with the new conversation response action in HA 2024.2, you can also customize the response spoken or printed back to you. Using templating, your response can refer to the current state of your home.

You can also refer to wildcards in your sentence trigger. For example, the sentence trigger:

play {album} by {artist}

could have the response:

Playing {{ trigger.slots.album }} by {{ trigger.slots.artist }}

in addition to calling a media service.
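To illustrate what the wildcards do, here is a toy Python approximation: it turns a sentence template such as `play {album} by {artist}` into a regular expression, captures each wildcard as a slot, and fills the response from those slots. Home Assistant's real sentence matching (the hassil library) and its Jinja2 templating are far more capable; the function names below are hypothetical and only mimic the `trigger.slots` idea.

```python
from __future__ import annotations

import re


def template_to_regex(template: str) -> re.Pattern[str]:
    """Convert 'play {album} by {artist}' into a regex with named groups.

    Toy approximation: each {name} wildcard matches one or more characters,
    and the whole sentence is matched case-insensitively.
    """
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for i, part in enumerate(parts):
        if i % 2 == 0:
            pattern += re.escape(part)  # literal text between wildcards
        else:
            pattern += f"(?P<{part}>.+)"  # wildcard slot
    return re.compile(pattern, re.IGNORECASE)


def render_response(response: str, slots: dict[str, str]) -> str:
    """Replace {{ trigger.slots.NAME }} placeholders with captured slot values."""
    return re.sub(
        r"\{\{\s*trigger\.slots\.(\w+)\s*\}\}",
        lambda m: slots[m.group(1)],
        response,
    )


def handle(sentence: str, template: str, response: str) -> str | None:
    """Return the rendered response if the sentence matches the template, else None."""
    match = template_to_regex(template).fullmatch(sentence.strip())
    if match is None:
        return None
    return render_response(response, match.groupdict())


if __name__ == "__main__":
    print(handle(
        "play Abbey Road by The Beatles",
        "play {album} by {artist}",
        "Playing {{ trigger.slots.album }} by {{ trigger.slots.artist }}",
    ))
    # prints: Playing Abbey Road by The Beatles
```

Because each wildcard here is greedy, a title that itself contains "by", such as "Stand by Me by Ben E. King", still splits at the last "by".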

You can experiment now with sentence triggers and custom conversation responses in our automation editor.

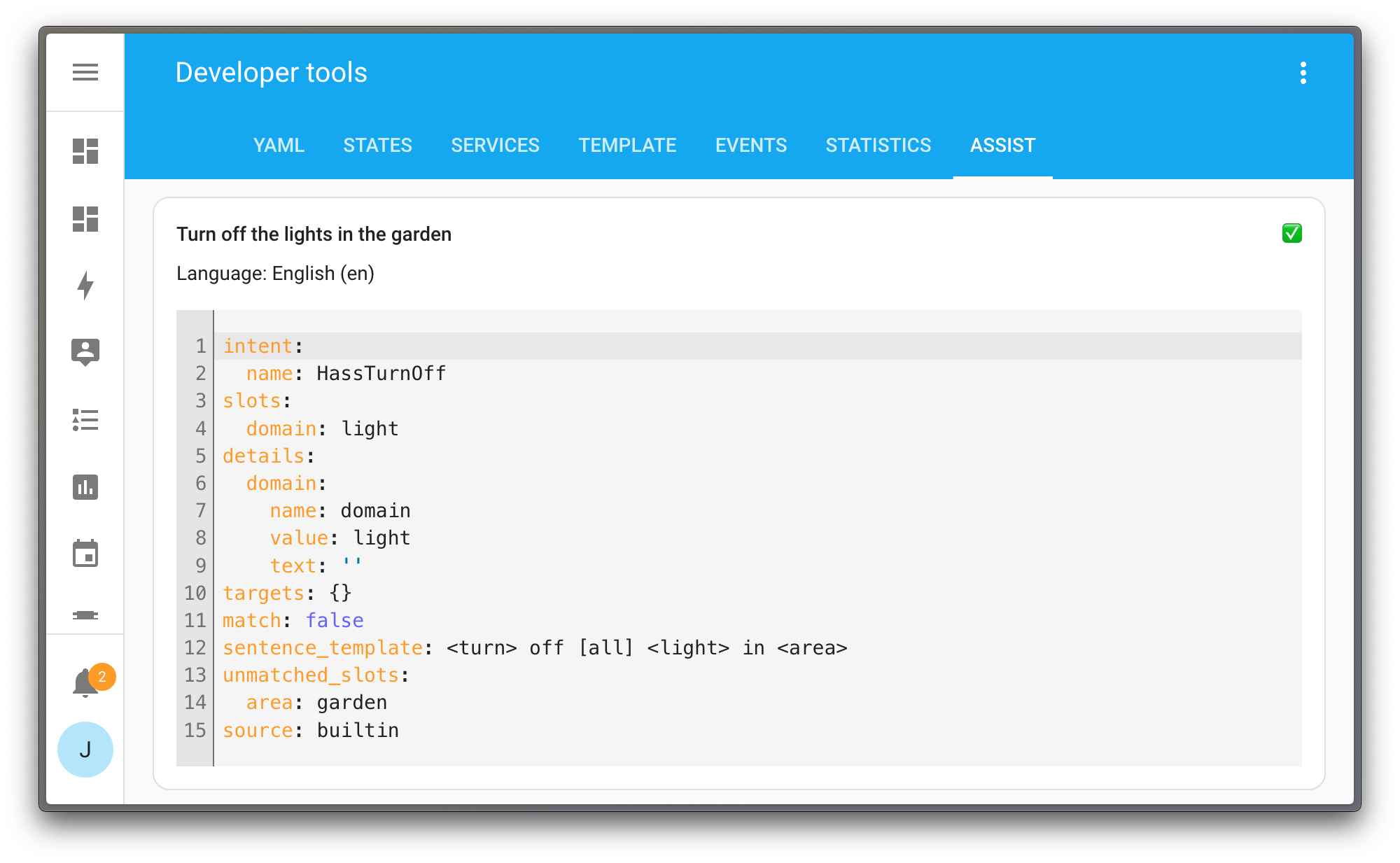

Improved errors and debugging

Assist users know the phrase “Sorry, I couldn’t understand that” all too well. This generic error message was given for a variety of reasons, such as:

- The sentence didn’t match any known intent

- The device/area names didn’t match

- There weren’t any devices of a specific type in an area (lights, windows, etc.)

Starting in HA 2024.2, Assist provides different error messages for each of these cases.
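The idea behind the new messages can be sketched as checking the failure cases listed above in order and returning a specific message for the first one that applies. This is a hypothetical illustration: the enum, the `ParseResult` fields, the check order, and the message wording (apart from the original generic message) are assumptions, not Home Assistant's actual error codes or texts.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, auto


class Failure(Enum):
    NO_INTENT = auto()           # sentence didn't match any known intent
    NO_NAME_MATCH = auto()       # device/area name not recognized
    NO_DEVICES_OF_TYPE = auto()  # area has no devices of the requested type


@dataclass
class ParseResult:
    """Hypothetical summary of what the sentence-matching step found."""
    intent: str | None
    name: str | None = None
    name_known: bool = True
    area: str | None = None
    domain: str | None = None
    matching_devices: int = 0


def diagnose(result: ParseResult) -> tuple[Failure, str] | None:
    """Return the first applicable failure and a specific message, or None if OK."""
    if result.intent is None:
        return Failure.NO_INTENT, "Sorry, I couldn't understand that"
    if not result.name_known:
        return Failure.NO_NAME_MATCH, f"No device or area named {result.name}"
    if result.domain and result.matching_devices == 0:
        return Failure.NO_DEVICES_OF_TYPE, f"{result.area} has no {result.domain}"
    return None  # no error; the intent can be handled


if __name__ == "__main__":
    print(diagnose(ParseResult(intent="HassTurnOn", area="Kitchen", domain="lights")))
```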

Now if you encounter errors, you will know where to start looking! The first thing to check is that your device is exposed to Assist. Some types of devices, such as lights, are exposed by default. Others, like locks, are not and must be exposed manually.

Once your devices are exposed, make sure you’ve added an appropriate alias so Assist will know exactly how you’ll be referring to them. Devices and areas can have multiple aliases, even in multiple languages, so everyone’s preference can be accommodated.
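A minimal sketch of why exposure and aliases both matter: the lookup below only considers exposed devices and compares the spoken name against each device's name and aliases, case-insensitively. The `Device` type, its `exposed` flag, and the `resolve` function are hypothetical and far simpler than Assist's real matching.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    aliases: list[str] = field(default_factory=list)
    exposed: bool = False  # hypothetical flag mirroring "exposed to Assist"


def resolve(spoken: str, devices: list[Device]) -> Device | None:
    """Return the first exposed device whose name or alias matches `spoken`."""
    wanted = spoken.strip().casefold()
    for device in devices:
        if not device.exposed:
            continue  # unexposed devices are invisible to Assist
        names = [device.name, *device.aliases]
        if any(n.casefold() == wanted for n in names):
            return device
    return None


if __name__ == "__main__":
    devices = [
        # English and Dutch aliases for the same light
        Device("Living Room Ceiling", aliases=["big light", "grote lamp"], exposed=True),
        Device("Front Door Lock", aliases=["front door"]),  # not exposed
    ]
    print(resolve("Big Light", devices))   # finds the ceiling light
    print(resolve("front door", devices))  # None until the lock is exposed
```

Here the lock has a perfectly good alias but is still unreachable, which is why checking exposure comes before adding aliases.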

If you are still having problems, the Assist debug tool has also been improved. Using the tool, you can see how Assist is interpreting a sentence, including any missing pieces.

Our community language leaders are hard at work translating sentences for Assist. If you have suggestions for new sentences to be added, please create an issue on the intents repository or drop us a line by email.

Thank you

Thank you to the Home Assistant community for subscribing to Home Assistant Cloud to support voice and the development of Home Assistant, ESPHome, and other projects in general.

Thanks to our language leaders for extending the sentence support to all the various languages.

This is a companion discussion topic for the original entry at https://www.home-assistant.io/blog/2024/02/21/voice-chapter-6