HA Version: 2025.7.4

Extended OpenAI Conversation: Version 1.0.5

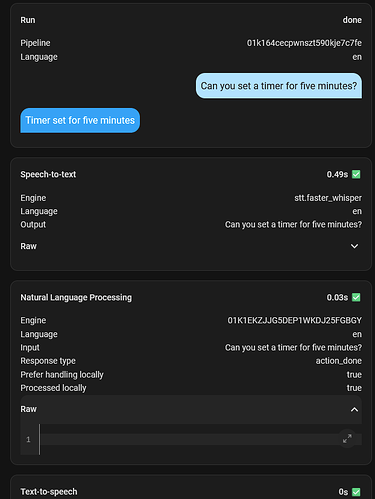

As you can see from the debug output, when I ask it to set a timer the NLP thinks it succeeded, but the data is empty. So it succeeded in doing nothing.

Setting the timer is done through a script and I’ve tried to be descriptive.

    alias: Set a timer
    mode: restart
    fields:
      duration:
        description: Timer duration in format HH:MM:SS or natural like "5 minutes"
        example: "00:05:00"
        default: "00:01:00"
        required: true
    sequence:
      - data:
          duration: "{{ duration | as_timedelta }}"
        action: timer.start
        target:
          entity_id:
            - timer.timer
    description: >-
      A script to set countdown timer with required field duration in format
      HH:MM:SS. For example: Set a timer to 5 seconds use duration: 00:00:05
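One way to check the script independently of the agent is to call it by hand with an explicit duration. A minimal sketch, assuming the script's entity ID is `script.set_a_timer` (a guess derived from the alias; the real ID may differ):

```yaml
# Paste into Developer Tools > Actions (YAML mode).
# script.set_a_timer is an assumed entity ID; use the one shown for your script.
action: script.set_a_timer
data:
  duration: "00:05:00"  # passed to the script's "duration" field
```

If `timer.timer` starts counting down from five minutes, the script itself is fine and the empty data is coming from how the agent calls it.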

In my debugging efforts I tried talking to the local OpenAI agent and asking it for information about the script. Can it read the YAML? No. Can it detect any parameters (fields)? No.

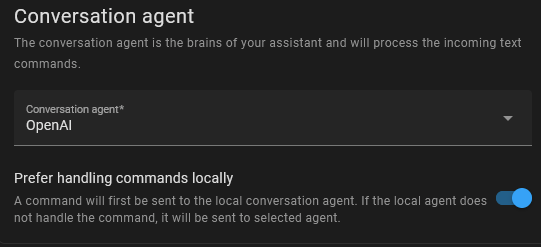

I toggled on “Use tools” in the OpenAI integration, since that is necessary for it to actually interact with and run scripts.

I have not made any intent scripts. As I understand it, that is supposed to be the power of running OpenAI as an agent: it can deduce what it needs to do based on good naming schemes and descriptions.

I’ve modified my prompt somewhat:

Act as smart home manager of Home Assistant.

I will provide information of smart home along with a question, you will truthfully make correction or answer using information provided in one sentence in everyday language.

Do not ask for confirmation when I give a command.

You have all the knowledge in the world and will answer any question truthfully and to your fullest capability.

You're allowed to add some unique humor, now and then.

Current Time: {{now()}}

Available Devices:

csv

entity_id,name,state,aliases

{% for entity in exposed_entities -%}

{{ entity.entity_id }},{{ entity.name }},{{ entity.state }},{{entity.aliases | join('/')}}

{% endfor -%}

The current state of devices is provided in available devices.

Use execute_services function only for requested action, not for current states.

Do not restate or appreciate what user says, rather make a quick inquiry.

I had to remove the backticks in the middle there for formatting purposes.

I’ve tried adding a paragraph on how to read the fields dictionary in YAML scripts and such. I also tried being more vague in case it has other ways of figuring out the fields. No luck.

I’ve spent the entire day scouring the web for information on this and I’ve still not solved it.

So any help in pointing me in the right direction would be awesome!

Edit:

I figured it out. The OpenAI agent can run scripts without parameters as long as they are exposed.

But for scripts with parameters, you have to design your scripts with “Fields”, and then you have to define how to use the script in the “Functions” setting of your OpenAI integration. As far as I know there is no way to dynamically call scripts with parameters.

This lists several examples of how to do it.
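For illustration, a “Functions” entry for the script above might look roughly like this. It is only a sketch following the spec/function layout the integration uses for script-type functions; the function name `set_countdown` and the entity ID `script.set_a_timer` are assumptions, not values taken from my setup.

```yaml
- spec:
    # Hypothetical function name; this is what the model sees and calls.
    name: set_countdown
    description: Start a countdown for the requested duration.
    parameters:
      type: object
      properties:
        duration:
          type: string
          description: Countdown duration in HH:MM:SS format, for example 00:05:00
      required:
        - duration
  function:
    type: script
    sequence:
      # Assumed entity ID for the "Set a timer" script; adjust to your own.
      - service: script.set_a_timer
        data:
          # Forwarded to the script's "duration" field.
          duration: "{{ duration }}"
```

The parameter description asks for HH:MM:SS explicitly, because I would not rely on free-form values like "5 minutes" being parsed by the script.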

PS. I did this with timers, and the agent treats the word “timer” differently. I could not get it to interact properly with a timer at all, so I wrote a script to set it. However, after a long time of testing I tried changing the wording from “timer” to “countdown”. Now when I say “Set a countdown for 5 minutes” the agent no longer gets confused and fires the script properly. I also tried specifying this in the OpenAI prompt, but to no avail. So avoid using the word “timer”.