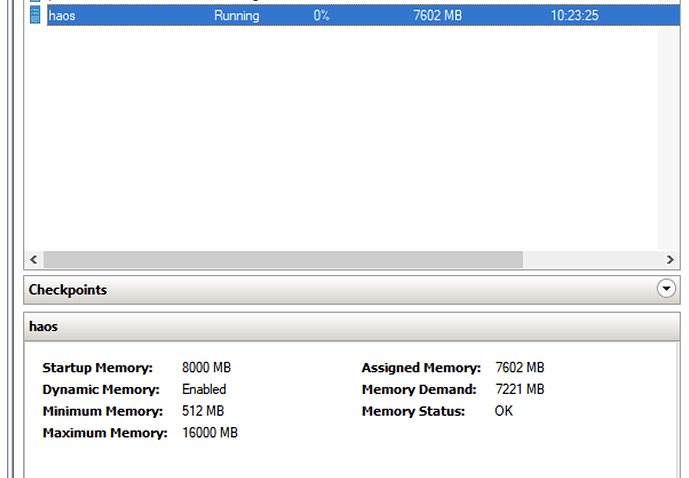

Hi! Is it normal for Home Assistant installed on a Hyper-V virtual machine to reserve almost 8 GB of RAM? I have 16 cores allocated and originally gave it 4 GB of RAM, but with that amount of memory I could not update the system to the latest version: I got a message that memory was exhausted and processes were being killed. Only after increasing the RAM to 8 GB did the update succeed.

At the same time, the Home Assistant interface (Settings > Hardware) shows no more than 1.5 GB of memory in use, while the Hyper-V panel shows almost 8 GB reserved. Is this normal?

It is recommended to have a minimum of 32 GB for HA.

Wow, I've never heard of this. Most people run it on a Raspberry Pi or other mini systems, which rarely have even 4 GB of RAM. So is this normal system behavior?

Sorry, I meant a minimum of 32 GB of disk space. I have seen 4 GB of RAM work fine unless an integration is causing issues.

On ESXi I have 4 cores and 4 GB. ESXi stats show that while the entire RAM is allocated to the VM (which is normal, since I don't over-provision on my system), rarely more than 50% of it is active, so my system should easily survive with only 3 GB allocated. Hyper-V is different, but not to that extreme. Perhaps something is going on because of the very large number of cores configured? On a desktop-class CPU, 2 cores should be sufficient; 4 gives peace of mind for extreme situations.

You've given your VM "permission" to allocate up to 8 GB of RAM, so it isn't wrong that it allocates (not consumes or actively uses) around 7.6 GB.

16 cores is way, way too much. You are oversizing by a lot (which in turn can slow your VM down if you run more VMs on the same host). You can turn it down to 2 or 4.

It seems to me that this is some kind of error in HA, since Hyper-V allocates memory very accurately. I also have two other Alpine Linux instances running Pi-hole, and memory is allocated to them perfectly, about 600 MB.

I would not have paid attention to memory consumption if I had not run into the problem that with 4 GB of RAM I was unable to update and kept getting out-of-memory errors. I couldn't find where that memory went: htop showed less than 2 GB in use, Hyper-V didn't show anything (4 GB was statically assigned), and the HA settings showed 1.5 GB used.

Previously I simply hard-allocated 4 GB of RAM. I had to switch to dynamic allocation because I could not update with either 4 or 6 GB of hard-allocated RAM.

(Regarding the 16 processor cores: I have a home virtualization setup on an overpowered PC for this purpose. The CPU is never loaded, so I don't mind giving 16 cores to HA.)

It’s still way too much. You won’t get any noticeable performance gains. I run mine with 2 cores and 6GB RAM (on ESXi) without any issues.

I will try cutting the cores to 4; maybe this will help with memory allocation too.

It’s not a problem. If memory serves, HA (technically the underlying Linux OS) will use free memory as cache.

https://www.linuxatemyram.com/

Also see:

I was going to add that HA Core isn't using that memory; some add-on or custom integration is. I run HAOS on bare metal, and with 4 GB available it has never used more than 3 GB.

It's insane, but reducing the number of cores also reduces the amount of RAM used: 6 GB with 8 cores and around 5 GB with 4 cores (see memory demand in the screenshot).

It will likely go back up. As my post above shows, if you run `free -m` you will likely see that most of that usage is caching.
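A minimal sketch of the same check in Python, assuming the procps-ng `free` layout (one header row naming the columns, then a `Mem:` row). The function names are hypothetical, and the sample reuses the NAS figures posted in this thread. The idea is that `buff/cache` is reclaimable, so `available` rather than `free` is the better measure of real headroom.

```python
# Sample `free` output (procps-ng layout, values in KiB), taken from the
# NAS figures posted in this thread.
SAMPLE = """\
              total        used        free      shared  buff/cache   available
Mem:        5914776     3471528      244908      240888     2198340     1907036
Swap:       2097084     1055604     1041480
"""


def parse_mem_row(free_output: str) -> dict:
    """Map each column name in the header to its value on the 'Mem:' row.

    Assumes the procps-ng layout: one header line naming the columns,
    followed by a 'Mem:' line with one number per column. Older versions
    of `free` use a different layout and are not handled here.
    """
    rows = [line.split() for line in free_output.strip().splitlines()]
    header = rows[0]
    for fields in rows[1:]:
        if fields and fields[0] == "Mem:":
            return dict(zip(header, (int(v) for v in fields[1:])))
    raise ValueError("no 'Mem:' row found")


def summarize(mem: dict) -> str:
    """Show cache and available memory as shares of total RAM.

    buff/cache can be reclaimed by the kernel when applications need it,
    so 'available' (not 'free') indicates real headroom.
    """
    total = mem["total"]
    return (
        f"cache: {mem['buff/cache'] / total:.0%} of RAM, "
        f"available: {mem['available'] / total:.0%} of RAM"
    )


if __name__ == "__main__":
    print(summarize(parse_mem_row(SAMPLE)))
```

On a live system you would feed in the real output instead, for example `subprocess.run(["free"], capture_output=True, text=True).stdout`. Because only ratios are reported, the same code works for `free` or `free -m` output.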

(Screenshots: `free -m` output, HA System Monitor, Proxmox.)

All of this is absolutely true; I also have no more than 2 GB of memory in use. But I started this topic because 4 GB of RAM was not enough for the update to 2024.5.0, and I'm trying to understand what the right thing to do is and where the mistake is. I even added 10 GB of swap, but that did not help with the update; only increasing the RAM did.

Is it correct to assume that there was some kind of bug during the update and that in normal operation the instance will perform fine? Or, on the contrary, is there a memory leak somewhere, so the node will start throwing out-of-memory errors again in a month or two?

Unused memory is wasted memory. It’s used for caching if available.

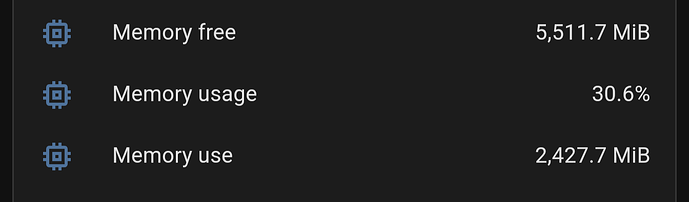

As a data point, I run HA in a Docker container on my NAS, which has 6 GB of RAM, with a 1.5 GB reservation and a 2 GB hard limit.

Actual usage hovers around 30% most of the time. I get an alert if it spikes above 60%, which usually sorts itself out.

Caching is handled by the host, which reports very little unused (free) RAM but over 2 GB for cache:

| KiB | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem | 5914776 | 3471528 | 244908 | 240888 | 2198340 | 1907036 |
| Swap | 2097084 | 1055604 | 1041480 | | | |
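The 60% alert described above amounts to a simple threshold check against the container's hard limit. This is a minimal sketch with hypothetical names, not the poster's actual alerting setup. It assumes the 2 GB limit means 2 GiB, and that the current usage figure comes from elsewhere (for example `docker stats`).

```python
LIMIT_BYTES = 2 * 1024**3  # 2 GB hard limit from the post, assumed to be 2 GiB
ALERT_RATIO = 0.60         # alert threshold from the post


def usage_ratio(used_bytes: int, limit_bytes: int = LIMIT_BYTES) -> float:
    """Fraction of the container's memory limit currently in use."""
    if limit_bytes <= 0:
        raise ValueError("limit must be positive")
    return used_bytes / limit_bytes


def should_alert(used_bytes: int,
                 limit_bytes: int = LIMIT_BYTES,
                 threshold: float = ALERT_RATIO) -> bool:
    """True when usage exceeds the threshold fraction of the limit."""
    return usage_ratio(used_bytes, limit_bytes) > threshold


if __name__ == "__main__":
    typical = int(0.30 * LIMIT_BYTES)  # the ~30% usage seen most of the time
    spike = int(0.75 * LIMIT_BYTES)    # a hypothetical spike
    print(should_alert(typical), should_alert(spike))
```

Comparing against the container limit rather than host RAM matches the setup described: the host's cache does not count toward the container's usage, so the ratio reflects what HA itself is consuming.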

Thank you for explanation!

Guys, I looked through the patch notes of the plugins I have installed, and it turned out that the Dreame vacuum cleaner integration was to blame. Here is the link to it on Git.