You can keep whatever data as long as you want but you have to include whatever entities for so long so doing entire domains more then 30 days would make things grow really quick unless you just pick the entities you want to keep. You can keep some for a year but your DB is probably not going to grow real quick (20 to maybe 30 specific entities, maybe more) but 100, and depending on how much data those entities collect, it will add up rather quickly and use up more resources. I do wish there was a way to purge some records at 2 days and other much longer and maybe there is and I am just no aware of it. If anyone is, please let me know.

If you pick a just a few to run like that. Most stuff you don’t need to know anything more then 2 days, especially if you keep your recorder and history domains, included-domains (bad idea all around) and any specific entities that you need to keep and don’t keep the rest unless you care and are collecting it for SOME reason. Whether that is saving energy or using it via smart plugs and possible saving turning the TV on and off (unless they run through those smart plugs, then who needs those power sensors). Unless you are doing averages to see how much each is off or not.

Like most things in HA, look at your resources also and not just the drive because resources are actually more important. See what all is being written to the database, learn a few basic SQL queries. There are easy once you get the hang of the syntax. So we know there is a DB “domain” none as homeassistant using the default docs. That or hassio, one or the other but there are add ons for SQL explorers or programs you can download and connect and query the DB if needed.

Starting of with seeing the tables in a gui then connecting and doing a (select * from schema.table;) or whatever the syntax is. You will start to see patters, typically tables with id’s linked. Then you can do a (select * from schema.table where schema.tablle.column.entity_id =112); Because you saw in another table that entity_id was entity TV (or whatever). Main thing is if that resources are not overutilized and it’s not a disk space issue on the HA server/docker. As you can imagine, doing reordering and history on 3 years worth of a few entities maybe be 5GB (or more), but it’s also working less. When you record everything and have 200 entities then it really slows things down. AND adds up on space Now, backups take a while if you go the long roughs, no way around that, unless you are running HA on something a bit more powerful or doing a partial backup without MariaDB. A Pi 4 will take a good hour possible. It takes me close to 30 minutes and I am running it on a five year old Nuc, first one with an i5) with a sata nvme mini like an older laptop and 8GB of RAM although 2GB’s are almost never even in use. CPU is still at 25% to 30% during backups, and I don’t really to know how to look at the disk activity. I’m sure there are ways through terminal but I am not that Linux savvy unfortunately (although I have learned a lot)

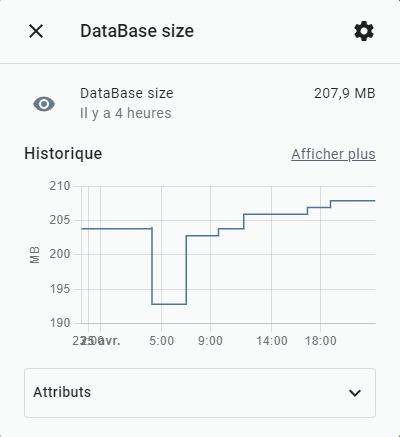

But as always, goes by the docks. It would appear you would need to do both a purge and rapack the database for it to actually reduce in side which can be done in Developer Tools under services although I do recommend writing an automation, just in case.

I may have said a lot for nothing, and you may already know. Just see way to many people add history and recorder to their configuration.yaml file and everything is just slow because it’s not just the data in the DB, it’s the amounts of different data, meaning more tables, meaning more complexity, which means more CPU and disk resources going to writing all that data constantly. Instead of just the needed things.

auto_purge boolean (optional, default: true)

Automatically purge the database every night at 04:12 local time. Purging keeps the database from growing indefinitely, which takes up disk space and can make Home Assistant slow. If you disable auto_purge it is recommended that you create an automation to call the recorder.purge periodically.

auto_repack boolean (optional, default: true)

Automatically repack the database every second sunday after the auto purge. Without a repack, the database may not decrease in size even after purging, which takes up disk space and can make Home Assistant slow. If you disable auto_repack it is recommended that you create an automation to call the recorder.purge periodically. This flag has no effect if auto_purge is disabled. I just the first option, that way you can just purge what you don’t need.

SERVICE PURGE_ENTITIES

Call the service recorder.purge_entities to start a task that purges events and states from the recorder database that match any of the specified entity_id, domains and entity_globs fields. Leaving all three parameters empty will result in all entities being selected for purging.

purge_keep_days integer (optional, default: 10)

Specify the number of history days to keep in recorder database after a purge.