Thanks! Going to give it a try this weekend.

Did you ever find a way to work with the Jetson? Even with the CUDA Docker image or anything?

I ended up getting a Jetson; it arrived a few days ago. I haven’t gotten it working yet, but my limited knowledge of containers is the main issue, not that it can’t be done. That, and a lot of simple tools I typically use don’t work on it, like Nano, and I’m not a fan of Vim (yes, I know it’s great once you learn it). Looks like I should have gone this route and just configured it from another PC. I will give an update once I have had time to mess with it again.

It’s 100 percent doable. There is even a package for it with all the voice stuff added. Some of the Docker networking stuff is just throwing me off a bit.

FYI [WIP] Add initial GPU support by edurenye · Pull Request #4 · rhasspy/wyoming-addons · GitHub should add GPU support to Whisper once merged (I hacked something together from that to get it working).

If on Fedora, you’ll need to have run the Ollama installer to configure a few things on your NVIDIA-bearing system, then you need to do the following (this is my own automation scripting language, but you can infer what it does):

    nct = Pkg.installed("golang-github-nvidia-container-toolkit").requisite

    cdi_path = File.directory("/etc/cdi", require=[nct]).requisite

    cdi = Cmd.run(
        "nvidia-ctk cdi generate --output=/etc/cdi/nvidia.yaml",
        creates="/etc/cdi/nvidia.yaml",
        require=[cdi_path],
    ).requisite

    sebool = Selinux.boolean(
        "NVIDIA access to Whisper",
        name="container_use_devices",
        value=True,
        persist=True,
    ).requisite

Then the container should be run with the arguments --device cuda; otherwise it won’t use GPU acceleration.
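For readers who do not use that automation tool, the same steps can be sketched as plain commands. This is only a minimal sketch, assuming a Fedora host with dnf and root privileges. The helper names `gpu_setup_commands` and `apply` are hypothetical; the package name, the nvidia-ctk invocation, and the SELinux boolean are taken from the snippet above.

```python
import os
import subprocess

CDI_SPEC = "/etc/cdi/nvidia.yaml"


def gpu_setup_commands(cdi_spec=CDI_SPEC, spec_exists=None):
    """Return commands mirroring the automation snippet (hypothetical helper)."""
    if spec_exists is None:
        spec_exists = os.path.exists(cdi_spec)
    commands = [
        # Pkg.installed(...): assumes dnf is the package manager (Fedora).
        ["dnf", "install", "-y", "golang-github-nvidia-container-toolkit"],
        # File.directory("/etc/cdi")
        ["mkdir", "-p", os.path.dirname(cdi_spec)],
    ]
    if not spec_exists:
        # Cmd.run(..., creates=...): only generate the CDI spec when missing.
        commands.append(["nvidia-ctk", "cdi", "generate", f"--output={cdi_spec}"])
    # Selinux.boolean(..., persist=True): -P makes the boolean persistent.
    commands.append(["setsebool", "-P", "container_use_devices", "on"])
    return commands


def apply(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False (needs root)."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(gpu_setup_commands(), dry_run=True)
```

Run as written, it only prints the commands; switching `dry_run` to `False` would execute them, which needs root.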

SELinux: whichever data / cache directory you mount into the container must be registered with semanage fcontext (and the attribute set on the directory) with type container_file_t and user system_u:

    context_present = Selinux.fcontext_policy_present(
        f"Set up SELinux contexts for share {share}",
        name=share + "(/.*)?",
        filetype="a",
        sel_user="system_u",
        sel_type="container_file_t",
        require=[c],
    ).requisite

    contexts.append(context_present)

    context_applied = Selinux.fcontext_policy_applied(
        f"Apply SELinux contexts for share {share}",
        name=share,
        recursive=True,
        onchanges=[context_present],
    ).requisite
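The two SELinux states above roughly correspond to one `semanage fcontext` rule plus a `restorecon` run. The sketch below only builds and prints those commands. The helper name `selinux_label_commands` and the example path `/srv/whisper-data` are hypothetical; the type, user, file type, and regex come from the snippet above.

```python
def selinux_label_commands(share):
    """Commands that label `share` for container use (hypothetical helper)."""
    return [
        # Register the rule: -a add, -f a = all file types,
        # -s SELinux user, -t SELinux type; the regex covers the whole tree.
        ["semanage", "fcontext", "-a", "-f", "a",
         "-s", "system_u", "-t", "container_file_t", f"{share}(/.*)?"],
        # Apply the registered context recursively to the existing files.
        ["restorecon", "-R", share],
    ]


if __name__ == "__main__":
    # Dry run only: /srv/whisper-data is a made-up example path.
    for cmd in selinux_label_commands("/srv/whisper-data"):
        print(" ".join(cmd))
```

Note that `semanage fcontext -a` reports an error if a rule for the same path already exists, so a real script would probably want to check for that first.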

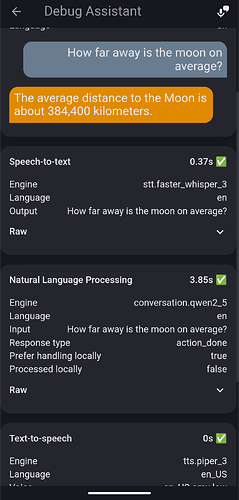

I meant to reply sooner, but yes, I got everything working on the Jetson, using Ollama as well. The GPU versions of Whisper/Piper are way faster but still not as accurate, although I need to play around with the Whisper models, as it’s currently using tiny-int8. Below are the debug times for a local voice command. Note: I noticed llama3.2 is slightly faster than qwen2.5, but I have been playing with different models.

To configure an external Whisper, just run Whisper however you prefer (independent server, Docker, VPS, external hosting, OpenAI API) and add it to your config .yaml. I recommend looking on Hugging Face for a model adapted to your main language and quantizing it to int8; you will get even more speed and quality.

I use an add-on via HACS: GitHub - neowisard/ha_whisper.cpp_stt: Home Assistant Whisper.cpp API SST integration

    stt:
      - platform: whisper_api_stt
        api_key: "a132b20c-96be-467f-a15a-ed08aed67345" # Optional
        server_url: "http://192.168.0.176:9000/v1/audio/transcriptions" # It can be OpenAI or a local API
        model: "whisper-1"
        language: "ru"
        temperature: "0.5"

I just start the whisper.cpp server, and in that case it has server_url: "http://192.168.0.176:9000/inference"

Or, for example, FastWhisper or another API.

Assistant config is :

Could you please share details about the model you are using for Russian? And what steps need to be taken to connect the model to whisper.cpp?

It works on Ubuntu 22 with NVIDIA GTX 1650 and Tesla P40 GPUs.

Model: large v3 + Russian, int8.

But now I am using large-turbo q5 (it is smaller and faster).

Startup with flash attention and Russian preferred:

/ai/whisper.cpp/build/bin/whisper-server -m /ai/models/whisper/ggml-large-v3-turbo-q5_0.bin --host 192.168.0.176 --port 9000 -l ru -fa -t 4 -sow -bo 2 -bs 4

Then use this in HA:

server_url: "http://192.168.0.176:9000/inference" # in HA
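Before pointing HA at that URL, it can help to confirm that the server answers on its own. Below is a minimal standard-library sketch that posts a WAV file as multipart/form-data. The form field names (`file`, `temperature`, `response_format`) are my assumption based on the whisper.cpp server example, and the function names and `sample.wav` are hypothetical.

```python
import json
import urllib.request
import uuid


def build_multipart(fields, file_name, file_bytes, boundary=None):
    """Encode form fields plus one file part as multipart/form-data."""
    boundary = boundary or uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(
            (
                f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                f"{value}\r\n"
            ).encode("utf-8")
        )
    parts.append(
        (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="file"; filename="{file_name}"\r\n'
            "Content-Type: audio/wav\r\n\r\n"
        ).encode("utf-8")
        + file_bytes
        + b"\r\n"
    )
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def transcribe(server_url, wav_path, temperature="0.5"):
    """POST a WAV file to the server and return the decoded JSON reply."""
    with open(wav_path, "rb") as fh:
        audio = fh.read()
    # Field names are assumptions, not confirmed by the thread.
    fields = {"temperature": temperature, "response_format": "json"}
    body, content_type = build_multipart(fields, "sample.wav", audio)
    request = urllib.request.Request(
        server_url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `transcribe("http://192.168.0.176:9000/inference", "sample.wav")` with a short recording should return the server's JSON reply if everything is wired up; the server may expect a specific WAV format, so treat a failure here as a hint rather than proof.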

My script to update from git and rebuild the executable, for a modern card. For the Tesla P40 you need to use -DCMAKE_CUDA_ARCHITECTURES=61 instead.

    # Collected build flags (as written, this variable is not referenced by the cmake calls below)
    CMAKE_ARGS="-DBUILD_DEPS=ON -DGGML_CUDA=on -DLLAMA_MAX_DEVICES=2 -DCMAKE_CUDA_ARCHITECTURES=75 -DLLAMA_CUDA_FORCE_MMQ=YES -DLLAMA_CUDA_KQUANTS_ITER=1 -DLLAMA_CUDA_FORCE_DMMV=YES -DGGML_F16C=OFF -DGGML_AVX512=OFF -DGGML_AVX2=OFF -DGGML_FMA=OFF -DBUILD_TYPE=cublas"

    # Fetch the latest sources and clear old build artifacts
    cd /ai/whisper.cpp
    git pull
    make clean

    # Configure with CUDA enabled, then build in Release mode with 8 jobs
    cmake -B build -DGGML_CUDA_FORCE_MMQ=1 -DCMAKE_CUDA_ARCHITECTURES=75 -DGGML_CUDA=1 -DGGML_F16C=OFF -DGGML_AVX512=OFF -DGGML_AVX2=OFF -DGGML_FMA=OFF -DGGML_CCACHE=OFF
    cmake --build build --config Release -j 8
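Since the only thing that changes between the two cards is the CUDA architecture value, the configure step could be parameterized. The sketch below just assembles the cmake argument list from the script above; `CUDA_ARCH` and `cmake_configure_args` are hypothetical names, and the table only holds the two values mentioned in this thread.

```python
# Compute capabilities mentioned in the thread; extend for other cards.
CUDA_ARCH = {
    "tesla-p40": "61",  # stated in the post above
    "gtx-1650": "75",   # Turing, the value used in the script above
}


def cmake_configure_args(gpu, build_dir="build"):
    """Return the cmake configure command for one GPU key (hypothetical helper)."""
    arch = CUDA_ARCH[gpu]
    return [
        "cmake", "-B", build_dir,
        "-DGGML_CUDA=1",
        "-DGGML_CUDA_FORCE_MMQ=1",
        f"-DCMAKE_CUDA_ARCHITECTURES={arch}",
        "-DGGML_F16C=OFF", "-DGGML_AVX512=OFF", "-DGGML_AVX2=OFF",
        "-DGGML_FMA=OFF", "-DGGML_CCACHE=OFF",
    ]


if __name__ == "__main__":
    # Print the command for the P40 instead of running it.
    print(" ".join(cmake_configure_args("tesla-p40")))
```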

Hey guys, I am having all kinds of trouble trying to set this up, just wondering if anyone had any pointers.

Docker ps:

    CONTAINER ID   IMAGE                            COMMAND                  CREATED       STATUS       PORTS                                           NAMES
    2989cf650d6e   rhasspy/wyoming-piper:latest     "bash /run.sh --voic…"   3 hours ago   Up 2 hours   0.0.0.0:10200->10200/tcp, :::10200->10200/tcp   piper
    2cd5b1887218   rhasspy/wyoming-whisper:latest   "bash /run.sh --mode…"   5 hours ago   Up 2 hours   0.0.0.0:10300->10300/tcp, :::10300->10300/tcp   whisp

Compose files:

    services:
      piper:
        image: rhasspy/wyoming-piper:latest
        container_name: piper
        restart: unless-stopped
        ports:
          - "10200:10200"
        volumes:
          - /mnt/cold_storage/piper/models:/models
        command: --voice en-gb-southern_english_female-low --debug

    services:
      whisper:
        image: rhasspy/wyoming-whisper:latest
        container_name: whisper
        restart: unless-stopped
        ports:
          - "10300:10300"
        volumes:
          - /mnt/cold_storage/whisper/audio:/data
        command: --model tiny-int8 --language en

I can’t even get a basic config going. The containers seem to be running and OK. I have enabled debug logs for both and I am not seeing anything concerning or even informative. This local test command spins forever while creating an audio WAV file:

curl -X POST http://127.0.0.1:10200/synthesize -H "Content-Type: application/json" -d '{"text":"Hello world"}' --output output.wav

Here is my nginx config if that helps:

    server {
        listen 443 ssl;
        server_name {FQDN};

        ssl_certificate /etc/nginx/certs/cert.pem;
        ssl_certificate_key /etc/nginx/certs/cert-key.pem;

        location /whisper {
            proxy_pass http://127.0.0.1:10300;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }

        # Piper proxy configuration
        location /piper {
            proxy_pass http://127.0.0.1:10200;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }

        # Default location (optional, for catching unmatched routes)
        location / {
            return 404; # Return 404 for any undefined paths
        }
    }

    server {
        listen 80;
        server_name {FQDN};
        return 301 https://$host$request_uri;
    }

Though since I seem to be having problems locally, I am not sure the problem is NGINX. TLS seems to be working and resolving. I’d love to be working on GPU pass-through with y’all, but I can’t even get a proof of concept working right now.

Edit: With a NGINX reverse proxy, would I put ‘https://{FQDN}/[whisper/piper]’ for the host, and 443 for the port?

You can even add Whisper from the UI, and it works flawlessly.

You’ll have to check your docker logs (or journalctl if running under systemd with podman) to see what the heck is going on. Also add -v to your curl command.

I do have debug logs enabled for the container:

    DEBUG:__main__:Namespace(piper='/usr/share/piper/piper', voice='en-gb-southern_english_female-low', uri='tcp://0.0.0.0:10200', data_dir=['/data'], download_dir='/data', speaker=None, noise_scale=None, length_scale=None, noise_w=None, auto_punctuation='.?!', samples_per_chunk=1024, max_piper_procs=1, update_voices=False, debug=True)
    DEBUG:wyoming_piper.download:Loading /usr/local/lib/python3.9/dist-packages/wyoming_piper/voices.json
    DEBUG:wyoming_piper.process:Starting process for: en_GB-southern_english_female-low (1/1)
    DEBUG:wyoming_piper.download:Checking /data/en_GB-southern_english_female-low.onnx
    DEBUG:wyoming_piper.download:Missing /data/en_GB-southern_english_female-low.onnx
    DEBUG:wyoming_piper.download:Checking /data/en_GB-southern_english_female-low.onnx.json
    DEBUG:wyoming_piper.download:Missing /data/en_GB-southern_english_female-low.onnx.json
    DEBUG:wyoming_piper.download:Downloading https://huggingface.co/rhasspy/piper-voices/resolve/v1.0.0/en/en_GB/southern_english_female/low/en_GB-southern_english_female-low.onnx.json to /data/en_GB-southern_english_female-low.onnx.json
    INFO:wyoming_piper.download:Downloaded /data/en_GB-southern_english_female-low.onnx.json (https://huggingface.co/rhasspy/piper-voices/resolve/v1.0.0/en/en_GB/southern_english_female/low/en_GB-southern_english_female-low.onnx.json)
    DEBUG:wyoming_piper.download:Downloading https://huggingface.co/rhasspy/piper-voices/resolve/v1.0.0/en/en_GB/southern_english_female/low/en_GB-southern_english_female-low.onnx to /data/en_GB-southern_english_female-low.onnx
    INFO:wyoming_piper.download:Downloaded /data/en_GB-southern_english_female-low.onnx (https://huggingface.co/rhasspy/piper-voices/resolve/v1.0.0/en/en_GB/southern_english_female/low/en_GB-southern_english_female-low.onnx)
    DEBUG:wyoming_piper.process:Starting piper process: /usr/share/piper/piper args=['--model', '/data/en_GB-southern_english_female-low.onnx', '--config', '/data/en_GB-southern_english_female-low.onnx.json', '--output_dir', '/tmp/tmpw3qxsc2t', '--json-input']
    INFO:__main__:Ready

Curl (with -v):

    curl -v -X POST http://127.0.0.1:10200/synthesize -H "Content-Type: application/json" -d '{"text":"Hello world"}' --output output.wav
    Note: Unnecessary use of -X or --request, POST is already inferred.
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
      0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0*   Trying 127.0.0.1:10200...
    * Connected to 127.0.0.1 (127.0.0.1) port 10200
    > POST /synthesize HTTP/1.1
    > Host: 127.0.0.1:10200
    > User-Agent: curl/8.5.0
    > Accept: */*
    > Content-Type: application/json
    > Content-Length: 22
    >
    } [22 bytes data]
    100    22    0     0    0    22      0      0 --:--:--  0:02:13 --:--:--

This WAV file seems like it would go on forever, though I guess I haven’t left it running for more than 10 minutes. When I inspect the file, it’s actually an HTML file with a gateway timeout.
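One thing that may be worth ruling out: the debug log shows Piper listening on uri='tcp://0.0.0.0:10200', and as far as I understand, the Wyoming protocol is newline-delimited JSON events over a raw TCP socket rather than an HTTP API, so an HTTP POST to /synthesize may simply never get an answer. The sketch below probes the port with a `describe` event instead. The framing used here (one JSON header line, optionally followed by `data_length` bytes of extra JSON and `payload_length` bytes of payload) is my assumption about the protocol, and the function names are hypothetical.

```python
import json
import socket


def encode_event(event_type, data=None):
    """Serialize one event as a single JSON line (assumed Wyoming framing)."""
    header = {"type": event_type}
    if data:
        header["data"] = data
    return (json.dumps(header) + "\n").encode("utf-8")


def read_event(stream):
    """Read one header line plus any extra data/payload bytes it announces."""
    line = stream.readline()
    if not line:
        return None  # connection closed without an answer
    header = json.loads(line)
    data_length = header.get("data_length") or 0
    if data_length:
        # Extra JSON data follows the header line; merge it into "data".
        extra = json.loads(stream.read(data_length))
        header["data"] = {**(header.get("data") or {}), **extra}
    payload_length = header.get("payload_length") or 0
    if payload_length:
        header["payload"] = stream.read(payload_length)
    return header


def describe(host="127.0.0.1", port=10200, timeout=5.0):
    """Send a describe event and return the first event the server sends back."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_event("describe"))
        with sock.makefile("rb") as stream:
            return read_event(stream)


if __name__ == "__main__":
    try:
        print(describe())
    except OSError as err:
        print(f"No answer on the Wyoming port: {err}")
```

If `describe()` returns an event of type `info`, the container is answering on its Wyoming port, and the hang is more likely down to the HTTP-style test than to Piper itself.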

Something is up with your Piper container. That’s not normal.

That’s about as far as I have gotten too.

My setup really isn’t anything special, just an ext4 ubuntu vm with docker. I’m no cloud architect but I am certainly no stranger to docker apps either. I am new to “voice stuff” though and it really doesn’t help that there’s not a ton of documentation on this stuff yet. If you don’t see anything wrong with my compose file, I have already recreated the container many times, what would you suggest as far as troubleshooting? Is it time to create an issue on github?

I would.

In my case I simply run the thing with podman run and host networking. No compose nonsense at all. It just works and it is remarkably maintenance free.

Thanks, I spent the whole evening yesterday trying to make my own Docker image following those steps: wyoming-faster-whisper-docker-cuda/Dockerfile at main · BramNH/wyoming-faster-whisper-docker-cuda and Wyoming whisper and piper on Proxmox with NVIDIA GPU PCI passthrough and CUDA support in Docker | The awesome garage (this solution would probably work, but it required the CUDA components to be installed on the host; I wanted everything other than the container runtime toolkit to ship with the image, so there would be no dependencies on the host).

In the end I managed to build my own image and then found lscr.io/linuxserver/faster-whisper:gpu

Do you experience slow initial recognition when the model is used for the first time/after a long time?

I made my own container too! Based on another container that is submitted as a PR for the whisper rhasspy repo.

I experience slow STT only if, for some reason, Whisper was not already loaded on the GPU. But “slow” here is still marginally faster than CPU STT with the model I chose.

I think whisper has an environment variable to preload the model, there’s also a TTS variable to ensure it stays loaded. This way you can speed up the process.

I have moved to using Speaches with wyoming_openai proxy so now I have that same instance of whisper and Piper/kokoro model available to both Home Assistant and Open WebUI

Can you elaborate?

You can use this fork and branch of wyoming-addons to get a GPU-based installation. Looks like it’s not ready for the default branch yet, but it still works just fine; I am using it in my installation.

@mateuszdrab might like this as well