I had an issue where downloading a backup from the web GUI timed out and stopped after 30 seconds. This plugin is a fantastic workaround to my problem and a much better solution overall! Donated €10.

Thanks a lot

Thank you for a great add-on - I use this on multiple setups.

I have run into some issues on my production instance though.

The add-on creates the backup just fine (I can see the backup locally), but it does not seem to upload the backup to my NAS.

The problem started around 2022-12-29, the date of the last backup that was uploaded to my NAS (I also back up to the cloud, so I’m not in a panic).

I can see a parse error on the log relating to ‘line 1 column 5’. I suspect it relates to the filename containing the date and writing a ‘:’ in the timestamp, but I don’t know how to troubleshoot it further.

I have the config and log below.

I hope you can help.

config:

host: 192.168.0.5

share: homeassistant

target_dir: /backups/production

username: 'username'

password: 'superseecretpassword'

keep_local: "2"

keep_remote: "10"

trigger_time: "10:30"

trigger_days:

- Mon

- Tue

- Wed

- Thu

- Fri

- Sat

- Sun

exclude_addons: []

exclude_folders: []

backup_name: "{type} Backup {version} {date}"

Log:

s6-rc: info: service s6rc-oneshot-runner: starting

s6-rc: info: service s6rc-oneshot-runner successfully started

s6-rc: info: service fix-attrs: starting

s6-rc: info: service fix-attrs successfully started

s6-rc: info: service legacy-cont-init: starting

cont-init: info: running /etc/cont-init.d/00-banner.sh

-----------------------------------------------------------

Add-on: Samba Backup

Create backups and store them on a Samba share

-----------------------------------------------------------

Add-on version: 5.2.0

You are running the latest version of this add-on.

System: Home Assistant OS 9.5 (amd64 / qemux86-64)

Home Assistant Core: 2023.2.0

Home Assistant Supervisor: 2023.01.1

-----------------------------------------------------------

Please, share the above information when looking for help

or support in, e.g., GitHub, forums or the Discord chat.

-----------------------------------------------------------

cont-init: info: /etc/cont-init.d/00-banner.sh exited 0

cont-init: info: running /etc/cont-init.d/01-log-level.sh

cont-init: info: /etc/cont-init.d/01-log-level.sh exited 0

s6-rc: info: service legacy-cont-init successfully started

s6-rc: info: service legacy-services: starting

s6-rc: info: service legacy-services successfully started

[23-02-07 10:23:19] INFO: ---------------------------------------------------

[23-02-07 10:23:19] INFO: Host/Share: 192.168.0.5/homeassistant

[23-02-07 10:23:19] INFO: Target directory: /backups/production

[23-02-07 10:23:19] INFO: Keep local/remote: 2/10

[23-02-07 10:23:19] INFO: Trigger time: 10:30

[23-02-07 10:23:19] INFO: Trigger days: Mon Tue Wed Thu Fri Sat Sun

[23-02-07 10:23:19] INFO: ---------------------------------------------------

[23-02-07 10:23:20] INFO: Samba Backup started successfully

[23-02-07 10:30:21] INFO: Backup running ...

[23-02-07 10:30:21] INFO: Creating backup "Full Backup 2023.2.0 2023-02-07 10:30"

parse error: Invalid numeric literal at line 1, column 5

[23-02-07 13:30:41] INFO: Backup finished
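A note on the "parse error" line: its wording matches what jq prints when it is handed text that is not JSON. So one plausible reading (an assumption, nothing in this thread confirms it) is that the add-on got a plain-text error back instead of a JSON reply, rather than choking on the ‘:’ in the backup name. The Python sketch below only illustrates that general failure mode. The helper try_parse and the non-JSON sample string are made up for the example and are not taken from the add-on.

```python
import json

def try_parse(reply: str):
    """Return (parsed, None) for valid JSON, or (None, error text) otherwise."""
    try:
        return json.loads(reply), None
    except json.JSONDecodeError as exc:
        return None, f"line {exc.lineno}, column {exc.colno}: {exc.msg}"

# A ':' inside a JSON string value is harmless (name copied from the log above).
good_reply = '{"name": "Full Backup 2023.2.0 2023-02-07 10:30"}'
# Hypothetical plain-text reply, e.g. what a timed-out call might return.
bad_reply = "Post request timed out"

parsed, err = try_parse(good_reply)
print(parsed["name"], err)

parsed, err = try_parse(bad_reply)
print(parsed, err)
```

If that reading is right, the backup name is not the culprit, and the interesting question is what the non-JSON reply actually said.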

I have exactly the same config with one difference, and it is running smoothly. Notice the missing leading / in my config.

target_dir: backups/production

Perhaps that is the issue?

From the doc:

Option: target_dir

The target directory on the Samba share in which the backups will be stored. If not specified the backups will be stored in the root directory.

Note: The directory must exist and write permissions must be granted.

Hi @Janneman

Thank you for looking into this.

I have a staging setup with the exact same backup config (target_dir: /backups/staging instead), and this is working fine.

The user for the backup operation has r/w permissions to the directory.

The only difference I can see is the filesize of the backup, which is pretty large on the production setup - approx. 13GB (I know, I’m a data hoarder).

The same error with my setup as @carlhye reports:

s6-rc: info: service s6rc-oneshot-runner: starting

s6-rc: info: service s6rc-oneshot-runner successfully started

s6-rc: info: service fix-attrs: starting

s6-rc: info: service fix-attrs successfully started

s6-rc: info: service legacy-cont-init: starting

cont-init: info: running /etc/cont-init.d/00-banner.sh

-----------------------------------------------------------

Add-on: Samba Backup

Create backups and store them on a Samba share

-----------------------------------------------------------

Add-on version: 5.2.0

You are running the latest version of this add-on.

System: Home Assistant OS 9.5 (aarch64 / raspberrypi4-64)

Home Assistant Core: 2023.2.5

Home Assistant Supervisor: 2023.01.1

-----------------------------------------------------------

Please, share the above information when looking for help

or support in, e.g., GitHub, forums or the Discord chat.

-----------------------------------------------------------

cont-init: info: /etc/cont-init.d/00-banner.sh exited 0

cont-init: info: running /etc/cont-init.d/01-log-level.sh

Log level is set to DEBUG

cont-init: info: /etc/cont-init.d/01-log-level.sh exited 0

s6-rc: info: service legacy-cont-init successfully started

s6-rc: info: service legacy-services: starting

s6-rc: info: service legacy-services successfully started

[23-02-20 07:36:52] INFO: ---------------------------------------------------

[23-02-20 07:36:52] INFO: Host/Share: 192.168.2.199/Backup

[23-02-20 07:36:52] INFO: Target directory: Homeassistant

[23-02-20 07:36:52] INFO: Keep local/remote: 3/3

[23-02-20 07:36:52] INFO: Trigger time: 07:40

[23-02-20 07:36:52] INFO: Trigger days: Mon Tue Wed Thu Fri Sat Sun

[23-02-20 07:36:52] INFO: ---------------------------------------------------

[23-02-20 07:36:53] DEBUG: Backups local/remote: 3/0

[23-02-20 07:36:53] DEBUG: Total backups succeeded/failed: 0/14

[23-02-20 07:36:53] DEBUG: Last backup: never

[23-02-20 07:36:53] DEBUG: putting file samba-tmp123 as \Homeassistant\samba-tmp123 (0.0 kb/s) (average 0.0 kb/s)

[23-02-20 07:36:54] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-20 07:36:54] DEBUG: API Status: 200

[23-02-20 07:36:54] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"IDLE","attributes":{"friendly_name":"Samba Backup","backups_local":"4","backups_remote":"0","total_backups_succeeded":"0","total_backups_failed":"14","last_backup":"never"},"last_changed":"2023-02-20T01:21:34.035736+00:00","last_updated":"2023-02-20T06:36:54.535604+00:00","context":{"id":"01GSPRM8C7QCREY9CNAPJA97K9","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

[23-02-20 07:36:54] INFO: Samba Backup started successfully

[23-02-20 07:36:54] DEBUG: Starting stdin listener ...

[23-02-20 07:36:54] DEBUG: Starting main loop ...

[23-02-20 07:40:54] INFO: Backup running ...

[23-02-20 07:40:55] DEBUG: Backups local/remote: 4/0

[23-02-20 07:40:55] DEBUG: Total backups succeeded/failed: 0/14

[23-02-20 07:40:55] DEBUG: Last backup: never

[23-02-20 07:40:55] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-20 07:40:55] DEBUG: API Status: 200

[23-02-20 07:40:55] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"RUNNING","attributes":{"friendly_name":"Samba Backup","backups_local":"4","backups_remote":"0","total_backups_succeeded":"0","total_backups_failed":"14","last_backup":"never"},"last_changed":"2023-02-20T06:40:55.515729+00:00","last_updated":"2023-02-20T06:40:55.515729+00:00","context":{"id":"01GSPRVKPV51M5QWAGNAQSM5ZJ","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

[23-02-20 07:40:55] INFO: Creating backup "Samba Backup 2023-02-20 07:40"

parse error: Invalid numeric literal at line 1, column 5

[23-02-20 10:40:56] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-20 10:40:56] DEBUG: API Status: 200

[23-02-20 10:40:56] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"FAILED","attributes":{"friendly_name":"Samba Backup","backups_local":"4","backups_remote":"0","total_backups_succeeded":"0","total_backups_failed":"15","last_backup":"never"},"last_changed":"2023-02-20T09:40:56.293271+00:00","last_updated":"2023-02-20T09:40:56.293271+00:00","context":{"id":"01GSQ357B5TCFW9TAGB4D4MT3P","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

[23-02-20 10:41:06] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-20 10:41:06] DEBUG: API Status: 200

[23-02-20 10:41:06] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"IDLE","attributes":{"friendly_name":"Samba Backup","backups_local":"4","backups_remote":"0","total_backups_succeeded":"0","total_backups_failed":"15","last_backup":"never"},"last_changed":"2023-02-20T09:41:06.508840+00:00","last_updated":"2023-02-20T09:41:06.508840+00:00","context":{"id":"01GSQ35HACJ3S3J3G65ZAWS430","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

[23-02-20 10:41:06] INFO: Backup finished

Any idea?

I have same error (parse error: Invalid numeric literal at line 1, column 5) as above.

What I just noticed is that @carlhye and @bulli, as well as me, are all getting this error exactly 3 hours after the backup was started. Maybe the system grew too big and we are hitting some timeout because no one expected a backup to take so long?
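The 3-hour gap can be checked straight from the timestamps in the two logs posted above. This is just a small Python sketch for doing the arithmetic. The log lines are copied from this thread, while the helper name log_time and the dictionary keys are my own.

```python
from datetime import datetime

LOG_TIME_FORMAT = "%y-%m-%d %H:%M:%S"  # e.g. "23-02-07 10:30:21"

def log_time(line: str) -> datetime:
    """Parse the bracketed timestamp at the start of an add-on log line."""
    stamp = line[line.index("[") + 1 : line.index("]")]
    return datetime.strptime(stamp, LOG_TIME_FORMAT)

# (backup started, first log entry after the parse error), copied from the logs.
runs = {
    "first log": (
        '[23-02-07 10:30:21] INFO: Creating backup "Full Backup 2023.2.0 2023-02-07 10:30"',
        "[23-02-07 13:30:41] INFO: Backup finished",
    ),
    "second log": (
        '[23-02-20 07:40:55] INFO: Creating backup "Samba Backup 2023-02-20 07:40"',
        "[23-02-20 10:40:56] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup",
    ),
}

for label, (start, end) in runs.items():
    print(label, log_time(end) - log_time(start))
```

Both runs come out at three hours plus a few seconds, which fits a fixed timeout better than a coincidence.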

Seems that @Gutek is right. I have put core_mariadb in exclude-list and now backup succeeds:

[23-02-23 18:45:22] INFO: Backup running ...

[23-02-23 18:45:23] DEBUG: Backups local/remote: 4/0

[23-02-23 18:45:23] DEBUG: Total backups succeeded/failed: 0/19

[23-02-23 18:45:23] DEBUG: Last backup: never

[23-02-23 18:45:23] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-23 18:45:23] DEBUG: API Status: 200

[23-02-23 18:45:23] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"RUNNING","attributes":{"friendly_name":"Samba Backup","backups_local":"4","backups_remote":"0","total_backups_succeeded":"0","total_backups_failed":"19","last_backup":"never"},"last_changed":"2023-02-23T17:45:23.460422+00:00","last_updated":"2023-02-23T17:45:23.460422+00:00","context":{"id":"01GSZP2E844NDN6YAAKQKKWPZ0","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

[23-02-23 18:45:23] INFO: Creating backup "Samba Backup 2023-02-23 18:45"

[23-02-23 18:47:34] INFO: Copying backup 7f7ec9d5 (Samba_Backup_2023_02_23_18_45.tar) to share

[23-02-23 18:47:35] DEBUG: putting file 7f7ec9d5.tar as \Homeassistant\Samba_Backup_2023_02_23_18_45.tar (79457.5 kb/s) (average 79457.5 kb/s)

[23-02-23 18:47:36] DEBUG: List of local backups:

{"date":"2023-02-23T17:45:23.675679+00:00","slug":"7f7ec9d5","name":"Samba Backup 2023-02-23 18:45"}

{"date":"2023-02-23T06:40:34.409503+00:00","slug":"5e6b356f","name":"Samba Backup 2023-02-23 07:40"}

{"date":"2023-02-22T06:40:36.657535+00:00","slug":"68b32141","name":"Samba Backup 2023-02-22 07:40"}

{"date":"2023-02-21T06:40:32.564216+00:00","slug":"118da3a6","name":"Samba Backup 2023-02-21 07:40"}

{"date":"2022-12-23T01:31:17.173395+00:00","slug":"211dc0eb","name":"Full Backup 2022-12-23 02:31:16"}

[23-02-23 18:47:36] INFO: Deleting 118da3a6 (Samba Backup 2023-02-21 07:40) local

[23-02-23 18:47:36] DEBUG: Command completed successfully.

[23-02-23 18:47:36] INFO: Deleting 211dc0eb (Full Backup 2022-12-23 02:31:16) local

[23-02-23 18:47:36] DEBUG: Command completed successfully.

[23-02-23 18:47:36] DEBUG: List of remote backups:

2023-02-23 19:45 Samba_Backup_2023_02_23_18_45.tar

[23-02-23 18:47:37] DEBUG: Posting sensor data to API at /core/api/states/sensor.samba_backup

[23-02-23 18:47:37] DEBUG: API Status: 200

[23-02-23 18:47:37] DEBUG: API Response: {"entity_id":"sensor.samba_backup","state":"SUCCEEDED","attributes":{"friendly_name":"Samba Backup","backups_local":"3","backups_remote":"1","total_backups_succeeded":"1","total_backups_failed":"19","last_backup":"2023-02-23 18:47"},"last_changed":"2023-02-23T17:47:37.543805+00:00","last_updated":"2023-02-23T17:47:37.543805+00:00","context":{"id":"01GSZP6H67QFT6DNK74TCZW23H","parent_id":null,"user_id":"69afdaa0c93c4de9a827e2cfe272548a"}}

Seems that way @bulli

I’m using the built-in SQLite DB, and it’s performing OK, despite the large dataset.

I think the add-on should be able to handle large backups though - unless there’s a specific technical reason not to.

The Google cloud back-up add-on works fine, but it’s cloud only, and I would like to have local back-up.

@thomasmauerer already has ideas for solving the issue, as I just saw: Replace CLI with direct API calls · Issue #79 · thomasmauerer/hassio-addons · GitHub

Thanks for this addon.

Sounds great!

I hope for a solution soon.

While we’re on the subject of ideas for further development: generational backups would be a nice addition as well.

This is from the Google Cloud add-on - but I’d really like to have as much as possible in my own private cloud.

Thank you for a great add-on @thomasmauerer - it’s super to have local backup!

Hi. I’m getting this error:

Host/Share: 192.168.33.251/HomeAssistant

[23-02-28 09:58:15] INFO: Target directory: backups

[23-02-28 09:58:15] INFO: Keep local/remote: all/all

[23-02-28 09:58:15] INFO: Trigger time: 00:00

[23-02-28 09:58:15] INFO: Trigger days: Mon Tue Wed Thu Fri Sat Sun

[23-02-28 09:58:15] INFO: ---------------------------------------------------

[23-02-28 09:58:18] WARNING: cd ackups\: NT_STATUS_OBJECT_NAME_NOT_FOUND

[23-02-28 09:58:18] FATAL: Target directory does not exist. Please check your config.

The config matches what I connect to via macOS. What do you think the weird character in the directory name is about?

Why are you using a backslash? Normally it should be a slash.

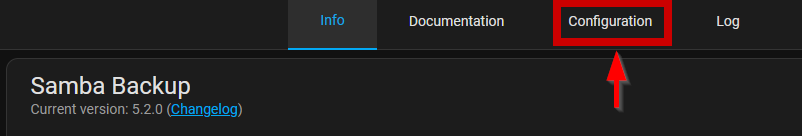
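On the odd character in "cd ackups\": a plausible explanation (my assumption, not confirmed anywhere in this thread) is that the path was printed as \backups\ and the \b was expanded into a backspace control character, which swallows the visible "b". If so, the character is only a display quirk in the log, and the real message is simply that the directory was not found on the share. A small Python sketch of the effect, with both helper names invented for the illustration:

```python
def expand_backspace_escape(text: str) -> str:
    """Replace the two characters backslash + 'b' with a real backspace."""
    return text.replace("\\b", "\b")

def visible(text: str) -> str:
    """Drop non-printable characters, roughly what a log viewer would show."""
    return "".join(ch for ch in text if ch.isprintable())

# The command as it was presumably meant: cd \backups\
message = "cd \\backups\\"

shown = expand_backspace_escape(message)
print(repr(shown))     # the backspace shows up as \x08 in the repr
print(visible(shown))  # matches the text in the WARNING line
```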

OK. I am brand new to Haas. Downloaded the official Samba Share and there was a tab for configuration. I downloaded your Add-on by adding your repository. But there is no tab to config. How is everyone doing all these configurations? If it matters, I’m on the HA OS, running on ODroid N2+.

Basically, you go to your Home Assistant (your.ip.of.homeassistant:8123/hassio/store), where you should see the add-on.

Then go to “Configuration”

There you can add the SMB-Share/Fileserver Credentials you want to copy to.

Just out of curiosity, because you mentioned the Samba add-on, which is irrelevant for this add-on:

what do you want to accomplish (basically what is your intended plan)?

If you want to use this add-on to copy the backup to your Home Assistant itself: this is NOT the intended way. (It might be technically possible, but in my opinion it is technical overkill and totally overengineered.)

This add-on is mainly for copying your backup to other PCs/servers via the SMB protocol.

If you want to make “normal” backups and have them available via SMB (with your Samba-Addon), just use the following automation/blueprint:

tl;dr:

Do you want to accomplish this:

Or do you really want to accomplish this:

When I install the add-on, under the configuration tab it says: This add-on does not expose configuration for you to mess with…

When I hit start it says: Missing required option ‘host’ in Samba Backup (15d21743_samba_backup)

And if I go edit it says: This add-on does not expose configuration for you to mess with…

I am going to be migrating to MariaDB + InfluxDB in the coming weeks. Will this backup program take clean (i.e. NOT merely crash-consistent) backups of these databases? Meaning, will it flush buffers, finish writes, etc. before a snapshot/backup is taken, to ensure it will restore correctly?

I followed the instructions for getting the Samba sensor to start on a Home Assistant restart via Blueprint. It worked maybe 1-2x, then it fails after that. HA 2023.3.6. Any ideas?

I am struggling to get this add-on to start and not stop itself.

Here are the configuration details I am trying.

host: 192.168.1.100

share: HASS

target_dir: /media/backup/HASS

username: *****

password: *****

keep_local: all

keep_remote: "28"

trigger_time: "03:30"

trigger_days:

- Mon

- Tue

- Wed

- Thu

- Fri

- Sat

- Sun

exclude_addons: []

exclude_folders: []

backup_name: Local {type} Backup {year}-{month}-{day}

workgroup: WORKGROUP

Here are the details of my samba share from webmin. My samba share is just a USB stick plugged into the mac mini I am using to run Debian Bullseye/Home Assistant.

Unfortunately, the add-on keeps getting stopped with the following error.

Any help would be greatly appreciated. Thanks in advance.

Comparing with my own config, try the following change: remove the leading “/” and the trailing “HASS” from the target dir, since the share is already HASS.

target_dir: media/backup/
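The same clean-up can be written as a small Python sketch. To be clear, suggest_target_dir is a hypothetical helper for checking a config by hand, not something the add-on provides, and it encodes only the advice above: no leading slash, and no trailing component that just repeats the share name. Whether the result is the right path still depends on how the share is defined on the server.

```python
def suggest_target_dir(share: str, target_dir: str) -> str:
    """Hypothetical helper: rewrite target_dir as a path relative to the share."""
    # Accept either slash style and drop empty components (leading/trailing slashes).
    parts = [p for p in target_dir.replace("\\", "/").split("/") if p]
    # Drop a trailing component that merely repeats the share name.
    if parts and parts[-1].lower() == share.lower():
        parts.pop()
    return "/".join(parts)

print(suggest_target_dir("HASS", "/media/backup/HASS"))
print(suggest_target_dir("homeassistant", "/backups/production"))
```

An empty result would mean the backups go to the root of the share, which per the docs quoted earlier is also what happens when target_dir is not specified.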