FWIW, I recently got into Home Assistant and I was happy to see there’s a Bayesian thingy. I’ll be playing with it soon.

I was using the bayesian sensor for over 3 years for detecting presence at home.

I can’t get it working anymore as before 2022.10, so I created a custom_component for it.

If you wan’t to use it, add GitHub - gieljnssns/bayesian: The bayesian binary sensor for Home-Assistant to HACS and search for bayesian.

It should work again as before…

I am working on a pull request to enable exactly this functionality using the numeric state entity:

Accept more than 1 state for numeric entities in Bayesian by HarvsG · Pull Request #80268 · home-assistant/core · GitHub

This is a great sensor. I use it for presence in rooms and I’m thinking about using it for presence in the house. I see all the changes that have been made and they are great. I’m loving it.

Hi,

100% agree with you. I’m using Bayesian for things like “should the home heat?”, “should it cool?”, “home sleeps?”, presence etc. Yes, like mentioned somewhere here, we have a lot of sensors … but … Making decision if to heat or cool based only on ext and int temperatures is not as great (and sophisticated) as using it with Bayesian together with some other data like season, month, presence etc. It is like kind of AI  and works much better then simple if > then.

and works much better then simple if > then.

I vote for it - for me it is an essential part of any automation.

M.

Hi,

so, my remarks from top of the head to think about …

-

Rename “Bayesian” to something like “Probability” as I mentioned here: Bayesian rename

-

Add some linear observation - at least templatable threshold. The higher/lower result the higher/lower threshold of the whole sensor or higher/lower given_true/given_false values.

Nothing else is crucial to me, I covered my needs so far

Generally I wish more integrations like this, more “soft” decision HA capabilities, like kind of machine learning, easy access to history values, AI etc.

Thanks, have a nice day,

M.

Can you expand on this, I am not quite sure what you mean? Allow the probability threshold to be variable/template-able?

If so can you explain in what situations you would want this?

Hi @HarvsG ,

OK.

I mean to control the probability sensor state not only by binary input states, but also by int/float values:

- The more is the Sun set the higher probability is turning lights on while observing also decreasing indoor illuminance.

- The faster I’m approaching home (different speed by car, by walk, etc.) the higher probability is to start heating to have comfort at the right time (maybe a bit overkill example).

- The farther are home members from home, the higher probability is to switch my Vacation state sensor on and do relevant actions.

- The more is night coming up the higher probability is that the home goes to sleep.

So would be nice to integrate also non-binary observations. Or at least have an option to control values which are currently fixed:

I can see useful when we could control the “probability_threshold” programmatically. So instead of using just one “hard” float value in sensor definition, would be nice to have an option to use a template for this value.

probability_threshold: "{{ template returning the value }}"

F.e. the probability threshold of heating switch could slightly decrease with decreasing outdoor and indoor temperatures. While one feels good when inside is 19°C and outside is 25°C, different feeling is when inside is 19°C and outside -5°C.

The same is with “prob_given_true” and “prob_given_false” properties to control particular observed sensors.

Best,

M.

This might be a neat idea. I could see some logic behind it.

What you are proposing is similar to what I’m trying to achieve in Bayesian - Accept more than 1 sate for numeric entities by HarvsG · Pull Request #80268 · home-assistant/core · GitHub

Except in this case it is organised into buckets rather than a continuous function.

If I understood correctly negative observations are taken into account to determine the propability since 2022.10? And the prop_given_true when observation is False is 1 minus prop_given_true when observation is true?

In that case I have some doubts if this is correct. Of course statistically this make sense but in the physical world this can cause some faults.

I noticed this with my Bayesian sleeping sensor which I use. To predict if everyone in my house is sleeping one of the observations I use is a template to check the time delta since the last time a motion sensor was updated. When this delta is less than 30min it is very unlikely that everyone is sleeping (Prop_given_true= 0.05). But when the delta becomes 31 min the observation becomes False and results in a very high propability that everyone is sleeping which is not correct.

Another generic example:

If I see a man with an umbrella walking on the street it is very likely that it is raining, P is for instance 0.99. But if I don’t see that man with an umbrella I can’t say that the probability is very low that it is raining because not every man is carrying an umbrella when it’s raining. Of course it says something but definitely not 1-0.99 = 0.01

Maybe it is an idea that the user can set all 4 propabilities (true positive, true negative, false positive and false negative)

If I understood correctly negative observations are taken into account to determine the propability since 2022.10?

This is correct

And the prop_given_true when observation is False is 1 minus prop_given_true when observation is true?

This is also correct, prob_given_true is the 'probability of {entity state} == {to_state} given {bayesain sensor = ‘on’} i.e ‘probability of motion in last 30mins given everyone sleeping’. This is different from ‘probability of everyone sleeping given motion in the last 30mins’ (This seems to be the number you are using). This may seem like a subtle and irrelevant distinction but is actually what defines Bayesian statistics and gives it its magic sauce.

As such it it is a mathematical truth (for binary observations) that the probability of observation given outcome + the probability of not observation given outcome = 1. Because for any outcome the only the observation can only be observed or not observed.

For completeness prob_given_false is the 'probability of {entity state} == {to_state} given {bayesain sensor = ‘off’}

Both the instances you describe can be corrected by configuring the probabilities correctly.

binary_sensor:

- platform: bayesian

prior: 0.35

name: "everyone_sleeping"

observations:

- entity_id: binary_sensor.montion_in_las_30s

prob_given_true: 0.05 # When everyone is sleeping I am very unlikely to detect motion, so when motion is detected it will have a significant impact on the probability

prob_given_false: 0.5 # When not everyone is sleeping I expect to detect motion half the time. This means that the absence of motion won't make much difference to the probability, as you desire.

platform: "state"

to_state: "on"

When this delta is less than 30min it is very unlikely that everyone is sleeping (Prop_given_true= 0.05)

As described above here you are confusing the probability of the data given the outcome with the probability of the outcome given the data.

If I see a man with an umbrella walking on the street it is very likely that it is raining, P is for instance 0.99. But if I don’t see that man with an umbrella I can’t say that the probability is very low that it is raining because not every man is carrying an umbrella when it’s raining.

You are exactly right, however we are not interested in ‘If I see a man with an umbrella walking on the street it is very likely that it is raining’ we are interested in ‘If it is raining, some people will have an umbrella’ and ‘If it is not raining I am very unlikely to see an umbrella’.

In bayesian stats it is the chance of the observation/entitiy-state given the event/situation/context/bayesian-sensor. AKA the chance of an umbrella given it is raining. From that information, and a prior, we work out the probability it is raining.

prob_umbrella_given_raining = 0.6 # When it is raining a slight majority (60%) of people will have umbrellas

prob_umbrella_given_not_raining = 0.01 # When it is not raining it would be very odd 1% to see an umberalla

You can read more here: https://www.home-assistant.io/integrations/bayesian/#theory

Of course it says something but definitely not 1-0.99 = 0.01

This is exactly one of the behaviours that was fixed in 2022.10 and why prob_given_false is now required

Maybe it is an idea that the user can set all 4 propabilities (true positive, true negative, false positive and false negative)

These are taken into account when prob_given_true and prob_given_false are set correctly. In fact the ‘in_bed’ example in the official documentation walks through a motion sensor that gives a false negative half the time someone is in bed.

Thanks for your extensive reply. appreciate it  I have indeed misinterpreted the propabilities of the data. I am going to rework my sensors!

I have indeed misinterpreted the propabilities of the data. I am going to rework my sensors!

Yes, I saw that. It is actually also binary, even in buckets. It is easy to realize it by template returning bool if the value is in specified range, which I actually do in some cases already. I’m talking about analog (linear, exponential, …) possibility of control of the values.

Ok, do do that the use would need to create some probability function or distribution over the possible range of values. I’m not entirely sure how that would be achieved.

If you can think of (1) some reasonably common use cases where this would have a definite benefit over buckets (2) could be done in a way that a user without a background in stats could do and (3) how to implement this in code I might consider it.

But I have to say that implementing continuous probability distributions in bayesian functions stretch the limits of my understanding of Bayesian stats.

In Bayesian - Accept more than 1 sate for numeric entities by HarvsG · Pull Request #80268 · home-assistant/core · GitHub (not yet implemented) there are a series of buckets. The prior will be updated by whichever bucket the observation is in, if there are no observations then the prior won’t be updated. So this isn’t binary.

From my perspective, Mr. Bayes, in his times, didn’t expect his theorem be used continuously for automations, calculating new results in milliseconds  My proposal is actually like that - using his “static” formula with new input values whenever they change, even slightly, even every milli. It is not breaking his theorem IMHO.

My proposal is actually like that - using his “static” formula with new input values whenever they change, even slightly, even every milli. It is not breaking his theorem IMHO.

The bucket solution is evaluating some range to true/false if I understand it well. If I’m out of that range, it is not observed at all. If I’d like to evaluate f.e. range of outdoor temperature for heating/cooling (in my country it can be from -20°C to +35°C), I’d expect linear probability change. Of course it can be realized by a lot of other ways, but I see this clean and simple. Just using a template for the threshold which returns a float between f.e. 0.3 and 0.8. Let the template output on the HA user, just accept template for threshold, given_true, given_false. Out of the range values are exceptions raising an error/unknown state.

Anyway, it is definitely up to you, you are the “pro” and author

P.S. just an example of one of my Bayes sensor using “buckets” (beside other observed sensors):

- platform: template

value_template: "{{ now().time() <= strptime(['0845', '0655'][states('binary_sensor.workday_today') | bool(true)], '%H%M').time() }}"

prob_given_true: 0.960

prob_given_false: 0.050

- platform: template

value_template: "{{ strptime(['0845', '0655'][states('binary_sensor.workday_today') | bool(true)], '%H%M').time() < now().time() <= strptime('2050', '%H%M').time() }}"

prob_given_true: 0.250

prob_given_false: 0.700

- platform: template

value_template: "{{ strptime('2050', '%H%M').time() < now().time() <= strptime(['2300', '2150'][states('binary_sensor.workday_tomorrow') | bool(true)], '%H%M').time() }}"

prob_given_true: 0.600

prob_given_false: 0.250

- platform: template

value_template: "{{ strptime(['2300', '2150'][states('binary_sensor.workday_tomorrow') | bool(true)], '%H%M').time() < now().time() }}"

prob_given_true: 0.860

prob_given_false: 0.120

This I’d like to avoid by just adjusting the threshold value.

I was inspired by @HarvsG spreadsheet

and created a python notebook that helps jumpstart my template automatically.

Problem

My cats set my motion sensors off.

Well not all of them. A handful of my motion sensors can be placed around so that it only picks up human beings.

The trouble is,there are places that I want motion sensors not to look like eyesores (the kitchen) or creepy (in a bathroom). Low placement under cabinets is great for discrete placement, but picks up my wandering kittens.

Solution

I intuitively set up a Bayesian sensor that:

- watches for well placed motion in adjacent rooms to the kitchen

- watches for light patterns that I believe indicate humans and not felines

It worked ok but needed some tuning. @HarvsG spreadsheet is a lifesaver but gathering the hours per day was a chore.

Over Engineering

So I wrote this python notebook which will spit out a starting point for your own bayes sensor.

Here are the steps:

-

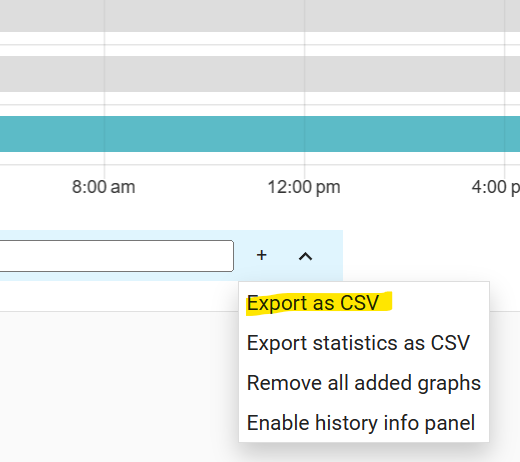

Make sure you have History Explorer installed

-

Create a permanent or temporary dashboard with all of the sensors you might believe could help you. Something like this (yellow highlights are my cats in the kitchen)

-

Add one more thing to that dashboard: a “truth sensor” (or manually edit: see later) that reflect events (like “real” motion vs “kitty” motion)

-

Export 4-5 days of statistics in the History Explorer using its “Export as CSV”

-

If you don’t have a “truth sensor”, edit that csv in some tool and add a new fake named sensor, manually putting in on/off events that mark the start and end of reality. For example, I copied my motion sensor and deleted rows corresponding to “kitty motion”.

-

Save the Jupyter notebook code at the end here with the file extension

.ipynb. -

Upload this notebook to the free Jupyter Lab.

-

Upload the csv you exported

-

edit the “CONFIGURATION” section at the top

- set your csv filename

- set the name of your “truth” column in the csv

- set how many correlated features (sensor + sensor state) combinations that you would like this to discover

-

Run the notebook by clicking on the triple arrow in the top toolbar

-

See example output at the bottom

The future

It would be great if I could turn this into a plugin (it would require numpy, and some bayesian libraries like scikit running on the homeassistant computing power) which would let you:

- select some schedul representing ground truth for your goal (ie. I was asleep these hours, or this is REAL motion)

- select a list of sensors you think my be interesting

- click ‘go’ and have it give you a suggestion.

I am not an expert in this ecosystem so this is all I’ll have for now.

Hopefully this is useful to someone.

Example Output (fully automated)

Now this output needs some hand tweaks. For example, while the kitchen motion “on” is a great indicator of motion, there is no need to ALSO have the kitchen motion “off” observation, so I’ll just delete that.

# binary_sensor.kitchen_occupancy is on 23.93% of the time

- platform: bayesian

name: Probably binary_sensor.kitchen_occupancy # CHANGE ME

prior: 0.24

probability_threshold: 0.8 # EXPERIMENT WITH ME

observations:

# When 'binary_sensor.den_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.den_occupancy

to_state: on

prob_given_true: 0.30 # (1.7 hours per day)

prob_given_false: 0.18 # (3.3 hours per day)

# When 'binary_sensor.front_yard_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.front_yard_occupancy

to_state: on

prob_given_true: 0.20 # (1.1 hours per day)

prob_given_false: 0.06 # (1.1 hours per day)

# When 'binary_sensor.hall_occupancy' is 'off' ...

- platform: state

entity_id: binary_sensor.hall_occupancy

to_state: off

prob_given_true: 0.59 # (3.4 hours per day)

prob_given_false: 0.93 # (16.9 hours per day)

# When 'binary_sensor.hall_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.hall_occupancy

to_state: on

prob_given_true: 0.41 # (2.4 hours per day)

prob_given_false: 0.07 # (1.3 hours per day)

# When 'binary_sensor.kitchen_occupancy' is 'off' ...

- platform: state

entity_id: binary_sensor.kitchen_occupancy

to_state: off

prob_given_true: 0.01 # (0.0 hours per day)

prob_given_false: 0.99 # (18.3 hours per day)

# When 'binary_sensor.kitchen_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.kitchen_occupancy

to_state: on

prob_given_true: 0.99 # (5.7 hours per day)

prob_given_false: 0.01 # (0.0 hours per day)

# When 'binary_sensor.laundry_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.laundry_occupancy

to_state: on

prob_given_true: 0.18 # (1.0 hours per day)

prob_given_false: 0.06 # (1.1 hours per day)

# When 'light.dining_room_main_lights' is 'on' ...

- platform: state

entity_id: light.dining_room_main_lights

to_state: on

prob_given_true: 0.14 # (0.8 hours per day)

prob_given_false: 0.03 # (0.5 hours per day)

Jupyter code

{

"metadata": {

"language_info": {

"codemirror_mode": {

"name": "python",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8"

},

"kernelspec": {

"name": "python",

"display_name": "Python (Pyodide)",

"language": "python"

}

},

"nbformat_minor": 4,

"nbformat": 4,

"cells": [

{

"cell_type": "code",

"source": "import dateutil.parser as dparser\nimport numpy as np\nimport os\nimport pandas\nfrom sklearn.feature_selection import SelectKBest\nfrom sklearn.feature_selection import chi2\nfrom sklearn.naive_bayes import MultinomialNB\nfrom sklearn.model_selection import train_test_split\nfrom sklearn.metrics import accuracy_score",

"metadata": {

"trusted": true

},

"execution_count": 1,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# CONFIGURATION\n###########################################\nHISTORY_EXPLORER_EXPORT_FILE='entities-exported.csv'\nTRUTH_COLUMN = 'binary_sensor.kitchen_occupancy'\nINCLUDE_TRUTH_COLUMN_AS_OBSERVATION = True\nIGNORE_COLUMNS_WHEN_CHOOSING_FEATURES = [\n 'datetime',\n 'Unnamed: 0'\n]\nTOP_N_FEATURES = 8\nSAVE_DEBUG_OUTPUT = True",

"metadata": {

"trusted": true

},

"execution_count": 2,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# read history explorer export\ndf = pandas.read_csv(HISTORY_EXPLORER_EXPORT_FILE)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# remove junk entries\n###########################################\ndf = df[df.State != \"unavailable\"]\ndf = df[df.State != \"unknown\"]",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# Take the sensor name and pivot it into a\n# column with it's state underneath it\n###########################################\nsensor = None\nunrolled = []\nfor idx, row in df.iterrows():\n try:\n dt = dparser.parse(row[0])\n value = row[1]\n unrolled.append([sensor, dt, value])\n except dparser.ParserError:\n sensor = row[0]\n continue\n \nsorted_df = pandas.DataFrame(unrolled, columns=['sensor', 'datetime', 'value']).sort_values(by=['datetime']).reset_index()\npivoted_df = pandas.pivot_table(sorted_df, index='datetime', columns='sensor', values='value', aggfunc='last')",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# Sanity Check the truth column\n###########################################\nif TRUTH_COLUMN not in pivoted_df:\n raise SystemExit(f\"TRUTH_COLUMN '{TRUTH_COLUMN}' does not exist in your CSV file. Here are the columns:{pivoted_df.columns}\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# fill in the data to make sure there\n# is one data point every minute\n###########################################\nprevious_values = [np.nan for x in pivoted_df.columns]\nnew_data = []\nfor dt, row in pivoted_df.iterrows():\n cur_and_prev = list(zip(list(row), previous_values))\n filled_row = [x[0] if not pandas.isnull(x[0]) else x[1] for x in cur_and_prev]\n previous_values = list(filled_row)\n \n new_row = [dt]\n new_row.extend(filled_row)\n \n new_data.append(new_row)\n \ncolumns = ['datetime']\ncolumns.extend(pivoted_df.columns)\nfilled_state_df = pandas.DataFrame(new_data, columns=columns)\n\n# truncate data to the minute\nfilled_state_df['datetime'] = filled_state_df['datetime'].apply(lambda x: x.floor('min'))\nfilled_state_df = filled_state_df.groupby('datetime', group_keys=False).tail(1)\n\n# fill in missing records for every minute of the day\nprevious_row = []\nprevious_dt = None\nnew_data = []\nfor idx, row in filled_state_df.iterrows():\n dt = row[0]\n \n if previous_dt is not None:\n # create missing times between last row and this one\n next_dt = previous_dt + pandas.Timedelta(seconds=60)\n while dt > next_dt:\n previous_row[0] = next_dt\n new_data.append(list(previous_row))\n next_dt = next_dt + pandas.Timedelta(seconds=60)\n new_data.append(list(row))\n \n previous_row = list(row)\n previous_dt = dt\n \ndf = pandas.DataFrame(new_data, columns=filled_state_df.columns)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# drop ignored columns\n###########################################\nif len(IGNORE_COLUMNS_WHEN_CHOOSING_FEATURES) > 0:\n for ignore in IGNORE_COLUMNS_WHEN_CHOOSING_FEATURES:\n if ignore in df.columns:\n df = df.drop(ignore, axis=1)\n else:\n print(f\"Ignored column '{ignore}' did not exist in the data\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# create columns for each unique state\n###########################################\norig_columns = df.columns\nfor col in orig_columns:\n \n if not INCLUDE_TRUTH_COLUMN_AS_OBSERVATION:\n if col == TRUTH_COLUMN:\n continue\n\n if col.startswith(\"sensor.\"):\n # numeric\n continue\n \n unique_states = df[col].unique()\n if len(unique_states) > 0:\n for state in unique_states:\n new_col = f\"{col}/{state}\"\n df[new_col] = df[col].copy().apply(lambda x: x == state)\n if col != TRUTH_COLUMN:\n df.drop(col, axis=1, inplace=True)\n \ndf.replace('off', False, inplace=True)\ndf.replace('on', True, inplace=True)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# create debug file\n###########################################\nif SAVE_DEBUG_OUTPUT:\n name = \"debug.csv\"\n if os.path.exists(name):\n os.remove(name)\n df.to_csv(name, sep=',')",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# train and find features\n###########################################\nX = df.drop([TRUTH_COLUMN], axis=1) \ny = df[TRUTH_COLUMN]\n\n# Split the data into training and testing sets\nX_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)\n\n# Feature selection using chi-squared\nchi2_selector = SelectKBest(chi2, k=TOP_N_FEATURES)\nX_train_chi2 = chi2_selector.fit_transform(X_train, y_train)\nX_test_chi2 = chi2_selector.transform(X_test)\n\n\n# Train a Naive Bayes classifier on the selected features\nclf = MultinomialNB()\nclf.fit(X_train_chi2, y_train)\n\n# Make predictions on the test set\ny_pred = clf.predict(X_test_chi2)\n\n# Calculate and print the accuracy of the classifier\naccuracy = accuracy_score(y_test, y_pred)\nprint(f\"# Accuracy with {TOP_N_FEATURES} selected features: {accuracy * 100:.2f}%\")\n\n# Get the indices of the selected features\nselected_feature_indices = chi2_selector.get_support(indices=True)\n\n# Get the names of the selected features\nselected_features = X.columns[selected_feature_indices]",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# print useful information for you to \n# create your baysian settings\n###########################################\ntotal_hours = len(X)/60\ntarget_on_hours = len(df[df[TRUTH_COLUMN] == 1])/60\ntarget_off_hours = len(df[df[TRUTH_COLUMN] == 0])/60\nprior = target_on_hours/total_hours\n\nprint(f\"# {TRUTH_COLUMN} is on {target_on_hours/total_hours*100:.02f}% of the time\")\nprint(f\"- platform: bayesian\")\nprint(f\" name: Probably {TRUTH_COLUMN} # CHANGE ME\")\nprint(f\" prior: {prior:.02f}\")\nprint(f\" probability_threshold: 0.8 # EXPERIMENT WITH ME\")\nprint(f\" observations:\")\n\nfor feature in selected_features:\n hours_given_true = (len(df[(df[TRUTH_COLUMN] == 1) & (df[feature] == 1)])/60)\n hours_per_day_given_true = hours_given_true/total_hours*24\n \n hours_given_false = (len(df[(df[TRUTH_COLUMN] == 0) & (df[feature] == 1)])/60)\n hours_per_day_given_false = hours_given_false/total_hours*24\n \n prob_given_true = hours_given_true/target_on_hours\n prob_given_false = hours_given_false/target_off_hours\n \n # make sure it is never 0 or 100%\n prob_given_true = 0.01 if prob_given_true < 0.01 else 0.99 if prob_given_true > 0.99 else prob_given_true\n prob_given_false = 0.01 if prob_given_false < 0.01 else 0.99 if prob_given_false > 0.99 else prob_given_false\n \n entity_id, to_state = feature.split('/')\n \n if to_state is None:\n print(f\"# skipping feature: {feature} could not be parsed\")\n continue\n print(f\" # When '{entity_id}' is '{to_state}' ...\")\n print(f\" - platform: state\")\n print(f\" entity_id: {entity_id}\")\n print(f\" to_state: {to_state}\")\n print(f\" prob_given_true: {prob_given_true:.02f} # ({hours_per_day_given_true:.01f} hours per day)\")\n print(f\" prob_given_false: {prob_given_false:.02f} # ({hours_per_day_given_false:.01f} hours per day)\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

}

]

}

This looks great, if you look at my goals for the Bayesian component, this overlaps significantly with points 3, 4 and 5. As you have shown, as long as you know the time periods of ‘ground truth’ and the sensors you think are correlated you can automate the production of the config.

Instead of building a plug-in it would be great to do this within core home-assistant’s config flow. We could even take it a step further and run a search on the history/state machine and pick/suggest sensors that are correlated with the ground truth time periods.

That sounds awesome. I know a lot about programming but not the home assistant development ecosystem.

I will begin reading about config flow as you suggested.

Do you have a recommended list of baysian modules already that are knows to work well on homeassistant hardware (I’m using scikit and pandas)? After reading some code for custom components I see there is a space to list requirements - just curious if there were some to avoid to ensure maximum compatibility for others.

I am personally excited about having cousin “probably is” sensors for all of my physical sensors!

I’ve made a few notable changes to the python code in the last day or so already to further improve it’s output.

- support for automatic numeric state experimentation. calculate the min, max, median, 25th percentile and 75th percentile… generate an above/below hypothesis for each of those

- support for automatic “state has been X for over X minutes” experiments (5, 15, 30, 60, 180 for now) - generate template observations

- make sure to quote values (bare on and off were doing bad things)

I’m not enormously familiar with config flow either, and particularly in order to have a nice UI we are going to want to do things like show device history graphs and have time selectors so users can input the ‘ground-truth’ in a friendly way, I am not sure how to do this, or even if it is possible. Its probably worth being familar with Options-Flow as well. This part of the docs is also helpful. I’ve asked on the discord for some idea of feasibility. Edit: I forgot I had asked this question before, apparently you can get state history in the config flow. Edit2: and here is an example of accessing hass in a config flow. And here is an example of how you can use the hass object to get state history

I don’t I’m afraid. The actual code only uses Bayes’ rule here! I think for most cases using a whole package/dependency is overkill. Perhaps it will be necessary when we want to work backwards to help the user decide which devices would be best to include in/add to the config to improve model performance as you have just shown in your automated experimentation examples - I would be very grateful for your experience and advice on this. It would be amazing to open up the config/option flow and have home assistant tell you “Adding hallway motion off for 60 mins will take your “everyone_is_asleep” sensor from 90% to 96% accuracy.”

I would love to have a look at those, do you have a git(hub) link?

Edit: Just had a thought looking at your code. I suspect we will find we cant do everything we want to in the config flow (I hope we can) but I wonder if we could fork the history-explorer card into a bayesian config generator card…

Edit: We should use the template sensor/binary sensor as a template for our config flow. And we should copy the idea of giving the option of either a binary sensor (with a threshold) or a percentage probability sensor core/homeassistant/components/template/config_flow.py at dev · home-assistant/core · GitHub