I was inspired by @HarvsG spreadsheet

and created a python notebook that helps jumpstart my template automatically.

Problem

My cats set my motion sensors off.

Well not all of them. A handful of my motion sensors can be placed around so that it only picks up human beings.

The trouble is,there are places that I want motion sensors not to look like eyesores (the kitchen) or creepy (in a bathroom). Low placement under cabinets is great for discrete placement, but picks up my wandering kittens.

Solution

I intuitively set up a Bayesian sensor that:

- watches for well placed motion in adjacent rooms to the kitchen

- watches for light patterns that I believe indicate humans and not felines

It worked ok but needed some tuning. @HarvsG spreadsheet is a lifesaver but gathering the hours per day was a chore.

Over Engineering

So I wrote this python notebook which will spit out a starting point for your own bayes sensor.

Here are the steps:

-

Make sure you have History Explorer installed

-

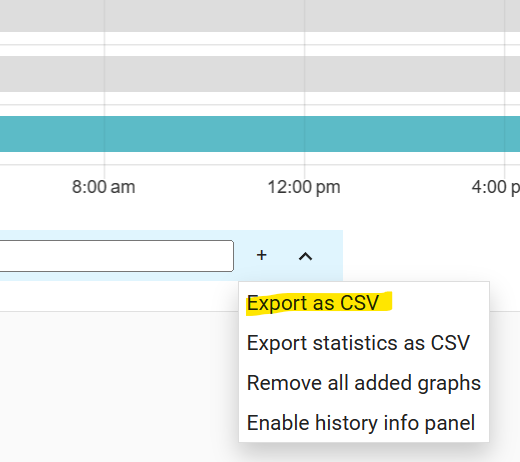

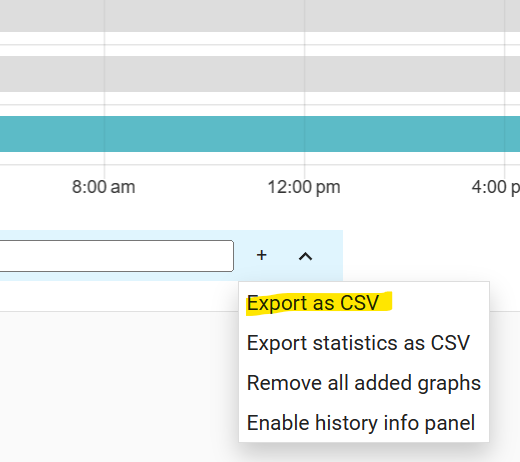

Create a permanent or temporary dashboard with all of the sensors you might believe could help you. Something like this (yellow highlights are my cats in the kitchen)

-

Add one more thing to that dashboard: a “truth sensor” (or manually edit: see later) that reflect events (like “real” motion vs “kitty” motion)

-

Export 4-5 days of statistics in the History Explorer using its “Export as CSV”

-

If you don’t have a “truth sensor”, edit that csv in some tool and add a new fake named sensor, manually putting in on/off events that mark the start and end of reality. For example, I copied my motion sensor and deleted rows corresponding to “kitty motion”.

-

Save the Jupyter notebook code at the end here with the file extension .ipynb.

-

Upload this notebook to the free Jupyter Lab.

-

Upload the csv you exported

-

edit the “CONFIGURATION” section at the top

- set your csv filename

- set the name of your “truth” column in the csv

- set how many correlated features (sensor + sensor state) combinations that you would like this to discover

-

Run the notebook by clicking on the triple arrow in the top toolbar

-

See example output at the bottom

The future

It would be great if I could turn this into a plugin (it would require numpy, and some bayesian libraries like scikit running on the homeassistant computing power) which would let you:

- select some schedul representing ground truth for your goal (ie. I was asleep these hours, or this is REAL motion)

- select a list of sensors you think my be interesting

- click ‘go’ and have it give you a suggestion.

I am not an expert in this ecosystem so this is all I’ll have for now.

Hopefully this is useful to someone.

Example Output (fully automated)

Now this output needs some hand tweaks. For example, while the kitchen motion “on” is a great indicator of motion, there is no need to ALSO have the kitchen motion “off” observation, so I’ll just delete that.

# binary_sensor.kitchen_occupancy is on 23.93% of the time

- platform: bayesian

name: Probably binary_sensor.kitchen_occupancy # CHANGE ME

prior: 0.24

probability_threshold: 0.8 # EXPERIMENT WITH ME

observations:

# When 'binary_sensor.den_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.den_occupancy

to_state: on

prob_given_true: 0.30 # (1.7 hours per day)

prob_given_false: 0.18 # (3.3 hours per day)

# When 'binary_sensor.front_yard_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.front_yard_occupancy

to_state: on

prob_given_true: 0.20 # (1.1 hours per day)

prob_given_false: 0.06 # (1.1 hours per day)

# When 'binary_sensor.hall_occupancy' is 'off' ...

- platform: state

entity_id: binary_sensor.hall_occupancy

to_state: off

prob_given_true: 0.59 # (3.4 hours per day)

prob_given_false: 0.93 # (16.9 hours per day)

# When 'binary_sensor.hall_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.hall_occupancy

to_state: on

prob_given_true: 0.41 # (2.4 hours per day)

prob_given_false: 0.07 # (1.3 hours per day)

# When 'binary_sensor.kitchen_occupancy' is 'off' ...

- platform: state

entity_id: binary_sensor.kitchen_occupancy

to_state: off

prob_given_true: 0.01 # (0.0 hours per day)

prob_given_false: 0.99 # (18.3 hours per day)

# When 'binary_sensor.kitchen_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.kitchen_occupancy

to_state: on

prob_given_true: 0.99 # (5.7 hours per day)

prob_given_false: 0.01 # (0.0 hours per day)

# When 'binary_sensor.laundry_occupancy' is 'on' ...

- platform: state

entity_id: binary_sensor.laundry_occupancy

to_state: on

prob_given_true: 0.18 # (1.0 hours per day)

prob_given_false: 0.06 # (1.1 hours per day)

# When 'light.dining_room_main_lights' is 'on' ...

- platform: state

entity_id: light.dining_room_main_lights

to_state: on

prob_given_true: 0.14 # (0.8 hours per day)

prob_given_false: 0.03 # (0.5 hours per day)

Jupyter code

{

"metadata": {

"language_info": {

"codemirror_mode": {

"name": "python",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8"

},

"kernelspec": {

"name": "python",

"display_name": "Python (Pyodide)",

"language": "python"

}

},

"nbformat_minor": 4,

"nbformat": 4,

"cells": [

{

"cell_type": "code",

"source": "import dateutil.parser as dparser\nimport numpy as np\nimport os\nimport pandas\nfrom sklearn.feature_selection import SelectKBest\nfrom sklearn.feature_selection import chi2\nfrom sklearn.naive_bayes import MultinomialNB\nfrom sklearn.model_selection import train_test_split\nfrom sklearn.metrics import accuracy_score",

"metadata": {

"trusted": true

},

"execution_count": 1,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# CONFIGURATION\n###########################################\nHISTORY_EXPLORER_EXPORT_FILE='entities-exported.csv'\nTRUTH_COLUMN = 'binary_sensor.kitchen_occupancy'\nINCLUDE_TRUTH_COLUMN_AS_OBSERVATION = True\nIGNORE_COLUMNS_WHEN_CHOOSING_FEATURES = [\n 'datetime',\n 'Unnamed: 0'\n]\nTOP_N_FEATURES = 8\nSAVE_DEBUG_OUTPUT = True",

"metadata": {

"trusted": true

},

"execution_count": 2,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# read history explorer export\ndf = pandas.read_csv(HISTORY_EXPLORER_EXPORT_FILE)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# remove junk entries\n###########################################\ndf = df[df.State != \"unavailable\"]\ndf = df[df.State != \"unknown\"]",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# Take the sensor name and pivot it into a\n# column with it's state underneath it\n###########################################\nsensor = None\nunrolled = []\nfor idx, row in df.iterrows():\n try:\n dt = dparser.parse(row[0])\n value = row[1]\n unrolled.append([sensor, dt, value])\n except dparser.ParserError:\n sensor = row[0]\n continue\n \nsorted_df = pandas.DataFrame(unrolled, columns=['sensor', 'datetime', 'value']).sort_values(by=['datetime']).reset_index()\npivoted_df = pandas.pivot_table(sorted_df, index='datetime', columns='sensor', values='value', aggfunc='last')",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# Sanity Check the truth column\n###########################################\nif TRUTH_COLUMN not in pivoted_df:\n raise SystemExit(f\"TRUTH_COLUMN '{TRUTH_COLUMN}' does not exist in your CSV file. Here are the columns:{pivoted_df.columns}\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# fill in the data to make sure there\n# is one data point every minute\n###########################################\nprevious_values = [np.nan for x in pivoted_df.columns]\nnew_data = []\nfor dt, row in pivoted_df.iterrows():\n cur_and_prev = list(zip(list(row), previous_values))\n filled_row = [x[0] if not pandas.isnull(x[0]) else x[1] for x in cur_and_prev]\n previous_values = list(filled_row)\n \n new_row = [dt]\n new_row.extend(filled_row)\n \n new_data.append(new_row)\n \ncolumns = ['datetime']\ncolumns.extend(pivoted_df.columns)\nfilled_state_df = pandas.DataFrame(new_data, columns=columns)\n\n# truncate data to the minute\nfilled_state_df['datetime'] = filled_state_df['datetime'].apply(lambda x: x.floor('min'))\nfilled_state_df = filled_state_df.groupby('datetime', group_keys=False).tail(1)\n\n# fill in missing records for every minute of the day\nprevious_row = []\nprevious_dt = None\nnew_data = []\nfor idx, row in filled_state_df.iterrows():\n dt = row[0]\n \n if previous_dt is not None:\n # create missing times between last row and this one\n next_dt = previous_dt + pandas.Timedelta(seconds=60)\n while dt > next_dt:\n previous_row[0] = next_dt\n new_data.append(list(previous_row))\n next_dt = next_dt + pandas.Timedelta(seconds=60)\n new_data.append(list(row))\n \n previous_row = list(row)\n previous_dt = dt\n \ndf = pandas.DataFrame(new_data, columns=filled_state_df.columns)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# drop ignored columns\n###########################################\nif len(IGNORE_COLUMNS_WHEN_CHOOSING_FEATURES) > 0:\n for ignore in IGNORE_COLUMNS_WHEN_CHOOSING_FEATURES:\n if ignore in df.columns:\n df = df.drop(ignore, axis=1)\n else:\n print(f\"Ignored column '{ignore}' did not exist in the data\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# create columns for each unique state\n###########################################\norig_columns = df.columns\nfor col in orig_columns:\n \n if not INCLUDE_TRUTH_COLUMN_AS_OBSERVATION:\n if col == TRUTH_COLUMN:\n continue\n\n if col.startswith(\"sensor.\"):\n # numeric\n continue\n \n unique_states = df[col].unique()\n if len(unique_states) > 0:\n for state in unique_states:\n new_col = f\"{col}/{state}\"\n df[new_col] = df[col].copy().apply(lambda x: x == state)\n if col != TRUTH_COLUMN:\n df.drop(col, axis=1, inplace=True)\n \ndf.replace('off', False, inplace=True)\ndf.replace('on', True, inplace=True)",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# create debug file\n###########################################\nif SAVE_DEBUG_OUTPUT:\n name = \"debug.csv\"\n if os.path.exists(name):\n os.remove(name)\n df.to_csv(name, sep=',')",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# train and find features\n###########################################\nX = df.drop([TRUTH_COLUMN], axis=1) \ny = df[TRUTH_COLUMN]\n\n# Split the data into training and testing sets\nX_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)\n\n# Feature selection using chi-squared\nchi2_selector = SelectKBest(chi2, k=TOP_N_FEATURES)\nX_train_chi2 = chi2_selector.fit_transform(X_train, y_train)\nX_test_chi2 = chi2_selector.transform(X_test)\n\n\n# Train a Naive Bayes classifier on the selected features\nclf = MultinomialNB()\nclf.fit(X_train_chi2, y_train)\n\n# Make predictions on the test set\ny_pred = clf.predict(X_test_chi2)\n\n# Calculate and print the accuracy of the classifier\naccuracy = accuracy_score(y_test, y_pred)\nprint(f\"# Accuracy with {TOP_N_FEATURES} selected features: {accuracy * 100:.2f}%\")\n\n# Get the indices of the selected features\nselected_feature_indices = chi2_selector.get_support(indices=True)\n\n# Get the names of the selected features\nselected_features = X.columns[selected_feature_indices]",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

},

{

"cell_type": "code",

"source": "###########################################\n# print useful information for you to \n# create your baysian settings\n###########################################\ntotal_hours = len(X)/60\ntarget_on_hours = len(df[df[TRUTH_COLUMN] == 1])/60\ntarget_off_hours = len(df[df[TRUTH_COLUMN] == 0])/60\nprior = target_on_hours/total_hours\n\nprint(f\"# {TRUTH_COLUMN} is on {target_on_hours/total_hours*100:.02f}% of the time\")\nprint(f\"- platform: bayesian\")\nprint(f\" name: Probably {TRUTH_COLUMN} # CHANGE ME\")\nprint(f\" prior: {prior:.02f}\")\nprint(f\" probability_threshold: 0.8 # EXPERIMENT WITH ME\")\nprint(f\" observations:\")\n\nfor feature in selected_features:\n hours_given_true = (len(df[(df[TRUTH_COLUMN] == 1) & (df[feature] == 1)])/60)\n hours_per_day_given_true = hours_given_true/total_hours*24\n \n hours_given_false = (len(df[(df[TRUTH_COLUMN] == 0) & (df[feature] == 1)])/60)\n hours_per_day_given_false = hours_given_false/total_hours*24\n \n prob_given_true = hours_given_true/target_on_hours\n prob_given_false = hours_given_false/target_off_hours\n \n # make sure it is never 0 or 100%\n prob_given_true = 0.01 if prob_given_true < 0.01 else 0.99 if prob_given_true > 0.99 else prob_given_true\n prob_given_false = 0.01 if prob_given_false < 0.01 else 0.99 if prob_given_false > 0.99 else prob_given_false\n \n entity_id, to_state = feature.split('/')\n \n if to_state is None:\n print(f\"# skipping feature: {feature} could not be parsed\")\n continue\n print(f\" # When '{entity_id}' is '{to_state}' ...\")\n print(f\" - platform: state\")\n print(f\" entity_id: {entity_id}\")\n print(f\" to_state: {to_state}\")\n print(f\" prob_given_true: {prob_given_true:.02f} # ({hours_per_day_given_true:.01f} hours per day)\")\n print(f\" prob_given_false: {prob_given_false:.02f} # ({hours_per_day_given_false:.01f} hours per day)\")",

"metadata": {

"trusted": true

},

"execution_count": null,

"outputs": []

}

]

}

My proposal is actually like that - using his “static” formula with new input values whenever they change, even slightly, even every milli. It is not breaking his theorem IMHO.

My proposal is actually like that - using his “static” formula with new input values whenever they change, even slightly, even every milli. It is not breaking his theorem IMHO.