Background:

For reference, my machine is a Dell Optiplex 9020 Micro with 16GB RAM, an i7-4785T (4 cores, 8 threads), an NVMe SSD plus another solid-state drive, running Proxmox. (This is not an ultra-powerful machine.)

My setup guide is documented here:

Google Coral USB + Frigate + PROXMOX - Third party integrations - Home Assistant Community

I ordered two “Home Assistant Voice PE” devices a while ago and finally found some time to get them set up. I installed the Wyoming protocol, faster-whisper and Piper, and while it sort of worked, the results were slow and unimpressive.

Meanwhile, I somehow stumbled onto a lot of videos of what people are creating with N8N, and it looked impressive.

If only there were some way to use n8n as the brains for the Home Assistant voice assistant.

Is it possible? Yes! And it wasn’t that hard actually.

There may be other ways to achieve this, and my setup isn't 100% local, but it's mostly local and completely free.

The basic premise is to set up n8n so that its webhooks mirror the Ollama API, and then install the Ollama integration in Home Assistant and point it at n8n instead of a real Ollama server.

Architecture:

My setup is as follows:

- Proxmox running on bare metal (Optiplex 9020)
  - VM (Home Assistant Proxmox install, from the “Alternative - Home Assistant” page)
  - LXC
    - Docker (with Portainer installed)
      - Whisper
      - n8n
      - n8n-import
      - n8n-postgres-1
      - qdrant

Part 1 – Home Assistant Basic Setup:

- Do the basic setup for Home Assistant voice (I won't bother detailing this for now; you can find it elsewhere). Get to the point where your “Home Assistant Voice PE” is set up and you are able to talk to Home Assistant using the device.

Part 2 – Docker / Portainer Setup:

I had to fiddle with this a bit as I'm not super experienced with Docker and Portainer. I just know enough to get by. Note that I don’t have this fully secured with HTTPS yet, so you should look into that once you are up and running. If/when I figure that out, maybe I will post an update to this thread.

- You will need a custom DNS name for this to work. Technically n8n will start without one, but a lot of the OAuth providers won't let you use an IP address. Swap in your own domain in place of “your.domainnamehere.com”.

- Note that in the config below, the webhook endpoint is renamed to “api” so that n8n's webhook URLs start with /api, the same path prefix Ollama uses. If you want to test the workflow, you will need to adjust the config and set “N8N_ENDPOINT_WEBHOOK_TEST” to api instead of “N8N_ENDPOINT_WEBHOOK”.

- Create a folder in the LXC for your backups. I used “/home/n8n/backup”. Also create “/home/n8n/shared”. You will need to change these in the setup below if you opt to point somewhere different.

- Create a new “stack” that looks like this:

volumes:
  n8n_storage:
  postgres_storage:
  qdrant_storage:

networks:
  demo:

x-n8n: &service-n8n
  image: n8nio/n8n:latest
  networks: ['demo']
  environment:
    - DB_TYPE=postgresdb
    - DB_POSTGRESDB_HOST=postgres
    - DB_POSTGRESDB_USER=${POSTGRES_USER}
    - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
    - N8N_DIAGNOSTICS_ENABLED=false
    - N8N_PERSONALIZATION_ENABLED=false
    - N8N_ENCRYPTION_KEY
    - N8N_USER_MANAGEMENT_JWT_SECRET
    - N8N_SECURE_COOKIE=false
    - N8N_ENDPOINT_WEBHOOK=api
    - N8N_ENDPOINT_WEBHOOK_TEST=apitest
    - N8N_HOST=your.domainnamehere.com
    - N8N_PORT=5678
    - N8N_PROTOCOL=http
    - WEBHOOK_URL=http://your.domainnamehere.com:5678/

services:
  postgres:
    image: postgres:16-alpine
    hostname: postgres
    networks: ['demo']
    restart: unless-stopped
    environment:
      - POSTGRES_USER
      - POSTGRES_PASSWORD
      - POSTGRES_DB
    volumes:
      - postgres_storage:/var/lib/postgresql/data
    healthcheck:
      test: ['CMD-SHELL', 'pg_isready -h localhost -U ${POSTGRES_USER} -d ${POSTGRES_DB}']
      interval: 5s
      timeout: 5s
      retries: 10

  n8n-import:
    <<: *service-n8n
    hostname: n8n-import
    container_name: n8n-import
    entrypoint: /bin/sh
    command:
      - "-c"
      - "n8n import:credentials --separate --input=/backup/credentials && n8n import:workflow --separate --input=/backup/workflows"
    volumes:
      - ./n8n/backup:/backup
    depends_on:
      postgres:
        condition: service_healthy

  n8n:
    <<: *service-n8n
    hostname: n8n
    container_name: n8n
    restart: unless-stopped
    ports:
      - 5678:5678
    volumes:
      - n8n_storage:/home/node/.n8n
      - /home/n8n/backup:/backup
      - /home/n8n/shared:/data/shared
    depends_on:
      postgres:
        condition: service_healthy

  qdrant:
    image: qdrant/qdrant
    hostname: qdrant
    container_name: qdrant
    networks: ['demo']
    restart: unless-stopped
    ports:
      - 6333:6333
    volumes:
      - qdrant_storage:/qdrant/storage

  whisper:
    image: homeassistant/amd64-addon-whisper:latest
    container_name: whisper
    ports:
      - 10300:10300
    restart: unless-stopped
    volumes:
      - /home/whisper-data:/data
    entrypoint: python3
    command: -m wyoming_faster_whisper --uri tcp://0.0.0.0:10300 --model tiny-int8 --beam-size 1 --language pl --data-dir /data --download-dir /data

- Ensure you set the above “POSTGRES_DB” value to “n8n”. For some reason I couldn’t get it to work with any other DB name.

- Deploy the stack. The volumes should be created and hopefully everything starts.

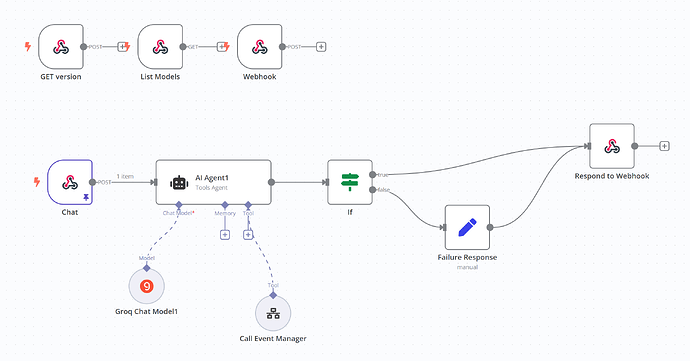
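The stack reads several values from the environment (POSTGRES_USER, POSTGRES_PASSWORD, POSTGRES_DB, N8N_ENCRYPTION_KEY and N8N_USER_MANAGEMENT_JWT_SECRET), which you can enter in Portainer's environment variables section for the stack. Here is a minimal Python sketch for generating them. The default user name and the secret lengths are my own assumptions for illustration, not requirements I have verified.

```python
import secrets


def make_env(postgres_user: str = "n8n") -> dict[str, str]:
    """Build the environment variables the stack expects, with random secrets."""
    return {
        "POSTGRES_USER": postgres_user,  # assumed user name, pick your own
        "POSTGRES_PASSWORD": secrets.token_urlsafe(24),
        "POSTGRES_DB": "n8n",  # the only database name that worked in this setup
        "N8N_ENCRYPTION_KEY": secrets.token_hex(32),
        "N8N_USER_MANAGEMENT_JWT_SECRET": secrets.token_hex(32),
    }


if __name__ == "__main__":
    # Print KEY=value lines that can be pasted into the stack's environment.
    for key, value in make_env().items():
        print(f"{key}={value}")
```

Generate these once and keep them somewhere safe. As far as I understand it, n8n uses the encryption key to protect stored credentials, so changing it later would likely lock you out of them.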

Part 3 – Create the n8n “Ollama Proxy” workflow

Note that your Portainer setup must have the N8N_ENDPOINT_WEBHOOK property set to “api” for this to work normally. Alternatively, N8N_ENDPOINT_WEBHOOK_TEST must be set to “api” while you are testing (but not both at the same time, obviously).
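To make the path mapping concrete, here is a small Python sketch of how a webhook path ends up as a URL under each prefix. The helper name `webhook_url` is made up for illustration. The prefixes and host are the ones from the stack config.

```python
def webhook_url(base: str, endpoint_prefix: str, path: str) -> str:
    """Join the n8n base URL, the webhook endpoint prefix, and a webhook path."""
    return "/".join(part.strip("/") for part in (base, endpoint_prefix, path))


BASE = "http://your.domainnamehere.com:5678"

# Production webhooks (N8N_ENDPOINT_WEBHOOK=api) land where Ollama's API would be.
print(webhook_url(BASE, "api", "version"))
# Test webhooks (N8N_ENDPOINT_WEBHOOK_TEST=apitest) live under a different prefix.
print(webhook_url(BASE, "apitest", "version"))
```

Home Assistant only ever calls the /api/... paths, which is why whichever of the two settings you want it to reach has to be the one set to “api”.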

Create a workflow with 4 webhook triggers:

- Get Version
  - HTTP Method: GET
  - Path: version
  - Respond: Immediately
  - Response Data: { "version": "0.5.13" }

- List Models
  - HTTP Method: GET
  - Path: tags
  - Respond: Immediately
  - Response Data:

{ "models": [ { "name": "llama3.2:latest", "model": "llama3.2:latest", "modified_at": "2025-03-05T20:11:32.225032-08:00", "size": 2019393189, "digest": "a80c4f17acd55265feec403c7aef86be0c25983ab279d83f3bcd3abbcb5b8b72", "details": { "parent_model": "", "format": "gguf", "family": "llama", "families": [ "llama" ], "parameter_size": "3.2B", "quantization_level": "Q4_K_M" } }, { "name": "deepseek-r1:latest", "model": "deepseek-r1:latest", "modified_at": "2025-03-03T17:26:50.7367646-08:00", "size": 4683075271, "digest": "0a8c266910232fd3291e71e5ba1e058cc5af9d411192cf88b6d30e92b6e73163", "details": { "parent_model": "", "format": "gguf", "family": "qwen2", "families": [ "qwen2" ], "parameter_size": "7.6B", "quantization_level": "Q4_K_M" } }, { "name": "deepseek-r1:1.5b", "model": "deepseek-r1:1.5b", "modified_at": "2025-03-02T18:54:23.6412071-08:00", "size": 1117322599, "digest": "a42b25d8c10a841bd24724309898ae851466696a7d7f3a0a408b895538ccbc96", "details": { "parent_model": "", "format": "gguf", "family": "qwen2", "families": [ "qwen2" ], "parameter_size": "1.8B", "quantization_level": "Q4_K_M" } }, { "name": "deepseek-r1:14b", "model": "deepseek-r1:14b", "modified_at": "2025-03-02T16:09:37.6128441-08:00", "size": 8988112040, "digest": "ea35dfe18182f635ee2b214ea30b7520fe1ada68da018f8b395b444b662d4f1a", "details": { "parent_model": "", "format": "gguf", "family": "qwen2", "families": [ "qwen2" ], "parameter_size": "14.8B", "quantization_level": "Q4_K_M" } } ] }

- Chat
  - HTTP Method: POST
  - Path: chat
  - Respond: Immediately
  - Response Data: “Hello World from n8n”
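The “Hello World” text is only a placeholder. A real Ollama server answers a non-streaming chat request with a JSON object, so once you wire an AI model into the Chat webhook, the response will probably need to look something like the sketch below. The helper name `ollama_chat_reply` is made up, and I have not verified that this minimal set of fields (or a non-streaming reply at all) is enough for the Home Assistant integration, so treat it as a starting point.

```python
import json
from datetime import datetime, timezone


def ollama_chat_reply(model: str, content: str) -> dict:
    """Build a minimal Ollama-style reply for a non-streaming chat request."""
    return {
        "model": model,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "message": {"role": "assistant", "content": content},
        "done": True,
    }


# Example body that the Chat webhook could return instead of plain text.
print(json.dumps(ollama_chat_reply("llama3.2:latest", "Hello World from n8n"), indent=2))
```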

Part 4 – Free AI

If you have a good GPU behind your Docker setup, you can install an Ollama Docker container and point n8n at that for your models. I tried that and it did work, but it was pretty slow, so I started looking at AI pricing. I found that Groq’s pricing seemed quite a bit cheaper than everything else, so I set up an account and found that they actually have a free tier with access to lots of decent models!

Part 5 – Point Home Assistant at N8N using the Ollama integration

Go back to Home Assistant, install the Ollama integration, and when you are prompted for an IP address, point it at your running n8n installation (e.g. 192.168.200.122:5678).

It will make calls to list the models available etc, and your n8n webhook workflow will respond, and trick home assistant into thinking it is talking to Ollama.
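If the integration refuses to connect, it can help to make the same kind of calls yourself. Below is a minimal Python sketch that requests /api/version and /api/tags and returns the model names n8n advertises. The function names are made up, the address is the example one from above, and the exact sequence of calls Home Assistant makes may differ.

```python
import json
from urllib.request import urlopen


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """GET a URL and parse the response body as JSON."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


def check_fake_ollama(base: str, fetch=fetch_json) -> list[str]:
    """Return the model names served by the n8n 'Ollama' webhooks."""
    version = fetch(f"{base}/api/version")
    if "version" not in version:
        raise ValueError("/api/version did not return a version field")
    tags = fetch(f"{base}/api/tags")
    return [model["name"] for model in tags.get("models", [])]


# Example (needs the n8n workflow to be active):
# print(check_fake_ollama("http://192.168.200.122:5678"))
```

If both calls succeed and the list contains the models from your List Models webhook, the n8n side is behaving the way the integration expects for discovery.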

Part 6 – Point Home Assistant at faster-whisper

Go back to Home Assistant, add an instance of faster-whisper and point it at your instance running in Docker. I might be wrong on this part, but I found that despite running on the same underlying hardware, mine seemed to be much faster when running in the Docker LXC rather than inside my Home Assistant VM. For TTS, just use the Google option, as it works just fine.
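Before adding it in Home Assistant, you can check that the Whisper container is reachable on its Wyoming port (10300 in the stack). This is a minimal Python sketch that only tests whether the TCP port accepts connections. It does not speak the Wyoming protocol, and the host address in the example is an assumption (the Docker LXC's IP from earlier).

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example (assumed address of the Docker LXC):
# print(port_open("192.168.200.122", 10300))
```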

Summary:

I only just set this up on Saturday but have been having a lot of fun with it so far.

I would be interested to know if anyone else has tried this, or if anyone else manages to get this going, and what they are able to build with home assistant and n8n.