@tetele - thanks for naming this!

Now that I generally understand the basics of this new voice ecosystem (Whisper, Piper, HA voice assistants, etc.), I’ve realized that many (over 50%) of the voice commands I use every day aren’t implemented in HA via its Assistant. As a stopgap, is there a way to intercept (or know programmatically) when a command sent to HA via Assistant fails with “Sorry I couldn’t understand that”, or returns ANY other result for that matter, and then perform another action?

Use cases (there are dozens, especially for integrations that only Alexa supports)

Alexa play x on y (Pandora and Apple Music especially, which aren’t supported by Music Assistant on HA Supervised installs)

Alexa set a timer called t on z

Alexa what’s the name of that song

Alexa add carrots to my Walmart list (AnyList integration)

Alexa how many ounces in 1 cup

Alexa what is 2050 divided by pi

Alexa what is a recipe to substitute buttermilk

And on and on and on. Dozens (hundreds) more…

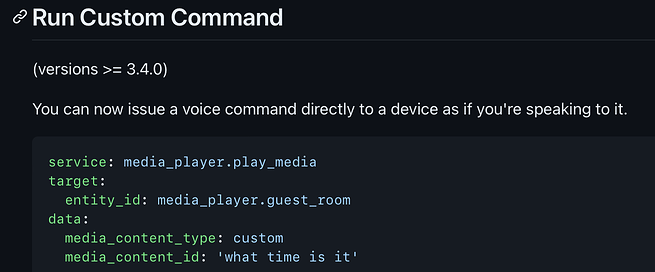

On such a failure (better yet, via a new trigger in an automation), I would send the same STT result from Assistant on to Amazon’s Alexa using this service, which is basically just a text interface to Alexa instead of voice commands:

Or in a HA automation:

    alias: Sorry I couldn't understand that
    description: "Pass all misunderstood stuff to Alexa"
    trigger:
      - platform: conversation
        on_response: "Sorry I couldn't understand that"  # (this doesn't exist yet)
    # Add filters for specific first-word actions like "Play", "What", etc. if needed;
    # otherwise send all failures to Alexa.
    condition: []
    action:
      - service: media_player.play_media
        target:
          entity_id: media_player.kitchen_alexa
        data:
          media_content_type: custom
          # conversation__agent_input_sentence is a placeholder for the STT text
          media_content_id: "{{ conversation__agent_input_sentence }}"

This would let the commands that HA understands execute within HA and pass the ones that fail on to Alexa to handle. I know the whole point is to replace these nasty, cloud-based spy boxes, but we just aren’t there yet. Most of us don’t have the time or skill to write custom sentences and account for all the things we have become used to having in Alexa, Google, etc.
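A rough sketch of that "try HA first, fall back to Alexa" flow, assuming the text can be handed to a script: it calls the `conversation.process` service, captures the result with `response_variable`, and forwards the sentence only when the response type is an error. The script name `assist_or_alexa` and the `text` field are made up for illustration, and the `result.response.response_type` path is my understanding of the conversation response format, so check it against your HA version. Note that this does not hook into the voice pipeline itself; something else still has to pass the sentence to the script.

```yaml
# Hypothetical script: let Assist handle the sentence, fall back to Alexa on error.
script:
  assist_or_alexa:
    alias: Try Assist, fall back to Alexa
    fields:
      text:
        description: The sentence to process (for example, an STT result)
        example: "how many ounces in 1 cup"
    sequence:
      # Run the sentence through the default conversation agent and keep the result.
      - service: conversation.process
        data:
          text: "{{ text }}"
        response_variable: result
      # Assumption: failed matches come back with response_type "error".
      - if:
          - condition: template
            value_template: "{{ result.response.response_type == 'error' }}"
        then:
          # Same Alexa custom command call as in the automation above.
          - service: media_player.play_media
            target:
              entity_id: media_player.kitchen_alexa
            data:
              media_content_type: custom
              media_content_id: "{{ text }}"
```

Commands that HA understands are executed by `conversation.process` as usual; only the failures reach the Alexa call.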

I would change Alexa’s wake word to Echo, and this would shim any remote wake-word device (ESPHome, Atom, Box3, etc.) squarely between HA and Amazon’s Alexa, sending fewer and fewer requests on to Amazon, Google, etc. as time goes by and HA matures in the voice arena.

Using the Alexa custom command service (by @alandtse), I’ve noticed that since you bypass the Amazon wake word and Amazon’s spotty STT, the result is pretty snappy.
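As a partial workaround that does not depend on a failure trigger, a sentence trigger with a wildcard could forward anything spoken after an explicit prefix straight to the custom command service. This is only a sketch: the prefix "ask echo" and the slot name `request` are arbitrary choices, and it assumes an HA version whose sentence triggers support wildcards and expose them as `trigger.slots`.

```yaml
alias: Forward "ask echo ..." to Alexa
description: "Send everything after the prefix to Alexa as a text command"
trigger:
  - platform: conversation
    command:
      # {request} is a wildcard that captures the rest of the sentence.
      - "ask echo {request}"
condition: []
action:
  - service: media_player.play_media
    target:
      entity_id: media_player.kitchen_alexa
    data:
      media_content_type: custom
      # The captured wildcard text is passed on unchanged.
      media_content_id: "{{ trigger.slots.request }}"
```

Saying "ask echo how many ounces in 1 cup" to Assist would then send "how many ounces in 1 cup" to the Echo device. It still requires remembering the prefix, so it is not a true fallback.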

How might I do this? Is this a feature request or a custom integration? Or can I do this today?

Jeff