Intro

I’m running Whisper with (Nvidia) GPU support for local speech to text (STT) recognition. It was surprisingly difficult to find all required information in one place, so I thought I’ll share my results:

To be precise, I’m using the Faster Whisper implementation. There is a linuxserver/faster-whisper docker image, which adds the Wyoming protocol for HA along with GPU support.

I’ve kept my German configuration example as I think this is mostly interesting for non-English setups. Should be easy to adjust though.

Setup

I’m running it externally on a basic Ubuntu server.

Setup: Prerequisites

You’ll first need to install docker (incl. docker compose), if not done yet.

A little less common may be the requirement for Nvidia Container Toolkit to be installed on your host. Example instructions for Ubuntu:

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt-get update

export NVIDIA_CONTAINER_TOOLKIT_VERSION=1.17.8-1

sudo apt-get install -y \

nvidia-container-toolkit=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \

nvidia-container-toolkit-base=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \

libnvidia-container-tools=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \

libnvidia-container1=${NVIDIA_CONTAINER_TOOLKIT_VERSION}

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

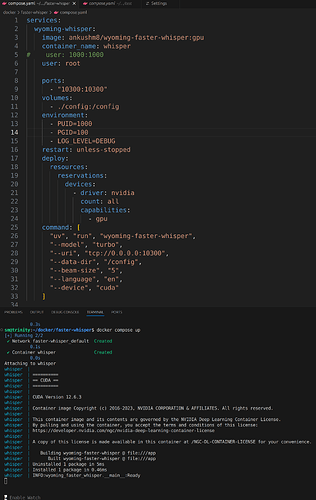

Setup: Docker Compose Service

Example setup for /opt/faster-whisper:

cd /opt

sudo mkdir faster-whisper

sudo chown server:server faster-whisper

cd faster-whisper

vim docker-compose.yml

docker-compose.yml

services:

faster-whisper:

image: lscr.io/linuxserver/faster-whisper:gpu

container_name: faster-whisper

environment:

- PUID=1000

- PGID=1000

- TZ=Europe/Berlin

- WHISPER_MODEL=large-v3

- WHISPER_LANG=de

- WHISPER_BEAM=20

- LOG_LEVEL=DEBUG

volumes:

- ./faster-whisper/data:/config

- ./faster-whisper/run:/etc/s6-overlay/s6-rc.d/svc-whisper/run

ports:

- 10300:10300

restart: unless-stopped

network_mode: host

runtime: nvidia

deploy:

resources:

reservations:

devices:

- driver: nvidia

count: all

capabilities:

- gpu

- utility

- compute

Setup: Override initial-prompt (optional)

Unfortunately, the docker image does not expose the initial-prompt option yet. So I’ll simply duplicated the file with the relevant command to bind-mount it:

sudo vim /opt/faster-whisper/faster-whisper/run

faster-whisper/run

#!/command/with-contenv bash

# shellcheck shell=bash

export LD_LIBRARY_PATH=$(python3 -c 'import os; import nvidia.cublas.lib; import nvidia.cudnn.lib; print(os.path.dirname(nvidia.cublas.lib.__path__[0]) + "/lib:" + os.path.dirname(nvidia.cudnn.lib.__path__[0]) + "/lib")')

exec \

s6-notifyoncheck -d -n 300 -w 1000 -c "nc -z localhost 10300" \

s6-setuidgid abc python3 -m wyoming_faster_whisper \

--uri 'tcp://0.0.0.0:10300' \

--device cuda \

--model "${WHISPER_MODEL}" \

--beam-size "${WHISPER_BEAM:-1}" \

--language "${WHISPER_LANG:-en}" \

--data-dir /config \

--download-dir /config \

--initial-prompt "Du sollst primär Sprachbefehle für unser Smart Home erkennen. Alle Sätze sind Befehle oder Fragen. Entferne jegliche Hinweise auf Untertitel. Ein Satz fängt selten mit Ich an."

Setup: Final Steps

Run:

docker compose up [-d]

For use with Home Assistant Assist, add the Wyoming integration and supply the hostname/IP and port that Whisper is running on.

Tuning

Tuning: Beam Size

Try different values for WHISPER_BEAM. Default of the original Whisper implementation is 1. Default of Faster Whisper is 5. Higher values should result in higher accuracy, but also higher VRAM usage.

I’m currently using a quite high value of 20, because at least for German accuracy still seems to be a more relevant problem than speed.

Tuning: Model

You can adjust WHISPER_MODEL to any of the predefined models:

_MODELS = {

"tiny.en": "Systran/faster-whisper-tiny.en",

"tiny": "Systran/faster-whisper-tiny",

"base.en": "Systran/faster-whisper-base.en",

"base": "Systran/faster-whisper-base",

"small.en": "Systran/faster-whisper-small.en",

"small": "Systran/faster-whisper-small",

"medium.en": "Systran/faster-whisper-medium.en",

"medium": "Systran/faster-whisper-medium",

"large-v1": "Systran/faster-whisper-large-v1",

"large-v2": "Systran/faster-whisper-large-v2",

"large-v3": "Systran/faster-whisper-large-v3",

"large": "Systran/faster-whisper-large-v3",

"distil-large-v2": "Systran/faster-distil-whisper-large-v2",

"distil-medium.en": "Systran/faster-distil-whisper-medium.en",

"distil-small.en": "Systran/faster-distil-whisper-small.en",

"distil-large-v3": "Systran/faster-distil-whisper-large-v3",

"distil-large-v3.5": "distil-whisper/distil-large-v3.5-ct2",

"large-v3-turbo": "mobiuslabsgmbh/faster-whisper-large-v3-turbo",

"turbo": "mobiuslabsgmbh/faster-whisper-large-v3-turbo",

}

Alternatively, you can also easily use any CTranslate2-compatible model. This link gives you a filtered list of supported models on Hugging Face. Just use the model name (e.g. guillaumekln/faster-whisper-large-v2) from the website for the value of WHISPER_MODEL and Faster Whisper will automatically take care of downloading it etc.

Again, I prefer accuracy and so I’m using the “best” default model large-v3. I’ve also tried

quite a few others, but couldn’t find a better one, even though it may have been optimized for German etc.

Tuning: Initial Prompt

You may want to optimize the --initial-prompt setting mentioned earlier. I haven’t put too many thoughts into this yet though.

Performance

On my 3090 RTX, local speech-to-text recognition now takes about half a second. Quality is the best I could get so far. To be honest, it’s still rather acceptable and not too good yet. Looking forward on receiving feedback to optimize.