Long-Term Aggregated Entity History - A script to access historical values (recorder statistics)

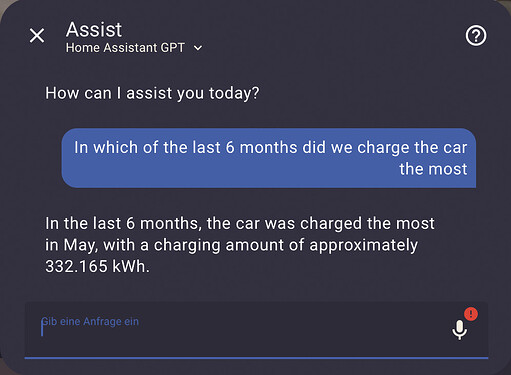

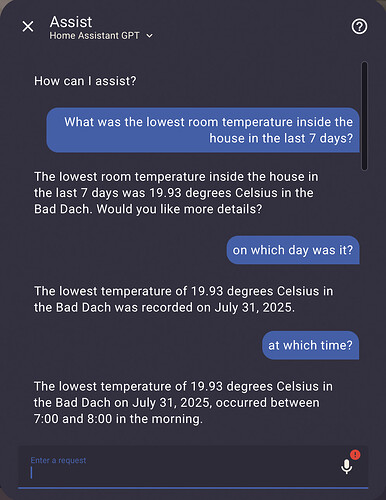

Can be used for questions like:

- How much energy did we export this month?

- Tell me the min/max temperatures in the garden over the last 30 days.
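As a rough sketch, the first question could map to a single call like the one below, for example in Developer Tools → Actions in YAML mode. `script.entity_history_aggregated_statistics` and `sensor.grid_export_energy` are hypothetical IDs: the script ID depends on how you saved the script, and the export meter depends on your setup. Because an export meter is a cumulative kWh counter, `change` with `period: total` should return a single object holding the kWh added since the start of the month.

```yaml
# Hypothetical IDs: adjust the script ID and the export meter to your setup.
action: script.entity_history_aggregated_statistics
data:
  entity_ids: sensor.grid_export_energy
  # Local time without a time zone suffix; end_time is omitted, so "now" is used.
  start_time: "2025-07-01 00:00:00"
  period: total
  aggregation_types:
    - change
```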

I noticed that gpt-4o-mini often fails to handle time zone conversion.

It likes to pass simpler local time strings without a time zone to scripts. It then either forgets to convert the UTC results back to local time, or it tells you that the tool returned no matching data, because it didn't account for the different time zones of input and output.

Hints and reminders about this in the tool description and in the prompt didn't help.

gpt-4.1-mini handles this far better.

To keep my Entity History script usable with gpt-4o-mini, I changed it to accept dates in local time and to convert the returned timestamps to local time as well.

This helps gpt-4o-mini a lot to stay on track.
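On the output side, the conversion is just a filter chain. The snippet below is a hypothetical `variables` step (the variable names are invented for illustration) that applies the same two filter chains the script uses to a fixed UTC value, assuming the recorder hands back timezone-aware timestamps like this one. The template parts can also be pasted into Developer Tools → Template to check them.

```yaml
# Hypothetical step for illustration only; the variable names are made up.
- variables:
    # A timezone-aware UTC value, as the recorder may return it.
    utc_value: "2025-07-30T12:00:00+00:00"
    # Variant 1: parse, convert to the configured local time zone, then format.
    local_str_a: >-
      {{ (utc_value | as_datetime | as_local).strftime('%Y-%m-%d %H:%M:%S') }}
    # Variant 2: go through a Unix timestamp and format it in local time (true).
    local_str_b: >-
      {{ as_timestamp(utc_value) | timestamp_custom('%Y-%m-%d %H:%M:%S', true) }}
```

With Home Assistant configured for Europe/Berlin (UTC+2 in summer), both variables would read `2025-07-30 14:00:00`, which is the plain local format the script asks the LLM to send and hands back to it.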

Here’s the code:

```yaml
alias: Entity History - Aggregated Statistics
icon: mdi:chart-timeline-variant
description: |
  Search long-term statistics in aggregated form. RAW event data is not
  accessible with this tool. Use it to get min, max, mean over a time range, or
  the amount of change between two timestamps (e.g., for cumulative, monotonic
  increasing sensors like energy). Suitable for numeric entities such as
  temperature, power (W), or energy (kWh).

  Tool usage and parameters:
  Exact entity_id is required. Use "Entity Index" first if needed.
  Pass entity_ids as a comma-separated string ("sensor.temp_1, sensor.temp_2").

  Required:
  - entity_ids: a single entity_id or a comma-separated list of multiple entity_ids
  - start_time: time string in local time like '2025-07-30 14:00:00'
  - period: aggregation timespan. One of 5minute, hour, day, week, month, total
  - aggregation_types: multiple selection possible. Allowed values are change, max, mean, min

  Optional:
  - end_time: time string in local time like '2025-07-30 14:00:00'. If not provided, the current time is used.

  Output on success:
  - result:
      <entity_id>:
        - For period in [5minute, hour, day, week, month]: array of window objects:
          { start: 'YYYY-MM-DD HH:MM:SS', end: 'YYYY-MM-DD HH:MM:SS', change?: number, mean?: number, max?: number, min?: number }
        - For period = total: array with exactly one object for the whole requested time range, same keys as above.
  - warnings (optional):
      <entity_id>: [list of aggregation types that had no data]

  Output on error:
  - error: message
  - code: error_code
  - missing_entities (optional)

  Notes & Hints:
  - Always provide dates in local time like '2025-07-30 14:00:00'.
    Returned dates and times will be in the same format and local time.
  - A hint about power and energy sensors:
    - A PowerSensor returns a value for a given moment in watts (W). Search min, max, or mean values in a timespan.
    - An EnergySensor provides the complete kWh usage since installation of the device and is cumulative, monotonic increasing. Search change, e.g. per day, or the change from a given time until now.

  Examples:
  - Get min and max of temperature sensors over a timespan of a year, grouped by month, for monthly temperature extremes.
  - Get the change value of a kWh sensor over a month with daily grouping, to get the daily energy usage of this entity.
mode: parallel
fields:
  entity_ids:
    name: Entity IDs (comma separated)
    description: >-
      Comma-separated string of a single or multiple entity_id names (e.g.
      "sensor.roomname_temperature"). MANDATORY!
    required: true
    selector:
      text: {}
  start_time:
    name: Start time
    description: >-
      Set the start date / time of your search. Always provide dates in
      local time like '2025-07-30 14:00:00'.
    required: true
    selector:
      text: {}
  end_time:
    name: End time
    description: >-
      Set the end date / time of your search. If not provided, the current time
      is used. Always provide dates in local time like '2025-07-30 14:00:00'.
    required: false
    selector:
      text: {}
  period:
    name: Period
    description: >-
      Time based grouping / aggregation period. If the search is for a short
      time period which is not divisible by full hours, use 5minute as
      aggregation and calculate the sum using the Calculator tool. Use 'total'
      to get a single, collapsed result over the entire time range.
    required: true
    selector:
      select:
        options:
          - 5minute
          - hour
          - day
          - week
          - month
          - total
  aggregation_types:
    name: Aggregation Types
    description: >-
      Aggregated values that should be returned. Multiple allowed. Use mean as a
      default for getting a simple single value if not requested otherwise. For
      cumulative, monotonic increasing sensors like energy sensors (kWh) use
      "change" to get how much was added to the counter between start and end
      time. Attention: not every entity type has history values for all
      aggregation types (e.g. energy sensors only provide 'change' values).
    # The script rejects calls without aggregation_types, so mark it as required.
    required: true
    selector:
      select:
        multiple: true
        options:
          - change
          - max
          - mean
          - min
sequence:
  - action: logbook.log
    data:
      name: "ENTITY HISTORY - AGGREGATED STATISTICS:"
      message: >
        ids={{ entity_ids | default('n/a') }}, period={{ period | default('n/a') }},
        aggregation_types={{ aggregation_types | default('n/a') }},
        start={{ start_time | default('n/a') }}, end={{ end_time | default('n/a') }}
      entity_id: "{{ this.entity_id }}"
  - variables:
      _now: "{{ now() }}"
      # Unparseable time strings fall back to none instead of aborting the script.
      _start_ts: >-
        {{ as_timestamp(start_time, none) if start_time is defined and start_time
        else none }}
      _end_ts: >-
        {{ as_timestamp(end_time, none) if end_time is defined and end_time
        else as_timestamp(_now) }}
      _duration_s: >-
        {{ (_end_ts - _start_ts) if _start_ts is not none and _end_ts is not none
        else none }}
      _start_dt: >-
        {{ as_datetime(start_time, none) if start_time is defined and start_time
        else none }}
      _end_dt: >-
        {{ as_datetime(end_time, none) if end_time is defined and end_time
        else _now }}
  # Input validation: each branch returns an error object and stops.
  - choose:
      - conditions:
          - condition: template
            value_template: "{{ not entity_ids }}"
        sequence:
          - variables:
              result: >
                {{ {'error': 'Missing required parameter: entity_ids', 'code':
                'missing_entity_ids'} }}
          - stop: ""
            response_variable: result
      - conditions:
          - condition: template
            value_template: "{{ _start_dt is none }}"
        sequence:
          - variables:
              result: >
                {{ {'error': 'Missing or invalid start_time (use local time like
                YYYY-MM-DD HH:MM:SS)', 'code': 'invalid_start_time'} }}
          - stop: ""
            response_variable: result
      - conditions:
          - condition: template
            value_template: >-
              {{ end_time is defined and end_time is not none and end_time != ''
              and _end_dt is none }}
        sequence:
          - variables:
              result: >
                {{ {'error': 'Invalid end_time (use local time like
                YYYY-MM-DD HH:MM:SS)', 'code': 'invalid_end_time'} }}
          - stop: ""
            response_variable: result
      - conditions:
          - condition: template
            value_template: >-
              {{ period not in ['5minute','hour','day','week','month','total'] }}
        sequence:
          - variables:
              result: >
                {{ {'error': 'Invalid period. Allowed: 5minute, hour, day, week,
                month, total', 'code': 'invalid_period'} }}
          - stop: ""
            response_variable: result
      - conditions:
          - condition: template
            # Strings are sequences in Jinja, so test "is string" first.
            value_template: >-
              {%- set allowed = ['change', 'max', 'mean', 'min'] -%}
              {%- if aggregation_types is not defined or aggregation_types is none -%}
                {%- set lst = [] -%}
              {%- elif aggregation_types is string -%}
                {%- set lst = aggregation_types | lower | regex_findall('change|min|max|mean') -%}
              {%- else -%}
                {%- set lst = aggregation_types | map('string') | map('lower') | select('in', allowed) | list -%}
              {%- endif -%}
              {{ lst | length == 0 }}
        sequence:
          - variables:
              result: >
                {{ {'error': 'Missing or invalid aggregation_types. Allowed:
                change, max, mean, min', 'code': 'invalid_aggregation_types'} }}
          - stop: ""
            response_variable: result
  - variables:
      # Extracts "domain.object_id" tokens from a comma-separated string,
      # a JSON list, or a list passed directly in YAML.
      stat_ids: >-
        {{ entity_ids | string | lower | regex_findall('[a-z0-9_]+\\.[a-z0-9_]+') | unique | list }}
      typelist: >-
        {%- set allowed = ['change', 'max', 'mean', 'min'] -%}
        {%- if aggregation_types is not defined or aggregation_types is none -%}
          {{ none }}
        {%- elif aggregation_types is string -%}
          {{ aggregation_types | lower | regex_findall('change|min|max|mean') | unique | list }}
        {%- else -%}
          {{ aggregation_types | map('string') | map('lower') | select('in', allowed) | unique | list }}
        {%- endif -%}
  - variables:
      missing_entities: >-
        {%- set all_ids = states | map(attribute='entity_id') | list -%}
        {{ stat_ids | reject('in', all_ids) | list }}
  - choose:
      - conditions:
          - condition: template
            value_template: "{{ (missing_entities | length) > 0 }}"
        sequence:
          - variables:
              result: |
                {{ {
                  'error': 'Some provided entity_ids do not exist.',
                  'code': 'entity_not_found',
                  'missing_entities': missing_entities
                } }}
          - stop: ""
            response_variable: result
  - variables:
      # 'total' is computed from 5-minute or hourly windows and summed up later.
      _effective_period: >-
        {%- if period == 'total' -%}
          {#- 5-minute (short-term) statistics are only kept for the recorder's
              purge_keep_days (10 days by default), so older ranges use hourly data. -#}
          {%- if _duration_s is not none and _duration_s <= 3 * 24 * 3600
                and _start_ts >= as_timestamp(_now) - 10 * 24 * 3600 -%}
            5minute
          {%- else -%}
            hour
          {%- endif -%}
        {%- else -%}
          {{ period }}
        {%- endif -%}
      _start_local_str: >-
        {{ as_timestamp(_start_dt) | timestamp_custom('%Y-%m-%d %H:%M:%S', true) }}
      _end_local_str: >-
        {{ as_timestamp(_end_dt) | timestamp_custom('%Y-%m-%d %H:%M:%S', true) }}
  - action: logbook.log
    data:
      name: "ENTITY HISTORY - DEBUG:"
      message: >
        effective_period={{ _effective_period }},
        duration_s={{ _duration_s | default('n/a') }}
      entity_id: "{{ this.entity_id }}"
  - variables:
      call_data: >-
        {%- set d = {'statistic_ids': stat_ids, 'start_time': _start_dt,
                     'end_time': _end_dt, 'period': _effective_period} -%}
        {%- if typelist is not none and typelist | length > 0 -%}
          {%- set d = d | combine({'types': typelist}) -%}
        {%- endif -%}
        {{ d }}
  - action: recorder.get_statistics
    response_variable: stats_raw
    data: "{{ call_data }}"
  - variables:
      # Count datapoints across all entities in stats_raw.statistics.
      no_data: >-
        {{ ((stats_raw | default({}, true)).statistics | default({}, true)).values()
           | map('length') | sum == 0 }}
  - choose:
      - conditions:
          - condition: template
            value_template: "{{ no_data }}"
        sequence:
          - variables:
              result: |
                {{ {
                  'error': "No history values found. Either the entity_ids have no long-term statistics enabled, or there are no datapoints in the requested timespan.",
                  'code': 'no_history_data'
                } }}
          - stop: ""
            response_variable: result
  - choose:
      - conditions:
          - condition: template
            value_template: "{{ period != 'total' }}"
        sequence:
          - variables:
              result: >
                {%- set ns = namespace(out={}) -%}
                {%- for entity_id, entries in stats_raw.statistics.items() -%}
                  {%- set e = namespace(items=[]) -%}
                  {%- for it in entries -%}
                    {#- Convert UTC window bounds to local time; the end is shown inclusive (minus 1 s). -#}
                    {%- set start_local = (it['start'] | as_datetime | as_local).strftime('%Y-%m-%d %H:%M:%S') -%}
                    {%- set end_local = (((it['end'] | as_datetime) - timedelta(seconds=1)) | as_local).strftime('%Y-%m-%d %H:%M:%S') -%}
                    {%- set obj = (it | tojson | from_json) | combine({'start': start_local, 'end': end_local}) -%}
                    {%- set e.items = e.items + [obj] -%}
                  {%- endfor -%}
                  {%- set ns.out = ns.out | combine({entity_id: e.items}) -%}
                {%- endfor -%}
                {{ {'result': ns.out} }}
          - stop: ""
            response_variable: result
  # period == 'total': collapse all windows into one object per entity.
  - variables:
      total_result: >
        {%- set out = namespace(map={}, warns={}) -%}
        {%- for entity_id, entries in stats_raw.statistics.items() -%}
          {%- set acc = namespace(
                have_change=false, change_sum=0,
                have_min=false, min_val=none,
                have_max=false, max_val=none,
                have_mean=false, mean_sum=0, mean_count=0
              ) -%}
          {%- for it in entries -%}
            {%- if 'change' in it and it['change'] is not none -%}
              {%- set acc.have_change = true -%}
              {%- set acc.change_sum = acc.change_sum + (it['change'] | float(0)) -%}
            {%- endif -%}
            {%- if 'min' in it and it['min'] is not none -%}
              {%- set acc.have_min = true -%}
              {%- set acc.min_val = ([acc.min_val, it['min'] | float(0)] | min) if acc.min_val is not none else (it['min'] | float(0)) -%}
            {%- endif -%}
            {%- if 'max' in it and it['max'] is not none -%}
              {%- set acc.have_max = true -%}
              {%- set acc.max_val = ([acc.max_val, it['max'] | float(0)] | max) if acc.max_val is not none else (it['max'] | float(0)) -%}
            {%- endif -%}
            {%- if 'mean' in it and it['mean'] is not none -%}
              {#- Unweighted mean of the window means. -#}
              {%- set acc.have_mean = true -%}
              {%- set acc.mean_sum = acc.mean_sum + (it['mean'] | float(0)) -%}
              {%- set acc.mean_count = acc.mean_count + 1 -%}
            {%- endif -%}
          {%- endfor -%}
          {%- set item = {'start': _start_local_str, 'end': _end_local_str} -%}
          {%- if typelist is none or 'change' in typelist -%}
            {%- if acc.have_change -%}
              {%- set item = item | combine({'change': acc.change_sum}) -%}
            {%- else -%}
              {%- set out.warns = out.warns | combine({entity_id: (out.warns.get(entity_id, []) + ['change'])}) -%}
            {%- endif -%}
          {%- endif -%}
          {%- if typelist is none or 'min' in typelist -%}
            {%- if acc.have_min -%}
              {%- set item = item | combine({'min': acc.min_val}) -%}
            {%- else -%}
              {%- set out.warns = out.warns | combine({entity_id: (out.warns.get(entity_id, []) + ['min'])}) -%}
            {%- endif -%}
          {%- endif -%}
          {%- if typelist is none or 'max' in typelist -%}
            {%- if acc.have_max -%}
              {%- set item = item | combine({'max': acc.max_val}) -%}
            {%- else -%}
              {%- set out.warns = out.warns | combine({entity_id: (out.warns.get(entity_id, []) + ['max'])}) -%}
            {%- endif -%}
          {%- endif -%}
          {%- if typelist is none or 'mean' in typelist -%}
            {%- if acc.have_mean and acc.mean_count > 0 -%}
              {%- set item = item | combine({'mean': (acc.mean_sum / acc.mean_count)}) -%}
            {%- else -%}
              {%- set out.warns = out.warns | combine({entity_id: (out.warns.get(entity_id, []) + ['mean'])}) -%}
            {%- endif -%}
          {%- endif -%}
          {%- set out.map = out.map | combine({entity_id: [item]}) -%}
        {%- endfor -%}
        {%- set payload = {'result': out.map} -%}
        {%- if out.warns | length > 0 -%}
          {%- set payload = payload | combine({'warnings': out.warns}) -%}
        {%- endif -%}
        {{ payload }}
  - action: logbook.log
    data:
      name: "ENTITY HISTORY - TOTAL:"
      message: >
        effective_period={{ _effective_period }},
        entities={{ stats_raw.statistics.keys() | list | length }},
        warnings={{ total_result.warnings | default({}) | tojson }}
      entity_id: "{{ this.entity_id }}"
  - stop: ""
    response_variable: total_result
```
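For the garden question, the script can also be called from another script or automation, with its response captured via `response_variable`. The sketch below is hypothetical: `script.entity_history_aggregated_statistics` and `sensor.garden_temperature` are placeholder IDs, and the logbook step only demonstrates reading the `result` structure described in the script's own description.

```yaml
# Hypothetical IDs: replace the script and the sensor with your own.
- action: script.entity_history_aggregated_statistics
  data:
    entity_ids: sensor.garden_temperature
    # Last 30 days, passed as a local time string without a time zone suffix.
    start_time: "{{ (now() - timedelta(days=30)).strftime('%Y-%m-%d %H:%M:%S') }}"
    period: day
    aggregation_types:
      - min
      - max
  response_variable: garden_history
# On an error response there is no 'result' key, so fall back to an empty list.
- action: logbook.log
  data:
    name: "Garden temperature (30 days):"
    message: >-
      {%- set days = (garden_history.result | default({})).get('sensor.garden_temperature', []) -%}
      min={{ days | map(attribute='min') | select('number') | min | default('n/a') }},
      max={{ days | map(attribute='max') | select('number') | max | default('n/a') }}
```

With `period: day`, the response holds one window object per day, so the overall extremes are taken over those windows; `period: total` would instead collapse the 30 days into a single object.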

I also added this to my LLM's prompt, in addition to exposing this script:

You can also check historical values of an entity using the tool “Entity History”.

But you REALLY need the entity_id of the device to get the historical values.

If you want to check the max temperature from yesterday, outside in the “driveway”, first search for the TemperatureSensor in this area using the “Entity Index” tool.

Hints:

- Energy (kWh) sensors are cumulative, monotonic increasing counters.

NEVER reply with their current state when asked about the energy used/produced in a specific timespan.

Use the “Entity History” tool with the aggregation type “change” to get the difference between start time and end time.