What is your sentence in the template? For me, it saves the information but doesn’t retrieve it again.

These are the very first lines of my prompt template:

Check the content of todo.chatgpt using the openai_memory_read spec and use the information you find to respond to me.

Save a note in todo.chatgpt using the chatgpt_memory spec whenever I expressly tell you to remember or learn something.
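The two specs named in these prompt lines are not reproduced in this post. As a rough sketch only, and not the original specs from the thread, a write/read pair for Extended OpenAI Conversation could look like the following. It assumes a to-do entity called todo.chatgpt and relies on the integration's script function type, where a result stored in `_function_result` is handed back to the model. The descriptions and the `item` parameter name are my own placeholders.

```yaml
# Sketch under assumptions; compare with the specs shared earlier in the thread.
- spec:
    name: chatgpt_memory
    description: Save a note to the todo.chatgpt list
    parameters:
      type: object
      properties:
        item:
          type: string
          description: The note to remember
      required:
        - item
  function:
    type: script
    sequence:
      # Store the note as a new to-do item.
      - service: todo.add_item
        target:
          entity_id: todo.chatgpt
        data:
          item: "{{ item }}"
- spec:
    name: openai_memory_read
    description: Read the notes stored in the todo.chatgpt list
    parameters:
      type: object
      properties: {}
  function:
    type: script
    sequence:
      # Return the open items to the model.
      - service: todo.get_items
        target:
          entity_id: todo.chatgpt
        data:
          status: needs_action
        response_variable: _function_result
```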

By the way, after playing a lot with this integration I have developed a theory that you might be able to help validate:

If OpenAI thinks it already knows how to do something, it will not use the notes.

If OpenAI doesn’t know how to do it, it will use the notes.

In the example in the post above, I told it to remember the name of my son’s puppet. As it doesn’t have any other answer, it uses the notes.

This is an example of something that is not working:

“Remember that when I ask for the weather, I don’t want to know the humidity”

It takes the note correctly, but when I ask for the weather it still tells me the humidity. I think this is because it already has a spec that provides the weather, so it uses that straight away without consulting the notes.

Now it works perfectly. Thank you Simone!

I built an inventory manager using Simone’s idea. Here’s a sanity check. Big thanks to @Simone77.

Prerequisite

- The Grocy add-on and custom component are installed.

- extended_openai_conversation is installed.

- You use parent-child products in Grocy. (Parents don’t have their own stock; they only aggregate. Parents have a minimum amount; children don’t. Child products are interchangeable and have their own expiration dates.)

- In your to-do lists, you should have todo.chatgpt and todo.shopping_list.

Concept

We will create a separate assistant, “Inventory Manager”. I don’t know what your own assistant is called, but I’ll call this inventory manager Alexa (I don’t actually talk to Alexa at all; the name is just for clarity). Your assistant and Alexa do not share a prompt, but they can share memory through todo.chatgpt.

I use gpt-3.5-turbo and it works fine.

Prompt

Inside the prompt, replace each ‘’’ with a triple backtick so that it marks a code block.

———— START————

You are Alexa, a smart home inventory manager.

Periodically, you receive an updated inventory list.

Use this data to notify the user of any shortages or expiring items.

- Utilize 'todo.chatgpt' or 'todo.shopping_list' with the 'todo.add_item' service. In service_data, specify due_date as 'YYYY-MM-DD' or due_datetime as 'YYYY-MM-DD HH:MM:SS'.

- Read existing entries in 'todo.chatgpt' and 'todo.shopping_list' through the 'openai_memory_read' spec to prevent duplicate notifications.

**Update Time: {{now()}}**

**House Inventory:**

{% set inventory = state_attr('sensor.grocy_stock', 'products') %}

House Inventory:

‘’’csv

product in shortage, stock amount, missing amount

{% for item in inventory %}{% if item.amount_missing is not none %}{{ item.name }},{{ item.amount_aggregated }},{{ item.amount_missing }}{% endif %}

{% endfor %}

‘’’

‘’’csv

product, expiration

{% for item in inventory %}{% if item.best_before_date != '2999-12-31' and not item.is_aggregated_amount %}{{ item.name }},{{ item.best_before_date }}{% endif %}

{% endfor %}

‘’’

- Add items below their minimum required amount to 'todo.shopping_list'.

- Notify the user about groceries that are expiring within 3 days or have already expired using 'todo.chatgpt', ensuring not to repeat existing notifications.

- Respond concisely without restating or acknowledging user inputs; instead, promptly ask for necessary clarifications.

————END————

Function

I found out that I don’t need the chatgpt_memory spec. Just expose todo.chatgpt and use the default execute_services spec in Alexa.

But you should still install openai_memory_read.

Be sure to test that this works properly before adding the automation below.
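One way to run that test by hand, before involving the model at all, is to call the to-do service yourself from Developer Tools. This is only a sketch: the item text and the date are made-up example values, and it assumes your list is really named todo.chatgpt.

```yaml
# Paste into Developer Tools > Services (YAML mode).
service: todo.add_item
target:
  entity_id: todo.chatgpt
data:
  item: "Test entry: milk expires soon"   # example text only
  due_date: "2024-06-01"                  # YYYY-MM-DD, as the prompt requires
```

If the entry shows up in the list with its due date, the same call should also work when Alexa issues it through execute_services.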

Automation

alias: Check Inventory every Hour
description: ""
trigger:
  - platform: time_pattern
    hours: "*"
condition: []
action:
  - service: conversation.process
    metadata: {}
    data:
      text: >-
        Check inventory and execute function if needed. Don’t ask back and just
        say "Completed". If there’s nothing to do, just say "All is well".
      language: en
      agent_id: YOUR_INVENTORY_MANAGER_AGENT_ID
mode: single

Now you have a separate assistant, Alexa, that checks your inventory every hour, adds products in shortage to the shopping list, and notifies you of upcoming expirations. You still talk to your own assistant, which doesn’t know about the inventory, but both share common memory through todo.chatgpt, so it can check which products are expiring or in shortage.
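If you also want to see what Alexa answered on each run, the action part of the hourly automation could capture the reply. This is an optional sketch and not part of the setup above: `result` is a variable name I picked, and using a persistent notification is just one possible way to surface the text.

```yaml
# Optional variant of the automation's action section (sketch).
action:
  - service: conversation.process
    data:
      text: >-
        Check inventory and execute function if needed. Don't ask back and just
        say "Completed". If there's nothing to do, just say "All is well".
      language: en
      agent_id: YOUR_INVENTORY_MANAGER_AGENT_ID
    # Store the agent's reply so later steps can use it.
    response_variable: result
  # Stop here when the agent reports nothing to do.
  - condition: template
    value_template: "{{ 'All is well' not in result.response.speech.plain.speech }}"
  # Otherwise show the reply in the Home Assistant UI.
  - service: persistent_notification.create
    data:
      title: Inventory Manager
      message: "{{ result.response.speech.plain.speech }}"
```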

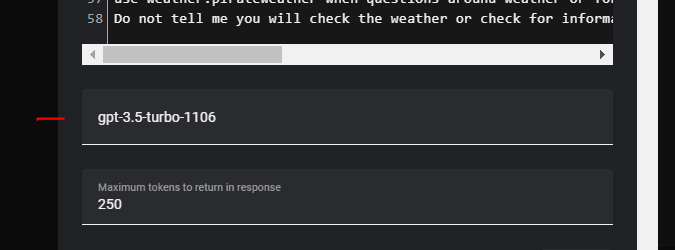

Hi. Is it possible to change from GPT-3.5 to GPT-4?

Yes. You can choose your model here.

Hi friends, maybe someone can help me. I have an onju-voice-satellite at home (GitHub - tetele/onju-voice-satellite: An ESPHome config for the Onju Voice which makes it a Home Assistant voice satellite), but as soon as I use it with Extended OpenAI Conversation it works very badly. When I try from the app or from the computer, the integration works perfectly. Does anyone know if it is possible to fix this?

The newly released gpt-4o-2024-05-13 works perfectly with extended_openai_conversation and it’s blazing fast! Give it a try!

I can’t wait for the audio capabilities of GPT-4o to become available. The latency benefits and expression of emotions would be amazing to have in our own voice assistant.

I’m not sure it’s possible to build it directly into Extended OpenAI Conversation, since it is a conversation agent, which typically takes in text (transcribed by a speech-to-text model) and also outputs text (which is then fed into text-to-speech). But these new models work with voice directly, with no need to translate into text.

I’ve created a feature request to add support for this kind of all-in-one assistant in Core. But if we’re able to get access to the raw audio input somehow then maybe we can start building already!

I voted. And I guess the official OpenAI Conversation integration will be able to use function calling soon.

I have voted as well. Good idea

Is there a better way to send a consolidated/smaller payload than sending all of the entities every time? My bill would be huge if I used this properly!

Is there a way to make the notification work with the Home Assistant mobile app? I would love to say “notify xxxx that the food is ready” and it know who xxxx is.

Silly question - I see there are lots of functions that are being shared for really interesting things. Can these be combined? If so, how is that done? Just concatenate the -spec underneath the previous one in the UI and modify the prompt to describe why/when the new function is used?

Yes, just use the notify.YOURDEVICE service created by the companion app. For example, notify.mobile_app_redmi_note_8_pro is the service for my phone, and it sends notifications to my Home Assistant app.

Yes, just copy and paste the functions you want one after another.
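To make the “one after another” part concrete, here is a small sketch of two specs sitting in the same Functions field. Both specs are hypothetical examples of mine (names, descriptions and the todo.chatgpt target are assumptions); the point is only that each one is a separate list entry starting with `- spec:`.

```yaml
# Two independent functions in one list (sketch).
- spec:
    name: add_note_example
    description: Save a short note to a to-do list
    parameters:
      type: object
      properties:
        item:
          type: string
          description: The note text
      required:
        - item
  function:
    type: script
    sequence:
      - service: todo.add_item
        target:
          entity_id: todo.chatgpt
        data:
          item: "{{ item }}"
- spec:
    name: show_message_example
    description: Show a message in the Home Assistant UI
    parameters:
      type: object
      properties:
        message:
          type: string
          description: The message to show
      required:
        - message
  function:
    type: script
    sequence:
      - service: persistent_notification.create
        data:
          message: "{{ message }}"
```

It still helps to mention in the prompt when each function should be used, as asked above.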

Hello

What I have in mind is having GPT analyze the images from the cameras connected to Home Assistant.

For example:

How many people do you see on the camera?

Or what is the color of their clothes?

Do they look suspicious?

As a first step, I tried setting the language model to gpt-4o in the Extended OpenAI Conversation settings.

As a result:

The response speed is somewhat better.

But when I asked it to analyze the camera images, it replied that it doesn’t have access to cameras or that it doesn’t have the ability to process images.

After a little searching, I found this: GPT-4o vision capabilities in Home Assistant

I installed it and after 1 day

I succeeded!

It works like this:

When I say to OpenAI Conversation

“what do you see?”

1- My automation or script is executed

2- A photo is taken from the camera I specified

3- Then I send that photo to ha-gpt4vision

4- The response of ha-gpt4vision is converted to sound with TTS
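The four steps above could be sketched as a single script along these lines. Please treat it as a guess rather than the poster's actual script: the camera, TTS and media player entity IDs are placeholders, the snapshot path must be allowed in your configuration, and the gpt4vision service name, its fields and the `response_text` key are assumptions based on my recollection of that integration, so check them against its documentation.

```yaml
# Sketch of the snapshot -> vision -> TTS pipeline (entity IDs are placeholders).
describe_camera_example:
  alias: Describe camera (sketch)
  sequence:
    # Step 2: take a photo from the chosen camera.
    - service: camera.snapshot
      target:
        entity_id: camera.front_door
      data:
        filename: /config/www/tmp/snapshot.jpg
    # Step 3: send the photo to ha-gpt4vision (assumed service and field names).
    - service: gpt4vision.image_analyzer
      data:
        message: What do you see?
        image_file: /config/www/tmp/snapshot.jpg
        max_tokens: 100
      response_variable: vision
    # Step 4: speak the answer with TTS.
    - service: tts.speak
      target:
        entity_id: tts.home_assistant_cloud
      data:
        media_player_entity_id: media_player.living_room
        message: "{{ vision.response_text }}"
```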

To be honest, the result is good. lol :)

But it has many problems.

For example, it is very limited.

Or sometimes its TTS audio interferes with OpenAI Conversation (the TTS sounds are played at the same time).

Or I have to write a lot of scripts to run ha-gpt4vision. For example, if the word x is said, take a picture and analyze the picture.

If the word b is said, take a picture and say what it is used for.

If the word c is said, take a picture and tell if the person in the picture is suspicious or not.

This way, you have to write a lot of scripts to analyze each different photo.

I’m looking for a way to avoid writing scripts.

For example, Extended OpenAI Conversation could access the cameras directly, so that when we say, for example, “What do you see in the camera?”, it analyzes the camera image in real time with GPT-4o.

In the end, I hope I have explained this correctly and that you understand, because I used Google Translate.

I would need to create one per person right? Is there a way to use the chatgpt todo so it knows what phone I want to send a message to?

OK, I modified the script so that you can specify the service in the todo or in the prompt.

I tested it by adding this to the prompt and it works:

To send a notification to someone, use the following services and the spec notify_smartphone:

Simone notify.mobile_app_redmi_note_8_pro

Alessia notify.mobile_app_redmi_11_pro_alessia

This is the updated script:

- spec:
    name: notify_smartphone
    description: Send a notification to the smartphone
    parameters:
      type: object
      properties:
        notification:
          type: string
          description: The notification to be sent
        service:
          type: string
          description: The notify service to be used, for example notify.mobile_app_redmi_note_8_pro
      required:
        - notification
        - service
  function:
    type: script
    sequence:
      - service: '{{service}}'
        data:
          message: '{{notification}}'
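To illustrate what happens at the end of this: if you say “notify Simone that the food is ready”, and the model picks the service from the list in the prompt, the script sequence should end up rendering to a plain service call roughly like the one below. The message text is only an example of what the model might pass.

```yaml
# What the templated step resolves to for that request (illustration).
service: notify.mobile_app_redmi_note_8_pro
data:
  message: The food is ready
```

You can run the same call from Developer Tools first to confirm the phone actually receives it.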