In VMware you just resize the partition/disk; the HA image is configured to automatically take advantage of the added disk/partition space.

Thanks. I’m using VirtualBox, however, and I cannot find a resize-partition option at all…

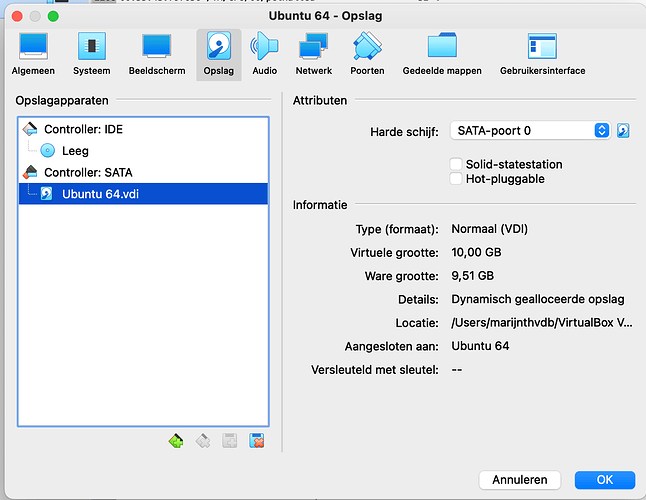

This is what I see:

and I cannot get that 10,00 GB to change…

Sorry, I’m on a f… touchpad; I hate these things. But here is a link… dunno if it helps.

Cool, yeah, I saw the menu was different; maybe the Ubuntu/Windows versions differ. On my VMware it took a month before HA had “claimed” all the available space, but the image grew steadily until it almost reached the full disk size.

Has anyone successfully upgraded to v8.x on an HP T630?

Hi, yes, you are right.

It got sorted with Home Assistant 2022.7.0

Thanks

FWIW I think I traced the problem down to my side - potentially a faulty SD card. I did a full backup, then a fresh install on a new card and restore. All good now.

There were a lot of reports of ZHA integration breaking with version 8. Are we fixed yet?

My parents’ house is still on v7.x, as it relies heavily on ZHA/Zigbee (Raspberry Pi, RaspBee II).

Thanks, Matt

An unnecessarily early-dying SD card is sadly somewhat expected with HaOS. For some (weird) reason the Home Assistant Operating System is “optimized” for mechanical hard drives, or at least it uses a filesystem from that era with no tweaks to keep flash storage from suffering.

AFAIK HaOS uses ext4 with the defaults (a flush interval of 5 seconds), which will probably cause heavy write amplification for most users.


Avoiding HaOS and doing a Supervised install on Debian with a “tweaked” commit interval (e.g. 100 times higher than the default) should let your SD cards live orders of magnitude longer (we’re talking years here) with the same usage pattern.
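On a Debian-based Supervised install the tweak would go into the ext4 mount options, for example `commit=500` (100 × the 5-second default) in `/etc/fstab`. As a minimal sketch, assuming `/proc/mounts`-style input, the Python below reports the effective commit interval of each ext4 mount so you can check whether a change actually took effect. It treats a missing `commit=` option, or `commit=0`, as the 5-second default. `commit_interval` and `ext4_commit_intervals` are hypothetical helper names, not part of any HA tooling.

```python
# Effective ext4 commit interval per mount point (illustrative sketch).
EXT4_DEFAULT_COMMIT_S = 5  # ext4 default; commit=0 also means "use the default"


def commit_interval(options: str) -> int:
    """Return the commit interval in seconds from a comma-separated option string."""
    for opt in options.split(","):
        if opt.startswith("commit="):
            seconds = int(opt.split("=", 1)[1])
            return seconds if seconds > 0 else EXT4_DEFAULT_COMMIT_S
    # ext4 does not list commit= when it is at the default, so assume 5 s.
    return EXT4_DEFAULT_COMMIT_S


def ext4_commit_intervals(mounts_text: str) -> dict:
    """Map each ext4 mount point in /proc/mounts-style text to its commit interval."""
    intervals = {}
    for line in mounts_text.splitlines():
        # Fields: device, mount point, fs type, options, dump, pass
        fields = line.split()
        if len(fields) >= 4 and fields[2] == "ext4":
            intervals[fields[1]] = commit_interval(fields[3])
    return intervals
```

On a Linux host you would call it as `ext4_commit_intervals(open("/proc/mounts").read())`; a result like `{"/": 5}` means the root filesystem is still on the default.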

So if you’re not in the mood to treat SD cards as disposable items, the best option for now is to avoid HaOS until it is ready for the flash era.

Definitely fixed.

Thanks, and yes, it’s not my first SD card that’s been killed by hassio. It did surprise me a little because it was a relatively new (less than a year old) Samsung PRO Endurance. On my to-do list is booting from something less susceptible to this issue, like my NAS.

I was running 8.0.4 on a NUC i3 with an M.2 2280 SATA SSD, using HA 2022.8.6, and it was running fine until I updated to 8.5 this morning (it was in the Settings update list). Now the NUC just keeps rebooting after it starts to load the Docker apps. I am watching on a console connected to the NUC, but it’s too fast to see where it’s crashing.

How do I recover? I have a full backup of HA, made with the HA backup at midnight last night, but no backup of the OS.

Thanks

(Heavy) write amplification will kill any flash storage much faster unless it has countermeasures like a “pseudo RAM” cache (often SLC flash), which is typical for SSDs, one could argue, precisely to cope with “badly” configured OSes.

The problem is that SD cards, USB sticks, eMMC and other relatively cheap flash storage (the kind people usually pair with their relatively cheap SBCs) typically do not feature such a “pseudo RAM”. These types of storage just need a properly configured filesystem so they don’t die like flies.


As a crude visualization of the harm done by the 5-second flush interval HaOS uses (a relic from the spinning-hard-drive age), imagine flash storage as a big pad of paper. Normally you would try to put as much content/data on one page as possible so as not to “waste” that valuable resource. And here is what HaOS does: it uses a new page every 5 seconds, even if there is only one word (or even less) written on it.

In flash storage one of these pages typically holds around 8 or 16 KB. When only a few bytes get written in those 5 seconds, all that space is not only wasted; much worse, the flash is worn as if the full 8/16 KB had been written. So it ages much faster and therefore dies much earlier than necessary. This is (very simplified) write amplification in a nutshell, and something every OS made for SBCs should know about and take the necessary (easy) countermeasures against. Maybe @agners wants to comment on why HaOS isn’t (or doesn’t want to be?) flash friendly?
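To put numbers on the paper-pad analogy, here is a minimal Python sketch of the simplified model described in this post, assuming every flush rounds up to whole pages and a 16 KB page. It deliberately ignores what real cards do internally (erase blocks larger than pages, controller caching, wear leveling), so the figures are illustrative only.

```python
# Simplified write-amplification model: every flush costs whole flash pages.
PAGE_SIZE = 16 * 1024  # bytes; assumed page size (the post says 8 or 16 KB)


def bytes_programmed(logical_bytes: int, page_size: int = PAGE_SIZE) -> int:
    """Flash bytes actually programmed by one flush of `logical_bytes`."""
    pages = -(-logical_bytes // page_size)  # ceiling division
    return pages * page_size


def write_amplification(logical_bytes: int, page_size: int = PAGE_SIZE) -> float:
    """Ratio of flash bytes programmed to bytes the OS asked to write."""
    if logical_bytes <= 0:
        raise ValueError("logical_bytes must be positive")
    return bytes_programmed(logical_bytes, page_size) / logical_bytes
```

For example, `write_amplification(64)` gives 256.0: in this model a 64-byte flush wears the card as if 16 KB had been written, while a flush of exactly one full page gives 1.0.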


You are not the first one to mention this 5-second flush interval;

it’s also mentioned here

And it might be true, but maybe there is a reason for this interval, something we are not aware of.

But now I’m just curious.

What actually did wonders for me was using the SD card suggested in the installation manual.

I broke 2 SD cards before and none since.

Also, native Raspbian will run much faster on those.

What I’m wondering is whether increasing the flush interval would also do anything for performance.

The only reason is that this is the default setting of a filesystem developed more than 15 years ago, at a time when spinning hard disks were still the standard and SSDs hadn’t yet hit the consumer market.

It’s quite easy: it’s best to avoid any “re”-branded products and only buy from brands that are flash manufacturers. I think there are only about 3 or 4 such companies left in the whole world.

If you want to run your operating system from an SD card, you want high I/O performance, which is not the same as the advertised high read/write speeds, since those are always measured for sequential reads/writes.

So, for example, the “Samsung PRO Endurance” card that @zagnuts used is the wrong kind for an operating system. It claims “high speeds”, but that is only true for continuously writing large chunks (like videos or images); on the other hand, this card doesn’t even mention its I/O performance.

The go-to cards for running an OS are either A1 or, even better, A2 rated cards:

Application Performance Class | SD Association
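As a rough illustration of what the A1/A2 rating actually promises, the sketch below checks a card’s specs against the Application Performance Class minimums as published by the SD Association (A1: 1500 random-read and 500 random-write IOPS; A2: 4000 and 2000 IOPS; both with 10 MB/s sustained sequential writes). `CardSpec` and `app_performance_class` are hypothetical names, and real A2 certification also depends on command-queue and cache support, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CardSpec:
    random_read_iops: int            # 4 KB random reads per second
    random_write_iops: int           # 4 KB random writes per second
    sustained_seq_write_mb_s: float  # sustained sequential write speed


# Minimums per Application Performance Class, best class first.
APP_CLASSES = [
    ("A2", CardSpec(4000, 2000, 10)),
    ("A1", CardSpec(1500, 500, 10)),
]


def app_performance_class(card: CardSpec) -> Optional[str]:
    """Return the best A-class whose minimums the card meets, or None."""
    for name, minimum in APP_CLASSES:
        if (card.random_read_iops >= minimum.random_read_iops
                and card.random_write_iops >= minimum.random_write_iops
                and card.sustained_seq_write_mb_s >= minimum.sustained_seq_write_mb_s):
            return name
    return None
```

A hypothetical card with fast sequential writes but poor random writes, say `CardSpec(3000, 300, 90)`, gets `None`, which is exactly the trap of judging a card by its advertised MB/s.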

Still, whether or not the card is A1/A2 rated, write amplification with a 5-second commit interval will kill it much faster than necessary.

Just as an idea of what is possible with the “right” filesystem settings: I’m using some SBCs with Armbian (which comes with the correct settings to not kill the flash), and they run on cheap (sub-$10) 8/16 GB SD cards (A1 rated) that are up to … years old. Yes, running 24/7 on the first card I installed them on, and I expect them to keep running for another decade or two. It might even be that the SD card outlives the SBC (which probably degrades faster because of the heat it produces).


Yes, A1/A2 ratings are “only” about performance, not about life expectancy when something kills the valuable flash cells by flushing a page every 5 seconds.

No, this setting is not about performance but only about getting the minimum wear (maximum lifetime) out of flash storage. The commit/flush interval is the maximum time a write waits before it goes to physical storage. So the system essentially waits at most the configured time (default 5 seconds) before it writes to flash (and the smallest possible write on flash is one page).

If, instead of the default, an interval of e.g. 600 seconds is chosen, the system accumulates all data that is “ready” to be written to flash until there is enough for a full page write (the optimum, because there is no extra wear on the flash) or until the time is up. In the latter case it writes to flash after 10 minutes even when there is not yet enough data (typically 8 or 16 KB) for a whole page.
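To compare the two settings, here is a minimal Python sketch of that accumulate-then-flush behaviour, assuming a constant trickle of writes and a 16 KB page (both assumptions, not measurements of HaOS). Writing full pages early or at the deadline makes no difference to the count in this model: each interval costs ceil(bytes / page) pages.

```python
# Pages programmed per day for a constant trickle of writes (illustrative model).
SECONDS_PER_DAY = 86_400
PAGE_SIZE = 16 * 1024  # bytes; assumed page size


def pages_per_day(write_rate_bps: int, commit_interval_s: int,
                  page_size: int = PAGE_SIZE) -> int:
    """Flash pages programmed per day under the simplified commit model.

    Data accumulates in RAM for at most `commit_interval_s` seconds; the
    leftover partial page is flushed when the interval expires.
    """
    if commit_interval_s <= 0:
        raise ValueError("commit_interval_s must be positive")
    commits = SECONDS_PER_DAY // commit_interval_s
    bytes_per_commit = write_rate_bps * commit_interval_s
    pages_per_commit = -(-bytes_per_commit // page_size)  # ceiling division
    return commits * pages_per_commit
```

With a hypothetical 20 bytes/s of database and log writes, `pages_per_day(20, 5)` returns 17280 (about 270 MiB of flash programmed for roughly 1.65 MiB of actual data), while `pages_per_day(20, 600)` returns 144 (2.25 MiB): 120 times less wear in this model, paid for with a longer window of unsaved data if the power fails.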

The reason this is the default is that hard drives can rewrite each individual sector in place, so they don’t suffer from write amplification at all.

But one thing to keep in mind: a power failure can cause loss of up to the configured interval’s worth of data. So if the system has kept the data that should be written to storage in RAM for 599 seconds and wants to write it in the next second, but the power fails, all the data from the last 10 minutes is lost.

That said, it is a very minor problem: instead of buying an SD card every year or spending a fortune on “industrial grade” SD cards, one can just invest a few bucks in a power bank that can be charged and discharged at the same time, aka a poor man’s UPS.


OK, clear.

But then this is an even bigger problem for products like the HA Yellow, because you would need to replace the whole CM module instead of only the SD card.

In my link, the f2fs filesystem is suggested instead of ext4. I’m not familiar with that one. But can it solve the power-failure issue?

Indeed, if the flash is embedded (like soldered eMMC or other flash modules), that can end up being very painful. Depending on the device, some ARM boards have a 2-stage bootloader and depend on working internal flash to even be able to boot from an external drive, so at worst you can end up “losing” the whole SBC.

I don’t have any practical experience with f2fs and only know that it is made for flash storage and designed for minimal wear. How that is achieved in detail I can’t say, but the prerequisite of (at best) only writing full pages to flash storage stays the same.

The documentation

and git

both talk about SquashFS and ZRAM. Not sure if there are issues, but I can’t find any mention of ext4.

On the other hand, they don’t mention GRUB as the bootloader either.

SquashFS is a read-only filesystem and is used to host HaOS itself, if I’m not mistaken. There are actually even two “slots”, so if an update goes wrong the old OS can still be booted; a very nice setup indeed.
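The two-slot idea can be sketched generically. This is a hypothetical A/B fallback scheme written in Python for illustration, not HaOS’s actual boot logic (that lives in its bootloader and update tooling): an update is written to the inactive slot, the bootloader tries it a limited number of times, and if the new OS never confirms a successful boot, it falls back to the old slot. `MAX_ATTEMPTS` and all function names are assumptions.

```python
from dataclasses import dataclass

OTHER = {"A": "B", "B": "A"}
MAX_ATTEMPTS = 3  # hypothetical retry budget for a freshly installed slot


@dataclass
class BootState:
    primary: str = "A"               # slot the bootloader tries first
    attempts_left: int = MAX_ATTEMPTS
    confirmed: bool = True           # True once the primary slot has booted OK


def install_update(state: BootState) -> None:
    """Write the new OS into the inactive slot and try it on the next boot."""
    state.primary = OTHER[state.primary]
    state.attempts_left = MAX_ATTEMPTS
    state.confirmed = False


def pick_boot_slot(state: BootState) -> str:
    """Return the slot to boot, falling back if the new slot keeps failing."""
    if state.confirmed:
        return state.primary
    if state.attempts_left > 0:
        state.attempts_left -= 1
        return state.primary
    # New slot never confirmed a good boot: revert to the previous slot.
    state.primary = OTHER[state.primary]
    state.confirmed = True
    return state.primary


def mark_good(state: BootState) -> None:
    """Called by the running OS once it has started successfully."""
    state.confirmed = True
```

After `install_update`, three failed boots of slot B are followed by a boot of slot A; if the new OS calls `mark_good` instead, slot B stays the primary.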

The ext4 partition is the writable one and is sometimes referred to as the “data” disk or “storage”. It hosts the database, the config files and the backups: essentially all the data that is not static.

This link should show your “storage” partition/disk:

A click on the three dots at the top right and another on “Move datadisk” did actually mention ext4 when a suitable disk (to move the data to) was found, if I remember right.

Also, I remember that @agners once mentioned on GitHub (I can’t find it anymore) that, back when HaOS 7 was current, there were no plans to move away from the ext4 defaults (that kill our beloved flash so fast).

Looks like the docs are not up to date; I think HaOS 8 introduced GRUB.