Read the full release announcement here

Great work! Setting per-user visibility for dashboards/views/cards has been on my wishlist for years.

No documentation as to how to set the AI Assist up. What is there is super confusing. You set up “OpenAI Conversation” in Devices but the Conversation Agent you set up elsewhere is actually called “ChatGPT”… Come on guys.

The actual feature works well, nice!

I am largely critical of large language models and their overuse; however, I can see the value of having some machine learning assistance for specific purposes within my smarthome, like improving voice assistant intent matching.

That said, I’m surprised that there are no mentions here of local LLMs. I have zero interest in using a cloud LLM for the same reasons I use Home Assistant in the first place. I care about privacy and keeping my data local only.

The current state of Voice Assistant and LLM integration puts me between a rock and a hard place. Assist is challenging to use in its current state without defining dozens of alternative aliases to account for natural variance in speech. An LLM integration would help, but I don’t want to sacrifice my privacy by sending data to Google or OpenAI—companies I have no shortage of antipathy toward—to get it.

My fingers are crossed that the “Voice - Chapter 7” that was teased for the end of this month will expand local-only LLM options.

The other updates are nice. Custom backgrounds are a nice QOL feature, and I appreciate the conditional visibility on dashboard cards and sections.

Looks like the Aladdin Connect integration is back (thanks to everyone involved!), but I don’t see this documented anywhere in the release notes…

- A default code can now be set in the entity settings for every alarm control panel entities. Nice work @gjohansson-ST!

I don’t see this documented, so I have no idea how to do this.

Is this available only via the UI and not YAML?

Looks like Hunter Hydrawise has an issue in 2024.6.0:

    Traceback (most recent call last):
      File "/usr/src/homeassistant/homeassistant/helpers/update_coordinator.py", line 312, in _async_refresh
        self.data = await self._async_update_data()
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/src/homeassistant/homeassistant/components/hydrawise/coordinator.py", line 43, in _async_update_data
        user = await self.api.get_user()
               ^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/local/lib/python3.12/site-packages/pydrawise/client.py", line 119, in get_user
        return deserialize(User, result["me"])
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/local/lib/python3.12/site-packages/pydrawise/schema_utils.py", line 25, in deserialize
        return _deserialize(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/local/lib/python3.12/site-packages/apischema/deserialization/__init__.py", line 887, in deserialize
        return deserialization_method(
               ^^^^^^^^^^^^^^^^^^^^^^^
      File "apischema/deserialization/methods.pyx", line 504, in apischema.deserialization.methods.ObjectMethod.deserialize
      ...
      File "apischema/deserialization/methods.pyx", line 1078, in apischema.deserialization.methods.ObjectMethod_deserialize
      File "apischema/deserialization/methods.pyx", line 1348, in apischema.deserialization.methods.Constructor_construct
      File "apischema/deserialization/methods.pyx", line 1227, in apischema.deserialization.methods.RawConstructor_construct
      File "<string>", line 3, in __init__
    TypeError: function missing required argument 'year' (pos 1)
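
For anyone reading the traceback: the final message is the one CPython gives when `datetime` (or `date`) is constructed with no arguments. The sketch below only reproduces that message in isolation. Whether pydrawise ends up building a date-typed field from an empty value is an assumption on my part, not something the traceback confirms.

```python
from datetime import datetime

# Calling the constructor with no arguments fails before any API data is involved.
try:
    datetime()
except TypeError as error:
    message = str(error)

print(message)
```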

I’m going to start digging into the LLM support. Is it designed to be backend agnostic? I want to connect to koboldcpp/llamacpp, which have mature, open source APIs. I don’t see a reason it should be locked down to proprietary LLMs, but even so, if we can change the target host/port it’d be possible to run an OpenAI proxy. Has that been considered during development yet?

I feel like HA is losing its path. Why would someone who cares about privacy want Google or OpenAI to know what they do?

At the end of the release notes there is a link to a developer blog post, which in turn links to further documentation (I didn’t read it in detail, but at first scan it seems useful).

I was so pleased about the collapsible function in the blueprint.

I implemented it straight away in the beta phase and wouldn’t want to be without it.

My “Cover Control Automation (CCA)” blueprint has therefore become much clearer.

Thank you very much.

The thing is, LLMs are quite trivial to interface with. There’s a generate endpoint where you send a prompt and get a response either synchronously or asynchronously. You can specify the format you want to receive in the output as part of the prompt. There’s nothing keeping HA (or contributing devs) from making an interface layer that makes it backend agnostic. I want to look into doing that if they haven’t designed it with that in mind yet.

Please bring back the epsonworkforce integration, because it’s working fine!

    - platform: epsonworkforce
      host: 192.168.x.x
      monitored_conditions:
        - black
        - yellow
        - magenta
        - cyan

Hi @dwd

The Epson Workforce integration was already removed in 2024.5 (see “Farewell to the following”) for a good reason.

While I happen to agree that privacy is important, not everyone uses Home Assistant for privacy reasons, and I think it’s fair to add features that the privacy-conscious might not use (as long as said features are optional).

Hi @parautenbach

It is more of a developer-related change; see the Alarm Control Panel Entity code validation blog post, which is also mentioned in the release notes.

Please file a new issue report, thx.

Local LLMs are definitely coming; the Home Assistant team cares too much about choice and privacy to let something like that slip. It wasn’t long ago they said “With Home Assistant you can be guaranteed two things: there will be options and one of those options will be local”, and I don’t think that this release is in any way walking back on that. It’s just that running LLMs locally is complicated, and hitting a web endpoint is trivial. It’ll take some time for the local options to be ready, so it makes sense for them to release the part that works right now, and that involves an endpoint. In the meantime, there’s nothing stopping you from setting up your own endpoint with LocalAI and using the OpenAI integration with it instead.
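
To illustrate why a self-hosted endpoint can stand in for the cloud one: an OpenAI-compatible server accepts the same chat completions request, just at a different base URL. This is a sketch using only the standard library. The address `http://localhost:8080/v1` and the model name `local-model` are placeholders, and whether the HA integration lets you change the base URL is not something this example shows.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, model: str, prompt: str, api_key: str = "not-needed"
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder host, port, and model name; adjust to whatever your server exposes.
request = build_chat_request(
    "http://localhost:8080/v1", "local-model", "Is the garage door open?"
)

# Sending it requires a server listening at that address:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)["choices"][0]["message"]["content"]
```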

I wonder if the phrase “Dipping Our Toes” isn’t translating well for some folks? As a native English speaker (and from the US), that immediately keyed me to the fact that this is a very first step, obviously not the end.

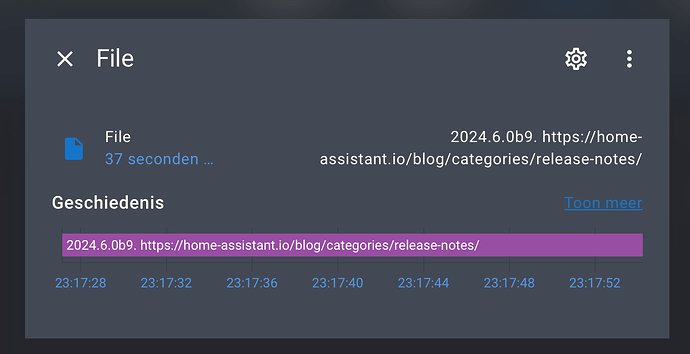

I now notice we have a UI for the sensor platform: file too?

I did migrate my notify services and deleted their YAML, but I believe we can do away with this too:

    sensor:
      - platform: file
        file_path: /config/logging/filed/filed_notifications.txt
        name: Filed notifications
        <<: &truncate_value
          value_template: >
            {% if value is not none %}
              {% if value|length < 255 %} {{value}}
              {% else %} Truncated: {{value|truncate(240,True, '')}}
              {% endif %}
            {% endif %}

given the fact we can do

and next set it up, even with a template:

I missed that during beta, and still can’t find it in the release notes. Or did we have that already…?

Adding those YAML settings results in a nice new sensor.file:

hmm, must check why it still shows the last beta though

There is no way to get to the template of the particular sensor once it is created, however, so that might be something to look out for in a follow-up development of the integration’s UI.

checking the entity_registry on the sensors, there is no template registered:

{"aliases":[],"area_id":null,"categories":{},"capabilities":null,"config_entry_id":"1725ccfbcc9b9b3ed8d3ecd7f18af2f3","device_class":null,"device_id":null,"disabled_by":null,"entity_category":null,"entity_id":"sensor.file","hidden_by":null,"icon":null,"id":"42df2d4fa1097f3d5f3711af24f57f12","has_entity_name":false,"labels":[],"name":null,"options":{"cloud.google_assistant":{"should_expose":false},"conversation":{"should_expose":false}},"original_device_class":null,"original_icon":"mdi:file","original_name":"File","platform":"file","supported_features":0,"translation_key":null,"unique_id":"1725ccfbcc9b9b3ed8d3ecd7f18af2f3","previous_unique_id":null,"unit_of_measurement":null}

]

so I am not sure this will actually truncate those longer strings?