Hello everyone,

edit:

I decided to use this thread to share my journey of improving my LLM-based assistant (OpenAI gpt-4o-mini) by adding tools.

So I will add the LLM tools I create, or link to LLM tools I start to use, in this thread, and try to keep the start post updated with links as a table of contents.

I learned a lot from Nathan’s Friday Party thread, but I think more user stories (and maybe smaller, less perfect solutions to start with) are always a good thing to help others get into the topic.

And you REALLY want to improve your LLM assistant with tools, as the standard behavior is volatile and subpar, at least with the current smaller/cheaper models.

Table of contents:

Scripts:

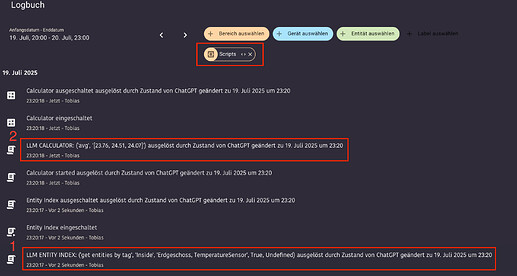

- A calculator script, as your LLM otherwise often can’t even determine the min/max value in a list of numbers, let alone do calculations.

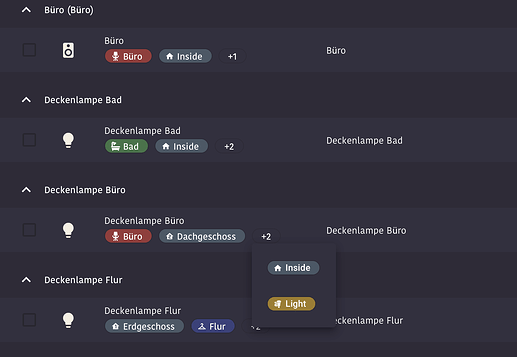

- Entity Index - A script to help the LLM find ALL entities related to your request. This improves reliability and user experience a lot!

- Use the free Google Generative AI tier as web search for your LLM with a script.

Useful other stuff:

- How to make debugging of the tool calls made by the LLM easier

- Read the Friday’s party thread, and take your time to think about the ideas and tips. Yes, it’s long, but there’s a lot to learn from it.

Start of original post, before I added this intro:

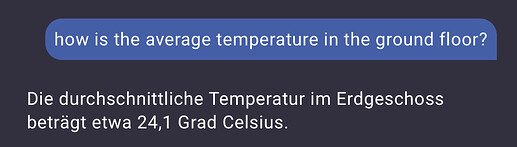

Since LLMs arrived, we talk to Assist much more naturally and freely and are often quite happy to believe what it tells us.

However, I’ve noticed that the bullshit level for smart-home related questions is still quite high.

The cause is usually a mathematical relationship between values: the models do not use a calculator, for example, but answer according to “linguistic probability”.

The more expensive models usually produce far better results in this respect, but

- not always, by a long shot

- I am afraid of the costs as the children are also on board.

That’s why I started with a first test script today, to see if this can be improved with a toolset. The first tool: determine the min/max value from a list of numbers.

Here is an example of what can go wrong without additional guidance for the LLM:

“Phew, it’s so hot in my study, what’s the coldest room in the house I could move to?”

The current temperatures in the various rooms are

- Bathroom: 26.0°C

- Roof bathroom: 28.5°C

- Office: 27.96°C

- Kitchen: 25.48°C

- <name_of_kid_1>: 26.57°C

- <name_of_kid_2>: 26.46°C

- Bedroom: 25.42°C

- Games room: 27.89°C

- WC: 25.8°C

- Living room: 25.08°C

The coolest room is the bedroom at 25.42°C.

Uhhhm, well. That’s rather wrong: the living room is cooler, at 25.08°C.

And if you repeat the question a few more times, you get a different room each time.

So I created the following script and shared it with Assist:

    alias: Min-Max-Calculator
    icon: mdi:calculator-variant
    description: >-
      Returns the minimum or maximum of a list of numbers (LLM tool).
      Can, for example, find the lowest temperature among many.
      Depending on the mode set, the function returns the smallest or largest number
      from the passed array.
    mode: single
    fields:
      operation:
        name: Operation
        description: >-
          'min' to get the smallest value from a list, or 'max' to get the largest
          value from a list
        required: true
        selector:
          select:
            options:
              - min
              - max
      numbers:
        name: Numbers
        description: A JSON array string with several numbers, e.g. [20.43, 21.5, 22.05]
        required: true
        selector:
          text: null
    sequence:
      - variables:
          nums: "{{ numbers | from_json }}"
          result: |-
            {% if operation == 'min' %}
              {{ {'value': nums | min } }}
            {% else %}
              {{ {'value': nums | max } }}
            {% endif %}
      - stop: ""
        response_variable: result
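
To check the script without going through the LLM, you can call it by hand, for example from Developer Tools. This is only a sketch: the entity ID `script.min_max_calculator` is my assumption (Home Assistant derives it from the name you save the script under), and older versions use `service:` instead of `action:`. The numbers are the room temperatures from the example above.

```yaml
# Manual test call for the min/max script.
# ASSUMPTION: the script's entity ID is script.min_max_calculator;
# look up the real ID in your own script list.
action: script.min_max_calculator
data:
  operation: min
  # Passed as a JSON array *string*, as the "numbers" field expects.
  numbers: "[26.0, 28.5, 27.96, 25.48, 26.57, 26.46, 25.42, 27.89, 25.8, 25.08]"
```

With these values the response should contain `value: 25.08`, which is the living room and not the bedroom the LLM picked.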

Then I added this text in the Conversation Agent settings (in the OpenAI Integration configuration):

You are VERY bad at calculations, finding min/max values, comparing numbers or date calculations like when what date might be tomorrow, next week or how many days it is until a given date.

Always, really always use the tools provided when possible to get the solution. Do NOT try to calculate yourself as long as there is another way.

After that, Assist can handle questions about min / max values of things like temperatures, illumination, battery states, … without problems.

At the beginning I always thought about large and cool use-cases when looking for ideas for new intents / scripts.

But I think there is a lot of potential in smaller tools for Assist, as many problems in a smart home are not related to linguistic probability, but to technical details instead.

Getting this right might improve the user experience a lot, at least until cheap models get better at this kind of problem.

Some other ideas in my head:

- Kind of a real calculator that can handle +, -, *, /, avg, …

- Date calculations like “what’s the date of the day after tomorrow”, “what date is next Tuesday”, “what day is today + x days”.

The second example is also something I had a lot of problems with.

Asking for appointments in the calendar, or asking about the weather next weekend, …

There’s really a lot where these models fail.
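
The “today + x days” idea could look roughly like this, built the same way as the min/max script. It is an untested sketch, not a finished tool: the alias, the field name `offset_days` and the keys of the response are made up for illustration, and “next Tuesday” style questions would need extra logic that is not included here.

```yaml
alias: Date-Offset-Calculator
icon: mdi:calendar
description: >-
  Returns the date and weekday that lie a given number of days after today
  (LLM tool). Use 1 for tomorrow, 2 for the day after tomorrow, 7 for one
  week from today.
mode: single
fields:
  offset_days:  # hypothetical field name
    name: Offset days
    description: Number of days to add to today's date, e.g. 2
    required: true
    selector:
      number:
        min: 0
        max: 366
        mode: box
sequence:
  - variables:
      # now() and timedelta() are provided by Home Assistant's template engine.
      # The weekday name from strftime('%A') depends on the system locale.
      result: |-
        {% set target = now() + timedelta(days=(offset_days | int)) %}
        {{ {'date': target.strftime('%Y-%m-%d'), 'weekday': target.strftime('%A')} }}
  - stop: ""
    response_variable: result
```

Returning both the ISO date and the weekday name means the LLM does not have to work out the weekday itself, which is exactly the kind of step where it tends to guess.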

So if you

- have scripts like this in your setup too

- know other use-cases where the LLMs fail at general simple tasks that are needed for different daily questions

- simply want to discuss this

feel free to participate.