Well, OK, I’ve been cajoled into it long enough. Here I’m going to document some random thoughts I’ve collected about building an agentic AI - ‘Friday’ - in Home Assistant.

This document is not intended to convince anyone my way is the ‘correct’ way. In fact, far from it - anyone who tells you HOW to AI right now, in the nascent stages of the art, is dead wrong. Run from them.

Instead, take these chapters (yes there will be chapters, I apologize now) as collective knowledge on how we all get better, together.

Some of you will ask, so: yes, I do this junk for real money - it’s my day job. This project was born out of my need to ‘stay one step ahead’ of my studen… er, clients. So Friday is my research playground.

Now as this will be a discussion thread - I will state RIGHT NOW, I will ask the moderators to moderate this thread hard. We’re not here to argue about any of this stuff.

I’m not here to claim one model king over another. Dude, get over it - the models will leapfrog each other almost DAILY for the foreseeable future and after that, honestly - HOURLY. What I am planning for is what I anticipate will be available to the general public within the next 8-12 months. In my current reality I see capable base hardware in the hundreds of (US) dollars and AI specialty gear in the few thousands. If you’re willing to forgo another hot tub, you can do this. If you want to convince your spouse that this is a worthwhile addition to an over-the-top hot tub, stick around.

Yes, I plan on running locally eventually, when it’s economically feasible.

It is not yet for me. So yes, I use a cloud-based model (gpt4.0-mini via API, probably until next week… we’ll see), and yes, I pay for that model so they contractually cannot use my data.

What I AM here to discuss is how in the heck I was able to get Friday to do the things she does, and the general concepts I’m using to get there.

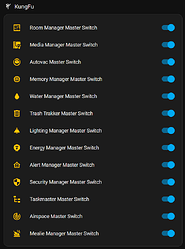

Who is FRIDAY? Friday takes her name from Tony Stark’s second AI construct, after JARVIS became the Vision. (Go watch - there’s a whole movie about it, really…) She is designed to be purposefully smarter than you, bratty, and fun to work with. If you see jabs in the commands, they’re there on purpose to keep the mood up - I find reinforcing mood cues helps.

Let’s start with Concepts…

Concepts mean ideas, not code. NO I WILL NOT SHARE MY PROMPT. FULL STOP. In fact I’m not going to share most of my code - I will share the frameworks, some intents, and show you how and why they fit together and why I chose the things I did. A LOT of this is first attempt so if there’s a better way - by all means adopt someone else’s way.

— If you are looking for a turnkey solution stop now - it’s not here. —

You will see very soon why it’s simply not feasible. I will show you where to find the parts to construct your own. You should be able to apply the ideas to whatever pieces you choose to build everything…

What is Friday? Why is she ‘Different’?

Friday is designed from the ground up to be a fully ‘agentic’ AI construct using commodity tools and systems available.

Ok Nathan - what’s Agentic?

So I’ll grossly oversimplify where we are in the evolution of AI as something akin to:

'Calculator' >> 'Autocorrect' >> 'LLM' >> 'Reasoning LLM' >> 'AGI' >> '(Profit/Doom)'???

It’s the end of February 2025. Agentic is different from a ‘standard’ LLM: where a ‘standard’ LLM takes one slice through a dataset and synthesizes a response, reasoning LLMs have been trained to run multiple passes and compare the results. We can use this to our advantage to handle open-ended conversations with unknowns (as opposed to a workflow, which has a defined start and stop).

The AI will need to understand keywords and context, determine the intent of the user, determine if a tool is available that does the ask - OR gets us stepwise closer to the end goal (the big delta here from previous) - and execute that tool without interaction from the user.

Practical application:

Case 1: LLM only

AI, Turn on the lights.

- Everything needed here can be gleaned from the conversation itself - and assuming you’re set up correctly, we can pull intent and execution from this with even the simplest of local execution pipelines.

Case 2: Agentic LLM with tool use (prep for Reasoning LLMs)

AI, help me find something to cook for dinner for tonight…

To do this, let’s introduce two tools (I currently prefer intents to scripts - Future Nathan note, Sep 2025: no we don’t, for reasons. This stuff is still important though, read on, more later), tell the AI HOW the tools fit together and how to plan to use them together, and prime the prompt with information from both tools as well as relevant information to identify where to use them…

- Tool 1: Given a short search term or keyword from the AI, the tool returns a dictionary of up to 10 recipes that ‘match’, with details including name, description and ‘recipe_id’.

I needed to be able to make ‘on demand’ requests to a service that supports a RESTful API. I chose to use the rest_command integration, and a paired script to use that command.

```yaml
# REST Command to support Mealie Recipe Search (config.yaml)
# YOU NEED AN API TOKEN FROM YOUR MEALIE INSTALL
rest_command:
  mealie_recipe_search:
    url: >
      [YOUR_MEALIE_BASE_URL]:9925/api/recipes?
      orderDirection=desc
      &page=1
      &perPage={{ perPage | default(10) }}
      {%- if search is defined -%}
      &search={{ search | urlencode }}
      {%- endif -%}
    method: GET
    headers:
      Authorization: !secret mealie_bearer
      accept: "application/json"
    verify_ssl: false
```

There’s not much going on here from the AI POV, but we’re not done…
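A quick way to sanity-check the command before any AI touches it is to fire it by hand from Developer Tools > Actions. This is only a minimal sketch; the search term and result count are placeholder values, not anything from my setup:

```yaml
# Developer Tools > Actions (YAML mode)
action: rest_command.mealie_recipe_search
data:
  search: "chicken"   # placeholder search term
  perPage: 3          # placeholder; the command falls back to 10 if omitted
```

If the token and URL are right, the response should carry a status code plus the parsed JSON under content, and content['items'] is the list the script's speech template loops over.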

This tool comes in multiple parts. Next the script (The part the AI fires)

There’s some big stuff going on here that I cannot stress enough.

- Parameters

- Description

- Build for AI use

- Clear output that directs the user (AI) to what’s next.

Let’s look.

```yaml
intent_script:
  search_recipes:
    description: >
      # This is your search tool for Mealie's Recipe System.
      # Returns:
      # recipe_id: 'recipe.id'
      # name: 'recipe.name'
      # description: " recipe.description "
      # (and other additional detail instructions as available...)
      # Top Chef: (Best Practices)
      # First, use this search_recipes intent to help find things to cook for your human.
      # The return includes recipe_id
      # THEN, when you help your human prepare the food provide the correct recipe_id to
      # get_recipe_by_id(recipe_id:'[my_recipe_id_guid]')
      # to get ingredients and detailed cooking instructions.
      # Humans like food.
    parameters:
      query: # Your search term to look up on Mealie
        required: true
      number: # the number of search terms to return - default(10) if omitted, Max 50 please
        required: false
    action:
      - action: rest_command.mealie_recipe_search
        metadata: {}
        data:
          search: "{{query | default('')}}"
          perPage: "{{number | default(10)}}"
        response_variable: response_text
      - stop: ""
        response_variable: response_text # and return it
    speech:
      text: >
        search:'{{query | default('')}}' number: '{{number| default(10)}}'
        response:
        {%- if action_response.content['items'] | length > 0 %}
        {%- for recipe in action_response.content['items'] %}
        recipe_id:'{{ recipe.id }}'
        name: '{{ recipe.name }}'
        description: "{{ recipe.description }}"
        detail_lookup: get_recipe_by_id{'recipe_id': '{{ recipe.id }}'}
        {%- endfor %}
        {%- else %}
        {%- if ( (query | default('')) == '') %}
        No search term was provided to query.
        usage: search_recipes{'query': 'search term', 'number': 'number of results to return'}
        {%- else %}
        No recipes found for query:"{{ query }}".
        {%- endif %}
        {%- endif %}
```

- Parameters

```yaml
    parameters:
      query: # Your search term to look up on Mealie
        required: true
      number: # the number of search terms to return - default(10) if omitted, Max 50 please
        required: false
```

VERY IMPORTANT SIDENOTE:

Future Nathan note - Sep2025

…at the time this was written the system started changing to not require this, there were debates if it was even necessary. I never stopped making sure this was true for intents, but as you will read later, intents for me, are now only CORE stuff. Most everything else is a script or template or combination thereof now… Which means this no longer is even an issue.

Now, Back to your episode…

Notice above I’m using the slot name ‘query’ in my intent. To be able to send anything you want through like that in an intent, you first need to add it to your slot_types.yaml file (under custom_sentences/[yourlanguagecode]):

```yaml
# config/custom_sentences/en/slot_types.yaml
lists:
  bank:
    wildcard: true
  number:
    wildcard: true
  index_x:
    wildcard: true
  index_y:
    wildcard: true
  datetime:
    wildcard: true
  due_date:
    wildcard: true
  due_datetime:
    wildcard: true
  description:
    wildcard: true
  value:
    wildcard: true
  query:
    wildcard: true
  status:
    wildcard: true
  id:
    wildcard: true
  recipe_id:
    wildcard: true
  new_name:
    wildcard: true
  type:
    values:
      - 'completed'
      - 'needs_action'
  period:
    values:
      - "DAY"
      - "WEEK"
      - "MONTH"
  operator:
    values:
      - "AND"
      - "OR"
      - "NOT"
      - "XOR"
      - "CART"
```

If it’s not in this file, you can’t name a slot with it in an intent script, PERIOD. If you’re having trouble sending wildcards to custom intent scripts, this is LIKELY the reason.
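For the spoken side of this, a sentence file is what ties a phrase to the intent and feeds the wildcard slot. Here is a minimal sketch; the file name and the trigger phrases are made up for illustration, and it leans on the `query` wildcard list from slot_types.yaml:

```yaml
# config/custom_sentences/en/recipes.yaml   (hypothetical file name)
language: "en"
intents:
  search_recipes:            # must match the intent_script name
    data:
      - sentences:
          # {query} captures free text because 'query' is a wildcard list
          - "(find|search for) [a] recipe[s] for {query}"
```

With something like this in place, "find a recipe for chicken" should land in search_recipes with query set to "chicken".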

In the parameters inside the intent itself, use clear, simple language in the REM-line comment to describe what each parameter is (YES, the AI sees this). If you have special requirements for that slot, TELL the AI here; don’t make it guess. And look at the output:

```yaml
        {%- else %}
        {%- if ( (query | default('')) == '') %}
        No search term was provided to query.
        usage: search_recipes{'query': 'search term', 'number': 'number of results to return'}
        {%- else %}
        No recipes found for query:"{{ query }}".
        {%- endif %}
        {%- endif %}
```

If there are ZERO results, I clearly tell the AI: hey, this is a non-error null set… Otherwise it may decide it was an error and make stuff up.

- Description

```yaml
    description: >
      # This is your search tool for Mealie's Recipe System.
      # Returns:
      # recipe_id: 'recipe.id'
      # name: 'recipe.name'
      # description: " recipe.description "
      # (and other additional detail instructions as available...)
      # Top Chef: (Best Practices)
      # First, use this search_recipes intent to help find things to cook for your human.
      # The return includes recipe_id
      # THEN, when you help your human prepare the food provide the correct recipe_id to
      # get_recipe_by_id(recipe_id:'[my_recipe_id_guid]')
      # to get ingredients and detailed cooking instructions.
      # Humans like food.
```

YES, write a book - the more context here the better. Describe usage situations and scenarios - describe how the tools fit together.

- Build for AI use

In the sample I show:

```
# recipe_id: 'recipe.id'
# name: 'recipe.name'
# description: " recipe.description "
# (and other additional detail instructions as available...)
```

The REAL output comes out like:

```
# recipe_id: 'RECIPEGUID' ( <<< this )
# name: 'Chicken Dish'
# description: "Man, the best chicken dish ever... It's got ..."
# more_detail: use intent: get_recipe_by_id(recipe_id:'[my_recipe_id_guid]')
                                                        ^^^ Gets Prefilled here
```

- Clear output that directs the user (AI) to what’s next. Yes, I cheated and kind of built it into the command output (see previous).

- Tool 2: Accepts a ‘recipe_id’ and produces detailed JSON-formatted instructions on how to prepare the requested recipe.

Look for the same 4 things as in the previous tool…

```yaml
  get_recipe_by_id:
    description: >
      # This tool pulls detailed preparation instructions for any recipe_id
      # in Mealie's Recipe System.
      # NOTE: if you do NOT know the correct Mealie [RECIPE_ID] (this is a primary key in their index...)
      # then this intent may not be what you're looking for.
      # Maybe try
      # search_recipes(query:'[search_term]', number:'[number]')
      # to find some ideas or get recipe_id's off of today's
      # ~MEALIE~
      # menu.
      # Humans like food.
    parameters:
      recipe_id: # Recipe ID to look up on Mealie
        required: true
    action:
      - action: mealie.get_recipe
        metadata: {}
        data:
          config_entry_id: 01JG8GQB5WT9AMNXA1HPE65W4E
          recipe_id: "{{recipe_id}}"
        response_variable: response_text # get action response
      - stop: ""
        response_variable: response_text # and return it
    speech:
      text: >
        recipe:'{{recipe_id}}'
        {{action_response}}
```

Here I told it: hey, if you don’t know the recipe ID and you should, here’s where you can get it…

So at this point, your AI MIGHT be able to use each intent individually - it WILL stumble over using them in concert UNLESS you have explicitly told it that it can use the tools together.

In my prompt I have a section called ‘the Cortex’. In this section I describe use cases for tools that may not be apparent to the normal entity:

Here’s a part of it: (Oh, and a workaround / solve for your AI telling you the wrong day for a calendar item)

```yaml
Advanced intent use:
  description: You may use intents to perform the following and similar tasks.
  Uses:
    - >-
      When asked for 'what's on the calendar' or what's coming up on the
      calendar with no additional context, get today's calendar by issuing
      intent: calendar_get_todays_events('name' : '*')
    - >-
      Calendars default to store data as GMT unless otherwise noted. So when
      reading out events Always validate if you need to convert time/date to
      your users local time zone. Humans like local time and are slow at
      conversions from Zulu or GMT.
    - >-
      You may get all active tasks on all task lists by issuing intent:
      get_tasks('name' : '*') When asked what's on our task list, prefer this
      unless a task list is specified.
```

You can add things like this to the prompt. In my case I decided to put that context IN the descriptions of the intents themselves (a model I will continue going forward; it’s way too easy).
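On the GMT note in that excerpt: if the model still fumbles the conversion, one option is to hand it a tool that does the math. This is only a sketch of that idea, not something from my install; the script name, field name and wording are all hypothetical:

```yaml
# Hypothetical helper script: converts a UTC timestamp to the home's local time
script:
  utc_to_local:
    alias: "Convert UTC to local time"
    description: >
      Converts a UTC/GMT timestamp to local time. Use this before reading
      calendar times to your human. Humans like local time.
    fields:
      utc_time:
        description: "ISO 8601 timestamp WITH offset, e.g. 2025-02-28T23:30:00+00:00"
        required: true
        example: "2025-02-28T23:30:00+00:00"
    sequence:
      # as_datetime parses the string, as_local shifts it to HA's configured zone
      - variables:
          result:
            local_time: "{{ (utc_time | as_datetime | as_local).isoformat() }}"
      - stop: "Converted to local time"
        response_variable: result
```

The input needs to carry its offset (+00:00); a bare timestamp without one may not be treated as UTC.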

So here’s why it matters.

You’re somewhere around the hockey-stick bend as LLM transitions to AGI through Reasoning. (Test Time Compute and Test Time Training for those that want to read ahead) Next revs of HA are expected to support reasoning models, and streaming voice responses. The response will seem more natural and seamless… But to get MAGIC you have to arm that reasoning engine with tools to augment what it knows about the world.

First I ask what’s for dinner:

(This is another tool: I have the Mealie menus exposed to the AI as calendars, part of the base Mealie HA integration.) But notice she comes back negative - which is correct. Watch out for the AI making stuff up here to make the user happy… They really don’t like to return negative responses, and you may have to reinforce truthiness in your directives (see mine previously).

So she offers to find some - let’s take her up on it…

This, ladies and gents, is an unprompted tool call (and by the way, a MASSIVE milestone). She called into Mealie to get recipes using the keyword Chicken… But wait - there’s more…

Intentional mispronunciation / spelling (seriously, my relatives in Louisiana, please don’t kill me, Cher…). This is where LLM v. short phrase SHINES. Key phrases can’t fix mangled pronunciation. But this means it KNOWS what I meant…

There’s Chef Paul’s best recipe right there…

SO, as of today - Friday can help me with preparing dinner for our little dinner party… with a gpt4.0-mini based LLM. …If she can do THIS now?

Tools. Tools will make the difference in your installs. Get good at Intent Scripts, Scripts and MCP.

Next time we’ll revisit how to give context to the AI - I need to have Friday make me some Drunken Noodles.

I’ll link some of the other posts I’ve done in AI below:

- the ‘grandma’ post… Issues getting a local LLM to tell me temperatures, humidity, etc - #3 by NathanCu

- AI Shopping List - #2 by NathanCu

- Voice Assistant Memory - #4 by NathanCu

- Custom Component: Flightradar24 - #309 by NathanCu

- Assist with official openai integration cannot parse parameters to custom intents - solved via scripts to set temperature via assist and openai GPT - #12 by NathanCu

tl;dr (dagum you’re wordy, dawg)

Don’t skip these…

- Poetry in Motion…

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #15 by NathanCu - Ninjas…

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #34 by NathanCu - NINJA 2, Electric Boogaloo or, “Wait did you just hand her a steering wheel?”

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #42 by NathanCu - Limits, gov’na…

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #49 by NathanCu - Intel… This is hard. Fix it. (docker-compose.yml for xPU accelerated intel-ipex-ollama+openwebui on a NUC14 Pro AI)

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #53 by NathanCu - I SAID Party… Meet Kronk. (Local LLM Services)

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #55 by NathanCu - todo_CRUD (todo. multitool script)

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #94 by NathanCu

v.1.2

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #102 by NathanCu - calendar_CRUD (calendar. multitool script) - code refactor test…

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #96 by NathanCu

v1.2

Friday's Party: Creating a Private, Agentic AI using Voice Assistant tools - #101 by NathanCu