I have to say this is one of the most useful posts I’ve read here. Very good idea to use automations to publish MQTT discovery data instead, since that format won’t really change whereas HA config is fluid. Thank you!

This post, and the ones in the thread you linked, were extremely helpful - I would not have known I could create my sensors this way without your posts. I have removed all the manually created MQTT sensors for my weather station, and instead of changing them to the new MQTT sensor format, I re-created them using MQTT discovery. This seems like a much better solution. And now as a bonus I have my weather station showing as a device in HA. Thank you so much!
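For anyone wondering what "publishing MQTT discovery data" looks like in practice, here is a minimal sketch of one discovery message for a weather-station sensor. The topic layout and the payload keys follow the MQTT discovery format as I understand it; the object ID, state topic, and device names are made up for illustration, and the function name is my own.

```python
import json


def discovery_message(object_id, name, state_topic, unit,
                      device_id, device_name,
                      device_class=None, discovery_prefix="homeassistant"):
    """Build the (topic, payload) pair for one MQTT-discovered sensor.

    All argument values used below are hypothetical examples.
    """
    topic = f"{discovery_prefix}/sensor/{object_id}/config"
    payload = {
        "name": name,
        "unique_id": object_id,
        "state_topic": state_topic,
        "unit_of_measurement": unit,
        # Sensors that share the same identifiers are grouped under one device.
        "device": {"identifiers": [device_id], "name": device_name},
    }
    if device_class is not None:
        payload["device_class"] = device_class
    return topic, json.dumps(payload)


topic, payload = discovery_message(
    object_id="weather_outdoor_temp",            # hypothetical
    name="Outdoor temperature",
    state_topic="weather/outdoor/temperature",   # hypothetical
    unit="°C",
    device_id="weather_station",
    device_name="Weather station",
    device_class="temperature",
)
print(topic)
print(payload)
```

The same topic and payload can be sent from an automation with the mqtt.publish service, usually with the retain flag set so the entity is still there after a broker or HA restart.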

2nd Sunday of the month isn’t it?

I wish it was every day. My database was 2.4 GB and went down to 800 MB, and my backups have gone down from 1000 MB to 430 MB.

Can I make it run this purge daily? I have tried running the service with repack but it doesn’t work.

I think we’re getting caught up in terminology here.

The daily purge deletes any records older than purge_keep_days. The default is 10, but you can set this to whatever you want.

The monthly database routine compacts the database, recovering unused space.

The last two HA Core versions did some database upgrades at install time, resulting in more efficient storage. These savings would be realized after the monthly routine happens, or if you do a compact yourself.

Anyway, this is my current understanding. Feel free to correct me if I got something wrong!

That should be a one-time drop, as all the old-format rows are likely gone from your database now, assuming you upgraded to 2022.6 more than 10 days ago and you have the default retention period of 10 days. Unless you change how long data is kept or add new entities, it should stay around the same size (+/- 10%) until next month’s repack.

Point is, you can’t do that repack yourself, or at least it doesn’t work for me.

I did, yeah. DB size right now is 1017.6 MB, so 200 MB of growth in a day.

Anyone else noticed the IMAP sensor has stopped working in this release?

EDIT: Seems like 30th May they changed some security settings and you now MUST use 2FA and an app password

Using the recorder.purge service with the repack option selected seems to work for me. It did take me a while to figure out that I not only had to select the check box, but also the switch way over on the right-hand side of the screen.
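For those who want to trigger the same call outside the UI (for example from a daily cron job), here is a rough sketch that builds the REST request for recorder.purge with repack enabled. It assumes the standard /api/services/... endpoint and a long-lived access token; the host name and token below are placeholders, and the function name is my own.

```python
import json
import urllib.request


def build_purge_request(base_url, token, keep_days=10, repack=True):
    """Build (but do not send) a POST request that calls recorder.purge."""
    body = json.dumps({"keep_days": keep_days, "repack": repack}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/services/recorder/purge",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder host and token; replace with your own before sending.
request = build_purge_request("http://homeassistant.local:8123", "YOUR_TOKEN")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

The service call returns right away while the purge and repack run in the background, so the file size may not change for a while on a large database.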

I’ve also been doing some DB maintenance off-line with HA shut down, using the SQLite DB Browser, where it’s called “compact” instead of “repack.” I think those are the same thing. I’m hoping I don’t have to do this any more, with the dramatic improvements in efficiency and the monthly automatic process.
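As far as I know, the "compact" action in DB Browser boils down to SQLite's VACUUM statement. A minimal sketch of doing the same thing from Python is below, assuming the default SQLite recorder database and that HA is shut down while it runs. The function name is my own, and the path in the usage comment is only an example.

```python
import os
import sqlite3
from typing import Tuple


def compact_sqlite(path: str) -> Tuple[int, int]:
    """Run VACUUM on an SQLite file and return (size_before, size_after) in bytes."""
    before = os.path.getsize(path)
    # isolation_level=None puts the connection in autocommit mode;
    # VACUUM cannot run inside an open transaction.
    conn = sqlite3.connect(path, isolation_level=None)
    try:
        conn.execute("VACUUM")
    finally:
        conn.close()
    return before, os.path.getsize(path)


# Example usage (path is an assumption, adjust to your install):
# before, after = compact_sqlite("/config/home-assistant_v2.db")
# print(f"{before / 1e6:.1f} MB -> {after / 1e6:.1f} MB")
```

VACUUM rebuilds the file into a temporary copy first, so it needs free disk space roughly equal to the database size while it runs.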

I suspect your executor is overloaded at startup, or there is I/O in your event loop, or something is CPU bound which causes the executor overload in the first place. It is likely it is not going to be a single fix that solves the issue as usually there are many contributing factors that get the system to that point.

These two will probably help

If you open an issue and provide a few py-spy dumps during startup, we can probably figure out why it is so slow.

Will do, thanks Nick! Now where do I find instructions to install py-spy on my HA OS install?

I was using a script but also used the UI and it didn’t seem to do anything at all. I am using MariaDB though.

py-spy instructions (but update Python’s version from 3.8 to 3.9 in the path of one of the commands).

If all else fails, download the .whl file from the py-spy v0.3.12 release (benfred/py-spy on GitHub), unzip it with the unzip command, and extract the binary.
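A .whl file is an ordinary zip archive, so if the unzip command is not available the same extraction can be sketched in Python. This assumes the executable inside the wheel is named py-spy, which I have not verified for every release; the function name is my own.

```python
import os
import posixpath
import shutil
import stat
import zipfile


def extract_binary(wheel_path, dest_dir, binary_name="py-spy"):
    """Copy the first archive member named `binary_name` into dest_dir."""
    with zipfile.ZipFile(wheel_path) as wheel:
        for member in wheel.namelist():
            # Zip member names always use forward slashes.
            if posixpath.basename(member) == binary_name:
                target = os.path.join(dest_dir, binary_name)
                with wheel.open(member) as src, open(target, "wb") as dst:
                    shutil.copyfileobj(src, dst)
                # Mark the extracted file as executable for the owner.
                os.chmod(target, os.stat(target).st_mode | stat.S_IXUSR)
                return target
    raise FileNotFoundError(f"{binary_name} not found in {wheel_path}")


# Example usage (file name is a placeholder for whichever wheel you downloaded):
# print(extract_binary("py_spy.whl", "/tmp"))
```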

I also have problems after upgrading. Are you seeing the same issue in your log:

    Error while setting up growatt_server platform for sensor
    Traceback (most recent call last):
      File "/usr/src/homeassistant/homeassistant/helpers/entity_platform.py", line 249, in _async_setup_platform
        await asyncio.shield(task)
      File "/usr/src/homeassistant/homeassistant/components/growatt_server/sensor.py", line 74, in async_setup_entry
        devices, plant_id = await hass.async_add_executor_job(get_device_list, api, config)
      File "/usr/local/lib/python3.9/concurrent/futures/thread.py", line 58, in run
        result = self.fn(*self.args, **self.kwargs)
      File "/usr/src/homeassistant/homeassistant/components/growatt_server/sensor.py", line 42, in get_device_list
        login_response = api.login(config[CONF_USERNAME], config[CONF_PASSWORD])
      File "/usr/local/lib/python3.9/site-packages/growattServer/__init__.py", line 119, in login
        data = json.loads(response.content.decode('utf-8'))['back']
      File "/usr/local/lib/python3.9/json/__init__.py", line 346, in loads
        return _default_decoder.decode(s)
      File "/usr/local/lib/python3.9/json/decoder.py", line 337, in decode
        obj, end = self.raw_decode(s, idx=_w(s, 0).end())
      File "/usr/local/lib/python3.9/json/decoder.py", line 355, in raw_decode
        raise JSONDecodeError("Expecting value", s, err.value) from None
    json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)
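The last line of that traceback is what json.loads raises when the text it is given does not start with valid JSON at all, for example an empty body or an HTML error page. The small sketch below reproduces the message outside HA. It does not tell us why the server answered that way. The "back" key comes from the traceback; the "success" field and the function name are made up for the demo.

```python
import json


def parse_login_body(body: bytes):
    """Decode a response body as JSON, with a clearer error if it is not JSON."""
    text = body.decode("utf-8")
    try:
        return json.loads(text)
    except json.JSONDecodeError as err:
        # Include the start of the body so the log shows what actually came back.
        raise ValueError(
            f"response is not JSON ({err}); body starts with {text[:80]!r}"
        ) from err


# A JSON body parses fine ("success" is a hypothetical field).
print(parse_login_body(b'{"back": {"success": true}}')["back"])

# An empty body or an HTML page fails at the very first character.
for bad_body in (b"", b"<html>Service unavailable</html>"):
    try:
        parse_login_body(bad_body)
    except ValueError as err:
        print(err)
```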

Hello All!

Have a problem with my gasmeter:

Energy Dashboard is complaining about wrong device_class:

My configuration:

    rest:
      - resource: http://192.168.133.91/counter
        scan_interval: 60
        sensor:
          - name: gas counter data
            value_template: "OK"
            json_attributes:
              - gas meter
              - company
              - s/n
              - install date
              - timestamp
              - counter
              - volume
              - millis
        binary_sensor:
          - name: gas delivery
            value_template: '{{ value_json.delivery }}'

    template:
      - sensor:
          - name: gas meter name
            state: "{{ state_attr('sensor.gas_counter_data', 'gas meter') }}"
          - name: gas meter company
            state: "{{ state_attr('sensor.gas_counter_data', 'company') }}"
          - name: gas meter serial number
            state: "{{ state_attr('sensor.gas_counter_data', 's/n') }}"
          - name: gas meter install date
            state: "{{ state_attr('sensor.gas_counter_data', 'install date') }}"
          - name: gas counter timestamp
            state: "{{ state_attr('sensor.gas_counter_data', 'timestamp') }}"
          - name: gas counter
            state: "{{ state_attr('sensor.gas_counter_data', 'counter') }}"
            state_class: measurement
          - name: gas volume
            state: "{{ state_attr('sensor.gas_counter_data', 'volume') }}"
            device_class: gas
            unit_of_measurement: "m³"
          - name: gas counter millis
            state: "{{ state_attr('sensor.gas_counter_data', 'millis') }}"
            state_class: measurement

    utility_meter:
      gasmeter_yearly:
        source: sensor.gas_volume
        cycle: yearly
      gasmeter_monthly:
        source: sensor.gas_volume
        cycle: monthly
      gasmeter_weekly:
        source: sensor.gas_volume
        cycle: weekly
      gasmeter_daily:
        source: sensor.gas_volume
        cycle: daily

All entities are there and there are no other problems, but my energy dashboard reports a wrong device_class.

I tried device_class: “gas” and “energy”, with unit_of_measurement: “m³”.

Any hints?

Marc

I appear to have an issue with 2022.6.6 whereby UniFi switches for network devices are no longer able to be turned off.

When turned off the switch bounces back to on within a second. Was working in 2022.6.5 and earlier. Can’t see anything in the logs or in the above thread so thought I’d see if anyone else has encountered this.

Cheers

James

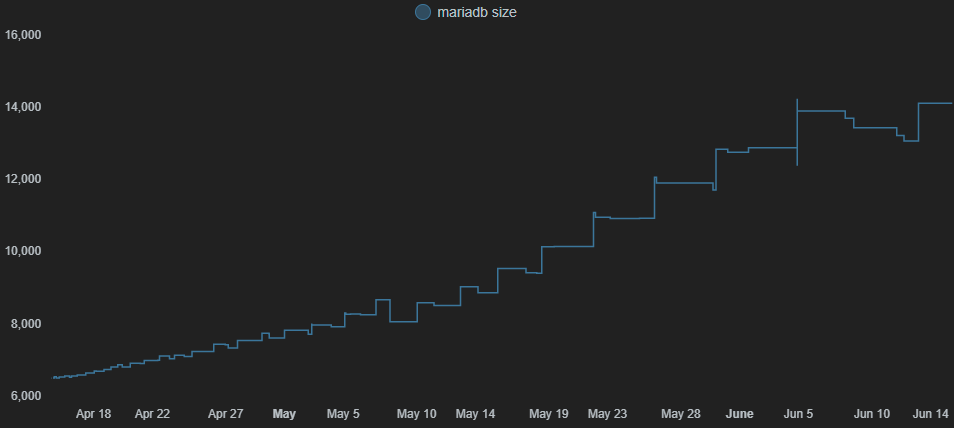

I have not added any significant number of entities or automations recently. I only use includes in my recorder config; mostly I include specific entities and only use a few globs where necessary, so I think I’m being very judicious in what I record.

I queried the DB and identified a few “heavy hitters” that I decided really didn’t need to be captured. I’ve removed those and have performed multiple Recorder: Purge calls with the repack option checked and enabled.

Despite that, the DB continues to grow.

Have you looked at the energy tables (statistics*)? I had problems with those always growing a few releases ago, but for me they seem to have settled down a bit now.

If that’s not it, then it’s back to checking the events and states tables. Check your purge_keep_days and make sure the excludes you’ve added are working as intended.