Hey I have managed (I think) to solve the issue which was bothering me for a long time and I have decided to share the solution with you guys. I hope that someone finds it helpful.

Problem

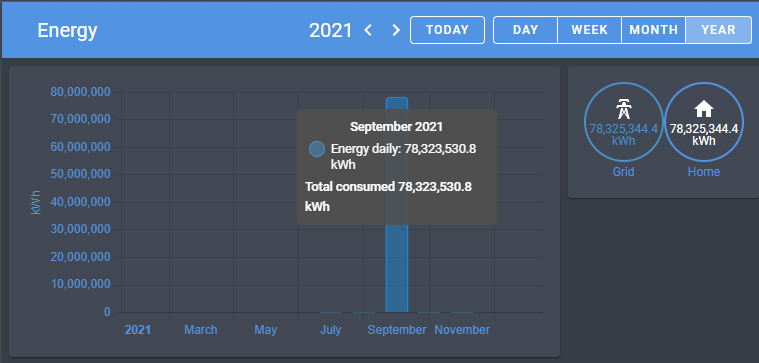

I have OWL energy sensor which sends the data via RF433. The problem is that sometimes the received values are not correct. Pretty often I get “0” as the energy_total and once the value jumped significantly by 78M.

I don’t have the sensor data to investigate what has happened that day and what were the actual sensor values. The sensor data which I show below is the data from utility_meter.

Solutiuon

Skip known invalid values (e.g. “0”)

This solution can be applied for any value which can be quickly recognized as invalid like 0 for energy or more than 400 for outside temperature.

My entity for energy total sensor is called sensor.owl_energy_total and to filtering out 0 states I have created another sensor (template one) with very simple condition:

sensor:

- platform: template

sensors:

energy_total:

friendly_name: "Energy total"

device_class: energy

unit_of_measurement: kWh

value_template: >

{% if states('sensor.owl_energy_total') | float == 0 %}

{{ states('sensor.energy_total') }}

{% else %}

{{ states('sensor.owl_energy_total') | float | multiply(0.001) | round(1) }}

{% endif %}

This way you can be sure that sensor.energy_total entity will always have values not equal 0.

Fix invalid statistics data

Please create a backup/snapshot before proceeding with below steps.

You will need some database explorer/viewer tool. Which you will choose depends on the DB you use in you HA. Since SQLite is the default one I will use the “SQLite Web” add-on.

-

Check when exactly the issue happened.

I think that the best way to check the day is to go to Energy tab, switch to mothly view and go to prev months (if there is a need).

-

Go to the DB explorer and check the entity metadata_id. You can find it in

statistics_metatable.

-

Get the exact IDs for table entries.

Switch to “Query” tab and type similar query (you need to update the ID and dates). Sample result at the beginning of this post.select id, created, state, sum from statistics where metadata_id == 10 and created between '2021-09-04' and '2021-09-05' -

Fix the state values

Here we have the tricky part. First I was hoping that updating thestatecolumn values will be enough but it turned out it isn’t. Pasting here an example query to update these values anyway.We assume here that the first invalid value was’t affecting the state so it will be the same as the prev row.

update statistics set state = round(state - 78323324.8, 1) where metadata_id == 10 and id between 17667 and 17891; -

Fixing sum values

Initially I have decided to change the values starting from the point where the issue happened onwards - in more or less the same way how I have fixedstatevalues above. So I have tried to reduce the values in all records after 17653 (2021-09-04 06:12:01). But then it turned out that my most recent readings (last day) has this enormous spike.I have tried to find the current entity state value somewhere in the

.storagedir but I couldn’t find it and I didn’t bother to look at the utility_meter code to check where does it keep its state.The other solution was to update the previous states (the ones <= 17653) and this worked perfectly.

78323328.5 - 592.4 = 78323324.8update statistics set sum = sum + 78323324.8 where metadata_id == 10 and id <= 17653;

Note:

I recommend to experiment on external DB before applying any DB changes in your HA. Here you have saved SQL fillde which you can use: DB Fiddle - SQL Database Playground.

Here is a simple SQL for adding table with sample data (in case above link stops working)

CREATE TABLE statistics (

id INTEGER NOT NULL,

created DATETIME,

metadata_id INTEGER,

start DATETIME,

mean FLOAT,

min FLOAT,

max FLOAT,

last_reset DATETIME,

state FLOAT,

sum FLOAT,

PRIMARY KEY (id)

);

INSERT INTO `statistics` (`id`,`created`,`metadata_id`,`start`,`mean`,`min`,`max`,`last_reset`,`state`,`sum`)

VALUES

(17233,"2021-09-03 00:12:01.562213",10,"2021-09-02 23:00:00",null,null,null,"2021-09-02 23:00:00.010124",0.5,577.9999999999978),

(17247,"2021-09-03 01:12:01.484162",10,"2021-09-03 00:00:00",null,null,null,"2021-09-02 23:00:00.010124",0.7,578.1999999999979);

I have created as well a simple JS script for converting exported JSON data from “SQLite Web” to SQL INSERT statement. Script available here, you just need to paster the JSON there.

and avoid messing data around, because you can fix this very easy.

and avoid messing data around, because you can fix this very easy.